NVIDIA GeForce GTX 480 – GF100 Has Landed

We’ve learned a lot about NVIDIA’s GF100 (Fermi) architecture over the past year, and after what seemed like an eternal wait, the company has officially announced the first two cards in the series: the GeForce GTX 470 and GTX 480. To start, we’re taking a look at the latter, so read on to see if GF100 was worth the wait.

Page 14 – Power & Temperatures

To test our graphics cards for both temperatures and power consumption, we use OCCT for stress-testing, GPU-Z for temperature monitoring, and a Kill-a-Watt for power monitoring. The Kill-a-Watt is plugged into its own socket, with only the PC connected to it.

As per our guidelines when benchmarking with Windows, once the room temperature is stable (and reasonable), the test machine is booted up and left to sit at the Windows desktop until it is completely idle. Once things are good to go, the idle wattage is noted, GPU-Z is started to begin monitoring card temperatures, and OCCT is set up to begin stress-testing.

To push the cards we test to their absolute limit, we run OCCT in full-screen 2560×1600 mode for 30 minutes, which includes a one-minute lull at the start and a three-minute lull at the end. After about 10 minutes, we begin watching the Kill-a-Watt to record the maximum wattage.
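The timing rules above can be written down as a small sketch. The Kill-a-Watt is read by eye, so this only formalizes the rule rather than describing any tool we actually use; the `(minute, watts)` sample format and the function names are hypothetical. It derives the window in which OCCT is actually loading the card and picks the highest reading taken inside the monitored part of that window.

```python
from typing import Sequence, Tuple

# Timeline of one OCCT run as described above, in minutes.
RUN_LENGTH_MIN = 30
START_LULL_MIN = 1
END_LULL_MIN = 3
MONITOR_FROM_MIN = 10  # "after about 10 minutes" we start watching the meter


def full_load_window() -> Tuple[int, int]:
    """Return (start, end) minutes of the period when OCCT is loading the GPU."""
    return START_LULL_MIN, RUN_LENGTH_MIN - END_LULL_MIN


def peak_load_watts(samples: Sequence[Tuple[float, float]]) -> float:
    """Highest wall reading between the monitoring start and the end lull.

    samples: hypothetical (minute, watts) readings taken from the power meter.
    """
    _, load_end = full_load_window()
    # Keep only readings taken once monitoring has begun and before the end lull.
    in_window = [watts for minute, watts in samples
                 if MONITOR_FROM_MIN <= minute < load_end]
    if not in_window:
        raise ValueError("no readings fall inside the monitoring window")
    return max(in_window)
```

With these rules, `full_load_window()` returns `(1, 27)`: 26 minutes of actual load, of which roughly the last 17 (minutes 10 through 27) are watched for the peak. Waiting until minute 10 gives the card time to heat up, which typically pushes power draw up along with it.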

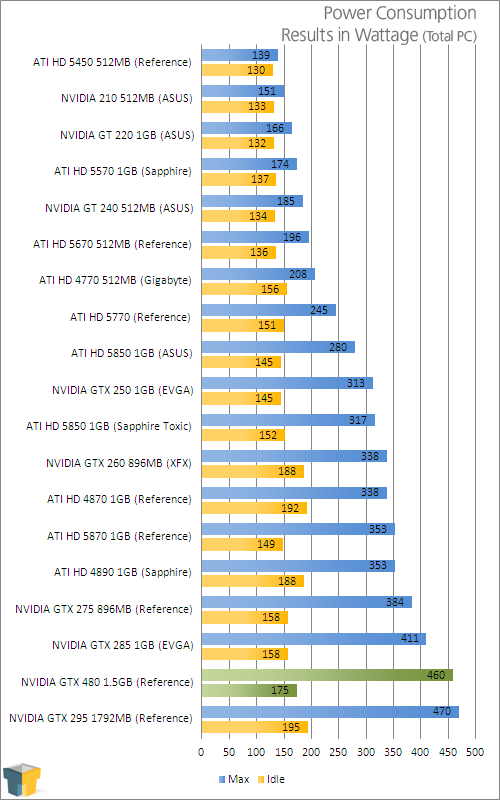

The entire fleet of NVIDIA’s last-generation cards fell behind ATI where temperatures and power were concerned, and GF100 doesn’t change that; if anything, the situation has gotten worse. The GTX 480 almost broke a TG lab record with its peak temperature of 96°C, falling just short of the GTX 295’s 98°C. With such unimpressive temperatures, can we at least bet on modest power consumption?

I think it’s safe to say, “No.”

While AMD (in both its CPU and GPU divisions) and Intel are striving to make their products as power-efficient as possible, NVIDIA seems to be heading in the opposite direction, prioritizing higher performance despite the increase in power draw.

We’re not talking about a small power increase here. In fact, the differences between NVIDIA’s current and previous generations, and especially between NVIDIA’s and ATI’s current cards, are staggering. Compared to the GTX 285, the GTX 480 draws an additional 49W at full load, which is somewhat reasonable given the performance gain. Compared to the HD 5870, however, the GTX 480 sucks down an additional 107W at full load.
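Because both of those deltas use the GTX 480 as the reference point, they also tell us how the two older and competing cards compare with each other. The quick calculation below assumes all three cards were measured on the same test rig under the same OCCT load, so the whole-system readings differ only by the card installed; the variable names are just for illustration.

```python
# Full-load power deltas quoted above, in watts (whole-system draw at the wall).
GTX480_OVER_GTX285_W = 49
GTX480_OVER_HD5870_W = 107

# Both deltas share the GTX 480 as a reference, so subtracting them gives
# how much more the GTX 285 system drew than the HD 5870 system.
gtx285_over_hd5870_w = GTX480_OVER_HD5870_W - GTX480_OVER_GTX285_W
print(f"GTX 285 vs HD 5870 at full load: +{gtx285_over_hd5870_w} W")
```

That works out to 58W, meaning ATI’s current flagship already undercut NVIDIA’s previous one, and the GTX 480 nearly doubles that gap.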

Ever since AMD’s HD 5000 launch, I’ve commended the company for developing graphics cards with such excellent power efficiency, but the GTX 480’s power draw highlights one of two possibilities: either AMD has done a tremendous job of designing its architecture for ultimate power efficiency and performance, or NVIDIA has done the exact opposite.

I’ll talk more about this on the following page, along with the rest of my final thoughts.
