NVIDIA GTC 2014 Recap: Pascal, TITAN Z, Deep-learning & More

Being a developer’s conference, NVIDIA’s GTC always boasts tremendous potential to show us some truly awesome technology – and 2014’s show delivered in spades. In this short article, we take a look at some of the biggest products and technologies announced at the show, and sprinkle in some opinion to make for a balanced breakfast.

In the summer of 2008, NVIDIA held an event in San Jose that centered around visual computing. Called NVISION, it featured seminars for developers to attend, a full-blown LAN party, and vendor exhibits. At around the same time, GPGPU technology had been gaining some serious traction, with NVIDIA leading the pack with its CUDA programming model. The growth and overall importance of GPGPU inevitably led NVIDIA to shelve NVISION and replace it with GTC, or GPU Technology Conference.

While I had attended that NVISION in 2008, last week’s GTC was the first I’ve gone to. In some ways, despite the altered focus of the event, it all felt familiar – no doubt helped by the fact that both NVISION and this latest GTC were held at the San Jose Convention Center. Prior to attending GTC last week, I wasn’t quite sure what to expect, though I guessed it’d be similar to Intel’s Developer Forum, only with an NVIDIA focus. After the fact, I can’t say that assumption was far off.

NVISION’s design enabled the general public to attend, but GTC isn’t quite as welcoming given its developer focus. Even as press, it was difficult to find something other than the keynotes to attend, and where I did check out smaller seminars, much of the information went well over my head. I truly feel my dumbest when I attend conferences like these, because there are so many brilliant people about.

I didn’t report on GTC too much during the event outside of social media, so I’d like to use this article as a platform to talk about some of the biggest announcements made at the event, along with other fun things.

More Bandwidth Leads to Pascal

The bandwidth of modern GPUs is mind-blowing in comparison to other components in a PC, but NVIDIA doesn’t think it’s good enough, and it sure doesn’t want it to become a major bottleneck. NVLink aims to negate that problem. It’s a chip-to-chip solution that’s effectively PCIe-like with enhanced DMA capabilities.

One of the most important aspects of NVLink is that it’d allow for unified memory between the GPU and CPU, and improve cache coherency. Ultimately, it could deliver 5-12x the bandwidth of PCIe. Best of all, it’d allow for a GPU-to-GPU interconnect, which could prove beneficial not only for GPGPU use, but gaming as well.

Improving bandwidth even further will be NVIDIA’s use of 3D memory, where chips are literally stacked atop one another in order to vastly improve performance, power efficiency, and of course, capacity.

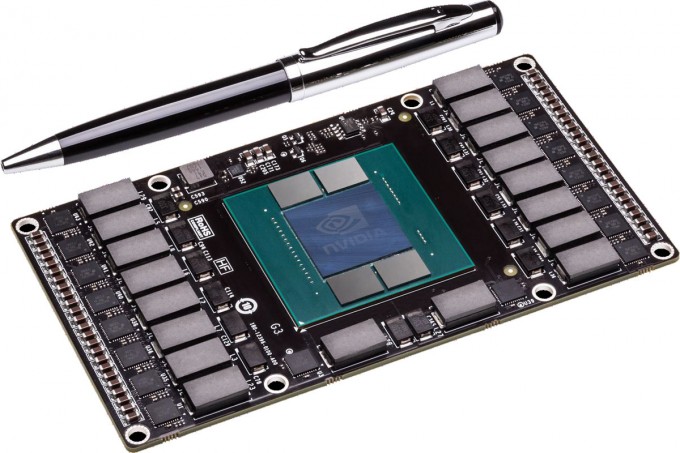

Both of these enhancements lead to a brand-new GPU architecture, and in celebration of Blaise Pascal’s achievements in the 1600s, Maxwell’s successor becomes “Pascal”. A demo board (seen above) was waved around by Jen-Hsun as he claimed this would be the board we’d see in notebooks, workstations, cloud computers, gaming rigs, and so forth. This board is much smaller than a standard graphics card, so it’ll be interesting to see if during the Pascal generation, the graphics cards are in fact shorter than they are today.

GeForce GTX TITAN Z

Before introducing TITAN Z, Jen-Hsun brought up successes of the original TITAN. Simply put, it sold “like hotcakes”. Gaming enthusiasts reading this might find that a little odd, given that TITAN doesn’t offer the best bang-for-the-buck, and even NVIDIA would readily admit that. But it’s not gamers who snatched TITAN up; instead, it was researchers who needed supercomputer-like power that they could buy at their local computer shop.

TITAN’s biggest differentiating feature is its incredible double-precision performance. To get the same level of performance with another solution, researchers would have to adopt solutions at least four times as expensive, such as NVIDIA’s own Quadro K6000. On the AMD side, even its highest-end workstation offering (FirePro W9000) falls short. $1,000 for a GPU like this might be expensive to a gamer, but for a researcher who needs either unparalleled DP or top-rate SP performance, it can actually prove to be a bargain.

That being the case, there’s some bad news for gamers where TITAN Z is concerned: It’s not for you. Well – to be fair, it could be, but the $3,000 price tag is going to be a major deterrent for most people. TITAN Z is for researchers, plain and simple.

An animation showed two TITAN Blacks coming together to become one three-slot TITAN Z, so I’d imagine that the specs would be TITAN Black x 2. Due to the increased heat that comes with dual-GPU cards, though, the GPU core clock is likely to be reduced a little bit. If that’s the case, the card could be a bit of a hard sell, given that dual TITAN Black is $2,000 and would be clocked the same as TITAN Z, or better. But, we’ll have to wait and see. For all we know, NVIDIA might have another trick up its sleeve.

During a press dinner, I asked NVIDIA for a TDP rating, but no surprise: I didn’t get one. One thing’s for sure, I wouldn’t complain about having one of these bad boys installed in my PC.

Linux Everywhere

This seems so minor, but given the fact that I am a fan of Linux, it stood out. During all of the presentations where development tools were shown, I don’t remember a single instance where something was shown on Windows or OS X. Instead, Linux enjoyed all of the limelight. NVIDIA’s own demos used Ubuntu as a base, while Pixar’s development software was shown running in Red Hat.

Walking through the hall with vendor exhibits, Linux continued to shine. Even Puget Systems was on-site to show off what it’s doing with workstation-class PCs, and once again, its demo was Linux-based (Ubuntu, like NVIDIA’s own demos).

One thing I didn’t notice is whether any of the game demos were shown on Linux, but given NVIDIA’s love for DirectX 12, I doubt it. Either way, it was good to see Linux so heavily relied upon for such intensive, important workloads.

Et cetera

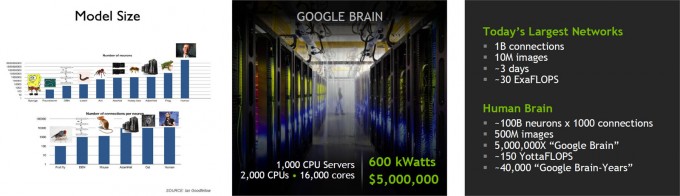

At an event like GTC, the amount of information shot your way is intense, so it’s easy to forget about some of the cooler things that were discussed. Like Google Brain, Google’s deep-learning project that was demoed a couple of years ago. This supercomputer featured 2,000 CPUs with a total of 16,000 cores, and operated at 600kW. Oh – and it cost $5,000,000. The project was used to see what it’d take for computers to learn things on their own, just as a normal brain would.

The problem with emulating the brain is that an enormous amount of computing power is required. So much so, that it’s hard to predict whether we’ll ever get there. It’s not so much about whether or not chips will be released down the road that are an order of magnitude faster than what we have today; we have to worry about power consumption as well. At 600,000 watts, Google Brain is one hell of a power-sucker. Now consider the fact that you’d need roughly 5,000,000 Google Brains to match a human brain’s potential.

That right there proves that while today’s supercomputers offer incredible performance, when compared to the human brain, they look like child’s play.

Of course, the mention of Google Brain had to have an NVIDIA twist: Stanford’s AI lab used one server equipped with three TITAN Z graphics cards, which matched the core count of Google Brain. And, it cost far less, at $12K (vs. $5M). The reduction of power from 600,000W to 2,000W sure doesn’t hurt either.
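To put those numbers side by side, here is a quick back-of-the-envelope sketch in Python. It only uses the figures quoted in this article (the $5M and 600kW Google Brain, the $12K and 2,000W TITAN Z server, and the rough "5,000,000 Google Brains" estimate); the variable names are my own, and the results are only as accurate as those rounded keynote figures.

```python
# Figures as quoted in this article; names are illustrative, not NVIDIA's.
GOOGLE_BRAIN_COST_USD = 5_000_000
GOOGLE_BRAIN_POWER_W = 600_000
TITAN_Z_SERVER_COST_USD = 12_000
TITAN_Z_SERVER_POWER_W = 2_000
BRAINS_PER_HUMAN_BRAIN = 5_000_000  # rough estimate mentioned earlier


def ratio(before, after):
    """Return how many times larger `before` is than `after`."""
    return before / after


cost_ratio = ratio(GOOGLE_BRAIN_COST_USD, TITAN_Z_SERVER_COST_USD)
power_ratio = ratio(GOOGLE_BRAIN_POWER_W, TITAN_Z_SERVER_POWER_W)

# Power needed if you really did line up 5,000,000 Google Brains.
human_scale_power_w = BRAINS_PER_HUMAN_BRAIN * GOOGLE_BRAIN_POWER_W

print(f"Cost reduction: about {cost_ratio:.0f}x")
print(f"Power reduction: {power_ratio:.0f}x")
print(f"5,000,000 Google Brains: {human_scale_power_w / 1e12:.0f} TW")
```

Taken at face value, that works out to roughly a 417x drop in cost and a 300x drop in power, while the hypothetical brain-scale setup would draw about 3 terawatts.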

Similarly, NVIDIA also announced IRAY VCA, a server that will retail for about $50,000 and come equipped with 8 Kepler-based GPUs – in all, it’ll completely dwarf the performance of a single workstation equipped with a Quadro K5000 card (to be expected, as those retail for under $2,000). It’ll further have 12GB per GPU, 1xGbps LAN, 2x 10Gbps LAN, and 1x InfiniBand connection.

The Google Brain example above was impressive, but it wasn’t the only mind-blowing thing at the show. During his keynote, Jen-Hsun showed off an Audi car that was being rendered in extremely high detail with the help of ray tracing. We’ve seen demos like this before, but the level of detail seen here was unparalleled.

For starters, billions of light rays had bounced around to produce the final image, and during the demo, it was revealed that every single component of the car had been rendered; at one point, the car was sliced up, revealing its internal components – components which also reflected light naturally.

What was most impressive, though, was learning that this demo was being powered by 19 IRAY VCAs. Yup – $950,000 worth of computing power. Truly unbelievable – especially since it still took about 10 seconds for each position-change of the camera to completely re-render.
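For a sense of scale, the totals behind that demo can be tallied up in a few lines of Python. This is just a sketch using the per-unit figures mentioned in this article (about $50,000, 8 GPUs, and 12GB per GPU for each VCA); the variable names are mine, and the price is an approximation, so treat the totals as ballpark numbers.

```python
# Per-unit IRAY VCA figures as quoted in this article (price is approximate).
VCA_PRICE_USD = 50_000
GPUS_PER_VCA = 8
MEMORY_PER_GPU_GB = 12
VCA_COUNT = 19  # number of VCAs said to be driving the Audi demo

total_cost_usd = VCA_COUNT * VCA_PRICE_USD
total_gpus = VCA_COUNT * GPUS_PER_VCA
total_memory_gb = total_gpus * MEMORY_PER_GPU_GB

print(f"Total cost: ${total_cost_usd:,}")
print(f"Total GPUs: {total_gpus}")
print(f"Total GPU memory: {total_memory_gb:,} GB")
```

That comes to 152 GPUs and 1,824GB of graphics memory working on a single car, which makes the 10-second re-render time all the more striking.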

Overall, this GTC offered an impressive look at NVIDIA’s current and future products and technologies, and drove home just how many amazing things can be accomplished with a graphics chip.

To learn more about what I’ve talked about here, along with other things I didn’t mention, I’d recommend watching Jen-Hsun’s keynote, shown above. You’ll see many cool demos, and glean some information I couldn’t cover in a short article. It’s worth noting that Pixar’s keynote was also excellent, and you can watch it and access other content here.
