A Look at the NVIDIA Tesla-infused Titan Supercomputer

This past June, IBM’s Sequoia supercomputer claimed the #1 spot as the world’s fastest, but the folks at the Oak Ridge National Laboratory hope to replace it soon. Their weapon? Titan, a supercomputer pairing 18,688 CPUs with 18,688 GPUs. We take a look at why ORNL went with this design, its benefits, and of course, its capabilities.


The folks at the Oak Ridge National Laboratory (ORNL) in Tennessee have this week unveiled their Titan supercomputer, one that just might become the world’s fastest. Titan isn’t an ordinary supercomputer, for a couple of reasons. For starters, it has a rich history: it evolved from the Jaguar supercomputer, first put into operation in 2005. Jaguar went through multiple upgrades over the years, but the Titan transformation takes the cake.

In 2005, Jaguar, based on Cray’s XT3 design, delivered a then-staggering peak performance of 25 teraflops, that is, 25 trillion floating-point operations per second. By comparison, many desktop graphics cards today offer 1+ teraflops of performance each, which shows just how far the technology has advanced since.

At its launch, Jaguar was a fast supercomputer, but it wasn’t the fastest. After a couple of upgrades, it earned that title in 2009 with a peak performance of 1.4 petaflops (1.4 quadrillion floating-point operations per second). In a mere four years, Jaguar’s performance had increased about 56-fold.
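The 56x figure is simply the ratio of the two peak ratings once both are expressed in the same unit. Here’s a minimal Python sketch of that arithmetic. The compound per-year multiplier it also prints is our own derived number, not one quoted by ORNL.

```python
TERA = 1e12
PETA = 1e15

def growth_factor(old_flops: float, new_flops: float) -> float:
    """Ratio of new to old peak performance."""
    return new_flops / old_flops

def annual_growth(factor: float, years: float) -> float:
    """Compound per-year multiplier that yields `factor` over `years`."""
    return factor ** (1.0 / years)

jaguar_2005 = 25 * TERA    # Cray XT3-based Jaguar, 2005
jaguar_2009 = 1.4 * PETA   # Jaguar after its upgrades, 2009

factor = growth_factor(jaguar_2005, jaguar_2009)
print(f"{factor:.0f}x over 4 years")                 # 56x over 4 years
print(f"~{annual_growth(factor, 4):.2f}x per year")  # ~2.74x per year
```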

Further improvements brought Jaguar’s benchmarked performance to 1.75 petaflops, good for third place on the TOP500 Supercomputer list as of last summer. It’s difficult to imagine such a powerful supercomputer being decommissioned, but over time, computer hardware becomes not only more powerful but also more power-efficient. Even for home users, performance-per-watt has become an important consideration when researching a new PC. Now, imagine its importance when dealing with thousands, if not tens of thousands, of processors.

It was inevitable that Jaguar’s performance-per-watt would soon look less than ideal, so ORNL began researching its next upgrade, and it would prove to be a major one. So major, in fact, that it wouldn’t have been appropriate to retain the Jaguar name. Thus, the Titan transformation began.

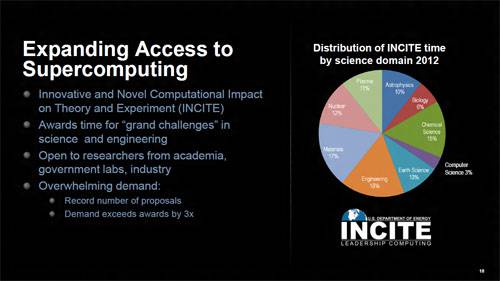

Whereas Jaguar consisted only of CPUs (AMD Opterons), ORNL had other ideas for Titan. By now, you’re likely well aware of the benefits GPGPU computing can offer, and as we’ve seen in the past, its uses go far beyond video encoding and image manipulation. It has proven to be a major boon to science, helping researchers in fields such as biology, astrophysics, nuclear physics, chemistry, and engineering. That’s because, compared to a traditional desktop CPU, a GPU is specialized. Rather than a few cores built to handle any task, it packs a large number of simpler cores that excel at performing the same calculation on many pieces of data at once. Fortunately, many of the calculations that researchers in the fields above need to run suit today’s highly parallel GPU architectures perfectly.
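The property that makes this work is data parallelism: the same arithmetic is applied independently to every element, so the work can be carved up across many cores with no coordination between them. The Python sketch below illustrates that pattern on a SAXPY-style update (y = a·x + y). It splits the array into chunks handled by a thread pool, which stands in for GPU threads. It’s a toy to show the structure, not how Titan’s codes are actually written, and Python threads won’t deliver GPU-like speedups.

```python
from concurrent.futures import ThreadPoolExecutor

def saxpy_chunk(a, x, y, start, stop):
    """Compute a*x[i] + y[i] for one slice; no element depends on any other."""
    return [a * x[i] + y[i] for i in range(start, stop)]

def parallel_saxpy(a, x, y, workers=4):
    """Split the index range into chunks and process them concurrently."""
    n = len(x)
    if n == 0:
        return []
    # Roughly equal, non-overlapping chunks, much as a GPU hands blocks of
    # elements to groups of threads.
    step = (n + workers - 1) // workers
    bounds = [(s, min(s + step, n)) for s in range(0, n, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(lambda b: saxpy_chunk(a, x, y, *b), bounds)
        # pool.map yields results in input order, so concatenating
        # the chunks reassembles the full output array.
        return [v for part in parts for v in part]

x = [float(i) for i in range(10)]
y = [1.0] * 10
print(parallel_saxpy(2.0, x, y))
# [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0, 17.0, 19.0]
```

Because no chunk reads another chunk’s results, the number of workers changes only how the work is divided, never the answer.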

While you and I sit comfortably at home using our GPUs to throw items around the room with a gravity gun or cause a meltdown in a nuclear reactor, a materials scientist is using a similar GPU to determine the magnetic properties of various materials, and nuclear researchers are using one to analyze how neutrons behave in a reactor.

Those scientific uses perfectly illustrate what Titan will be used for, and that brings us to another point that makes this supercomputer unique: it’s “open”. Through the INCITE program (Innovative and Novel Computational Impact on Theory and Experiment), researchers can apply for time on Titan to run their calculations. As seen in the slide below, there’s huge demand from many different fields. That also sets Titan apart, as it wasn’t built for a single purpose.

The researchers already awarded compute time on Titan, along with their goals, can be viewed here (PDF).

Let’s talk for a moment about the actual hardware, and then get into the reasons for it. Jaguar, in its final state, delivered about 2.3 petaflops of peak performance while drawing 7 megawatts of power, equivalent to the power requirements of 7,000 homes. For Jaguar to reach Titan’s 20 petaflop goal simply by adding more of its existing components, its power draw would climb to roughly 60 megawatts, enough to power 60,000 homes.
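The 60 MW estimate comes from linear scaling. If you get more performance only by adding more of the same hardware, power grows by the same ratio as performance. A quick Python sketch of that back-of-the-envelope math follows. The 1 kW-per-home figure is our assumption, inferred from the article’s own “7 MW = 7,000 homes” comparison.

```python
def scaled_power_mw(base_pflops, base_mw, target_pflops):
    """Power needed if power grows linearly with peak performance."""
    return base_mw * (target_pflops / base_pflops)

# Assumption: about 1 kW per home, implied by "7 MW = 7,000 homes".
KW_PER_HOME = 1.0

def homes_powered(megawatts):
    return megawatts * 1000 / KW_PER_HOME

needed = scaled_power_mw(base_pflops=2.3, base_mw=7.0, target_pflops=20.0)
print(f"{needed:.1f} MW, enough for ~{homes_powered(needed):,.0f} homes")
# 60.9 MW, enough for ~60,870 homes
```

That comes to just under 61 MW, which the article rounds to 60 MW. Linear scaling deliberately ignores efficiency gains, and closing that gap is exactly what Titan’s GPUs are meant to do.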

When a supercomputer draws as much power as a small city, other options deserve a look. The option ORNL chose was to pair 18,688 Kepler-based Tesla K20 GPUs from NVIDIA with an equal number of 16-core Opteron 6274 CPUs from AMD. Despite that plentiful supply of powerful CPUs, 90% of Titan’s floating-point performance reportedly comes from the GPUs.

Oh, and also: Titan comes equipped with 710 terabytes of RAM. Cue the “But can it run Crysis?” jokes.

|  | Jaguar (2011) | Titan (2012) | IBM Sequoia (2012) |
|---|---|---|---|
| Compute Nodes | 18,688 | 18,688 | 98,304 |
| Login & I/O Nodes | 256 | 512 | 768 |
| Memory Per Node | 16 GB | 32 GB (CPU) + 6 GB (GPU) | 16 GB |
| CPU Cores | 224,256 | 299,008 | 1,572,864 |
| NVIDIA K20 GPUs | N/A | 18,688 | N/A |
| Total Memory | 300 TB | 700 TB | 1,536 TB |
| Peak Performance | 2.3 petaflops | 20+ petaflops | 20 petaflops |
| Power Draw | 7.0 MW | 9.0 MW | 8.0 MW |
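The table’s totals can be cross-checked against its per-node rows. In the Python sketch below, Jaguar’s 12 cores per node is our own inference from dividing its core count by its node count, not a figure from the table. The results suggest the memory totals use mixed rounding. Titan works out to about 710 TB, matching the 710 terabytes quoted earlier, while the table rounds it to 700 TB. Sequoia’s 1,536 figure matches a binary (÷1,024) conversion.

```python
# Per-node figures from the table above:
# name: (compute nodes, CPU cores per node, GB of memory per node)
systems = {
    "Jaguar":  (18_688, 12, 16),      # 12 cores/node derived: 224,256 / 18,688
    "Titan":   (18_688, 16, 32 + 6),  # 32 GB CPU memory + 6 GB GPU memory
    "Sequoia": (98_304, 16, 16),
}

for name, (nodes, cores_per_node, gb_per_node) in systems.items():
    cores = nodes * cores_per_node
    total_gb = nodes * gb_per_node
    # Decimal (/1,000) and binary (/1,024) conversions of the same total.
    print(f"{name}: {cores:,} cores, {total_gb:,} GB "
          f"(~{total_gb / 1000:,.0f} TB or ~{total_gb / 1024:,.0f} TiB)")
```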

In the table above, you can see that Titan’s number of compute nodes matches its number of CPUs and its number of GPUs: each node pairs one of each. That doesn’t mean each physical unit installed in Titan is a single node. Judging from the images we were provided, each installed unit appears to contain four nodes laid out in a specific pattern. The CPUs and GPUs are of course cooled by large heatsinks, while the memory is left exposed, as it runs much cooler.

Also listed in the table above is IBM’s Sequoia, the supercomputer currently sitting in the #1 spot on the TOP500 list, and the one Titan hopes to dethrone. Launched in June, Sequoia offers a peak performance similar to Titan’s: 20 petaflops. Instead of using GPUs, IBM opted for its own 16-core CPUs, 98,304 of them, vs. 18,688 CPUs in Titan. Despite that dramatically higher CPU count, Sequoia draws 1 MW less power than Titan.

The fact that IBM’s supercomputer offers peak performance similar to Titan’s while drawing less power might seem a bit striking, but there are a couple of reasons for it. For starters, IBM builds its own CPUs, so it has unparalleled control over designing a chip best suited to this sort of computing. Another reason is that each NVIDIA Tesla GPU draws a lot more power than a typical CPU, though in exchange it offers far better performance-per-watt. And because each GPU delivers so much more performance than a CPU, Sequoia requires 98,304 individual processors while Titan delivers similar or better performance with only 37,376 (CPUs + GPUs).
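Performance-per-watt is simply peak performance divided by power draw. The Python sketch below computes it from the table’s figures only, using GFLOPS per watt as our chosen unit. On these whole-system numbers, Sequoia comes out slightly ahead of Titan, which fits with it drawing 1 MW less for the same 20 petaflops. Both are several times more efficient than Jaguar.

```python
# Figures from the comparison table: (peak PFLOPS, power in MW).
systems = {
    "Jaguar (2011)":  (2.3, 7.0),
    "Titan (2012)":   (20.0, 9.0),
    "Sequoia (2012)": (20.0, 8.0),
}

def gflops_per_watt(pflops: float, megawatts: float) -> float:
    # 1 PFLOPS = 1e6 GFLOPS and 1 MW = 1e6 W, so the ratio is already GFLOPS/W.
    return pflops / megawatts

for name, (pflops, mw) in systems.items():
    print(f"{name}: {gflops_per_watt(pflops, mw):.2f} GFLOPS/W")

# Physical processor counts: Titan pairs one CPU with one GPU per node.
titan_processors = 18_688 + 18_688
sequoia_processors = 98_304
print(f"Titan uses {titan_processors / sequoia_processors:.0%} as many processors")
```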

Other details we’d love to know, but couldn’t get, include the physical size of each supercomputer, their cooling requirements, and perhaps most importantly, their cooling costs.

One thing outsiders rarely get to see is the build process of a supercomputer, but the situation is a bit different here. In addition to numerous photographs, NVIDIA also provided a time-lapse video that showcases the build process from start to finish. It’s well worth a watch at only 2 minutes:

For those at work who may not be able to view the above video, here’s one of the better photos, showing a worker installing a CPU heatsink on a decked-out node:

Another 15 photos from the build process can be seen on the following page. Just be prepared for a geekgasm.

With Titan offering peak performance similar to Sequoia’s using less than half as many physical processors, supercomputers like Titan may soon become common. GPU vendors (AMD included) have worked hard over the last couple of years to optimize their products for supercomputing workloads, and that research and development isn’t likely to slow down anytime soon. And this is where things begin to get really exciting.

Titan, at 20 petaflops, is 800x faster than Jaguar was at its 2005 launch, and ~8.7x faster than Jaguar just before its Titan transformation. That’s an incredible gain in performance over the span of only a couple of years. If Titan does in fact take the #1 spot, it won’t hold it for long, as the TOP500 list has long shown. Technological advances are taking place all the time, making the quest for exascale computing (1 exaflops = 1,000 petaflops) all the more exciting. Given how things are going, that goal will be reached sooner rather than later.
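Both speedup figures are plain ratios of peak ratings, and the same arithmetic shows how far exascale sits beyond Titan. Here’s a short Python check using only the peak numbers quoted in this article.

```python
TERA, PETA, EXA = 1e12, 1e15, 1e18

titan = 20 * PETA
milestones = {
    "Jaguar (2005)": 25 * TERA,
    "Jaguar before Titan (2011)": 2.3 * PETA,
}

for name, flops in milestones.items():
    print(f"Titan vs {name}: {titan / flops:.1f}x")

# How much further an exascale machine would need to go beyond Titan.
print(f"Exascale vs Titan: {EXA / titan:.0f}x")
```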
