
Clearing Up Misconceptions about CUDA and Larrabee

Both Intel and NVIDIA have lots to say about their respective GPU architectures, as well as the competition’s. Partly because of this, there are numerous misconceptions floating around about both Larrabee and CUDA, so we decided to see if we could put to rest a few of the most common ones.

Where Larrabee and CUDA are concerned, the fun is just getting started, and I’m sure it won’t end until well after Larrabee’s launch. Intel and NVIDIA each have their own ideas of what it takes to build the ultimate graphics processor, and what makes this interesting is that they are taking completely different approaches.

Earlier this month, Intel opened up a bit about their Larrabee architecture, which I outlined in the appropriately titled “Intel Opens Up About Larrabee”. Shortly after that article was posted, NVIDIA cleared up some misconceptions about their own CUDA architecture.

Though the waters surrounding both offerings have been muddied enough as it is, NVIDIA sent an unusual paper to the media this week, which aimed not only to clear up misconceptions about CUDA, but also to raise questions about Intel’s implementation of Larrabee and its potential downsides.

Speaking with both Intel and NVIDIA by phone, I tried to piece together the full story, clear up misconceptions about both companies’ technologies rather than just NVIDIA’s, and give each of them a chance to add their own two cents. Thanks to Tom Forsyth, Larrabee developer at Intel, and Andy Keane, GM of the GPU Computing Group at NVIDIA, for taking the time to discuss things.

Larrabee Questions That Need Answering

In the previously mentioned paper, NVIDIA raised three main questions around Larrabee:

- Will apps written for today’s Intel CPUs run unmodified on Larrabee?

- Will apps written for Larrabee run unmodified on today’s Intel multi-core CPUs?

- The SIMD part of Larrabee is different from Intel’s CPUs – so won’t that create compatibility problems?

The first two questions are quite similar, and the straight answer to both is “No.” There is a caveat, though. If we change ‘apps’ to ‘code’, the story changes, because Intel claims that the same Larrabee code can be compiled to run on either the CPU or the GPU (Larrabee). It all comes down to which target you choose in the compiler.

Intel noted that the code wouldn’t be compiled for both the CPU and the GPU at the same time, since the compiler optimizes each binary for its target architecture. They added that it could technically be compiled for both at once, but that this would not be ideal, because each architecture benefits from its own optimizations.

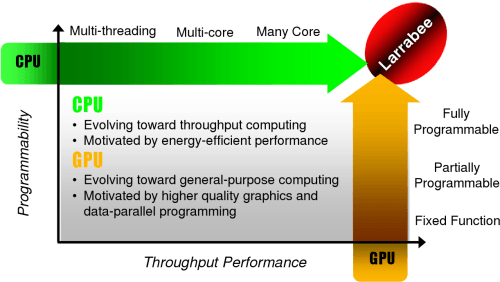

In the Larrabee article last week, Intel claimed that GPUs up to this point haven’t been fully programmable, but NVIDIA disagrees. Thanks to CUDA, they say, the GPU is indeed fully programmable; it’s up to the developer to write code that makes the GPU behave as they’d like. This extends beyond gaming, of course, into general-purpose computing.

The third question can’t be answered yet, for obvious reasons: unlike CUDA, Larrabee hasn’t produced any real-world results, and until it does, we can’t draw any conclusions. Larrabee could very well fail in some regards, but it could also excel in most; it’s hard to say.

Can Larrabee or CUDA Scale?

Another point NVIDIA raised concerns scaling across all of the available cores:

NVIDIA’s approach to parallel computing has already proven to scale from 8 to 240 GPU cores. This allows the developer to write an application once and run across multiple platforms. Developers now have the choice to write only for the GPU or write and compile for the multi-CPU as well. In fact, NVIDIA demonstrated CUDA for both GPU and CPU at our annual financial analyst day and ran an astrophysics simulation on an 8-core GPU inside a chipset, a G80-class GPU and a quad core CPU.

The argument is simple: current PC applications don’t scale well across many cores, while NVIDIA’s stance is that their GPUs and CUDA architecture do allow reliable scaling, as the example above shows. The demo included an IGP with 8 cores (the GTX 280 has 240), which likely didn’t fare well in terms of overall performance, but the goal was simply to demonstrate the scaling ability.
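To make the “write once, run on any number of cores” idea concrete, here is a minimal sketch in Python. It is a CPU-thread analogy, not CUDA code and not NVIDIA’s demo; the names `worker` and `sum_of_squares` are hypothetical, and Python threads won’t actually run faster here because of the interpreter lock. What it shows is the partitioning pattern, similar to the grid-stride loops commonly used in CUDA kernels: each worker strides through the data by the total worker count, so the same code produces the same result whether 1, 8 or 240 workers are launched.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import repeat


def worker(worker_id, num_workers, data):
    """Hypothetical per-core routine: handle every num_workers-th element,
    starting at worker_id (a grid-stride-style partition)."""
    partial = 0
    for i in range(worker_id, len(data), num_workers):
        partial += data[i] * data[i]
    return partial


def sum_of_squares(data, num_workers):
    """Launch the same worker code on any number of workers and combine
    their partial results. The code itself never changes with core count."""
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = pool.map(worker, range(num_workers), repeat(num_workers), repeat(data))
        return sum(partials)


if __name__ == "__main__":
    data = list(range(1000))
    # Same answer at 1, 8 and 240 workers; only the split of work differs.
    for n in (1, 8, 240):
        print(n, sum_of_squares(data, n))  # each line ends in 332833500
```

If there are more workers than elements, the extra workers simply find nothing to do and return 0, which is part of what lets the same code run unchanged on small and large core counts.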

I’m unsure why a beefier GPU wasn’t used, however, given NVIDIA’s claim that the application should take advantage of all the cores available on whatever the highest-end card at the time was.

Regardless, it’s a good point. As things stand, it’s rare to find a multi-threaded application that exploits all of the cores of a Quad-Core. I found this out first-hand while working on our Intel Skulltrail article; Skulltrail is Intel’s enthusiast platform that lets two Quad-Cores work together, effectively giving you eight cores to use as you’d like.

The results in that article show that Skulltrail is designed either for specific workstation applications or for intense multi-tasking. None of our real-world benchmarks ran 100% faster with two identical Quad-Cores than with one, and this is exactly the kind of scaling concern NVIDIA raises about Larrabee, which will feature far more cores than our current desktop processors.
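One standard way to reason about why doubling the core count rarely doubles performance is Amdahl’s law (neither the article’s benchmarks nor either vendor invoked it). If a fraction p of a program’s work can run in parallel, the ideal speedup on N cores is S(N) below. The value p = 0.9 is a hypothetical chosen purely for illustration, not a measurement from our Skulltrail testing.

```latex
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}}

\text{With } p = 0.9:\quad
S(4) = \frac{1}{0.1 + 0.225} \approx 3.08,\qquad
S(8) = \frac{1}{0.1 + 0.1125} \approx 4.71,\qquad
\frac{S(8)}{S(4)} \approx 1.53
```

Under that assumption, going from one Quad-Core to two yields roughly 53% more performance rather than 100%, because the serial 10% of the work doesn’t shrink, and its share of the runtime grows as cores are added.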

I admit that I haven’t used CUDA much in the past, so NVIDIA’s situation might very well be the same as Intel’s. That said, NVIDIA boasts that their GPUs and CUDA architecture were designed with specific computational workloads in mind, which is why they believe their scaling ability far exceeds whatever Larrabee will be capable of.

But all of that aside, Intel does state that Larrabee will scale well, so it’s only a matter of time before we can see the results for ourselves.

During our phone conversation, NVIDIA also argued that their approach to thread management could prove far more reliable than Intel’s, since their GPUs handle it in hardware while Larrabee handles it in software, which NVIDIA claims could add unnecessary complexity.

There Seems to be No Current “Winner”

So whose solution is better? Until Larrabee samples show up, that question is impossible to answer. Both solutions look good, and both make sense for the most part. Once samples are available, we can compare the two directly and expose the weaknesses of each.

Coming back to the code itself, Intel stressed that Larrabee code will compile without issue on an industry-standard compiler such as gcc. This is a bold statement: if true, being able to use a no-nonsense compiler without hassle would be a dream come true for developers. NVIDIA, though, says CUDA offers the same ability. Their C compiler might be based on the PathScale compiler, but CUDA code should likewise compile on a regular compiler without issue, in addition to producing a binary for execution on either the GPU or the CPU.

Whew. After looking at each side and the facts at hand, I can’t help but still feel lost in all of it. NVIDIA’s paper to the media outlines some great questions and reassurances about the CUDA architecture, but both companies continue to contradict each other about what the rival technology is capable of. Then there are other claims that seem iffy, such as being able to compile native CUDA or Larrabee code with a standard compiler, all without issue.

All we can do right now is continue to wait and see what other facts are brought to light. Although Intel’s Developer Forum takes place next week, it’s unlikely that they’ll be talking much about Larrabee, since their briefings last week and their SIGGRAPH presentation this week covered pretty much all they wanted to say.

I do plan to talk more with each company, though, to see what else we can learn from all this. IDF is next week, with NVISION directly after, and we’ll be covering both in some detail, so stay tuned.
