Mastering rsync and Bash to Backup Your Linux Desktop or Server

Keeping good backups of your data is important; don’t be the sucker who loses important files and has to deal with it afterwards! In this in-depth guide, you’ll learn about using rsync and lftp to transfer files, writing your own scripts and automating them, and backing up to external storage, a NAS, and a remote server without passwords.

Page 1 – Introduction, rsync Basics

I hate to admit it, but I’ve screwed up many times throughout the years when it’s come to using computers. My first big “Oh shi!” moment came when I was about 8 years old, goofing around on my family’s trusty 286 with monochrome monitor. DOS was a new world to me, and I quickly found out that format c: wasn’t the proper command for deleting the contents of a floppy disk. What a depressing day that was.

I’ve learned much since that experience, but even in recent memory I can recall careless mistakes which have led to lost files. But, no more. I’ve made it a goal to keep perfect backups of my data so that I don’t suffer such a fate again, and I highly recommend everyone reading this article do the same. It’s one of the worst feelings in the world when you realize you’ve lost an important file that can’t be brought back, so doing your best to prevent that seems smart.

This article aims to help you out by making use of a glorious piece of Unix software called ‘rsync’. You have to be willing to get your hands a little dirty, however, as this involves command-line usage. That’s part of the beauty here though – with simple commands and scripts, you’ll have complete control over your backup scheme. Best of all, your scripts can run in the background at regular intervals, and are hidden from view.

Seeing double is great when it comes to your data

It’s important to note that this article will not be focusing on backing up an entire system (as in, OS included), but rather straightforward data. Also, because a command-line interface is not for everyone, we’re considering dedicating a future article to taking a look at GUI options (please let us know if that’d be of interest!)

There are many different types of media that you can back up to, but we’re going to take a look at the three most popular and go through the entire setup process for each: removable storage, network-attached storage (NAS), and a remote server also running Linux.

On this first page, we’re going to delve into the basics of rsync, giving examples for you to edit and test out.

Why rsync?

“Why not rsync?” might be the better question. rsync is a file synchronization application that will match the target with a source, with proper permissions retained. The beauty is that its usage is not simply limited to the PC you are on, but it can also access remote servers via RSH or SSH.

As an example, let’s use the scenario where you want to back up your top-secret documents folder to a flash drive. When given the “OK”, rsync will copy all of the files to your flash drive, making sure to retain the correct permissions while verifying that all of the files match. If you update a file and run rsync again, it will update that file on the storage you specify – simple as that. Though it’s not entirely user-friendly given its command-line nature, once you gain the basic knowledge of how it works, you will truly wonder how you went so long without using it.

Am I missing something that’s worth mentioning in this tutorial? By all means let me know and I can consider an inclusion. Most of what’s being tackled here is derived from a personal need, so I might not be including all worthy scenarios.

How To Use rsync

As mentioned above, rsync is a very powerful application and we can’t exhaust all of the possibilities with this article. We will touch on the basics however, with the hope that you will finish reading and find yourself more knowledgeable overall, and raring to go. Once you set up your cool backup scheme, it will effectively mimic commercial GUI’d software; while rsync isn’t pretty to look at, it’s seriously effective, and it’s free.

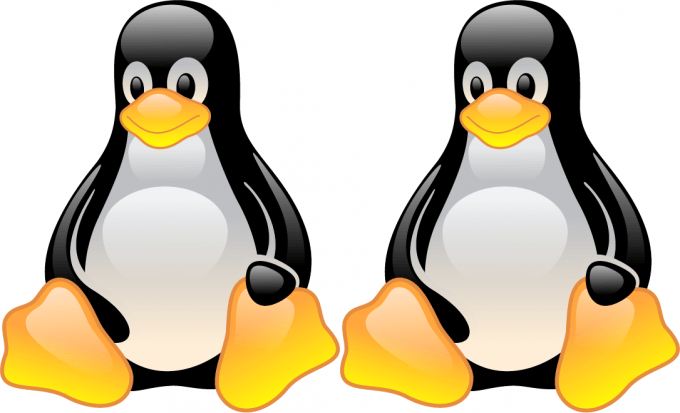

rsync can even be used on Windows through Cygwin

It’s worth noting that rsync can also be used in Windows, via the free terminal application Cygwin, which brings a Unix-like CLI environment to Microsoft’s OS. The rsync package could be installed during the initial setup of Cygwin, or after-the-fact via its package manager. Once the Windows folders are mounted inside of Cygwin, rsync can be used just as it can be under a real Unix/Linux OS.

Run-down of Common rsync Switches & Processes

When running an rsync command, the first thing that should come after rsync is the desired switch or switches. Common switches include:

- -r (Recursive; includes sub-folders)

- -a (Archive mode; includes sub-folders, while preserving permissions, groups, users, and times)

- -v (Verbose; the entire process is printed to the terminal rather than remaining hidden)

- -e (Remote shell; specifies the program used to make a remote connection, such as SSH)

- -c (Sync based on checksum – takes a while for a lot of files, or large files)

You can combine multiple switches in the same rsync command; e.g., rsync -avc.

Following the switches are the source and target folders; “source” is where the original files are located, and “target” is the folder where the files are going to be copied to:

rsync -av /source/ /target/

e.g., rsync -av /home/username/ /mnt/Backup/

Copy Data from One Folder to Another

rsync -r /home/techgage/folder1/ /home/techgage/folder2/

This copies the entire contents of one folder (including other folders) to another, without much care to preserving permissions. To make an exact duplicate, the -a switch can be used instead (it encompasses -r and other important switches):

rsync -a /home/techgage/folder1/ /home/techgage/folder2/

To retain all owners and groups correctly, it’s best to run the command as root (or with sudo), since a regular user can’t assign files to other owners.

To increase the verbosity of the output, add a -v switch; this will show you each file as it’s being transferred and give interesting bits of info such as overall speed and total size at the end.

rsync -av /home/techgage/folder1/ /home/techgage/folder2/

Removing Old Files from the Target to Keep a Perfect Sync

Many who back up a folder or sets of folders want both the target and source to be identical. However, rsync’s default method is simply to overwrite what’s there with newer data, ignoring the fact that there might be files on the target that no longer exist on the source. An example: You keep a regular backup of your home folder, but regularly have scrap files on the desktop. If the sync occurs while these scrap files are on the desktop, then they get copied to the target and are left there, even after you’ve deleted them from the source, unless you specifically tell rsync to delete files on the target that no longer exist on the source.

rsync -av --delete /home/techgage/folder1/ /home/techgage/folder2/

If you’re new to rsync, you might be thinking, “Is that all there is to it?” The answer is yes. Though all of these examples look (and are) simple, there are many things you could pull off without even leaving your desk. Imagine backing up a Windows location mounted on your PC to a local or server location:

rsync -av --delete /mnt/momPC/Users/ /mnt/NAS/Backups/momPC/Users/

The above example would assume that each location is properly mounted via fstab, a technique we tackle on page three of this article.

What about backing up your server or another Linux machine in your house? Time to bring SSH into the picture. This assumes that each computer has SSH installed and that the source machine has an SSH server running. If SSH is not running on one machine, it should be as easy as running /etc/init.d/sshd start as root (or sudo). On systemd-based distributions, the equivalent is systemctl start sshd (the service is named ssh on Debian and Ubuntu).

rsync -av --delete -e ssh [email protected]:/home/techgage/ /mnt/NAS/Backups/MainPC/techgage/

rsync -av --delete -e ssh root@targetipaddress:/remotefolder/ /localfolder/

SSH in itself offers a lot of potential. If you have multiple servers and need to duplicate a folder from one to the other… just SSH in and run your rsync script. The possibilities are not only endless, they’re exciting.

This is about as much of rsync as we will tackle, but don’t hesitate to check out man rsync for a full options list and other examples. That’s not as far as our examples go though, as we have many scripts on the last page of this article. For now, let’s move into making use of external storage for backup purposes.
