NVIDIA Packs 16 Tesla V100s Into New DGX-2 To Deliver 2 PFLOPS Of Deep-learning Performance

For the past year, those looking for explosive deep-learning and general GPU compute performance at their desk have seen NVIDIA’s DGX Station as an attractive choice, with 4 powerful Tesla V100s under the hood delivering half a petaflop of deep-learning and artificial intelligence performance (and over 60 TFLOPS of single-precision). Enterprise customers would be quicker to jump on the rackmount DGX-1, which sports 8 GPUs.

At 2018’s GTC in San Jose, California, NVIDIA unveiled the follow-up to its DGX-1, aptly called the DGX-2. It’s important to note that the original is not being replaced, which makes sense as all three DGX systems use the same V100 GPU. The DGX-2 is for those who want even more performance from a single unit. We’re talking 2 PFLOPS of deep-learning performance, and over 240 TFLOPS of single-precision.
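The headline numbers for all three DGX systems fall out of simple multiplication: GPU count times per-GPU peak. The sketch below assumes NVIDIA’s published peak figures for the SXM2 Tesla V100 (125 TFLOPS of mixed-precision “deep learning” throughput and 15.7 TFLOPS of FP32); these are theoretical peaks, not measured results, and the function name is purely illustrative.

```python
# Assumed per-GPU peaks for a Tesla V100 (SXM2), per NVIDIA's published specs.
# These are theoretical maximums; real workloads will land below them.
V100_TENSOR_TFLOPS = 125.0  # mixed-precision "deep learning" throughput
V100_FP32_TFLOPS = 15.7     # single-precision throughput

# GPU counts for each system discussed in the article.
SYSTEMS = {"DGX Station": 4, "DGX-1": 8, "DGX-2": 16}


def aggregate_tflops(gpu_count: int) -> tuple[float, float]:
    """Hypothetical helper: return (deep-learning TFLOPS, FP32 TFLOPS) for gpu_count V100s."""
    return gpu_count * V100_TENSOR_TFLOPS, gpu_count * V100_FP32_TFLOPS


for name, gpus in SYSTEMS.items():
    dl, fp32 = aggregate_tflops(gpus)
    # Convert the deep-learning figure to PFLOPS for readability.
    print(f"{name}: {gpus} GPUs, {dl / 1000:.1f} PFLOPS DL, {fp32:.1f} TFLOPS FP32")
```

Run as-is, this reports 0.5 PFLOPS and 62.8 TFLOPS for the DGX Station and 2.0 PFLOPS and 251.2 TFLOPS for the DGX-2, matching the “half a petaflop”, “over 60”, “2 PFLOPS” and “over 240” figures quoted above.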

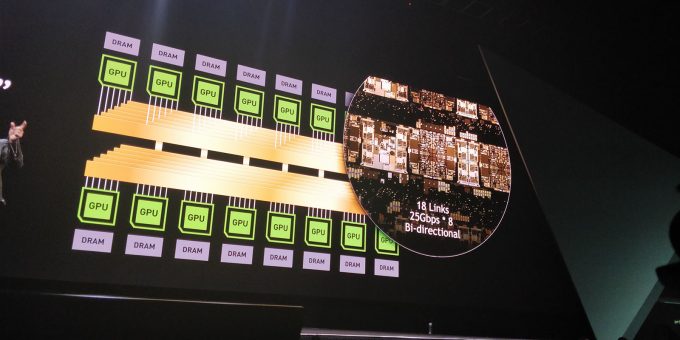

In our quick look at NVIDIA’s new Quadro GV100, we mentioned that two of the cards can be paired up using a newly introduced NVLink bridge. The DGX-2 builds on the same core technology, but expands it with NVSwitch, which lets up to 16 GPUs communicate with one another at full NVLink bandwidth.

To be clear, NVSwitch doesn’t just let all of these GPUs work together in the same system without stepping on each other; it also allows their aggregate memory to be treated as one pool. With 32GB Tesla V100s, that works out to a total of 512GB of addressable VRAM, with 14.4TB/s of aggregate bandwidth! This change alone will open DGX up to audiences that need such (almost mind-boggling) resources. Yes, it can run Crysis. Like a thousand times over.
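The 512GB and 14.4TB/s figures are likewise straight sums over 16 GPUs. The sketch below assumes NVIDIA’s published numbers for the 32GB SXM2 Tesla V100 (32GB of HBM2 at 900GB/s per GPU); the helper name is hypothetical. One caveat worth keeping in mind: the summed bandwidth is each GPU’s local memory bandwidth added together, not the rate at which one GPU can read another GPU’s memory across NVSwitch.

```python
# Assumed per-GPU memory specs for a 32GB Tesla V100 (SXM2), per NVIDIA's published figures.
V100_MEMORY_GB = 32
V100_BANDWIDTH_GBPS = 900  # local HBM2 bandwidth per GPU, in GB/s


def pooled_memory(gpu_count: int) -> tuple[int, float]:
    """Hypothetical helper: naive aggregate capacity (GB) and summed bandwidth (TB/s).

    The bandwidth is the sum of every GPU's *local* HBM2 bandwidth; it is not
    the speed of a single GPU accessing a peer's memory over NVSwitch.
    """
    total_gb = gpu_count * V100_MEMORY_GB
    total_tbps = gpu_count * V100_BANDWIDTH_GBPS / 1000  # GB/s -> TB/s
    return total_gb, total_tbps


capacity, bandwidth = pooled_memory(16)
print(f"{capacity} GB addressable, {bandwidth:.1f} TB/s aggregate")
```

For 16 GPUs this prints `512 GB addressable, 14.4 TB/s aggregate`, the same totals quoted for the DGX-2.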

That’s enough power to train the FAIRSeq neural machine translation model in under two days, a 10x improvement over the original DGX-1. It’s not clear how much of this gain is directly attributable to the increased memory (and bandwidth), but even the original DGX-1 will see big improvements when updated to NVIDIA’s latest TensorRT 4, which in turn provides an even better ROI to those running it.

TensorRT has also just been integrated into Google’s TensorFlow, which is big news considering just how dominant that framework is in the machine-learning world. Further, Kaldi, the most popular framework for speech recognition, now supports GPU acceleration. It doesn’t take much imagination to see how major that could be, given the sheer number of voice-recognition solutions out there. All in all, these updates are big (and admittedly just scratch the surface, so I’d recommend looking here for even more information).

The DGX-2 is priced at a rather ‘fair’ $399,000 and draws 10kW of power. We expect availability to be ‘sometime soon’.