AMD Details Vega Architecture – HBM2, NCU & Primitive Shaders

AMD will be launching its Vega-based GPUs in the coming months, but to get the ball rolling, a high-level overview of the architecture has been detailed. With HBM2 already confirmed, we take a look at some of the other features that make up Vega, including new cache mechanics, primitive shaders and a move into the Compute market.

It’s been a long time coming, but finally some details about AMD’s latest GPU architecture are beginning to come through.

While the cards themselves have not yet been released, and probably won’t be for a few more months, we can look at what direction AMD is moving in.

AMD’s Vega is a follow-up to the Fury and Nano cards released nearly 18 months ago, which were the first GPUs in a long time to take a radically different design approach: replacing the GDDR memory chips with on-package High Bandwidth Memory (HBM).

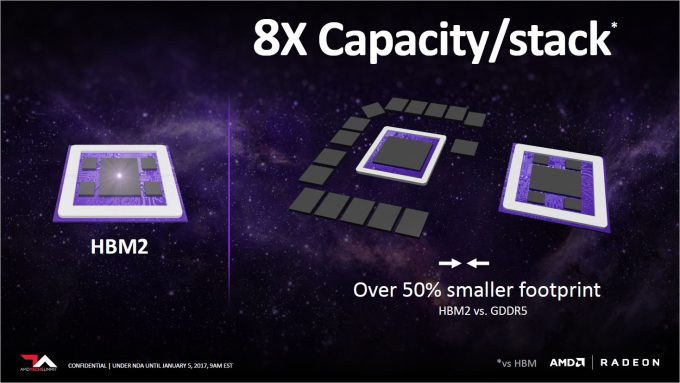

Vega uses an updated memory system called HBM2 which, unsurprisingly, offers double the bandwidth of its predecessor. But it goes well beyond that, as HBM2 is just another cog in the entire graphics pipeline that AMD is trying to build: layer upon layer of cache.

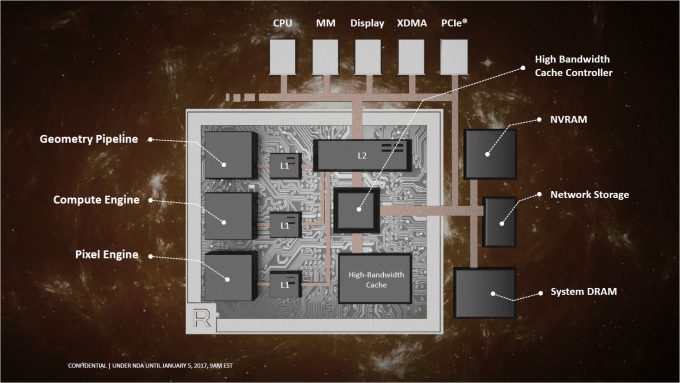

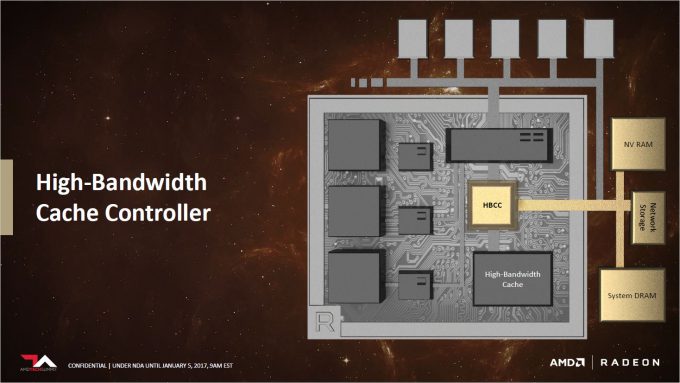

Each sub-system has its own L1 cache, and these all share an L2 cache. The L2 cache connects to a High Bandwidth Cache Controller (HBCC), which in turn connects to all available sources of cached data, including the HBM2 memory.

The HBCC treats everything as cache, some of it slower than the rest, and unifies all the data into a single entity that can be directly accessed by the processor, rather than leaving it split across discrete sub-systems in the storage and memory architectures. This gives Vega a total addressable storage space of up to 512TB, with data rates in excess of 1TB/s, mixing and matching its own HBM2 memory, system memory, and system storage, all in one virtual space.
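
As a quick sanity check on that 512TB figure, the sketch below works out how many address bits are needed to index every byte of such a space. It assumes "TB" here means binary terabytes (2^40 bytes). The resulting bit width is our inference from the number, not something taken from AMD's overview, and the helper name is hypothetical.

```python
TIB = 2 ** 40  # bytes per binary terabyte (assumption: "TB" in the text means TiB)
GIB = 2 ** 30  # bytes per binary gigabyte


def address_bits(capacity_bytes: int) -> int:
    """Smallest number of address bits that can index every byte."""
    # The highest byte address is capacity - 1; its bit length is the width needed.
    return (capacity_bytes - 1).bit_length()


vega_bits = address_bits(512 * TIB)   # the unified virtual space described above
fury_bits = address_bits(4 * GIB)     # for comparison: Fury's 4GB of HBM

print(f"512TB virtual space needs {vega_bits} address bits")
print(f"4GB of local memory needs {fury_bits} address bits")
```

The gap between the two widths is the point: the virtual space is far larger than any memory physically on the card, which is why the HBCC has to treat local HBM2 as a cache in front of slower tiers.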

To see this in action, look no further than the experimental Radeon SSG announced a few months back at SIGGRAPH 2016 with 1TB of on-board SSD storage, completely dedicated to the GPU, and separate from the rest of the system. Experimentally, it was capable of debayering an uncompressed 8K video in real-time, which is a monstrous amount of data to handle.

We don’t know at this time how much HBM2 memory will be available on the cards, although 8GB would be a safe, conservative estimate. HBM2 can scale to 8x the capacity of HBM, which was limited to that rather painful 4GB with the Fury. This means that a high-end Vega card could have 16GB of VRAM, while an HPC or workstation card could have 32GB. This is just speculation though; we’ll find out the details in a couple of months.
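
The capacity arithmetic behind those guesses can be sketched as follows. Fury's 4GB came from four 1GB HBM stacks, and the 8x scaling is the figure quoted above. The Vega stack counts and per-stack sizes in the table are purely hypothetical configurations chosen to reproduce the 8GB, 16GB and 32GB figures, not anything AMD has confirmed.

```python
HBM1_GB_PER_STACK = 1   # first-generation HBM, as used on Fury
FURY_STACKS = 4         # four stacks gave Fury its 4GB
HBM2_SCALE = 8          # "8x the capacity" quoted in the text


def card_capacity_gb(stacks: int, gb_per_stack: int) -> int:
    """Total on-package memory for a given stack layout."""
    return stacks * gb_per_stack


hbm2_max_gb_per_stack = HBM1_GB_PER_STACK * HBM2_SCALE

# Hypothetical Vega layouts (stack count, GB per stack); none are confirmed.
hypothetical_configs = {
    "conservative": (2, 4),
    "high-end": (2, hbm2_max_gb_per_stack),
    "HPC/workstation": (4, hbm2_max_gb_per_stack),
}

print("Fury:", card_capacity_gb(FURY_STACKS, HBM1_GB_PER_STACK), "GB")
for name, (stacks, per_stack) in hypothetical_configs.items():
    print(f"{name}: {card_capacity_gb(stacks, per_stack)} GB")
```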

However, bandwidth isn’t everything. While games are one of the most data-driven and bandwidth-intensive tasks out there, all that data still needs to be crunched and turned into pixels. Fury showed us that a focus purely on memory bandwidth can sometimes lead to disappointing results. Don’t get us wrong though, the Fury cards were cool, especially the Nano, but rendering pipelines need to be updated to take advantage of all that free-flowing information.

The unification of the data pool under a single virtual address space does have its advantages, as it allows for better prefetching: grabbing data before it’s needed. Games are notorious for overloading VRAM with assets, so any way to reduce latency in this area can have some big performance advantages.

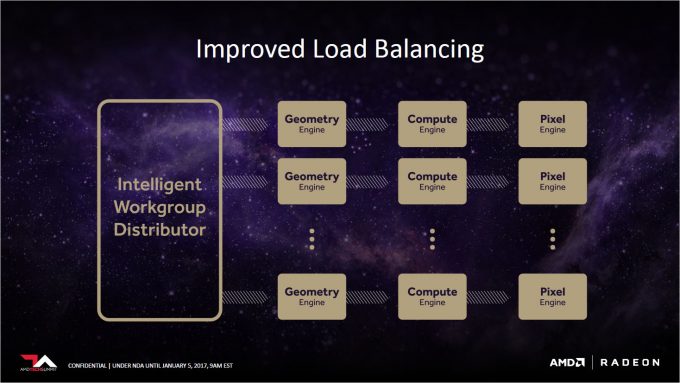

What does become clear through the presentation and overview of the architecture is that AMD is targeting not just games, but High Performance Computing (HPC). While HPC tends to be more latency-driven than bandwidth-driven, the highly scalable and parallel nature of GPUs lends itself well to certain types of data analysis, and that’s one of the key focuses of the next item: precision switching.

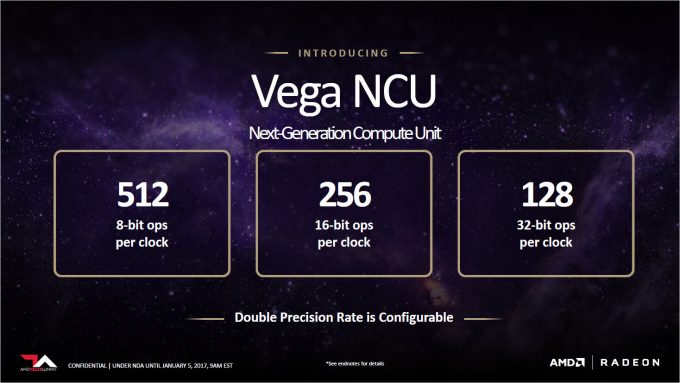

You may be familiar with the term TFLOPS when we talk about GPUs, but that is a bit of a fast and loose term that’s easy to take out of context. Floating-point operations per second are what the name suggests, but there are different precision levels of floating point: half, single, double and quad, denoting 16, 32, 64 and 128 bits respectively.
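
To get a feel for what those precision levels mean in practice, here is a small sketch that stores the same value at half, single and double precision and reads it back. It uses Python's standard `struct` module, which has format codes for the 16, 32 and 64-bit IEEE 754 types but not for quad, so quad is left out. The helper name is ours.

```python
import struct

# struct format codes for IEEE 754 half, single and double precision.
FORMATS = {"half": "e", "single": "f", "double": "d"}


def round_trip(value: float, fmt: str) -> float:
    """Store value at the given precision, then read it back."""
    return struct.unpack("<" + fmt, struct.pack("<" + fmt, value))[0]


for name, fmt in FORMATS.items():
    bits = struct.calcsize("<" + fmt) * 8
    stored = round_trip(0.1, fmt)
    error = abs(stored - 0.1)
    print(f"{name:6s} {bits:2d}-bit  0.1 is stored as {stored!r}  (error {error:.3e})")
```

The error grows as the bit count shrinks, which is the trade-off behind any decision to drop precision in exchange for throughput.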

Vega is introducing something AMD calls ‘Rapid Packed Math’, which allows the FP module to switch the precision level of a particular operation. The most likely case for this is dropping from single to half precision, as this allows twice the number of values to fit inside a register, and consequently doubles the FLOPS. The thing is, half precision isn’t used much inside games, as it’s not accurate enough for various lighting effects (resulting in artifacts). Where it does make sense is Deep Learning and data analysis, where scale trumps precision (since scale can provide precision, depending on the workload).
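
The packing idea can be illustrated with a minimal sketch: two 16-bit floats occupy the same 32 bits as one single-precision value, so one "register" can carry two operands per operation. This is a software emulation for illustration only. The function names and lane ordering are our assumptions, and it says nothing about how Vega's hardware actually implements the feature.

```python
import struct


def pack_half2(a: float, b: float) -> int:
    """Pack two FP16 values into one 32-bit word (a in the low 16 bits)."""
    return struct.unpack("<I", struct.pack("<ee", a, b))[0]


def unpack_half2(word: int) -> tuple:
    """Split a 32-bit word back into its two FP16 lanes."""
    return struct.unpack("<ee", struct.pack("<I", word))


def packed_add(x: int, y: int) -> int:
    """Add two packed words lane by lane, emulating one packed operation."""
    a0, a1 = unpack_half2(x)
    b0, b1 = unpack_half2(y)
    return pack_half2(a0 + b0, a1 + b1)


x = pack_half2(1.5, 2.0)
y = pack_half2(0.25, 3.0)
result = unpack_half2(packed_add(x, y))
print(f"one 32-bit word, two results: {result}")
```

One call to `packed_add` stands in for a single instruction that produces two half-precision results, which is where the doubled FLOPS figure comes from.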

AMD has been locked out of the data center for long enough. While it provides workstation cards, it lacks a number of key technologies needed for HPC, scaling and interoperability, and it’s something AMD is all too aware of. This is what’s driving its GPUOpen initiative through RTG: getting broader support not just at the OS level with drivers, but with software libraries and programming tools, including tools for translating CUDA code into portable C++.

HPC is not something we cover too much though, so we’ll leave it at that. Just know that AMD is branching out, and as such, some of the technologies being introduced with Vega are not strictly for gaming, but a framework for a much broader range of products in different sectors. Battle-harden the architecture in a known market before pushing it into the unknown.

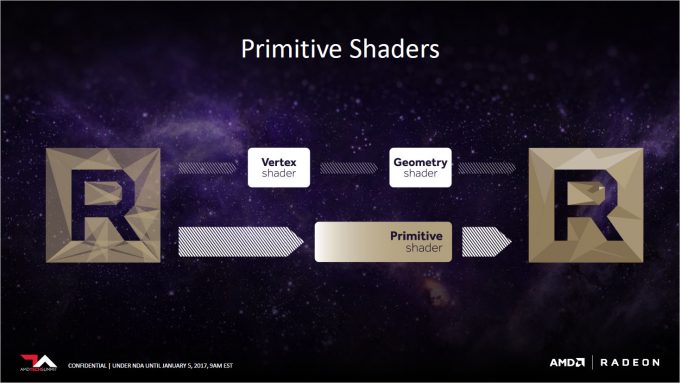

With that said, there are still some interesting architectural changes that may benefit games, one of which is the Primitive Shader. This is set to replace the vertex and geometry shaders that have existed since the DX10 days. Developers have been pushing so much work through those stages that they have had to turn to compute shaders to perform the same task. To make matters worse, not all objects in a scene are visible to the camera (and thus the player), so huge amounts of geometry are shaded even when they’re not in view.

Part of the fix is the primitive shader. It’s a more programmable geometry shader that offers the fixed hardware speed of a dedicated shader, but with some of the flexibility of the compute shader. Keeping the workflow within these programmable shaders also helps with culling unseen geometry and speeding up shading.
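
AMD's overview doesn't spell out which culling tests a primitive shader would run, so as a generic illustration, here is a sketch of one of the most common: back-face culling using the signed area of a triangle in screen space. It assumes counter-clockwise winding means front-facing, with the y axis pointing up. The function names are ours, and this is not a description of Vega's implementation.

```python
def signed_area(tri) -> float:
    """Signed area of a 2D triangle; positive for counter-clockwise winding."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    return 0.5 * ((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0))


def cull_back_faces(triangles):
    """Keep only front-facing triangles; drops back-facing and zero-area ones."""
    return [tri for tri in triangles if signed_area(tri) > 0]


front = ((0, 0), (1, 0), (0, 1))       # counter-clockwise: kept
back = ((0, 0), (0, 1), (1, 0))        # clockwise: culled
sliver = ((0, 0), (1, 1), (2, 2))      # collinear points, zero area: culled

visible = cull_back_faces([front, back, sliver])
print(f"{len(visible)} of 3 triangles survive culling")
```

The earlier a triangle is rejected like this, the less downstream shading work is wasted on it.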

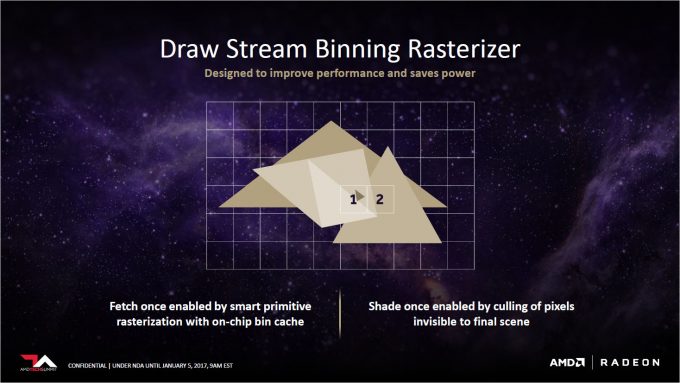

Speaking of shading, there is an overhaul in that department with the Draw-Stream Binning Rasterizer. This is meant to be a single-step rasterizer that uses a tile-based approach to rendering: breaking a scene up into tiles, figuring out which objects overlap, and only rendering what’s seen, all in a single pass. This effectively doubles the peak geometry rendering rate, so we expect to see some rather impressive numbers in the coming months.
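
The binning step of a tile-based approach can be sketched in a few lines. The example below assigns each triangle to every screen tile its bounding box touches, which is a conservative, generic form of binning. The tile size, function name and data layout are hypothetical, and the sketch stops short of the per-tile visibility resolution that decides what is actually drawn.

```python
import math
from collections import defaultdict

TILE = 32  # tile size in pixels (hypothetical value)


def bin_triangles(triangles, width, height, tile=TILE):
    """Map each tile (tx, ty) to the indices of triangles whose bounding box overlaps it."""
    bins = defaultdict(list)
    for idx, tri in enumerate(triangles):
        xs = [p[0] for p in tri]
        ys = [p[1] for p in tri]
        # Clamp the bounding box to the tiles that exist on screen.
        tx0 = max(0, math.floor(min(xs) / tile))
        ty0 = max(0, math.floor(min(ys) / tile))
        tx1 = min((width - 1) // tile, math.floor(max(xs) / tile))
        ty1 = min((height - 1) // tile, math.floor(max(ys) / tile))
        # Empty ranges (fully off-screen triangles) add nothing.
        for ty in range(ty0, ty1 + 1):
            for tx in range(tx0, tx1 + 1):
                bins[(tx, ty)].append(idx)
    return dict(bins)


scene = [
    ((0, 0), (10, 0), (0, 10)),            # fits inside tile (0, 0)
    ((20, 20), (40, 20), (20, 40)),        # straddles four tiles
    ((100, 100), (110, 100), (100, 110)),  # entirely off a 64x64 screen
]
bins = bin_triangles(scene, width=64, height=64)
for tile_xy in sorted(bins):
    print(tile_xy, bins[tile_xy])
```

Once geometry is sorted this way, each tile can be processed with only the triangles that might touch it, and hidden surfaces within the tile can be discarded before any pixels are shaded.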

That about wraps up the details we’ve been given, and we won’t hear about raw performance numbers, frequencies, SKU versions and such for at least another couple of months. How well all these architecture tweaks roll out into real-world performance, we’ll have to wait and see.
