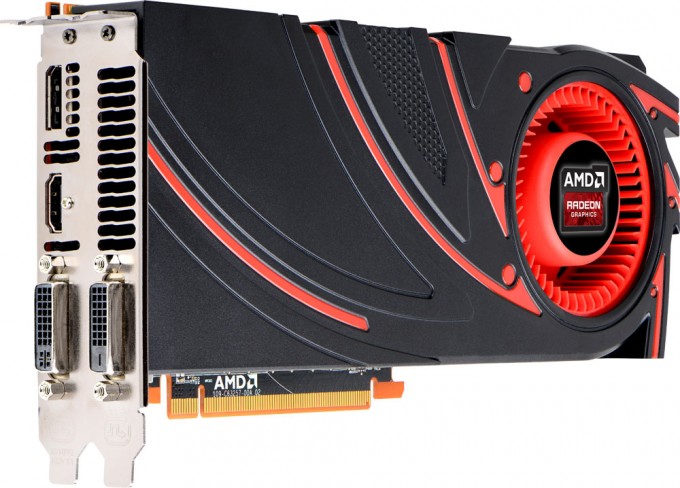

AMD Radeon R9 270X Graphics Card Review

We took AMD’s $300 Radeon R9 280X for a spin last week and came away quite impressed with how it fared against NVIDIA's GeForce GTX 760. Will the company’s Radeon R9 270X earn the same reaction? With the help of NVIDIA’s ~$175 GeForce GTX 660, we’re going to find out.

Page 1 – Introduction

After putting AMD’s Radeon R9 280X through the wringer against NVIDIA’s GeForce GTX 760 last week, we found that AMD had established itself as the bang-for-the-buck leader at the $250-$300 price point.

In this article, we’ll be taking a look at a similar match-up at a different price point: $200. The cards in question are AMD’s Radeon R9 270X and NVIDIA’s GeForce GTX 660. At the time of AMD’s launch last week, both cards retailed for $199; since then, NVIDIA has cut its pricing by $20-$25. This should be interesting.

The most common gaming resolution at the moment is 1080p, or 1920×1080 to be more specific. At its launch, NVIDIA’s GTX 660 was designed from the outset to handle current games at that resolution without trouble, so it’s no surprise to see AMD target the same with its R9 270X. Admittedly, though, 1080p isn’t as strenuous as it once was, so as we did last week, we’ll also be testing at 2560×1440 and at a single multi-monitor resolution of 4800×900 (3×1).
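To put rough numbers on how much harder the higher resolutions are, the sketch below compares how many pixels each one draws per frame. Pixel count is only a proxy for GPU load (frame rates rarely scale perfectly with it), and the dictionary and helper names are purely illustrative:

```python
# Resolutions used in this review: name -> (width, height)
RESOLUTIONS = {
    "1920x1080 (1080p)": (1920, 1080),
    "2560x1440": (2560, 1440),
    "4800x900 (3x1)": (4800, 900),
}


def pixel_count(width: int, height: int) -> int:
    """Total pixels the GPU must render for one frame."""
    return width * height


def relative_load(resolutions: dict[str, tuple[int, int]], baseline: str) -> dict[str, float]:
    """Pixels per frame for each resolution, expressed as a multiple of the baseline."""
    base = pixel_count(*resolutions[baseline])
    return {name: pixel_count(w, h) / base for name, (w, h) in resolutions.items()}


if __name__ == "__main__":
    for name, ratio in relative_load(RESOLUTIONS, "1920x1080 (1080p)").items():
        w, h = RESOLUTIONS[name]
        print(f"{name}: {pixel_count(w, h):,} pixels, {ratio:.2f}x 1080p")
```

By this measure, 2560×1440 pushes about 1.78× as many pixels per frame as 1080p, and 4800×900 about 2.08×.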

At the moment, AMD is still keeping mum on its upcoming flagship, the R9 290X, but expect that to change soon. For now, let’s tackle the current and upcoming lineup:

| AMD Radeon | Cores | Core MHz | Memory | Mem MHz | Mem Bus | TDP | Price |
|---|---|---|---|---|---|---|---|
| R9 290X | ??? | ??? | ??? | ??? | ??? | ??? | ??? |
| R9 290 | ??? | ??? | ??? | ??? | ??? | ??? | ??? |
| R9 280X | 2048 | Up to 1000 | 3072MB | 6000 | 384-bit | 250W | $299 |
| R9 270X | 1280 | Up to 1050 | 2048MB | 5600 | 256-bit | 180W | $199 |
| R7 260X | 896 | Up to 1100 | 2048MB | 6500 | 128-bit | 115W | $139 |
| R7 250 | 384 | Up to 1050 | 1024MB | 4600 | 128-bit | 65W | $??? |

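With 1,280 cores to the R9 280X's 2,048, the R9 270X has 62.5% of its bigger sibling's core count, though its top-end clock speed is slightly higher (1050MHz versus 1000MHz). The memory subsystem is trimmed as well: 2GB on a 256-bit bus instead of 3GB on a 384-bit bus, at a slightly lower memory clock. Given these changes, the results are sure to be interesting.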
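The core-count cut, and the narrower memory bus that comes with it, can be checked against the table above. This sketch assumes the "Mem MHz" column is the effective GDDR5 data rate (in MT/s), in which case theoretical peak bandwidth is the bus width in bytes multiplied by that rate; the `Card` class is a hypothetical helper, not anything published by AMD:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    cores: int
    mem_rate_mtps: int  # assumption: "Mem MHz" column = effective data rate in MT/s
    bus_width_bits: int

    def bandwidth_gbps(self) -> float:
        """Theoretical peak memory bandwidth in GB/s (1 GB = 10^9 bytes)."""
        bytes_per_transfer = self.bus_width_bits / 8
        return bytes_per_transfer * self.mem_rate_mtps / 1000


# Figures taken from the table above
R9_280X = Card("R9 280X", cores=2048, mem_rate_mtps=6000, bus_width_bits=384)
R9_270X = Card("R9 270X", cores=1280, mem_rate_mtps=5600, bus_width_bits=256)

core_ratio = R9_270X.cores / R9_280X.cores
print(f"270X cores vs 280X: {core_ratio:.1%}")
print(f"{R9_280X.name}: {R9_280X.bandwidth_gbps():.1f} GB/s")
print(f"{R9_270X.name}: {R9_270X.bandwidth_gbps():.1f} GB/s")
```

Under that assumption, the R9 270X has 62.5% of the R9 280X's cores and roughly 62% of its theoretical memory bandwidth (179.2GB/s versus 288GB/s).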

Some of what follows repeats our 280X review, since it applies to the 270X as well:

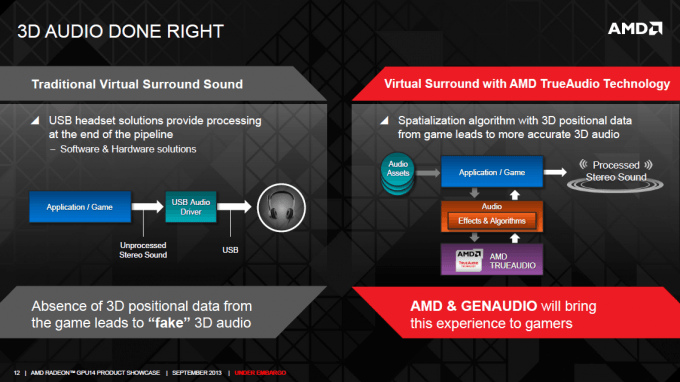

It’s not often that we hear (no pun intended) a GPU vendor talk about audio, but that was one of AMD’s major focuses at its event in Hawaii. With its “TrueAudio” DSP, the company (in partnership with others) hopes to redefine our expectations of gaming audio. A common goal with audio is to let us pinpoint where a sound is coming from; that’s one of AMD’s goals here, but what makes it all the more interesting is that we’re meant to get that benefit even from stereo output. Color me intrigued.

I’m not an audio guy (far from it), so there’s not a whole lot I can say about TrueAudio yet. No one outside of AMD and its partner companies can test it right now, so it’s a waiting game until support arrives. Here’s the takeaway, though: game developers have long had programmable shaders to work with; picture the same sort of flexibility with audio.

While not an issue on everyone’s mind, AMD is also taking advantage of this launch to bolster its 4K capabilities. The company is pushing for a new VESA standard that allows two outputs to drive two separate streams to a single 4K display. This would get us over the hurdle of being stuck at 30Hz at 4K, a typical problem at the moment (and an obvious drawback for gaming). Down the road, more advanced connectors should make this kind of workaround unnecessary.
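Some back-of-the-envelope arithmetic shows why splitting a 4K image into two streams helps. This sketch counts only visible pixels (it ignores blanking intervals and the limits of any particular link) and assumes, purely for illustration, that each stream drives one 1920×2160 half of a 3840×2160 panel:

```python
def active_pixel_rate(width: int, height: int, refresh_hz: int) -> int:
    """Visible pixels per second for one stream, ignoring blanking intervals."""
    return width * height * refresh_hz


# One stream carrying the whole 3840x2160 panel
full_4k_60 = active_pixel_rate(3840, 2160, 60)
full_4k_30 = active_pixel_rate(3840, 2160, 30)

# Assumption: each of two streams carries one 1920x2160 half of the panel
half_4k_60 = active_pixel_rate(1920, 2160, 60)

print(f"Single stream, 4K @ 60Hz: {full_4k_60:,} px/s")
print(f"Single stream, 4K @ 30Hz: {full_4k_30:,} px/s")
print(f"Each of two half-panel streams @ 60Hz: {half_4k_60:,} px/s")
```

Each half-panel stream at 60Hz carries the same active pixel rate as one full-panel stream at 30Hz, so two outputs, each handling what a single output handles for 4K at 30Hz, could together deliver 4K at 60Hz.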

Also on the display front, AMD’s latest GPUs continue to support 3 monitors off the same card, or 6 when a DisplayPort MST hub is used.

That all said, let’s get right into a look at our (revised) GPU testing methodology, and then tackle our first results.
