AMD’s Sub-$100 Line-up: Radeon HD 6450, 6570 & 6670 Review

Rounding out its Radeon HD 6000 series, AMD this month launched three sub-$100 graphics cards: the $55 HD 6450, the $79 HD 6570 and the $99 HD 6670. Despite being low-end options, all three support Eyefinity and are, of course, extremely power-efficient. Let’s see if they also have the gaming performance to boot.

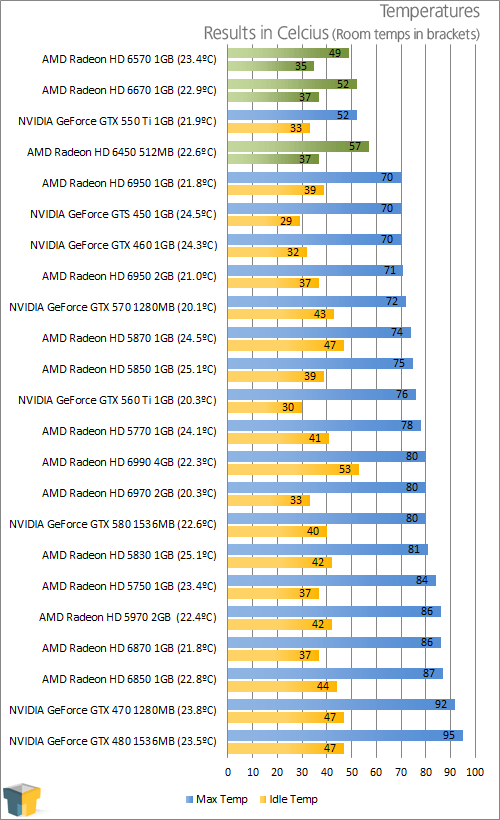

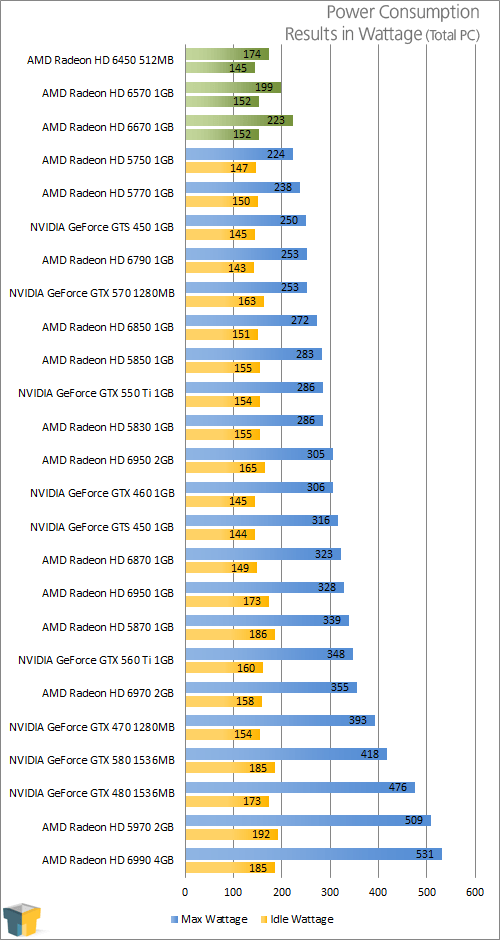

Page 9 – Power & Temperatures

To test our graphics cards for both temperatures and power consumption, we utilize OCCT for the stress-testing, GPU-Z for the temperature monitoring, and a Kill-a-Watt for power monitoring. The Kill-a-Watt is plugged into its own socket, with only the PC connected to it.

As per our guidelines when benchmarking with Windows, once the room temperature is stable (and reasonable), the test machine is booted up and left to sit at the desktop until it is completely idle. Because we are running such a highly optimized PC, this normally takes one or two minutes. Once things are good to go, the idle wattage is noted, GPU-Z is started up to begin monitoring card temperatures, and OCCT is set up to begin stress-testing.

To push the cards we test to their absolute limit, we use OCCT in full-screen 2560×1600 mode and allow it to run for 15 minutes, which includes a one-minute lull at the start and a four-minute lull at the end. After about five minutes, we begin to monitor our Kill-a-Watt to record the max wattage.
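To make the timing above concrete, here is a minimal sketch of how the load window and peak wattage could be worked out from hand-noted readings. The 15-minute run and the one- and four-minute lulls come from the text; the function names, the decision to stop watching once the end lull begins, and the sample readings are all assumptions made for illustration, since the Kill-a-Watt is simply read by eye.

```python
# Timing taken from the text: a 15-minute run with a 1-minute lull at the
# start and a 4-minute lull at the end. Everything else is hypothetical.
RUN_MINUTES = 15
START_LULL_MINUTES = 1
END_LULL_MINUTES = 4
WATCH_FROM_MINUTE = 5  # we begin watching the meter after about 5 minutes


def load_window(run=RUN_MINUTES, start_lull=START_LULL_MINUTES,
                end_lull=END_LULL_MINUTES):
    """Return (first, last) minute during which the GPU is under load."""
    return start_lull, run - end_lull


def peak_wattage(readings, watch_from=WATCH_FROM_MINUTE):
    """Highest reading noted from watch_from until the end lull begins.

    readings: iterable of (minute, watts) pairs noted by hand.
    Assumption: readings taken during the end lull are ignored.
    """
    _, load_end = load_window()
    in_window = [watts for minute, watts in readings
                 if watch_from <= minute <= load_end]
    if not in_window:
        raise ValueError("no readings inside the monitoring window")
    return max(in_window)


# Made-up readings for illustration only; not measurements from this review.
sample = [(0, 95), (2, 150), (5, 162), (8, 168), (11, 165), (13, 98)]
print(load_window())         # (1, 11)
print(peak_wattage(sample))  # 168
```

Under these assumptions the card is loaded for 10 of the 15 minutes, and the recorded maximum comes from roughly the last six minutes of that load, once power draw has had time to settle.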

In the case of dual-GPU configurations, we measure the temperature of the top graphics card because, in our tests, it’s usually the one that gets the hottest. This could depend on GPU cooler design, however.

Note: Due to changes AMD and NVIDIA made to the power schemes of their respective current-gen cards, we were unable to run OCCT on them. Rather, we had to use a less-strenuous run of 3DMark Vantage. We will be retesting all of our cards using this updated method the next time we overhaul our suite.

Once again, we can see rather expected results here.
