ATI Radeon HD 4890 & NVIDIA GeForce GTX 275

It’s not often we get to take two brand-new GPUs and pit them against each other in one launch article, but that’s what we’re doing with ATI’s HD 4890 and NVIDIA’s GTX 275. Both cards are priced at $249, and both also happen to offer great performance and insane overclocking ability. With those and other factors taken into account, who comes out on top?

Page 2 – ATI’s Radeon HD 4890 1GB

When ATI first released its HD 4000 series last summer, people all over were stunned. After going through such a rough patch (we don’t need to bring all that up again), the company really struck back with some stellar offerings, even putting NVIDIA’s own GT200 launch cards (launched a month earlier) to shame. Between then and now, though, the company hasn’t followed up with any other high-end cards aside from the dual-GPU X2 card. The HD 4890 is their answer to that issue.

Like the HD 4870, this card still offers 1GB of GDDR5, and on top of faster clock speeds, the GPU core itself has gone through some modification. While the HD 4870 utilized an RV770 core, the HD 4890’s tweaked version becomes the RV790. Although it’s pretty similar to the RV770 architecturally, and built on the same process node, ATI made various changes that allowed much higher clocks, and hopefully lower temps. These included a re-timing of key components to fix up any slow paths, which helped open the door to higher clock speeds, and moving some components around to achieve better power efficiency.

Because of these changes, ATI managed to add between 100 and 150MHz to the Core clock, while dropping the idle power consumption by 30W. On the other side of the coin, thanks to these higher frequencies, the load power draw has increased by 30W. As you can see below, while the HD 4870 had clock speeds of 750MHz on the Core and 900MHz on the Memory, the HD 4890 bumps those to 850MHz and 975MHz, respectively.

| Model | Core MHz | Mem MHz | Memory | Bus Width | Processors |
| --- | --- | --- | --- | --- | --- |
| Radeon HD 4870 X2 | 750 | 900 | 1024MB x 2 | 256-bit | 800 x 2 |
| Radeon HD 4850 X2 | 625 | 993 | 1024MB x 2 | 256-bit | 800 x 2 |
| Radeon HD 4890 | 850 – 900 | 975 | 1GB | 256-bit | 800 |
| Radeon HD 4870 | 750 | 900 | 512 – 1024MB | 256-bit | 800 |
| Radeon HD 4850 | 625 | 993 | 512 – 1024MB | 256-bit | 800 |
| Radeon HD 4830 | 575 | 900 | 256 – 512MB | 256-bit | 640 |
| Radeon HD 4670 | 750 | 900 – 1100 | 512 – 1024MB | 128-bit | 320 |
| Radeon HD 4650 | 600 | 400 – 500 | 512 – 1024MB | 128-bit | 320 |
| Radeon HD 4550 | 600 | 800 | 256 – 512MB | 64-bit | 80 |
| Radeon HD 4350 | 575 | 500 | 512MB | 64-bit | 80 |

Either to make things a little more confusing or to give consumers more choice, ATI will be offering manufacturers both a stock-clocked chip and an “OC” part, which increases the Core clock to 900MHz, or 150MHz above the HD 4870. With those clock bumps and the other architectural enhancements, it’s ATI’s hope that the HD 4890 breathes new life into what’s becoming an abundance of dull product launches.
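To put those clock bumps in perspective, here is a quick back-of-the-envelope sketch in Python. It uses only the Core and Memory clocks and the 256-bit bus width from the table above. The bandwidth helper rests on an assumption that isn't stated in ATI's material: that GDDR5 moves four transfers per pin per listed memory clock. The function names are made up for this illustration, and the results are theoretical peaks, not measured performance.

```python
# Clock figures (MHz) and bus width (bits) as listed in the spec table.
HD4870_CORE, HD4870_MEM = 750, 900
HD4890_CORE, HD4890_OC_CORE, HD4890_MEM = 850, 900, 975
BUS_WIDTH_BITS = 256


def uplift_percent(old_mhz, new_mhz):
    """Percentage increase when going from old_mhz to new_mhz."""
    return (new_mhz - old_mhz) / old_mhz * 100.0


def gddr5_bandwidth_gbs(mem_clock_mhz, bus_width_bits):
    """Theoretical peak bandwidth in GB/s.

    Assumption: GDDR5 performs 4 transfers per pin per memory clock.
    """
    transfers_per_second = mem_clock_mhz * 1e6 * 4
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


if __name__ == "__main__":
    print(f"Core, stock: +{uplift_percent(HD4870_CORE, HD4890_CORE):.1f}%")
    print(f"Core, OC:    +{uplift_percent(HD4870_CORE, HD4890_OC_CORE):.1f}%")
    print(f"Memory:      +{uplift_percent(HD4870_MEM, HD4890_MEM):.1f}%")
    print(f"HD 4870 peak: {gddr5_bandwidth_gbs(HD4870_MEM, BUS_WIDTH_BITS):.1f} GB/s")
    print(f"HD 4890 peak: {gddr5_bandwidth_gbs(HD4890_MEM, BUS_WIDTH_BITS):.1f} GB/s")
```

In other words, the Core clock gains are noticeably larger in relative terms than the Memory clock gain, so any speed-up from clocks alone should lean more on the shader side than on bandwidth.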

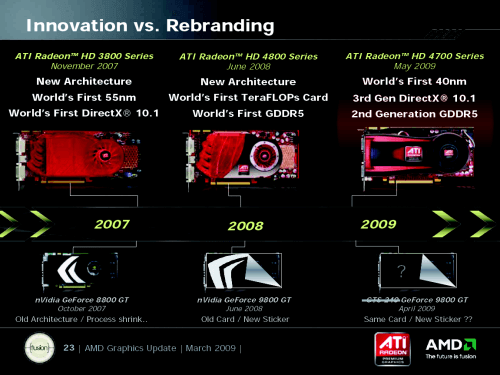

During the conference call last week, ATI made sure to point out that while the competition continues to release re-hashed parts, they continue to innovate. In November of 2007, they note, they released the world’s first 55nm part, and also the first DirectX 10.1 part. In June of 2008, they followed up with a new architecture and delivered the first GDDR5 card, and also the first card to offer over 1TFLOP of computational power.

Finally, in May of this year, the company will release their HD 4700 series, which will become the first GPUs on the market to be built on a 40nm process. In that regard, they’re even ahead of the likes of AMD and Intel. This card will also offer third-generation DirectX 10.1 support and second-generation GDDR5. What either of those means, I have no idea.

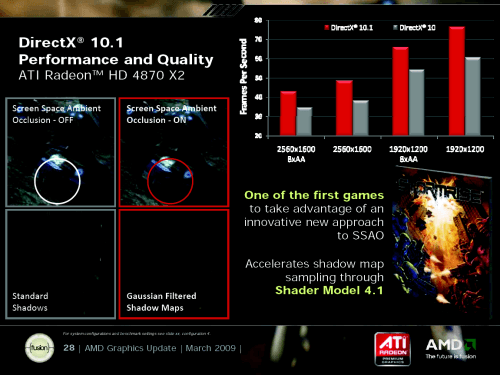

DirectX 10.1 is one feature they talked about a fair bit on last week’s call, and it’s no surprise given that NVIDIA never jumped on that bandwagon for some reason. They pointed out such titles as S.T.A.L.K.E.R.: Clear Sky (which can eat me), Sega’s Stormrise, Tom Clancy’s H.A.W.X and BattleForge. They also mentioned that the Unigine game engine utilizes DX 10.1 as well.

Another feature that ATI as well as NVIDIA talked a lot about was Ambient Occlusion, a technique used to add more realistic shadows to certain objects in gaming. Although it can be executed in varying ways, the common goal of AO is to deliver accurate shadows to objects that may otherwise look incorrect due to lighting from global illumination.

Essentially, scenes and objects should look more realistic. One particular example NVIDIA gave was of Half-Life 2. Throughout the game, there are phone booths, and while they look as though they are pressed up against the wall, AO shows that they are not, thanks to the more realistic shadow effects. Because AO bases itself off of various lighting sources, there is going to be a performance hit, and it’s expected to be in the 30% range.
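For the curious, the basic idea behind AO can be shown with a toy example. The Python sketch below is not what ATI’s or NVIDIA’s implementations do. It’s a simplified, horizon-style estimate on a one-dimensional height field, with a made-up function name and a made-up scene. For each spot on the ground, it looks left and right, finds how high the nearby geometry rises above the horizon, and treats that as the portion of the sky that is blocked. The more sky that’s blocked, the darker the spot should be.

```python
import math


def ambient_visibility(heights, i, radius=4):
    """Fraction (0..1) of the sky visible from cell i of a 1D height field.

    Toy horizon-style estimate: 1.0 means fully open, lower means more
    occluded by nearby geometry.
    """
    def horizon_angle(step):
        # Highest elevation angle of any neighbour within `radius` cells
        # in one direction (step is -1 for left, +1 for right).
        best = 0.0
        for distance in range(1, radius + 1):
            j = i + step * distance
            if 0 <= j < len(heights):
                rise = heights[j] - heights[i]
                best = max(best, math.atan2(rise, distance))
        return best

    blocked = horizon_angle(-1) + horizon_angle(+1)
    return 1.0 - blocked / math.pi


if __name__ == "__main__":
    # Hypothetical scene: flat ground with a tall wall at the right edge.
    scene = [0, 0, 0, 0, 0, 3]
    for cell in range(len(scene) - 1):
        print(cell, round(ambient_visibility(scene, cell), 3))
```

Cells closer to the wall report lower visibility, which is the kind of subtle darkening that lets you tell an object is sitting near a wall rather than pasted onto it. Doing something like this per pixel, in two dimensions and with many more samples, gives a sense of where the performance hit comes from.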

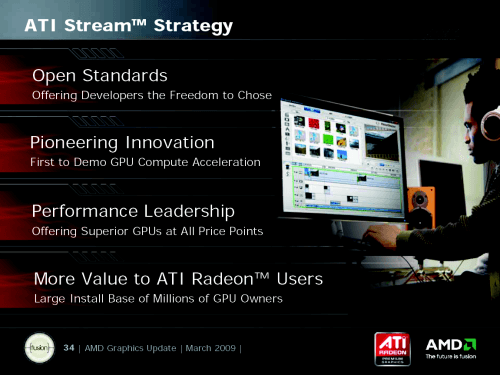

Aside from Ambient Occlusion, ATI talked more about their Stream technology, which is their solution to General Purpose computing on the GPU (GPGPU). Although the company is seemingly far behind NVIDIA in this respect, they gave examples of where they see their technology being used, such as media-related applications, graphic design, operating system functions, special effects, AI and of course, physics.

Being a huge supporter of OpenCL, ATI proved it with a demo with Havok at the GDC that showed cloth effects being handled by the GPU. This technique is similar to PhysX, but we’re obviously in the very early stages where OpenCL and Havok are concerned. It does give us hope to see a proper PhysX competitor in the near future though.

Finally, ATI wrapped up their call with talks about Windows 7. Simply put, their dedication to the new OS is great. So great in fact, that they have worked with Microsoft to include their graphics drivers right on the Windows 7 install DVD. Although we can likely expect the same thing from NVIDIA, I didn’t receive an answer when I asked them about it.

As you can see, ATI had a lot more to talk about during their call last week than just the HD 4890. No complaints here… it’s all looking great as far as I’m concerned. So how does NVIDIA stack up? The next page tells all.
