ATI’s Radeon HD 5670 – DirectX 11 for $100

AMD has delivered a couple of firsts over the past few months, and it’s keeping the tradition going with its release of the market’s first $100 DirectX 11-capable graphics card. Despite its budget status, the HD 5670 retains the HD 5000-series’ impressive power consumption and low idle temperatures, along with AMD’s Eyefinity support.

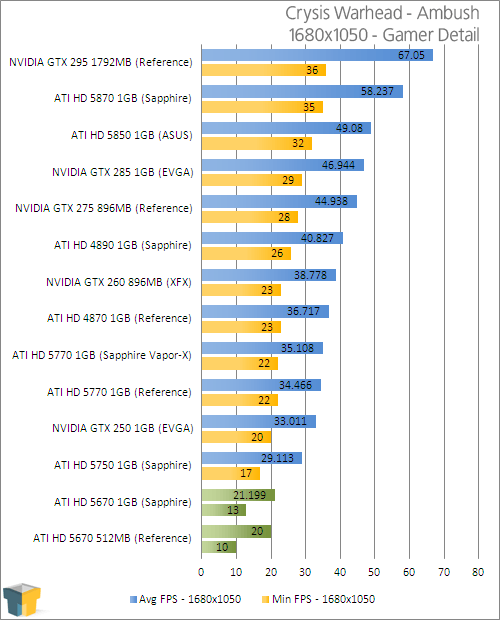

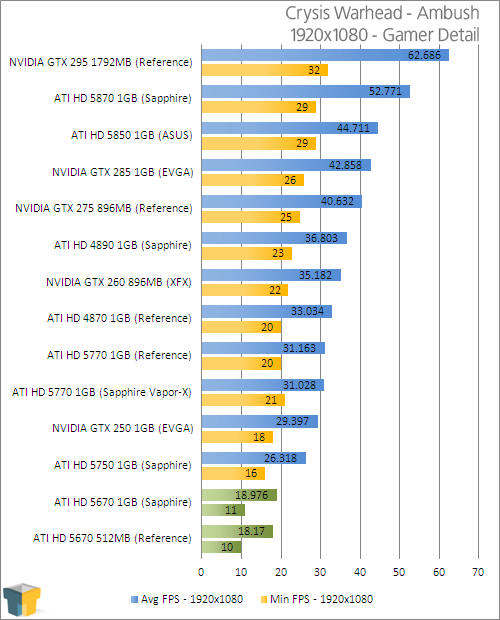

Page 5 – Crysis Warhead

Like Call of Duty, Crysis is another series that doesn’t need much of an introduction. Thanks to the fact that almost any comments section for a PC performance-related article asks, “Can it run Crysis?”, even those who don’t play computer games no doubt know what Crysis is. When Crytek first released Far Cry, it delivered an incredible game engine with huge capabilities, and Crysis simply took things to the next level.

Although the sequel, Warhead, has been available for just about a year, it still manages to push the highest-end systems to their breaking point. It wasn’t until this past January that we finally found a graphics solution to handle the game at 2560×1600 at its Enthusiast level, but even that was without AA! Something tells me Crysis will remain the de facto standard for GPU benchmarking for the next while.

Manual Run-through: Whenever we have a new game in-hand for benchmarking, we make every attempt to explore each level of the game to find out which is the most brutal towards our hardware. Ironically, after spending hours exploring this game’s levels, we found the first level in the game, “Ambush”, to be the hardest on the GPU, so we stuck with it for our testing. Our run starts from the beginning of the level and stops shortly after we reach the first bridge.

These results aren’t too much of a surprise, given that Crysis is one of the most gluttonous games on the market. Even at 1680×1050, the performance was tar-like. Given that, what’s our best playable?

| Graphics Card | Best Playable | Min FPS | Avg. FPS |
| --- | --- | --- | --- |
| NVIDIA GTX 295 1792MB (Reference) | 2560×1600 – Gamer, 0xAA | 19 | 40.381 |
| ATI HD 5870 1GB (Reference) | 2560×1600 – Gamer, 0xAA | 20 | 32.955 |
| ATI HD 5850 1GB (ASUS) | 2560×1600 – Mainstream, 0xAA | 28 | 52.105 |
| NVIDIA GTX 285 1GB (EVGA) | 2560×1600 – Mainstream, 0xAA | 27 | 50.073 |
| NVIDIA GTX 275 896MB (Reference) | 2560×1600 – Mainstream, 0xAA | 24 | 47.758 |
| NVIDIA GTX 260 896MB (XFX) | 2560×1600 – Mainstream, 0xAA | 21 | 40.501 |
| ATI HD 4890 1GB (Sapphire) | 2560×1600 – Mainstream, 0xAA | 19 | 39.096 |
| ATI HD 4870 1GB (Reference) | 2560×1600 – Mainstream, 0xAA | 20 | 35.257 |
| ATI HD 5770 1GB (Vapor-X) | 2560×1600 – Mainstream, 0xAA | 19 | 35.923 |
| ATI HD 5770 1GB (Reference) | 2560×1600 – Mainstream, 0xAA | 20 | 35.256 |
| NVIDIA GTX 250 1GB (EVGA) | 2560×1600 – Mainstream, 0xAA | 18 | 34.475 |
| ATI HD 5750 1GB (Sapphire) | 1920×1080 – Mainstream, 0xAA | 21 | 47.545 |
| ATI HD 5670 1GB (Sapphire) | 1920×1080 – Mainstream, 0xAA | 20 | 35.367 |
| ATI HD 5670 512MB (Reference) | 1920×1080 – Mainstream, 0xAA | 20 | 35.103 |

Simply downgrading the Gamer profile to Mainstream made all the difference in the world, enabling us to game on both versions of the card at 1080p with 35 FPS on average. The 20 FPS minimum seems a tad low, but as you can see, the frame rate in this title dips on every card, and it’s really not all that noticeable during gameplay. Warhead is one of the few games where 20 FPS is actually playable, so anything from 30 FPS up is great.
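For readers who want to produce Min FPS and Avg. FPS figures of their own from a manual run-through like the one described above, here is a minimal Python sketch. It is not the method used for this review, which doesn't state how its numbers were captured. The sketch assumes you have a list of per-frame render times in milliseconds from whatever capture tool you use; the function name `fps_summary` and the sample data are hypothetical. It counts frames per whole second of the run for the minimum, and divides total frames by total time for the average.

```python
from collections import Counter
from typing import Sequence, Tuple


def fps_summary(frame_times_ms: Sequence[float]) -> Tuple[int, float]:
    """Return (min FPS, average FPS) for a run given per-frame times in ms.

    Assumption: "min FPS" means the fewest frames that started within any
    complete one-second window, counted from the start of the run.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    total_ms = sum(frame_times_ms)
    full_seconds = int(total_ms // 1000)
    if full_seconds == 0:
        raise ValueError("run must last at least one full second")

    # Average FPS: total frames divided by total elapsed seconds.
    avg_fps = len(frame_times_ms) / (total_ms / 1000.0)

    # Bucket each frame by the second in which it started.
    frames_in_second = Counter()
    start_ms = 0.0
    for frame_time in frame_times_ms:
        frames_in_second[int(start_ms // 1000)] += 1
        start_ms += frame_time

    # Ignore a trailing partial second so it can't skew the minimum.
    min_fps = min(frames_in_second[s] for s in range(full_seconds))
    return min_fps, avg_fps


# Synthetic data, not from the review: one second at 50 FPS,
# then one second at 20 FPS.
synthetic_run = [20.0] * 50 + [50.0] * 20
min_fps, avg_fps = fps_summary(synthetic_run)
print(min_fps, round(avg_fps, 3))  # prints: 20 35.0
```

A run with a smooth first half and a heavy second half can land on a 35 FPS average while still dipping to 20, which is why the table lists both figures.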
