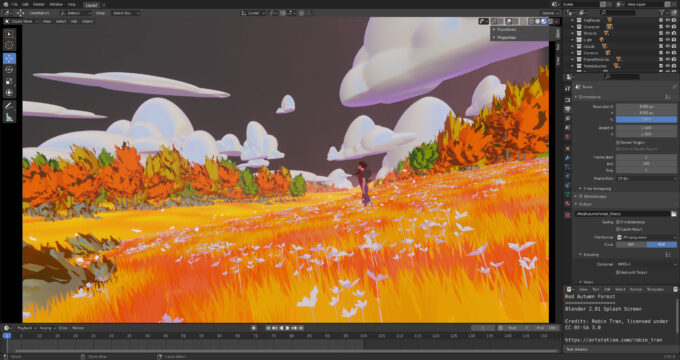

Blender 2.91: Best CPUs & GPUs For Rendering & Viewport

With Blender 2.91 recently released, as well as a fresh crop of hardware from both AMD and NVIDIA, we’re tackling performance from many different angles here. On tap is rendering with the CPU, GPU, and CPU+GPU, as well as viewport – with wireframe testing making a rare appearance for important reasons. Let’s dig in.

Page 1 – Rendering Performance (CPU, GPU, Hybrid & NVIDIA OptiX)

Get the latest GPU rendering benchmark results in our more up-to-date Blender 3.6 performance article.

The fourth and final major Blender release of 2020 dropped late last month, and as usual, we didn’t waste much time before testing it out. As has unfortunately become standard fare, we ran into a few issues that complicated our progress, but they’ve also given us more to talk about this time around.

As before, this article is going to tackle rendering with GPUs, CPUs, OptiX for NVIDIA GPUs, as well as heterogeneous rendering, combining the forces of CPUs with NVIDIA’s GeForce RTX 3070. On the second page, we’ll be poring over viewport performance, which is a lot more interesting in 2.91 than it has been in previous versions – leading us to even include wireframe performance for the first time.

For a look at everything the Blender 2.91 release brings to the table, we’d suggest heading over to the detailed release page, or to the even more in-depth release notes. We’ll next revisit Blender for in-depth testing with 2.92, due for release at the end of February.

Here’s a full list of all of the CPUs and GPUs we tested for this article:

CPUs & GPUs Tested in Blender 2.91

| CPU | Cores | Base Clock | Price |
|---|---|---|---|
| AMD Ryzen Threadripper 3990X | 64 | 2.9 GHz | $3,990 |
| AMD Ryzen Threadripper 3970X | 32 | 3.7 GHz | $1,999 |
| AMD Ryzen Threadripper 3960X | 24 | 3.8 GHz | $1,399 |
| AMD Ryzen 9 5950X | 16 | 3.4 GHz | $799 |
| AMD Ryzen 9 5900X | 12 | 3.7 GHz | $549 |
| AMD Ryzen 7 5800X | 8 | 3.8 GHz | $449 |
| AMD Ryzen 5 5600X | 6 | 3.7 GHz | $299 |
| Intel Core i9-10980XE | 18 | 3.0 GHz | $999 |
| Intel Core i9-10900K | 10 | 3.7 GHz | $499 |
| Intel Core i5-10600K | 6 | 4.1 GHz | $269 |

| GPU | Memory | Price |
|---|---|---|
| AMD Radeon RX 6900 XT | 16GB | $999 |
| AMD Radeon RX 6800 XT | 16GB | $649 |
| AMD Radeon RX 6800 | 16GB | $579 |
| AMD Radeon RX 5700 XT | 8GB | $399 |
| AMD Radeon RX 5700 | 8GB | $349 |
| AMD Radeon RX 5600 XT | 6GB | $279 |
| AMD Radeon RX 5500 XT | 8GB | $199 |
| NVIDIA GeForce RTX 3090 | 24GB | $1,499 |
| NVIDIA GeForce RTX 3080 | 10GB | $699 |
| NVIDIA GeForce RTX 3070 | 8GB | $499 |
| NVIDIA GeForce RTX 3060 Ti | 8GB | $399 |
| NVIDIA TITAN RTX | 24GB | $2,499 |
| NVIDIA GeForce RTX 2080 Ti | 11GB | $1,199 |
| NVIDIA GeForce RTX 2080 SUPER | 8GB | $699 |
| NVIDIA GeForce RTX 2070 SUPER | 8GB | $499 |
| NVIDIA GeForce RTX 2060 SUPER | 8GB | $399 |
| NVIDIA GeForce RTX 2060 | 6GB | $349 |
| NVIDIA GeForce GTX 1660 Ti | 6GB | $279 |

Test platform notes:

- Motherboard chipset drivers were updated on each platform before testing.
- All GPU testing was performed on our Ryzen Threadripper 3970X workstation, with 32GB (8GB x4) of DDR4-3200 Corsair Vengeance RGB Pro memory.
- All CPUs were tested with 64GB (16GB x4) of DDR4-3200 Corsair Dominator Platinum memory.
- AMD Radeon driver: Adrenalin 20.11.2
- NVIDIA GeForce & TITAN driver: GeForce 457.09 (457.40 for the RTX 3060 Ti)

All of our GPU testing was performed on our AMD Ryzen Threadripper workstation PC, with 32GB of Corsair memory. CPU and CPU+GPU testing was performed on each respective platform, with 64GB of memory, also from Corsair. Each platform is largely kept stock, except for enabling the memory profile and disabling any automatic overclocking features that break the “stock” nature of the processor.

We also used the latest version of Windows 10 (20H2), along with recent GPU drivers. As always, our OS is optimized to reduce bottlenecks as much as possible, and all tests are run at least three times to ensure accuracy.
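As a rough illustration of the repeat-run approach, the sketch below summarizes a set of timings by their median and the worst deviation of any single run from it. The helper name `summarize_runs` and the choice of a median are assumptions made for illustration, not a description of our actual test harness, and the sample timings are made up.

```python
from statistics import median


def summarize_runs(times_s, min_runs=3):
    """Return (median time, worst relative deviation from the median).

    Hypothetical helper: it only enforces the "at least three runs" rule and
    reports how tightly the runs agree with each other.
    """
    if len(times_s) < min_runs:
        raise ValueError(f"need at least {min_runs} runs, got {len(times_s)}")
    mid = median(times_s)
    # Largest distance of any single run from the median, as a fraction.
    spread = max(abs(t - mid) for t in times_s) / mid
    return mid, spread


# Made-up timings for one project, in seconds (not results from this article).
mid, spread = summarize_runs([20.4, 20.1, 20.3])
print(f"median {mid:.1f} s, max deviation {spread:.1%}")
```

A large deviation would be a cue to rerun a test rather than publish the median.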

GPU: CUDA, OptiX & OpenCL Rendering

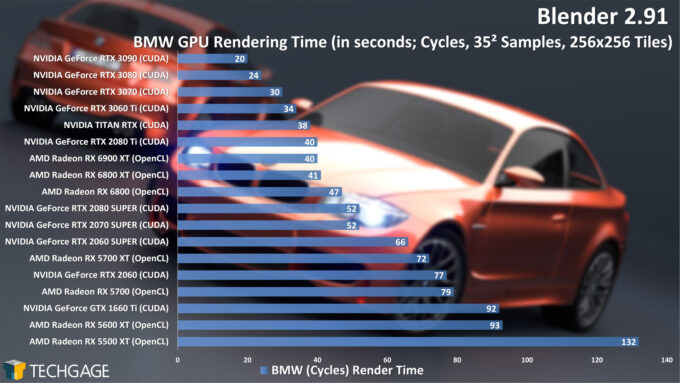

The popular BMW Blender project from Mike Pan is one of the lightest projects we benchmark with across any of our tested renderers, but it’s become a de facto standard over the years because it doesn’t take long to render, yet still shows great scaling across product stacks, CPU and GPU alike. Nothing changes in that regard with the latest crop of hardware releases.

From the get-go here, we can see that AMD has a difficult fight on its hands, despite making great strides between the RDNA and RDNA2 generations on the ray tracing front. If NVIDIA’s Ampere didn’t exist, it’d be exciting to see a GPU like the Radeon RX 6800 XT catch up to the GeForce RTX 2080 Ti – but then NVIDIA comes along and releases a $399 GeForce RTX 3060 Ti that outperforms its last-gen $2,499 TITAN RTX.

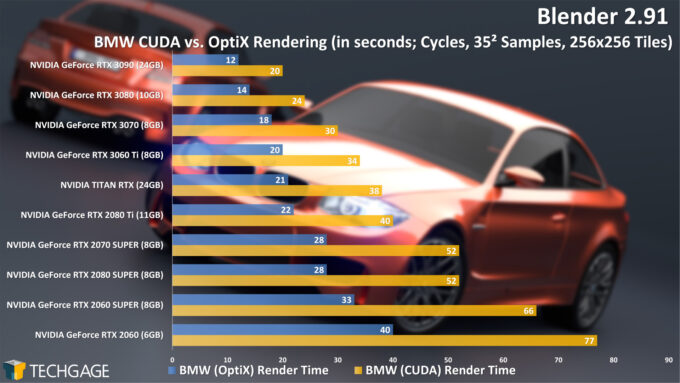

The CUDA vs. OpenCL performance above doesn’t seem too harsh on AMD’s GPUs, but unfortunately for Team Red, NVIDIA has a trick up its sleeve: OptiX-accelerated ray tracing, which uses the dedicated RT cores in Turing and Ampere. When the OptiX API is used instead of CUDA, the RTX 3060 Ti’s 34-second render time drops to 20 seconds:

These results must sting for AMD: with OpenCL, the RX 6900 XT renders this project in 40 seconds, exactly matching the last-gen RTX 2060 with OptiX enabled. Another useful generational comparison is between the last-gen $399 RTX 2060 SUPER and the current-gen RTX 3060 Ti at the same price, where the OptiX render time drops from 33 to 20 seconds.
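To put those numbers in relative terms, here is a small worked calculation that uses only the BMW render times quoted above. The helper names are hypothetical, and because the published times are rounded to whole seconds, the ratios are approximate.

```python
def speedup(before_s, after_s):
    """How many times faster the 'after' result is than the 'before' one."""
    return before_s / after_s


def time_saved(before_s, after_s):
    """Fraction of the original render time that is eliminated."""
    return (before_s - after_s) / before_s


# BMW render times quoted in the text, in seconds: (before, after).
comparisons = {
    "RTX 3060 Ti, CUDA -> OptiX": (34, 20),
    "OptiX, RTX 2060 SUPER -> RTX 3060 Ti": (33, 20),
}

for label, (before, after) in comparisons.items():
    print(f"{label}: {speedup(before, after):.2f}x faster, "
          f"{time_saved(before, after):.0%} less time")
```

This works out to roughly 1.70x (about 41% less render time) for switching the RTX 3060 Ti from CUDA to OptiX, and roughly 1.65x (about 39% less) for moving from the RTX 2060 SUPER to the RTX 3060 Ti at the same $399 price.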

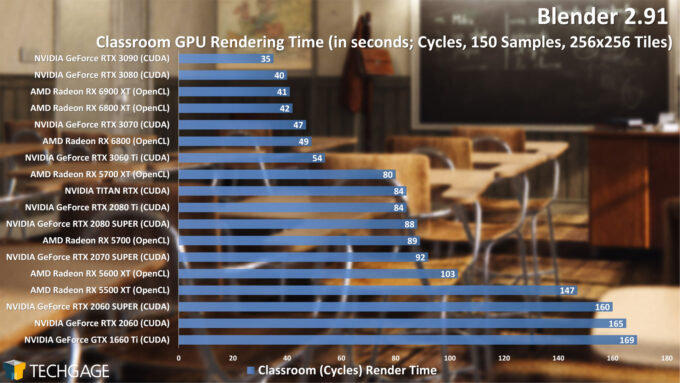

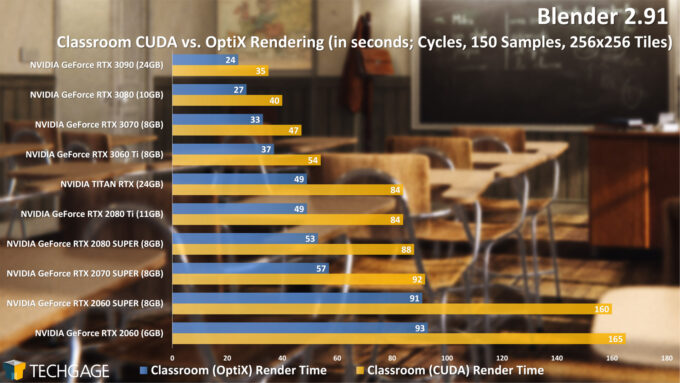

Here’s the same take with the more advanced Classroom project:

We’re not entirely sure why, but AMD’s Radeon GPUs have historically been pretty strong in the Classroom project compared to how they scale in the BMW one. While it isn’t listed here, this is one project where the older Radeon VII posted a really strong time. Once again, if OptiX didn’t exist, this would look great for Radeon, but OptiX works wonders here too:

An important thing to note is that OptiX hasn’t yet reached feature parity with CUDA, so if you’re converting older projects, you may have to adjust them to render correctly. A short list on Blender’s developer site shows which features are still missing. At the moment, baking, branched path tracing, CPU + GPU rendering, and bevel support are missing, but the latter two will make it into the 2.92 release. 2.92 will also drop OptiX’s experimental tag, encouraging more folks to use it.
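For anyone triaging older projects, that feature gap can be expressed as a simple check. This is a hypothetical sketch: the feature labels are plain strings chosen to mirror the four items named above, not anything Blender itself reports, so you would still need to inspect your own scene to know which features it relies on.

```python
# The four OptiX gaps in Blender 2.91 named in the text. The labels are
# hypothetical strings chosen for this sketch, not values Blender reports.
OPTIX_291_MISSING = frozenset({"baking", "branched path tracing", "cpu+gpu", "bevel"})


def optix_blockers(features_used):
    """Return the subset of a project's features that OptiX in 2.91 lacks."""
    return {f.lower() for f in features_used} & OPTIX_291_MISSING


def pick_backend(features_used):
    """Prefer OptiX on RTX cards, falling back to CUDA if anything blocks it."""
    return "CUDA" if optix_blockers(features_used) else "OPTIX"


print(pick_backend({"Bevel"}))  # a Controller-style project that uses bevel
print(pick_backend(set()))      # nothing in the project depends on the gaps
```

A project like Controller, which relies on bevel, falls back to CUDA in 2.91. Once 2.92 arrives, the set would need updating, since CPU + GPU and bevel support are expected there.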

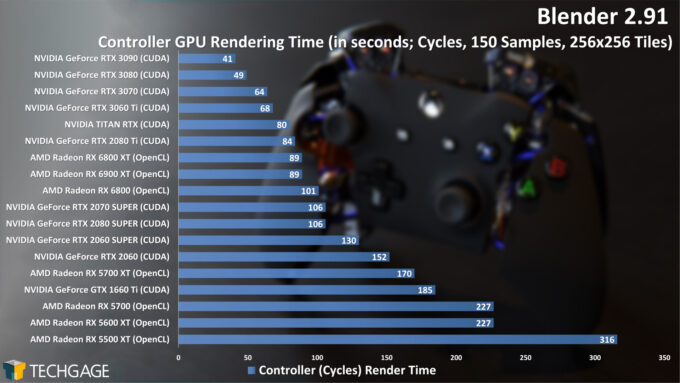

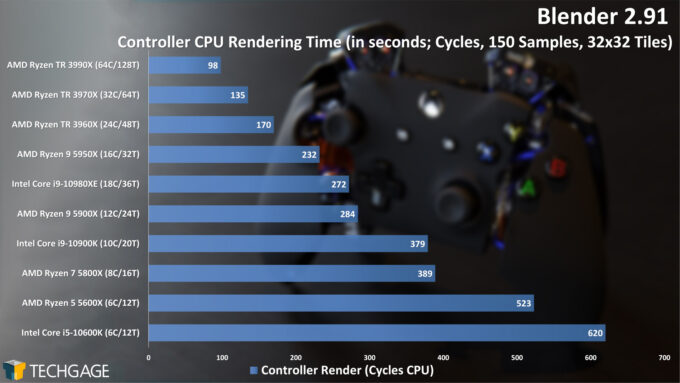

The Controller project up next doesn’t render with OptiX in Blender 2.91 because of the missing bevel support, but it does render in the current 2.92 alpha. We’ll wait for the final release before running a before/after comparison, but for now, here’s the CUDA vs. OpenCL performance in 2.91:

Once again, AMD doesn’t fare too badly against NVIDIA’s last-gen competition, but Ampere is a serious force to be reckoned with. Even without the use of RT cores, NVIDIA’s ray traced rendering performance is really strong.

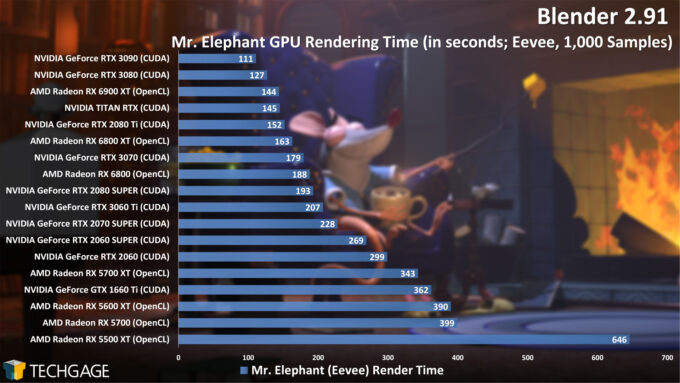

Fortunately for AMD, not all hope is lost: if someone dedicates their entire Blender use to the Eevee rendering engine, the scales tip quite a bit. OptiX isn’t supported in Eevee, and while NVIDIA still delivers the stronger performance, the gap is nowhere near as wide as what we saw with Cycles + OptiX:

What’s made clear across all of these results is that you really don’t want to go with a low-end graphics card if you have a lot of work to get rendered. If you run 100 samples per frame, then you could consider the above Eevee performance to represent about 10 seconds of animation.

Another important factor to keep in mind is your GPU’s frame buffer. 8GB is likely to suit the general audience for the time being, but it’s hard to predict when it will begin to feel like a real limitation. AMD offers more memory for the dollar, but that doesn’t matter a great deal when the memory bandwidth is lower and the cards still underperform their NVIDIA competitors.
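As a quick sanity check on the memory-per-dollar point, the sketch below divides launch price by VRAM for the current-gen cards in our test list. The figures come straight from the list of tested GPUs; the metric itself is a deliberate simplification that ignores memory bandwidth and render speed entirely, which is exactly why it shouldn’t drive a purchase on its own.

```python
# (VRAM in GB, launch price in USD) for the current-gen cards tested.
cards = {
    "Radeon RX 6900 XT": (16, 999),
    "Radeon RX 6800 XT": (16, 649),
    "Radeon RX 6800": (16, 579),
    "GeForce RTX 3090": (24, 1499),
    "GeForce RTX 3080": (10, 699),
    "GeForce RTX 3070": (8, 499),
    "GeForce RTX 3060 Ti": (8, 399),
}


def dollars_per_gb(vram_gb, price_usd):
    """Launch price divided by frame buffer size (ignores bandwidth and speed)."""
    return price_usd / vram_gb


# Cheapest memory per dollar first.
for name in sorted(cards, key=lambda n: dollars_per_gb(*cards[n])):
    vram, price = cards[name]
    print(f"{name:20}{vram:3} GB  ${price:>5,}  ${dollars_per_gb(vram, price):6.2f}/GB")
```

By this measure the Radeon RX 6800 comes out on top at about $36 per GB, versus roughly $62 per GB for the GeForce RTX 3070, even though the charts above show the GeForce cards delivering the stronger Cycles performance.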

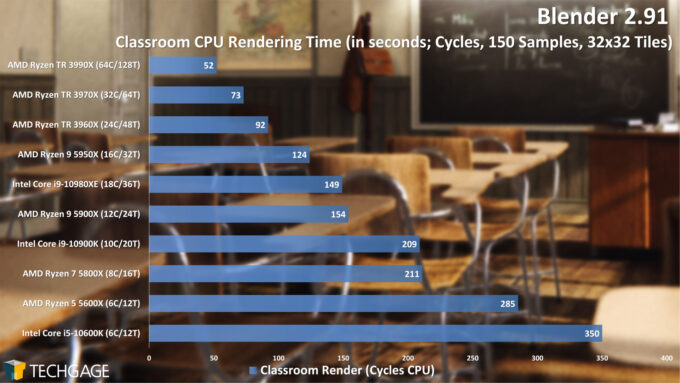

CPU Rendering

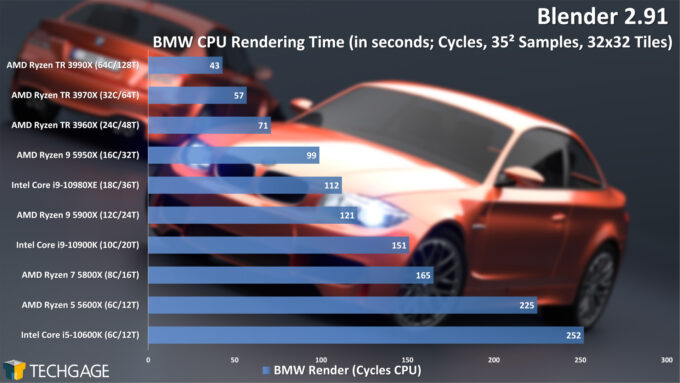

An interesting thing about CPU rendering in Blender is that for most of the software’s life, it was the only option. That of course meant that the more powerful the CPU, the faster your render times would be. Fast-forward to today, though, and current GPUs are so fast that they almost make the CPU seem irrelevant in some ways.

Take, for example, the fact that it takes AMD’s 64-core Ryzen Threadripper 3990X to hit a 43-second render time in the BMW project, a result roughly matched by the $649 Radeon RX 6800 XT. That ignores NVIDIA’s even stronger performance, though: the $399 GeForce RTX 3060 Ti hits 34 seconds with CUDA, or 20 seconds with OptiX. You’re reading that right: NVIDIA’s (currently) lowest-end Ampere GeForce renders these projects as fast as or faster than AMD’s biggest CPU.
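A worked version of that comparison, using only the BMW times and prices quoted in this article, converts each result into renders per hour and then normalizes by component price. Treat it as a rough illustration: it compares the price of the CPU or GPU alone rather than a complete system, and the helper name is our own.

```python
def renders_per_hour(seconds_per_render):
    """Convert a single-render time into throughput."""
    return 3600 / seconds_per_render


# BMW render time (seconds) and launch price (USD), as quoted in the text.
results = {
    "Threadripper 3990X (CPU)": (43, 3990),
    "RTX 3060 Ti (CUDA)": (34, 399),
    "RTX 3060 Ti (OptiX)": (20, 399),
}

for name, (seconds, price) in results.items():
    rate = renders_per_hour(seconds)
    per_k = rate / price * 1000  # renders per hour for every $1,000 spent
    print(f"{name:26}{rate:7.1f} renders/h {per_k:8.1f} per $1,000")
```

That puts the RTX 3060 Ti with OptiX at roughly 21.5x the BMW throughput per dollar of the Threadripper 3990X, although the GPU still needs a host system to run in.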

Because GPUs are so darn fast at rendering, your choice of CPU will be dictated by your other workloads. You can still improve overall rendering time with a more powerful CPU, but the reality is, if you want faster rendering, you’d be better off buying a second GPU and enjoying nearly twice the rendering performance, rather than an improvement of up to 10% from a faster CPU. That said, a large pool of system memory is still an advantage with more complex scenes, where the GPU’s frame buffer might be limiting. Not to mention that rendering is just one part of the 3D design process, since baking, physics, and compositing are still CPU-bound.
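The “nearly twice” figure for a second GPU follows from a simple idealized model in which each device’s throughput (renders per second) adds up. The sketch below is that model, labeled as an assumption: real multi-device rendering falls short of it because of scheduling overhead and uneven work distribution, so it gives a ceiling on speed rather than a prediction.

```python
def ideal_combined_time(*device_times_s):
    """Best-case time to render one job split across several devices.

    Assumes perfect load balancing, so per-device throughputs (1 / time) add
    up. Real renders fall short of this because of scheduling overhead and
    uneven work distribution, so treat the result as a lower bound on time.
    """
    if not device_times_s:
        raise ValueError("need at least one device time")
    return 1.0 / sum(1.0 / t for t in device_times_s)


# Two RTX 3060 Ti cards on BMW with CUDA, 34 s each per the text.
print(f"{ideal_combined_time(34, 34):.1f} s")
```

For two RTX 3060 Ti cards at 34 seconds each with CUDA, the ideal bound is 17 seconds; anything a real dual-GPU setup achieves will land somewhat above that.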

Another thing to consider is that faster clock speeds, and faster single-threaded performance in general, can speed up interactions in the OS and applications, so to us, a great option for a new high-end workstation would be AMD’s $549 Ryzen 9 5900X or Intel’s $499 Core i9-10900K. That assumes you don’t need quad-channel memory, though; that feature is only available on AMD’s Threadripper and Intel’s Core X enthusiast platforms.

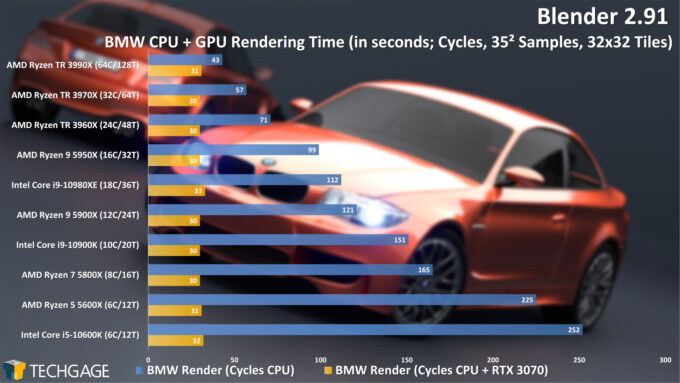

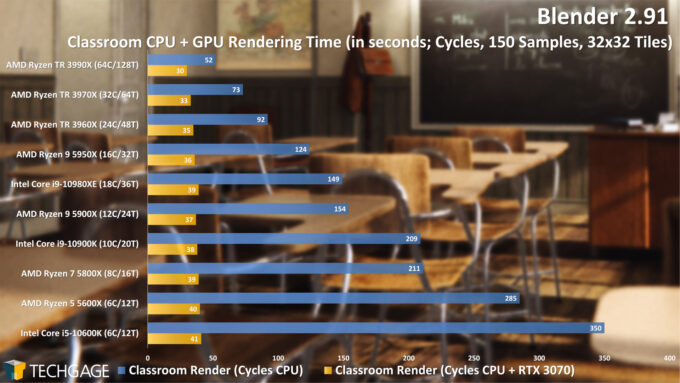

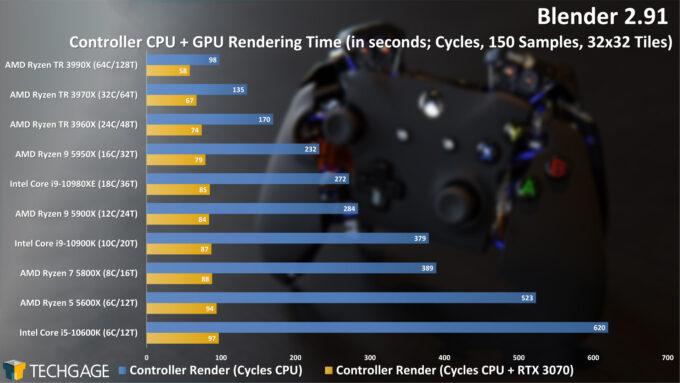

CPU + GeForce RTX 3070 Rendering

Now let’s see how performance scales when each of these CPUs is paired with NVIDIA’s $499 GeForce RTX 3070:

If it wasn’t already clear from the previous results that the GPU is much quicker at rendering in Blender than even the biggest CPUs, these three charts should seal the deal. The RTX 3070 used in these tests isn’t the highest-end card going, but it highlights the fact that multiple GPUs would deliver far greater speed-ups than pairing a big CPU with the GPU.

All of that being said, if you don’t have a second GPU but still want a rendering speed-up, it makes sense to enable heterogeneous (CPU + GPU) rendering with CUDA, unless OptiX proves even quicker on its own.
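For scripting this setup, Blender exposes the Cycles device settings through its Python API. The sketch below assumes the Cycles add-on preferences object at `bpy.context.preferences.addons["cycles"].preferences`, with its `compute_device_type` property, `get_devices()` method, and `devices` collection; the `enable_devices` helper and the stub class used to run it outside Blender are hypothetical. It also encodes the 2.91 limitation noted earlier, where CPU + GPU rendering isn’t available with OptiX.

```python
from types import SimpleNamespace


def enable_devices(prefs, backend="CUDA", include_cpu=True):
    """Pick a Cycles compute backend and tick the devices to render with.

    `prefs` is assumed to behave like Blender's Cycles add-on preferences:
    a `compute_device_type` property, a `get_devices()` method that refreshes
    the device list, and a `devices` collection whose items expose `name`,
    `type` and `use`. Returns the names of the devices that were enabled.
    """
    if backend == "OPTIX" and include_cpu:
        # Per the text, Blender 2.91 lacks CPU + GPU rendering with OptiX.
        raise ValueError("CPU + GPU rendering is not available with OptiX in 2.91")
    prefs.compute_device_type = backend
    prefs.get_devices()  # refresh the list for the selected backend
    enabled = []
    for dev in prefs.devices:
        # Enable GPUs of the chosen backend, plus the CPU for hybrid rendering.
        dev.use = dev.type == backend or (include_cpu and dev.type == "CPU")
        if dev.use:
            enabled.append(dev.name)
    return enabled


class StubPrefs:
    """Hypothetical offline stand-in so the helper can run outside Blender."""

    def __init__(self, devices):
        self.compute_device_type = "NONE"
        self.devices = devices

    def get_devices(self):
        return self.devices


stub = StubPrefs([
    SimpleNamespace(name="GeForce RTX 3070", type="CUDA", use=False),
    SimpleNamespace(name="Ryzen Threadripper 3970X", type="CPU", use=False),
])
print(enable_devices(stub, "CUDA", include_cpu=True))

# Inside Blender, the equivalent call would look like this:
#   import bpy
#   prefs = bpy.context.preferences.addons["cycles"].preferences
#   enable_devices(prefs, "CUDA", include_cpu=True)
#   bpy.context.scene.cycles.device = "GPU"
```

Setting the scene’s Cycles device to GPU is still required; the preferences only decide which hardware the GPU setting maps to.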

On the next page, we’re going to shift topics from rendering to viewport performance.
