Blender 2.93 Rendering & Viewport Performance: Best CPUs & GPUs

With the release of Blender 2.93, the 2.X era comes to an end. Considering that Blender 2.0 was released in August 2000, you could say that this moment is a bit special. It’d be an understatement to say that the software has come a long way in that time, so let’s honor the final major 2.X release with some in-depth rendering and viewport benchmarking, shall we?

Page 1 – Blender 2.93 Rendering Tests: CPU, GPU, Hybrid & NVIDIA OptiX

Get the latest GPU rendering benchmark results in our more up-to-date Blender 3.6 performance article.

Last month, the Blender Foundation marked the end of the 2.X era with its 2.93 release. Next up will be a major update in the form of 3.0, which will come sometime later this year. While there’s a lot to look forward to with 3.0, Blender 2.93 proves itself to be an explosive release, one that’s still very much worth being excited for.

In 2.92, Blender gained its Geometry Nodes feature, and in 2.93, it’s been vastly augmented, with 22 new nodes, and a mind-boggling amount of potential coming with them. The Still Life project, seen below, makes heavy use of Geometry Nodes, so if you’re wanting to dig in and explore how they work, be sure to head over to the Blender demo files page and snag it. If you do go there, you should also check out the bottom of the page, as there are many more cool Geometry Nodes demos to play with.

Also new in Blender 2.93 are EEVEE upgrades, which include a rewritten depth of field algorithm that improves accuracy. As you’ll see in our EEVEE performance graph below, there have also been notable performance improvements that make it essential to upgrade to 2.93 if you use EEVEE in your workflow.

If you’re a Grease Pencil user, you’ll likely also want to move to 2.93 quickly, as that toolset received major updates as well, including efficiency improvements and the ability to export to SVG or PDF for further editing in other applications.

To get a fuller look at what’s new in 2.93, you should check out the official features page. If you want to really get your hands dirty, you could also hit up the even more detailed release notes page. 2.93 isn’t just the final 2.X release, it’s also an LTS release, so users who adopt it can expect minor updates (including security fixes) for roughly two years.

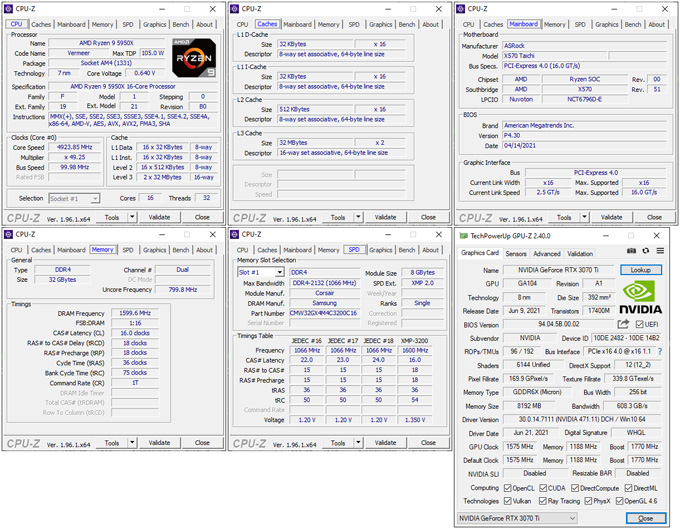

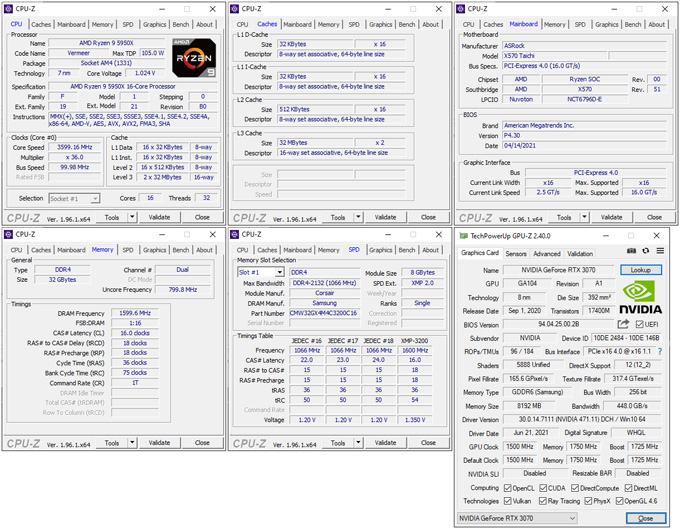

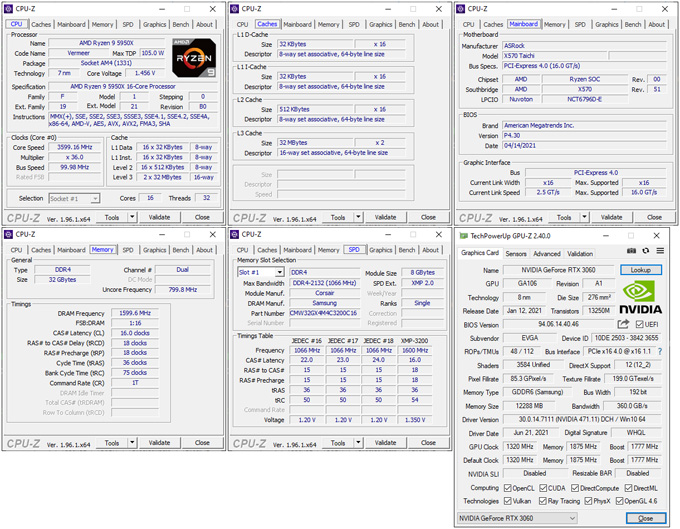

As we usually do, we’re diving into Blender 2.93’s performance in this article, taking a look at both rendering (on the CPU and GPU) and viewport performance (on the next page). We tested Blender and other renderers for a recent article, but since that was published, we decided to redo all of our 2.93 testing on an AMD Ryzen 9 5950X platform to ensure that the CPU isn’t a bottleneck in the viewport tests.

Before we jump into performance, here’s a quick overview of AMD’s and NVIDIA’s current lineups:

AMD’s Radeon Creator & Gaming GPU Lineup

| | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | Price |
|---|---|---|---|---|---|---|---|
| RX 6900 XT | 5,120 | 2,250 | 23 TFLOPS | 16 GB ¹ | 512 GB/s | 300W | $999 |
| RX 6800 XT | 4,608 | 2,250 | 20.7 TFLOPS | 16 GB ¹ | 512 GB/s | 300W | $649 |
| RX 6800 | 3,840 | 2,105 | 16.2 TFLOPS | 16 GB ¹ | 512 GB/s | 250W | $579 |
| RX 6700 XT | 2,560 | 2,581 | 13.2 TFLOPS | 12 GB ¹ | 384 GB/s | 230W | $479 |

Notes: ¹ GDDR6. Architecture: RX 6000 = RDNA2
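For readers wondering where the Peak FP32 column comes from, the figures can be reproduced from the core count and boost clock. The sketch below assumes the usual convention that each shader core retires one fused multiply-add (counted as two floating-point operations) per clock; the function name is ours, and real-world throughput will sit below this theoretical ceiling.

```python
def peak_fp32_tflops(shader_cores: int, boost_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumption: one fused multiply-add (2 FLOPs) per core per clock.
    """
    flops_per_second = shader_cores * 2 * boost_mhz * 1e6
    return flops_per_second / 1e12


# Core counts and boost clocks copied from the Radeon table above.
radeon_lineup = {
    "RX 6900 XT": (5120, 2250),
    "RX 6800 XT": (4608, 2250),
    "RX 6800": (3840, 2105),
    "RX 6700 XT": (2560, 2581),
}

for name, (cores, boost_mhz) in radeon_lineup.items():
    print(f"{name}: {peak_fp32_tflops(cores, boost_mhz):.1f} TFLOPS")
```

The same arithmetic applies to the GeForce cards, although vendor-quoted figures can differ by a tenth of a TFLOPS depending on how the boost clock was rounded.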

At the moment, AMD doesn’t offer a current-gen GPU for under $479, which means that for anything less expensive, you’d need to go back to the original RDNA generation. That’s not something we’d suggest if you’re a heavy Blender user, as we’ve encountered stability issues with it in the past. Unfortunately, some of those issues still exist with the current RDNA2 generation, but they’re less common.

One of the biggest perks to AMD’s lineup is the amount of memory offered on the top-end cards, easily beating NVIDIA’s similarly-priced options. However, that bigger frame buffer comes at the expense of weaker bandwidth, with NVIDIA taking a lead there thanks to its use of GDDR6X. And on the NVIDIA note:

NVIDIA’s GeForce Creator & Gaming GPU Lineup

| | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
|---|---|---|---|---|---|---|---|
| RTX 3090 | 10,496 | 1,700 | 35.6 TFLOPS | 24GB ¹ | 936 GB/s | 350W | $1,499 |
| RTX 3080 Ti | 10,240 | 1,670 | 34.1 TFLOPS | 12GB ¹ | 912 GB/s | 350W | $1,199 |
| RTX 3080 | 8,704 | 1,710 | 29.7 TFLOPS | 10GB ¹ | 760 GB/s | 320W | $699 |
| RTX 3070 Ti | 6,144 | 1,770 | 21.7 TFLOPS | 8GB ¹ | 608 GB/s | 290W | $599 |
| RTX 3070 | 5,888 | 1,730 | 20.4 TFLOPS | 8GB ² | 448 GB/s | 220W | $499 |
| RTX 3060 Ti | 4,864 | 1,670 | 16.2 TFLOPS | 8GB ² | 448 GB/s | 200W | $399 |
| RTX 3060 | 3,584 | 1,780 | 12.7 TFLOPS | 12GB ² | 360 GB/s | 170W | $329 |

Notes: ¹ GDDR6X; ² GDDR6. Architecture: RTX 3000 = Ampere

NVIDIA’s current-gen lineup is more complete than AMD’s, although with the market being what it is, even the lower-end parts are selling for much higher prices than they should. Fortunately, there seems to be some light at the end of the tunnel, with decreased cryptocurrency mining gains starting to result in better prices from third-party sellers online. We can only hope this trend continues, and that we can return to normalcy sooner rather than later.

Nonetheless, the de facto “ultimate” GPU from NVIDIA is the GeForce RTX 3090, as it offers a monstrous frame buffer, and plenty of bandwidth to go along with it. The 3080 Ti, with its 12GB frame buffer, also becomes an alluring choice, with most cards under that providing a more modest 10GB or 8GB frame buffer.

The RTX 3060 could be intriguing to those who want 12GB on the cheap, but the performance seen throughout our graphs will show that it does come at the expense of falling further behind the RTX 3060 Ti than you might imagine, based on paper specs. While it’s more memory, it’s also slower memory, with the 3060 Ti offering about 25% better bandwidth – but at the expense of a smaller frame buffer. We’re hopeful next-gen cards will be even more generous with memory sizes.
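The bandwidth gap between these two cards follows directly from their memory configurations. As a rough sketch, peak bandwidth is the bus width in bytes multiplied by the per-pin data rate. Note that the bus widths and data rates below are NVIDIA's published specifications rather than figures from our tables, so treat them as assumptions; the function name is also ours.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumed specs (not from this article): bus width in bits, data rate in Gbps.
rtx_3060_ti = memory_bandwidth_gbs(256, 14.0)  # 8GB GDDR6
rtx_3060 = memory_bandwidth_gbs(192, 15.0)     # 12GB GDDR6

advantage_pct = (rtx_3060_ti / rtx_3060 - 1) * 100

print(f"RTX 3060 Ti: {rtx_3060_ti:.0f} GB/s")
print(f"RTX 3060:    {rtx_3060:.0f} GB/s")
print(f"3060 Ti bandwidth advantage: {advantage_pct:.1f}%")
```

In other words, the RTX 3060 gets its extra capacity on a narrower bus, which is why it ends up with more memory but less bandwidth.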

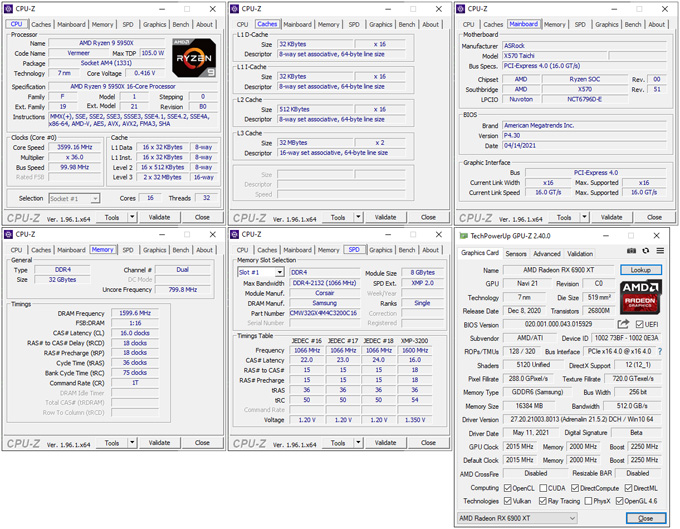

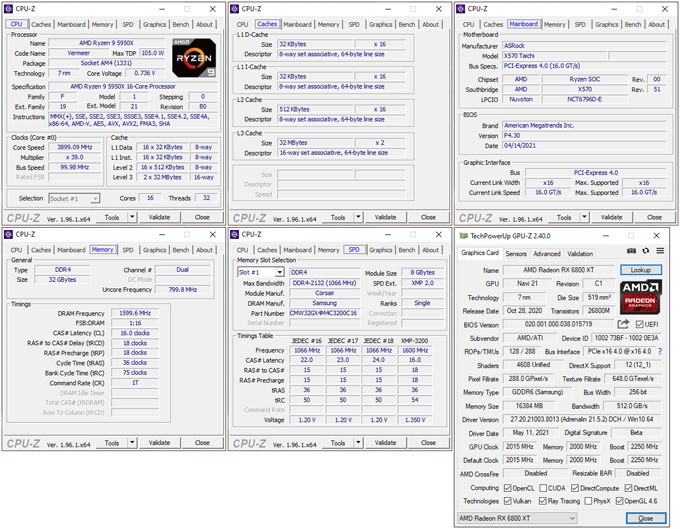

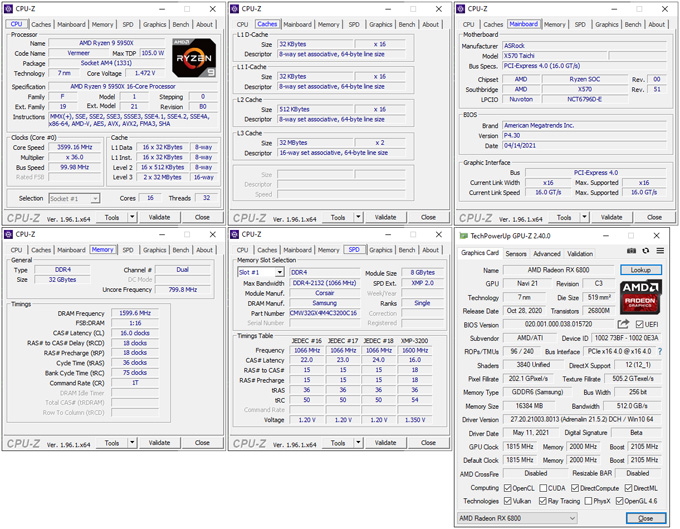

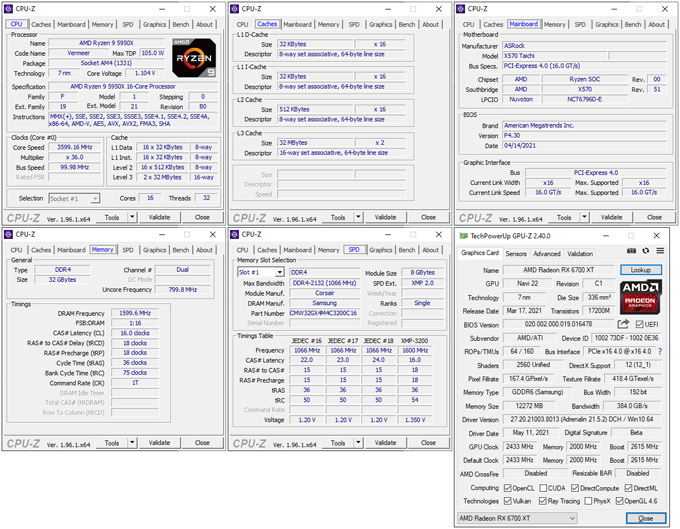

Onto a quick look at our test system:

Techgage Workstation Test System

| Component | Details |
|---|---|
| Processor | AMD Ryzen 9 5950X (16-core; 3.4GHz) |
| Motherboard | ASRock X570 TAICHI (EFI: P4.30 04/14/2021) |
| Memory | Corsair Vengeance RGB Pro (CMW32GX4M4C3200C16) 4x8GB; DDR4-3200 16-18-18 |
| AMD Graphics | AMD Radeon RX 6900 XT (16GB; Adrenalin 21.5.2) |
| | AMD Radeon RX 6800 XT (16GB; Adrenalin 21.5.2) |
| | AMD Radeon RX 6800 (16GB; Adrenalin 21.5.2) |
| | AMD Radeon RX 6700 XT (12GB; Adrenalin 21.5.2) |
| NVIDIA Graphics | NVIDIA GeForce RTX 3090 (24GB; GeForce 471.11) |
| | NVIDIA GeForce RTX 3080 Ti (12GB; GeForce 471.11) |
| | NVIDIA GeForce RTX 3080 (10GB; GeForce 471.11) |
| | NVIDIA GeForce RTX 3070 Ti (8GB; GeForce 471.11) |
| | NVIDIA GeForce RTX 3070 (8GB; GeForce 471.11) |
| | NVIDIA GeForce RTX 3060 Ti (8GB; GeForce 471.11) |
| | NVIDIA GeForce RTX 3060 (12GB; GeForce 471.11) |
| Audio | Onboard |
| Storage | AMD OS: Samsung 500GB SSD (SATA) |
| | NVIDIA OS: Samsung 500GB SSD (SATA) |
| Power Supply | Corsair RM850X |
| Chassis | Fractal Design Define C Mid-Tower |
| Cooling | Corsair Hydro H100i PRO RGB 240mm AIO |
| Et cetera | Windows 10 Pro build 19043.1081 (21H1) |
| | AMD chipset driver 2.17.25.506 |


All of our testing is completed using up-to-date test PCs, with current versions used for Windows, the chipset driver, the EFI, and also the graphics drivers. For NVIDIA GPU testing, we used the current 471.11 Studio driver, while for AMD, we used Adrenalin 21.5.2. AMD has more recent drivers than this, but they’ve caused us issues with some of our renders, so we had to stick to 21.5.2. We submitted this issue through the Radeon bug reporter tool, so hopefully we’ll see different results soon enough.

Here are some other general guidelines we follow:

- Disruptive services are disabled, e.g., Search, Cortana, User Account Control, Defender, etc.

- Overlays and / or other extras are not installed with the graphics driver.

- Vsync is disabled at the driver level.

- OSes are never transplanted from one machine to another.

- We validate system configurations before kicking off any test run.

- Testing doesn’t begin until the PC is idle (keeps a steady minimum wattage).

- All tests are repeated until there is a high degree of confidence in the results.
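To illustrate what "repeated until there is a high degree of confidence" could look like in practice, here is a minimal sketch of a repeat-until-stable loop. It is not our actual test harness: the function name, the run limits, and the 2% variation threshold are all hypothetical, and the render times fed to it are made up purely for demonstration.

```python
import statistics
from typing import Callable, List


def run_until_stable(
    measure: Callable[[], float],
    min_runs: int = 3,
    max_runs: int = 10,
    max_cv: float = 0.02,
) -> List[float]:
    """Repeat a measurement until run-to-run variation is small.

    Stops once at least `min_runs` samples exist and their coefficient of
    variation (sample standard deviation / mean) is at or below `max_cv`,
    or when `max_runs` samples have been collected.
    """
    samples: List[float] = []
    while len(samples) < max_runs:
        samples.append(measure())
        if len(samples) >= min_runs:
            cv = statistics.stdev(samples) / statistics.mean(samples)
            if cv <= max_cv:
                break
    return samples


# Made-up render times in seconds; a real harness would time an actual render.
fake_times = iter([30.0, 30.2, 29.9, 30.1])
samples = run_until_stable(lambda: next(fake_times))
print(f"{len(samples)} runs, mean {statistics.mean(samples):.2f} s")
```

A cap on the number of runs matters here, since a system with a background task misbehaving might otherwise never converge.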

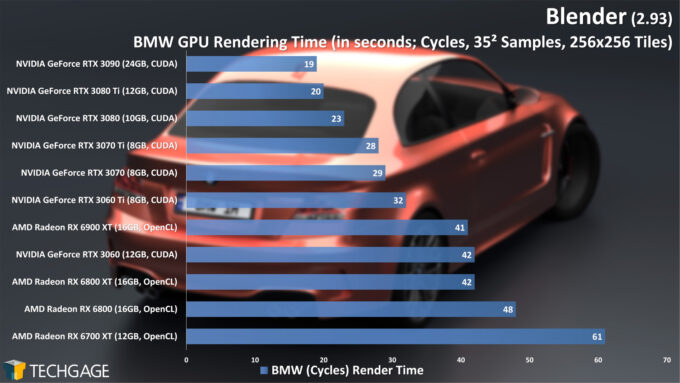

GPU: CUDA, OptiX & OpenCL Rendering

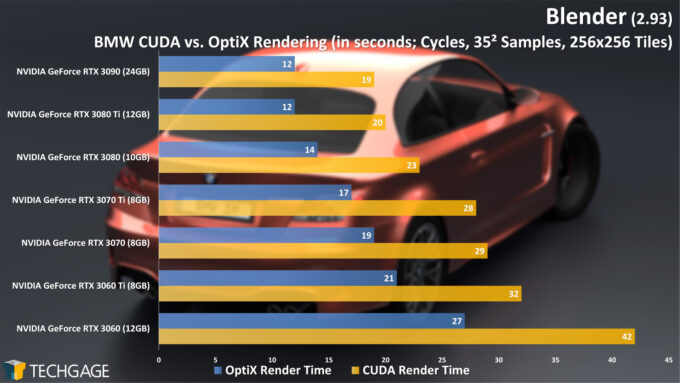

We’re going to kick off our look at Blender 2.93 performance with the help of three projects, including two classics, and the new Still Life one. Because using NVIDIA’s OptiX API with accelerated ray tracing makes such a notable performance difference, we’re including those respective charts after each of the CUDA/OpenCL ones. Let’s start with one of the true classics, BMW:

In our CUDA vs. OpenCL battle, AMD sits far behind NVIDIA, with the lowly 3060 Ti managing to beat out the RX 6900 XT. That painful delta for the red team is made even worse when OptiX is brought in, as that same 3060 Ti cuts a 32 second render time down to 21 seconds. AMD has historically performed better in the more complex Classroom project, so let’s pore over that next:

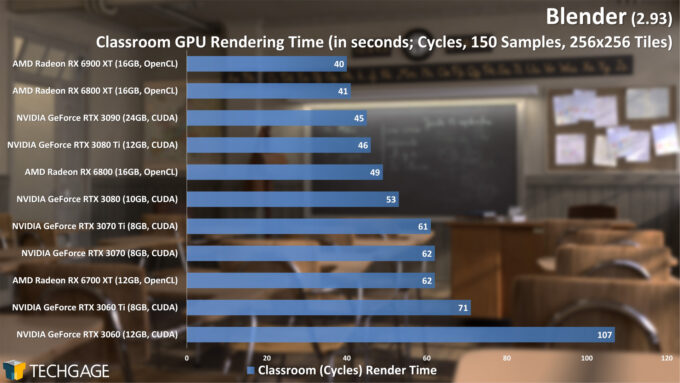

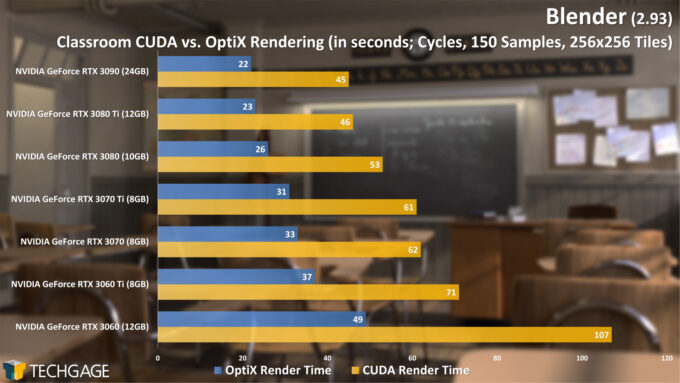

Indeed, AMD performs a lot better in the Classroom project than in the simpler BMW one, and as we’ve commented on before, we’re not sure why. It’s been that way for about as long as we’ve been testing the project, however, so it’s nice to see the red team redeem itself a bit here. That said, adding OptiX ray tracing acceleration to the picture changes the overall performance scaling quite significantly, with the RTX 3060 Ti suddenly matching the RX 6900 XT.

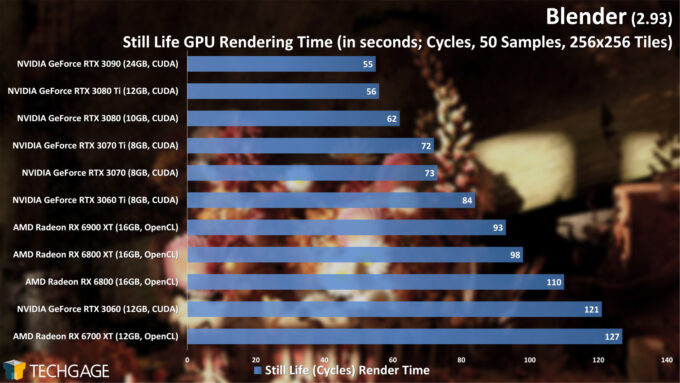

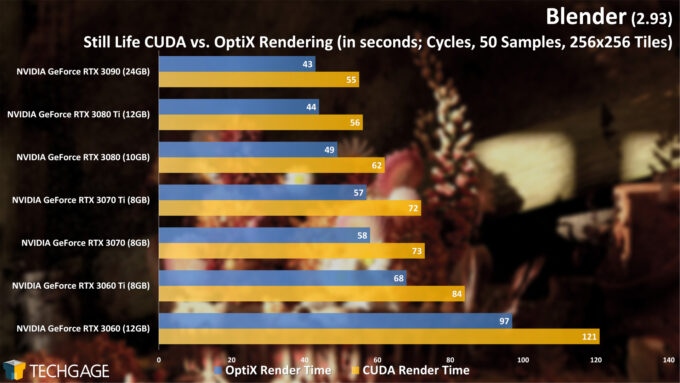

For this article, we decided to give the new Still Life project a good test, since it’s one of the first official projects in a while that has seemed suitable for benchmarking (e.g., it has little wasted time before the GPU is engaged in the render). Let’s take a look at that one:

There’s quite a bit of interesting data here to chew on. For starters, with the non-OptiX renders, NVIDIA still manages to hold a commanding lead. With the OptiX API engaged, we’re seeing nice gains, but not at the same level we saw in the previous tests. Still, no one is going to balk at a render speed improvement of 20% or so.
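Percentages like these can be read two ways, as a reduction in render time or as an increase in render speed, and the two are not interchangeable. The sketch below uses the RTX 3060 Ti BMW figures quoted earlier (32 seconds with CUDA, 21 seconds with OptiX) to show the difference; the helper names are ours.

```python
def time_reduction_pct(before_s: float, after_s: float) -> float:
    """How much shorter the render is, as a percentage of the original time."""
    return (before_s - after_s) / before_s * 100


def speedup_factor(before_s: float, after_s: float) -> float:
    """How many times faster the render completes."""
    return before_s / after_s


cuda_s, optix_s = 32.0, 21.0  # BMW on the RTX 3060 Ti, as quoted earlier

print(f"Time reduction: {time_reduction_pct(cuda_s, optix_s):.1f}%")
print(f"Speedup:        {speedup_factor(cuda_s, optix_s):.2f}x")

# A render that is 20% faster (1.2x) takes 1/1.2 of the original time.
print(f"20% faster means {(1 - 1 / 1.2) * 100:.1f}% less time")
```

So the BMW result is roughly a one-third cut in render time, or about a 1.5x speedup, while a 20% speed gain trims the wait by about a sixth.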

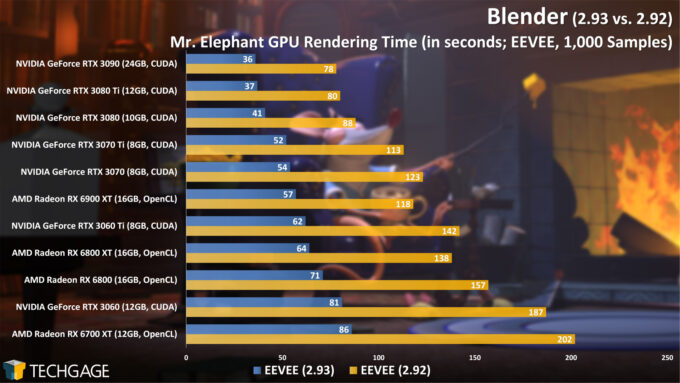

The EEVEE render engine doesn’t yet take advantage of OptiX accelerated ray tracing, so it gives us another apples-to-apples look at AMD vs. NVIDIA:

We mentioned before that EEVEE in 2.93 brings forth some huge performance improvements, and hopefully the graph above helps paint that picture well. Truly, the differences are staggering, and because of other EEVEE enhancements, your final render in 2.93 should look even better than in 2.92. It’s a win/win all-around.

On the competitive front, AMD is really struggling to best NVIDIA, with the top dog RX 6900 XT falling right behind NVIDIA’s RTX 3070.

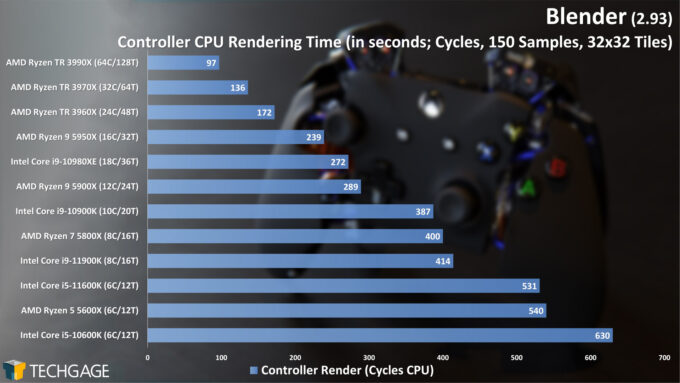

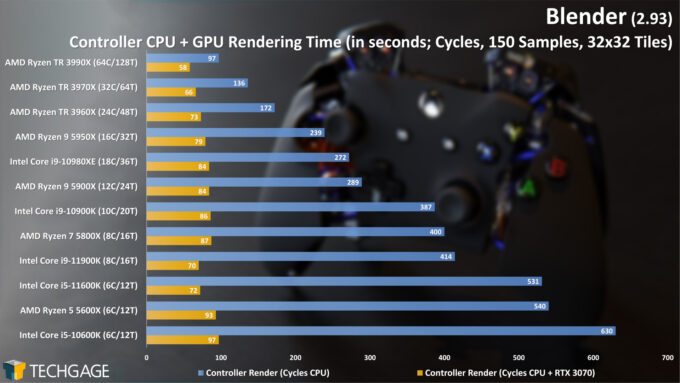

CPU Rendering

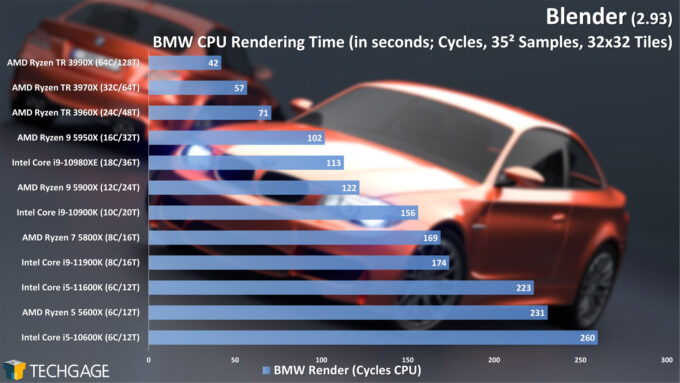

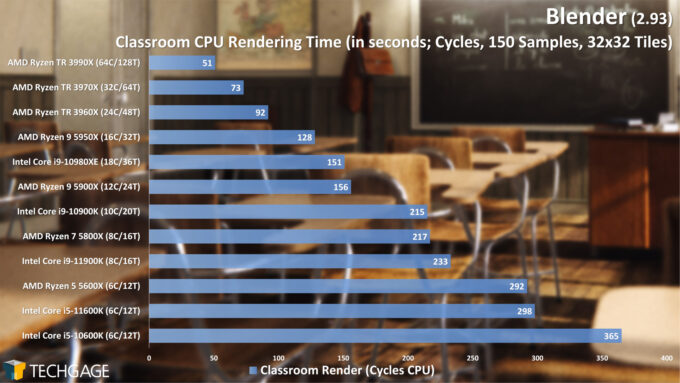

Generally speaking, we’d always recommend emphasizing your GPU over your CPU for rendering, as modern GPUs are simply hard to keep up with. Still, a fair benchmark like a Blender render helps highlight which CPUs offer the best bang-for-the-buck, at least on the multi-threaded front.

CPUs are a lot more tedious to benchmark than GPUs because of the different test PCs and amount of thermal paste used, so after we validated on two platforms that 2.93 CPU rendering performance remained identical to our 2.92 results, we decided to bring our 2.92 data forward and call it 2.93. We did, however, add Intel’s latest-gen i5-11600K and i9-11900K, as those were not available when we published our 2.92 deep-dive.

If you’ve been reading our Blender benchmarking articles for a while, you’ll likely already know what to expect from these results. Of most interest to us were the new 11th-gen Intel chips, as they are the company’s fastest ever, but they don’t render (pardon the pun) the previous generation unnecessary in all cases. The i9-11900K, for example, has two fewer cores than the i9-10900K, with the trade-off being that the latest-gen chip has much more aggressive clocks.

When comparing the i5-10600K to i5-11600K, we actually see significant gains, gen-over-gen, despite both sporting six cores. In two of these three tests, that 11600K inched ahead of AMD’s Ryzen 5 5600X.

The 10-core i9-10900K outperforms the eight-core i9-11900K, which isn’t much of a surprise. However, since GPUs should probably be your rendering priority, the newer-gen chip would be the better choice, as its single-threaded performance is improved. That means quicker application loads and interactions.

Ultimately, if you’re doing rendering with only the CPU, AMD’s top-end Ryzen chips are really hard to beat, especially from a cost perspective. How do things change when a CPU and GPU work together? We’ll explore that below.

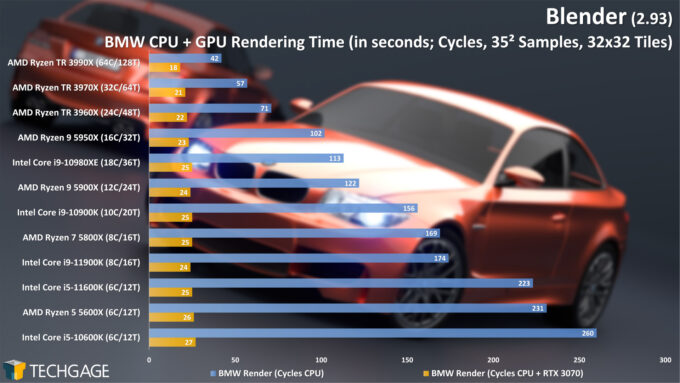

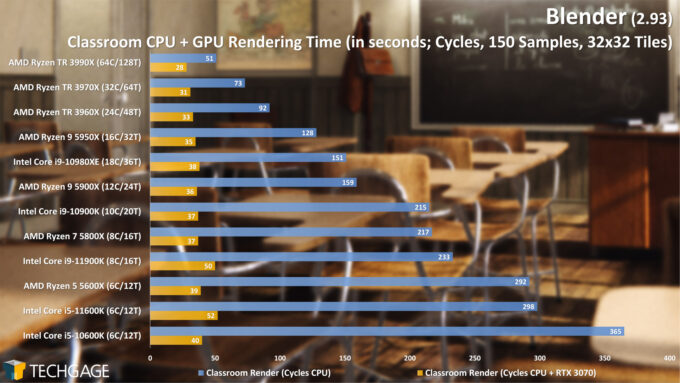

CPU + GPU Rendering

With these results, it becomes clear just how beneficial the CPU and GPU working in tandem can be for rendering, so it really becomes a no-brainer to go that route, unless you have an OptiX-capable GPU, that is. In almost all cases, the OptiX results outperform the CPU+GPU ones when using the RTX 3070. Some renderers, like V-Ray, tend to work better with CPU+GPU rendering than with OptiX, but in Blender, the GPUs really own the lion’s share of the performance.
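If you want to compare these modes on your own hardware, Blender's command line can select the Cycles device for a background render. The sketch below only builds the argument list and does not launch anything. It assumes the `--cycles-device` option described in the Blender manual (passed after `--`, with `+CPU` appended for hybrid rendering) is available in your build; the function name, the device list, and the `bmw27.blend` file name are ours, so verify them against your install before relying on this.

```python
from typing import List

# Device names as we understand them for the 2.9x series; treat as an assumption.
VALID_DEVICES = {"CPU", "CUDA", "OPTIX", "OPENCL"}


def cycles_render_command(
    blend_file: str,
    device: str,
    frame: int = 1,
    use_cpu_too: bool = False,
    blender_exe: str = "blender",
) -> List[str]:
    """Build the argument list for a background Cycles render on a given device."""
    device = device.upper()
    if device not in VALID_DEVICES:
        raise ValueError(f"Unknown Cycles device: {device}")
    if use_cpu_too and device != "CPU":
        device += "+CPU"  # hybrid CPU + GPU rendering
    return [blender_exe, "-b", blend_file, "-f", str(frame),
            "--", "--cycles-device", device]


# Hypothetical project file name; substitute your own.
print(" ".join(cycles_render_command("bmw27.blend", "optix")))
print(" ".join(cycles_render_command("bmw27.blend", "cuda", use_cpu_too=True)))
```

The resulting list can be handed to `subprocess.run` and timed, which makes it straightforward to script the same scene across CUDA, CUDA+CPU, and OptiX.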

This concludes our look at rendering performance in Blender 2.93, but as you know, rendering isn’t the only performance measure that matters in 3D design work. On the following page, we’re going to explore viewport performance in three modes, and three resolutions.
