Exploring Chaos V-Ray 6 GPU Rendering Performance

Chaos has just released version 6 of its popular photorealistic V-Ray render engine, so we of course couldn’t wait long before giving it a good test. Equipped with NVIDIA’s current-gen Amperes, along with a top-end Turing and Pascal-based GPU, we’re analyzing rendering performance across five different scenes.

Chaos recently unveiled the latest major iteration of its popular photorealistic render engine, V-Ray. Version 6.0 brings a plethora of new features, including Chaos Scatter (for filling a scene with many objects to create a realistic environment, e.g., a forest); procedural clouds; VRayEnmesh, a tool that tiles patterns to create high-detail geometry on complex surfaces; and, notably, Chaos Cloud Collaboration, for easy sharing of renders to exchange comments and markup with team members.

All of that is just scratching the surface. Also new is an improved VFB, which includes a 360° panorama viewer and a flip composition button; improved dome lights, subsurface scattering, and reflective materials; an improved UI; and improvements to V-Ray GPU that include a faster Light Cache and bring more feature parity with V-Ray CPU. Chaos maintains a full list of GPU-supported features in its documentation.

If you want a quick video highlighting many of these features using the stunning “Explore the Unexplored” scene below, check out the official video.

As usual, we couldn’t let a new major version of V-Ray drop without taking a detailed look at its performance. So, in this article, we’ll be doing some light V-Ray 5 vs. 6 performance testing, as well as apples-to-apples GPU testing using five scenes. Most of NVIDIA’s current-gen stack has been tested; for good measure, the last-gen TITAN RTX and last-last-gen GeForce GTX 1080 Ti are also included.

While our performance look revolves entirely around straightforward rendering, it’s important to note that Chaos has made tremendous gains with interactive rendering in V-Ray 6. The company notes that the latest version is effectively twice as fast in IPR, offering obvious benefits. Even better? You could have a second GPU in the system dedicated to denoising. That means while the first GPU renders, the second applies the denoise, resulting in even quicker results – a really cool feature.

NVIDIA GPU Lineup & Our Test Methodologies

To take full advantage of the GPU capabilities in Chaos’ V-Ray, an NVIDIA graphics card is required. We’re not sure if AMD Radeon support is planned for the future, but if you do have a Radeon and plan to render primarily with a monstrous CPU instead, you can still use the Radeon for denoising and lens effects. Suffice it to say, you should just go the NVIDIA route to get the best possible experience.

To that end, Chaos (and we, for that matter) suggest opting for an NVIDIA GPU of at least the Turing generation. That’s the architecture that introduced ray tracing and deep-learning cores to NVIDIA GPUs, and as we’ll be reminded of in the results below, they make a tremendous impact. Another thing we’ll see: despite Turing being so much faster than previous-gen architectures for rendering, the latest Ampere architecture propels performance even further ahead.

Here’s a quick look at NVIDIA’s current-gen lineup:

| NVIDIA’s GeForce Gaming & Creator GPU Lineup | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
|---|---|---|---|---|---|---|---|
| RTX 3090 Ti | 10,752 | 1,860 | 40 TFLOPS | 24GB GDDR6X | 1008 GB/s | 450W | $1,999 |
| RTX 3090 | 10,496 | 1,700 | 35.6 TFLOPS | 24GB GDDR6X | 936 GB/s | 350W | $1,499 |
| RTX 3080 Ti | 10,240 | 1,670 | 34.1 TFLOPS | 12GB GDDR6X | 912 GB/s | 350W | $1,199 |
| RTX 3080 | 8,704 | 1,710 | 29.7 TFLOPS | 10GB GDDR6X | 760 GB/s | 320W | $699 |
| RTX 3070 Ti | 6,144 | 1,770 | 21.7 TFLOPS | 8GB GDDR6X | 608 GB/s | 290W | $599 |
| RTX 3070 | 5,888 | 1,730 | 20.4 TFLOPS | 8GB GDDR6 | 448 GB/s | 220W | $499 |
| RTX 3060 Ti | 4,864 | 1,670 | 16.2 TFLOPS | 8GB GDDR6 | 448 GB/s | 200W | $399 |
| RTX 3060 | 3,584 | 1,780 | 12.7 TFLOPS | 12GB GDDR6 | 360 GB/s | 170W | $329 |
| RTX 3050 | 2,560 | 1,780 | 9.0 TFLOPS | 8GB GDDR6 | 224 GB/s | 130W | $249 |

Notes: RTX 3000 = Ampere
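As a rough sanity check on the table above, the Peak FP32 column can be approximated from the core count and boost clock, assuming each core retires one fused multiply-add (two floating-point operations) per clock. The sketch below is ours rather than anything from NVIDIA or Chaos; the function name and the 0.2 TFLOPS tolerance are illustrative choices.

```python
# (CUDA cores, boost clock in MHz, listed peak FP32 in TFLOPS),
# all copied from the lineup table in this article.
SPECS = {
    "RTX 3090 Ti": (10752, 1860, 40.0),
    "RTX 3090": (10496, 1700, 35.6),
    "RTX 3080 Ti": (10240, 1670, 34.1),
    "RTX 3080": (8704, 1710, 29.7),
    "RTX 3070 Ti": (6144, 1770, 21.7),
    "RTX 3070": (5888, 1730, 20.4),
    "RTX 3060 Ti": (4864, 1670, 16.2),
    "RTX 3060": (3584, 1780, 12.7),
    "RTX 3050": (2560, 1780, 9.0),
}


def peak_fp32_tflops(cores, boost_mhz):
    """Estimate peak FP32 throughput, assuming 2 FLOPs per core per clock."""
    flops = 2 * cores * boost_mhz * 1e6  # MHz -> Hz
    return flops / 1e12  # FLOPS -> TFLOPS


if __name__ == "__main__":
    for name, (cores, mhz, listed) in SPECS.items():
        estimate = peak_fp32_tflops(cores, mhz)
        print(f"{name:12s} listed {listed:5.1f}  estimated {estimate:5.2f}")
```

The estimates land within a couple of tenths of a TFLOPS of the listed figures; the small gaps are down to rounding in the listed boost clocks and TFLOPS values.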

We’re testing all of these listed GPUs in this article, aside from the new GeForce RTX 3090 Ti. As far as gaming vs. workstation GPUs go, V-Ray is completely neutral in how it goes about rendering, so if you have two GPUs of different series that are spec’d similarly, you’ll likewise see similar performance between them (e.g., an A6000 will perform about the same as a 3090 Ti).

For many users, we consider the GeForce RTX 3070 to offer the best bang-for-the-buck, as it’s effectively half of the RTX 3090 Ti, but costs just $500. Fortunately, if you’re on a tight budget, the lower-end options still boast at least 8GB of memory, so you won’t be truly stuck between a rock and a hard place with one. If you’re a professional working with V-Ray day in and day out, you should try for an even higher-end option, because at the end of the day, faster renders mean faster job completions.
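To put rough numbers on the "half of the RTX 3090 Ti" comparison, here is a minimal sketch that expresses each card's listed peak FP32 and SRP as a fraction of a reference card. The figures come straight from the lineup table; the `relative_to` helper is a hypothetical name of our own, and theoretical FP32 is only a paper proxy for actual render performance.

```python
# Peak FP32 (TFLOPS) and SRP (USD), copied from the lineup table in this article.
LINEUP = {
    "RTX 3090 Ti": (40.0, 1999),
    "RTX 3090": (35.6, 1499),
    "RTX 3080 Ti": (34.1, 1199),
    "RTX 3080": (29.7, 699),
    "RTX 3070 Ti": (21.7, 599),
    "RTX 3070": (20.4, 499),
    "RTX 3060 Ti": (16.2, 399),
    "RTX 3060": (12.7, 329),
    "RTX 3050": (9.0, 249),
}


def relative_to(reference, lineup=LINEUP):
    """Return {gpu: (tflops_ratio, price_ratio)} measured against a reference GPU."""
    ref_tflops, ref_price = lineup[reference]
    return {
        name: (tflops / ref_tflops, price / ref_price)
        for name, (tflops, price) in lineup.items()
    }


if __name__ == "__main__":
    for name, (perf, cost) in relative_to("RTX 3090 Ti").items():
        print(f"{name:12s} {perf:6.0%} of the FP32 throughput at {cost:6.0%} of the price")
```

For the RTX 3070, this works out to roughly half the paper throughput of the RTX 3090 Ti at about a quarter of its SRP. The measured render times remain the real test.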

It used to be that 8GB seemed like plenty of memory for a graphics card, but with creative workloads, it’s a bit of a different story. Ideally, it’d be great to see 16GB GPUs being the norm, but until that’s the case, you either have to choose to go high-end to be able to take advantage of a better framebuffer, or keep the budget modest and always try to get along with 8GB. The next-gen NVIDIA series is rumored to offer more VRAM at each given price-point, so we hope that ends up proving true.

Before tackling performance, here’s a rundown of our test PC, along with some basic methodologies:

| Techgage Workstation Test System | |
|---|---|
| Processor | AMD Ryzen Threadripper 3990X (64-core; 2.9GHz) |
| Motherboard | MSI Creator TRX40 (EFI: 1.70 05/17/2022) |
| Memory | Corsair VENGEANCE (CMT64GX4M4Z3600C16) 16GB x4; operates at DDR4-3200 16-18-18 (1.35V) |
| NVIDIA Graphics | NVIDIA GeForce RTX 3090 (24GB); NVIDIA GeForce RTX 3080 Ti (12GB); NVIDIA GeForce RTX 3080 (10GB); NVIDIA GeForce RTX 3070 Ti (8GB); NVIDIA GeForce RTX 3070 (8GB); NVIDIA GeForce RTX 3060 Ti (8GB); NVIDIA GeForce RTX 3060 (12GB); NVIDIA GeForce RTX 3050 (8GB); NVIDIA TITAN RTX (24GB); NVIDIA GeForce GTX 1080 Ti (11GB); all tested with GeForce driver 516.93 |
| Audio | Onboard |
| Storage | Samsung 500GB SSD (SATA) |
| Power Supply | Cooler Master Silent Pro Hybrid (1300W) |
| Chassis | NZXT H710i Full-Tower |
| Cooling | NZXT Kraken X63 280mm AIO |
| Et cetera | Windows 11 Pro build 22000 (21H2); AMD chipset driver 4.06.10.651 |


All of the benchmarking conducted for this article was completed using updated software, including the graphics and chipset driver, and V-Ray itself. A point release (6.00.06) of V-Ray became available after our testing was completed, but a sanity check with that version showed no difference in our results.

Other general testing guidelines we follow:

- Disruptive services are disabled; eg: Search, Cortana, User Account Control, Defender, etc.

- Overlays and / or other extras are not installed with the graphics driver.

- Vsync is disabled at the driver level.

- OSes are never transplanted from one machine to another.

- We validate system configurations before kicking off any test run.

- Testing doesn’t begin until the PC is idle (keeps a steady minimum wattage).

- All tests are repeated until there is a high degree of confidence in the results.

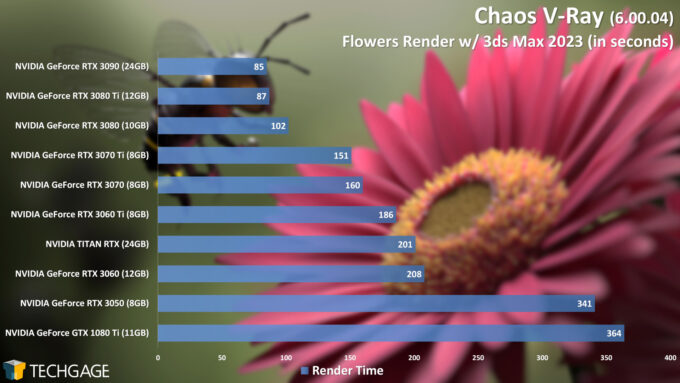

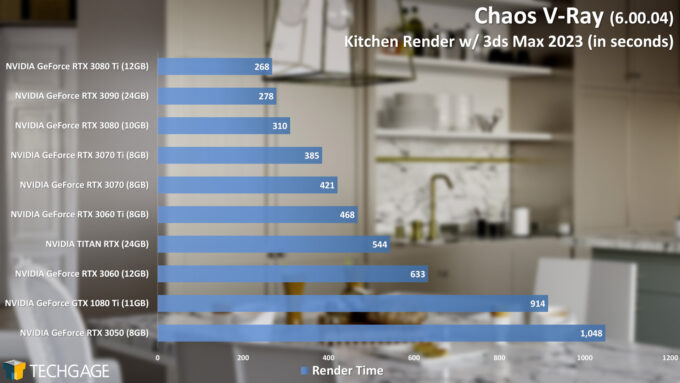

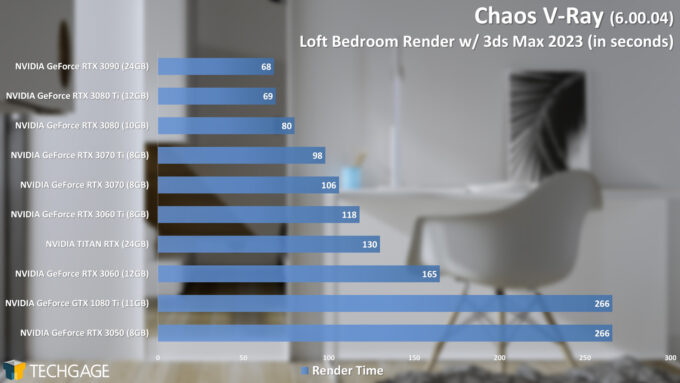

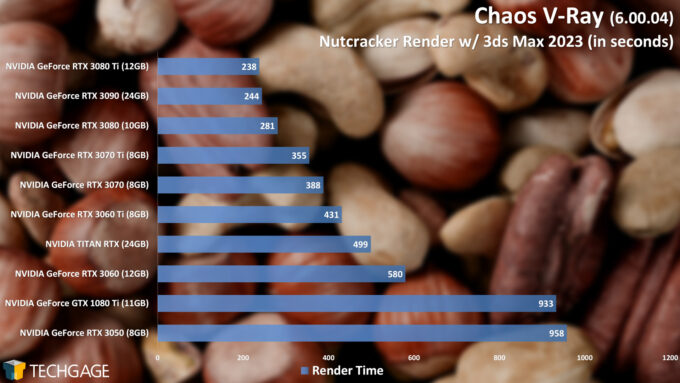

In the past, most of our V-Ray testing revolved around a single project, Flowers, but for this go-around, Chaos has kindly hooked us up with four additional projects that are tuned appropriately for V-Ray GPU. As we’ll see, they all help paint a clear performance picture across the stack. We truly appreciate the company’s support in this manner, as it helps us produce more meaningful results.

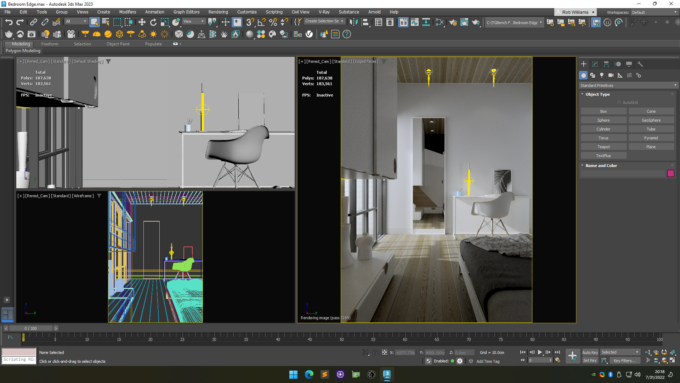

Examples of the final renders of each project can be seen below:

Alright… it’s time to dive into some performance numbers:

Chaos V-Ray 6 GPU Rendering Performance

When a new major version of a render engine drops, who can resist the opportunity to compare the performance of the new against the old? Not us; that’s for sure. Before we ran all of our tested GPUs through the V-Ray 6 wringer, we used NVIDIA’s GeForce RTX 3090 to compare all five of our tested projects in a before / after scenario. Here are the results:

| Project | V-Ray 5 | V-Ray 6 |
|---|---|---|
| Flowers | **81** | 85 |
| Kitchen | 313 | **279** |
| Loft Bedroom | 182 | **68** |
| Nutcracker | **232** | 244 |
| Winter Apartment | **619** | 624 |

Notes: Render test results in seconds. Best result of each set in bold. Tested GPU: NVIDIA GeForce RTX 3090

These results highlight that in some cases, the latest version of V-Ray may not necessarily render your scene faster than the previous version could. However, in all cases where we saw V-Ray 6 perform slower than its predecessor, the extra wait was rewarded with improved shadows and reflections.

How a scene benefits from performance improvements within V-Ray 6 will depend on its design, and whether or not it involves functionality that would be impacted by said optimizations. While a project like Kitchen saw a decent drop in render time between V-Ray 5 and 6, Loft Bedroom blows our socks off with a mammoth improvement – from 182 to 68 seconds. And, like those projects that took a bit longer to render in V-Ray 6, the end result was still a bit better.
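For readers who prefer percentages to raw seconds, the before / after comparison can be worked out with a few lines of Python. This is a minimal sketch using the RTX 3090 render times from the table above; the helper name is our own, and a negative value means V-Ray 6 finished sooner.

```python
# Render times in seconds on the RTX 3090: (V-Ray 5, V-Ray 6),
# copied from the comparison table in this article.
RENDER_SECONDS = {
    "Flowers": (81, 85),
    "Kitchen": (313, 279),
    "Loft Bedroom": (182, 68),
    "Nutcracker": (232, 244),
    "Winter Apartment": (619, 624),
}


def percent_change(old_seconds, new_seconds):
    """Change in render time from old to new, as a percentage of the old time."""
    return (new_seconds - old_seconds) / old_seconds * 100.0


if __name__ == "__main__":
    for project, (v5, v6) in RENDER_SECONDS.items():
        change = percent_change(v5, v6)
        verdict = "faster" if change < 0 else "slower"
        print(f"{project:17s} {v5:4d}s -> {v6:4d}s  ({abs(change):.1f}% {verdict})")
```

By this measure, Kitchen renders about 11% faster and Loft Bedroom about 63% faster in V-Ray 6, while the three projects that slowed down did so by roughly 1% to 5%.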

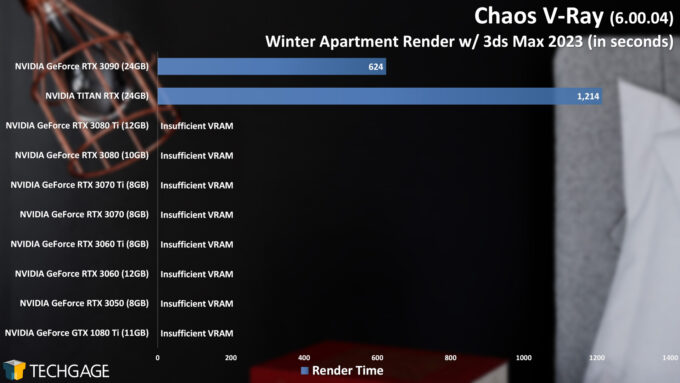

When we got started on testing this collection of V-Ray scenes, we originally intended to only include NVIDIA’s latest-gen offerings, to keep things simple. Well, as it happens, one of the projects, Winter Apartment, is so complex, that only the RTX 3090 managed to get through. We were then compelled to add a second 24GB GPU in the form of TITAN RTX, and then figured it wouldn’t be a bad idea to include the aging GTX 1080 Ti to enhance the generational view a bit.

Here are those apples-to-apples results:

It seems that we can’t get through an entire fresh set of testing without running into a couple of odd results, and this time is no exception. In both the Kitchen and Nutcracker projects, the RTX 3080 Ti managed to edge ahead of the RTX 3090, despite the opposite being expected. We retested both projects with both GPUs after the initial testing was completed, and that scaling stuck.

Ultimately, the RTX 3090 isn’t that much faster than RTX 3080 Ti, but where it of course shines is with a framebuffer that’s twice as large. That framebuffer helped us render the Winter Apartment project without issue. That’s the reason the last-gen TITAN RTX could clear it, as well, but as we can see, the generational improvements between Turing and Ampere are enormous. The TITAN RTX felt like an absolute screamer when it was released, but the very next-gen top-end option halves the resulting render time.

The RTX 3070 continues to prove our belief that it’s the best bang-for-the-buck of the Ampere GeForce lineup. The RTX 3070 Ti does improve performance a bit further, but with its same 8GB framebuffer, anyone looking to score a better option may want to consider RTX 3080 instead, to gain the additional 2GB – or even 3080 Ti, to increase the VRAM by 50%. As we see from a project like Winter Apartment, anyone developing complex projects will want to consider GPUs with generous frame buffers (for reference, Winter Apartment has nearly 500 4K textures).

After looking over these performance graphs, we’re glad that we ended up including the GeForce GTX 1080 Ti, as it does paint an interesting picture of how far we’ve come. There’s a clear reason that Chaos recommends having at least a Turing-based card, but it’s even clearer that you should probably try to score an Ampere if you can.

Between TITAN RTX and RTX 3090, the differences are huge. As for the older Pascal, we’re seeing the top-end option of two generations ago sidling up against the lowest-end option in NVIDIA’s current lineup. RTX 3050 vs. GTX 1080 Ti flip-flops throughout, whereas the $329 RTX 3060 wipes the floor with it. Heck – not even the RTX 3060 falls too far behind last-gen’s TITAN RTX.

Final Thoughts

With all of the performance graphs above, you’ll hopefully have a far better idea of which GPU to seek out for your next V-Ray-focused workstation upgrade. As always, if you’re still not completely sure what to target for your own needs, please feel free to comment, and we’ll try to help you out. And, if you want to help us out, clicking any one of our Amazon links and purchasing something as normal will do just that (it doesn’t have to be a GPU!).

While this article focused entirely on GPU rendering performance, V-Ray is also excellent at rendering on the CPU. That said, GPUs are so enormously powerful nowadays that you’d need a truly beefy CPU for it to feel like it’s even needed in the equation (it will of course be needed if V-Ray GPU doesn’t yet support a CPU-bound feature that you use). In some cases, CPU + GPU rendering will improve render times further, so that’s something to try out once you are set up with your new configuration. We’ll tackle CPU-bound V-Ray rendering in a future workstation performance roundup.

It’s worth highlighting again the enhancements Chaos has made to V-Ray’s interactive rendering. Simply from upgrading to V-Ray 6, IPR performance will be greatly enhanced, but if you have a second GPU installed that’s set to focus on denoising, the resulting image following a pan will resolve even quicker. When you’re not using interactive rendering, you can quickly change the configuration to use both GPUs for final rendering instead.

What should prove even more interesting than CPU rendering performance in V-Ray 6 is how the next-gen NVIDIA GPUs could improve rendering times once again. At this point, we’re not even sure of the architecture name of the next-gen GeForces (Ada or Lovelace seem to be the frontrunners), and we can’t imagine that it will deliver the same level of rendering boost as Ampere did over Turing, but we hope to be proven wrong.

We likewise hope that AMD’s next-gen offerings improve rendering performance to the point that companies like Chaos consider them for inclusion in their support lists, and with Intel’s discrete GPUs not too far off, who knows what could happen there. Either way, the current lack of competition has thankfully not resulted in a dearth of exciting options to choose from.
