Gaming and Supercomputing Collide: NVIDIA Announces GeForce Titan

As proud as it is of its GK110 architecture, NVIDIA couldn’t let the folks at Oak Ridge National Laboratory have all the fun, so the company has worked its magic and is bringing it to the desktop. The result is a GPU that boasts a lot of transistors, is super power-efficient and crazy powerful. It looks good, too.

When NVIDIA made headlines this past fall for its part in powering the world’s fastest supercomputer, little did we realize that the same GPUs found there would be making their way to our own desktops. With Oak Ridge’s supercomputer boasting the name of “Titan”, it comes as little surprise that NVIDIA decided to adopt the same for its new flagship GPUs.

Enter GeForce Titan.

The cooler design first seen on the GTX 690 makes an appearance here, albeit with a number of tweaks. For starters, there’s just one GPU under the hood rather than the 690’s two, so the fan could be situated to the far right rather than the center. The PCB design doesn’t appear too dissimilar to what we’ve seen from NVIDIA’s high-end cards in the past. It’s staggering to realize, though, that Titan packs in 7.1 billion transistors, twice that of the GTX 680.

For the best possible cooling in this form-factor from an air cooler, NVIDIA has again adopted a vapor chamber design. This draws heat off of the GPU core using an evaporation process similar to that of heatpipes, but it’s more effective. With the help of provider Shin-Etsu, the thermal interface material (TIM) that NVIDIA uses in Titan is twice as effective as the one used in the GTX 680.

Titan’s goal is to deliver the best possible gaming performance in either a single or multi-GPU configuration. In single-GPU mode, the card has the potential to leave the 680 in the dust, and three Titans should do the same to a pair of GTX 690s running in quad-SLI. As has been evidenced many times before, most games have a difficult time scaling beyond three GPUs, and sometimes even two, so by offering more performance from three GPUs instead of four, a 3x Titan setup is going to look very attractive to those interested in building what could only be considered an “über” high-end setup. The potential is unbelievable.

Comparisons are difficult without a spec table, so *poof*, here’s one:

| | Cores | Core MHz | Memory | Mem MHz | Mem Bus | TDP |
| --- | --- | --- | --- | --- | --- | --- |
| GeForce GTX Titan | 2688 | 837 | 6144MB | 6008 | 384-bit | 250W |
| GeForce GTX 690 | 3072 | 915 | 2x 2048MB | 6008 | 256-bit | 300W |
| GeForce GTX 680 | 1536 | 1006 | 2048MB | 6008 | 256-bit | 195W |

Well, joke’s on me – even with a specs table, comparisons are difficult. As the table we’ll see in a moment states, Titan will retail for $999 like the GTX 690 (which NVIDIA is not discontinuing), but it features just a single GPU under the hood. It wouldn’t be right to consider Titan as being either a GTX 685 or GTX 780, because A) we’re not sure how the rest of the year will play out for NVIDIA and B) with the potential performance gains (based on the specs alone), it’d almost be a disservice to give Titan a mere model number boost. That might be why NVIDIA took the ultra-rare route of giving the card an actual name.

NVIDIA is restricting benchmark results from being released until Thursday, so until then, we’re left to speculate with the help of the above table. Where clock speeds are concerned, the GTX 690 actually appears to be faster than Titan, but Titan has one trick up its sleeve: a 384-bit memory bus. NVIDIA has said that it’s hit 1,100MHz on Titan in the labs, so for those worried about overclocking, it looks like you have no need to be.
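
To put a number on that wider bus, here is a rough Python sketch of the usual peak-bandwidth formula (effective memory clock times bus width in bytes). It assumes the "Mem MHz" column is the effective data rate and that the GTX 690's 256-bit figure applies to each of its two GPUs; neither assumption comes from NVIDIA, and the function name is ours.

```python
def memory_bandwidth_gbs(effective_mhz: float, bus_width_bits: int) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8  # bus width converted to bytes
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9


# (effective memory MHz, bus width in bits), taken from the spec table.
# Assumption: the GTX 690 entry describes one of its two GPUs.
CARDS = {
    "GeForce GTX Titan": (6008, 384),
    "GeForce GTX 690 (per GPU)": (6008, 256),
    "GeForce GTX 680": (6008, 256),
}

for name, (mhz, bus) in CARDS.items():
    print(f"{name}: {memory_bandwidth_gbs(mhz, bus):.1f} GB/s")
```

Under those assumptions Titan lands at about 288 GB/s against about 192 GB/s for a single GK104, a 50% jump that comes entirely from the bus width since the memory clock is unchanged.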

As its name suggests, Titan isn’t going to be good just for gaming, but should excel for computational work as well. The next table helps us understand that:

| | Codename | SMX Modules | TFLOPS | Transistors | Pricing |
| --- | --- | --- | --- | --- | --- |
| GeForce GTX Titan | GK110 | 14 | 4.5 | 7.1b | $999 |
| GeForce GTX 690 | GK104 | 8 x 2 | 2.81 x 2 | 3.54b x 2 | $999 |
| GeForce GTX 680 | GK104 | 8 | 3.09 | 3.54b | $499 |

There are two bits of information here that I consider to be pretty mind-blowing. Versus the GTX 680, NVIDIA has effectively doubled the number of transistors in a single GPU. For comparison, Intel’s beefiest CPU, the Core i7-3960X, boasts a mere 2.27 billion. There’s also the fact that from a single GPU, NVIDIA has increased the computational power by nearly 50% (4.5 vs. 3.09 TFLOPS). The ultimate F@h card? It sure seems so.
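
The TFLOPS column can be reproduced from the first table. The sketch below assumes the common rule of thumb that each core retires one fused multiply-add (two floating-point operations) per clock at the listed core clock; that is our assumption for illustration, not something NVIDIA spells out here, and the function name is ours.

```python
def peak_tflops(cores: int, core_mhz: float, flops_per_core_per_clock: int = 2) -> float:
    """Peak single-precision TFLOPS, assuming one FMA (2 FLOPs) per core per clock."""
    return cores * core_mhz * 1e6 * flops_per_core_per_clock / 1e12


titan = peak_tflops(2688, 837)
gtx_680 = peak_tflops(1536, 1006)
gtx_690_per_gpu = peak_tflops(3072 // 2, 915)  # 3072 cores split across two GPUs

print(f"Titan:             {titan:.2f} TFLOPS")
print(f"GTX 680:           {gtx_680:.2f} TFLOPS")
print(f"GTX 690 (per GPU): {gtx_690_per_gpu:.2f} TFLOPS")
print(f"Titan vs. GTX 680: +{(titan / gtx_680 - 1) * 100:.0f}%")
```

With that assumption the results round to the 4.5, 3.09 and 2.81 figures in the table, and Titan's single-GPU lead over the GTX 680 works out to roughly 46%.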

Despite a 75% increase in the number of cores over the GTX 680, and what’s sure to be a major boost in performance, Titan sees only a modest TDP bump from 195W to 250W, which is still 50W less than the dual-GPU GTX 690.
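
For those who like to check the math, here is a small sketch that works out the percentage changes and a paper-only efficiency figure from the two tables. The GFLOPS-per-watt metric is our own back-of-the-envelope yardstick (table TFLOPS divided by TDP), not a number NVIDIA quotes, and TDP is only a stand-in for real power draw.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


def gflops_per_watt(tflops: float, tdp_watts: float) -> float:
    """Paper efficiency: peak GFLOPS divided by TDP (an illustrative metric)."""
    return tflops * 1000.0 / tdp_watts


# Values copied from the spec tables; the GTX 690 combines both of its GPUs.
SPECS = {
    "GeForce GTX Titan": {"cores": 2688, "tflops": 4.5, "tdp_w": 250},
    "GeForce GTX 690": {"cores": 3072, "tflops": 2.81 * 2, "tdp_w": 300},
    "GeForce GTX 680": {"cores": 1536, "tflops": 3.09, "tdp_w": 195},
}

titan, gtx_680 = SPECS["GeForce GTX Titan"], SPECS["GeForce GTX 680"]
print(f"Cores: +{percent_change(gtx_680['cores'], titan['cores']):.0f}%")
print(f"TDP:   +{percent_change(gtx_680['tdp_w'], titan['tdp_w']):.0f}%")

for name, spec in SPECS.items():
    print(f"{name}: {gflops_per_watt(spec['tflops'], spec['tdp_w']):.1f} GFLOPS/W")
```

The takeaway on paper: a 75% larger core count arrives with a TDP that grows by only about 28%.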

Think about this for a moment. Here we have a 250W GPU that’s about as powerful (on paper) as a dual-GPU solution. Remember that monster GTX 480 NVIDIA released almost three years ago? Also 250W. We’ve come a long way performance-wise since then, but I still doubt many people expected Titan to sit at 250W given what it offers on paper.

Until the benchmarks trickle out, it’s impossible to compare Titan to the GTX 690. But we can speculate based on the specs we do know.

We’d expect 2x GTX 690 and 2x Titan to fare just about the same overall in real-world gaming. As mentioned earlier, it’s rare when a game can take advantage of a fourth GPU, and sometimes even a third one, so despite coming out the winner in maximum throughput, the GTX 690 might not come out the definitive winner outside of benchmarks. That said, Titan has a couple of major advantages: a 6GB framebuffer and a 384-bit memory bus.

Those perks make Titan the no-brainer solution for those who are using (or want to use) a 3×1 monitor setup with a resolution of at least 5760×1080. According to NVIDIA, 3x Titan is the only way to enjoy Crysis 3 at that resolution using the game’s highest detail settings. We’re talking $3,000 worth of GPUs. “Can it run Crysis?” is about to become relevant again.

NVIDIA admits that Titan is overkill in many ways thanks to that framebuffer, but the company went with that value for the sake of future-proofing. If someone is to shell out a couple of grand for GPUs, they’d sure as heck hope that it’d last them more than just two years. If NVIDIA had stuck to 3GB, these monster cards could have suffered a bottleneck with games coming out over the next couple of years.

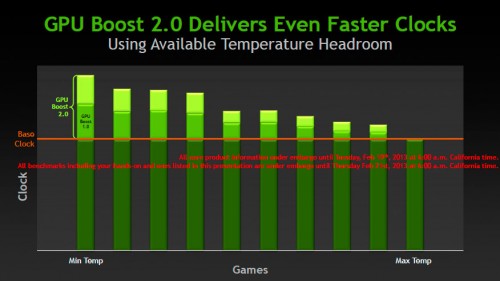

With Titan (and presumably future family members) comes GPU Boost 2.0, an evolution of the GPU core clock boost first introduced with the Kepler architecture. With GPUB 1.0, the GPU decided on its clock boost based on power draw alone, but in further testing, NVIDIA found that basing it on temperature was more effective. An added benefit is that because temperature is valued more than power, it could help improve the life expectancy of the card (it’s not only voltage that kills silicon, but temperatures, too).

Also arriving with GPUB 2.0 is “OverVoltage”, an ability NVIDIA is giving to customers who really know what they are doing and are willing to take on more risk with their overclocking. In effect, it lets you push past the typical voltage spec, beyond anything NVIDIA would ever recommend, but the company recognizes that serious overclockers want the option, so it’s there anyway. As you’d expect, trying to use this feature will yield a warning message, and for the sake of not allowing anyone to deliberately or accidentally blow their card up, there is still a maximum.

From the “I had no idea that was even possible” file comes a GPUB 2.0 feature called “Display Overclocking”. It turns out that, like our CPUs, GPUs and memory, displays can have their refresh rate pushed beyond the rated spec, and as you’d expect from overclocking, your mileage will vary.

In the example above, we have a 60Hz monitor that can actually operate at 80Hz just fine. The reason this is possible is that it’s not the display that’s driving the signal, but rather the GPU. What Titan can do is increase the refresh rate, and if it succeeds, you can stick with it. If it fails, you’ll simply see a black screen for a couple of seconds until it resets. Warranties may come into play here, but given the display’s components shouldn’t run any hotter just because of the increased signal, it seems like a safe type of overclock to us. Even so – exercise caution.
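
The try-it-and-see process described above boils down to a simple search. The Python sketch below is purely illustrative: `find_stable_refresh`, its parameters and the simulated panel are hypothetical names of ours, not an NVIDIA API, and a real attempt would go through the driver's own tools.

```python
from typing import Callable


def find_stable_refresh(
    try_rate: Callable[[int], bool],
    rated_hz: int = 60,
    step_hz: int = 5,
    ceiling_hz: int = 120,
) -> int:
    """Step the refresh rate up until a try fails; return the last rate that worked.

    try_rate is a hypothetical callback that returns True when the display
    accepts the rate and False when it blanks out and resets.
    """
    best = rated_hz
    candidate = rated_hz + step_hz
    while candidate <= ceiling_hz and try_rate(candidate):
        best = candidate
        candidate += step_hz
    return best


def simulated_panel(hz: int) -> bool:
    """Stand-in for a 60Hz monitor that happens to tolerate up to 80Hz."""
    return hz <= 80


print(find_stable_refresh(simulated_panel))  # prints 80
```

Stepping up in small increments and keeping the last good value mirrors the "if it fails, it resets" behavior: a failed attempt costs a brief black screen and nothing more.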

In terms of both its architecture and what it brings to the table, Titan looks to be one of the most impressive graphics card launches we’ve ever witnessed. It’s in all regards an absolute beast, and a far more appropriate follow-up to the first Kepler cards than we would have imagined. GG, NVIDIA.
