Gigabyte GeForce GTX 260 Super Overclock

NVIDIA’s GeForce GTX 260 is not a new card. In fact, it’s been available for over a year in its 216 Core form. So is it even worth a look at today? Where Gigabyte’s “Super Overclock” version is concerned, yes. Although it costs less than a stock GTX 275, this new card beat it out in almost every single game and setting we put it through.

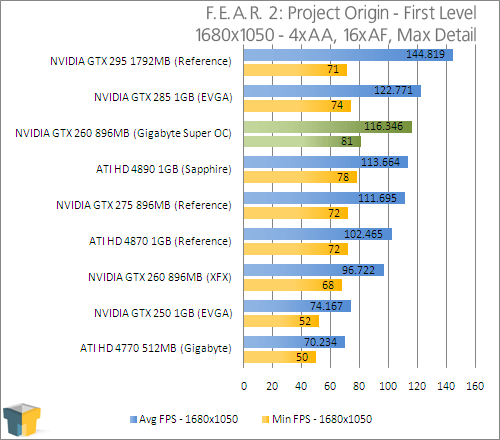

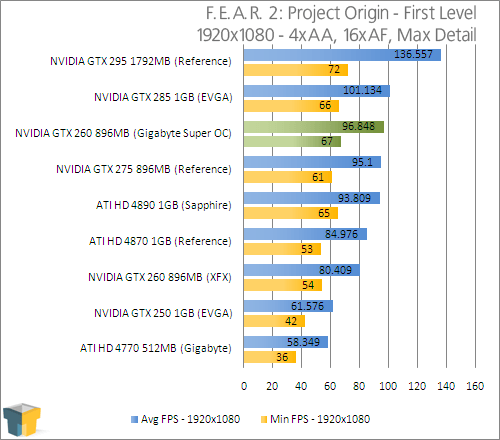

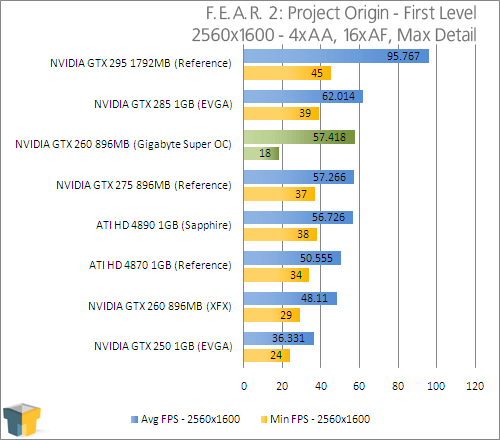

Page 6 – F.E.A.R. 2: Project Origin

Five out of the seven current games we use for testing are either sequels, or titles in an established series. F.E.A.R. 2 is one of the former, following up on the very popular First Encounter Assault Recon, released in fall of 2005. This horror-based first-person shooter brought to the table fantastic graphics, ultra-smooth gameplay, the ability to blow massive chunks out of anything, and also a very fun multi-player mode.

Three-and-a-half years later, we saw the introduction of the game’s sequel, Project Origin. As we had hoped, this title improved on the original where gameplay and graphics were concerned, and including it in our testing was a no-brainer. The game is gorgeous, and there’s much destruction to be had (who doesn’t love blowing expensive vases to pieces?). The game is also rather heavily scripted, which aids in producing repeatable results in our benchmarking.

Manual Run-through: The level used for our testing here is the first in the game, about ten minutes in. The scene begins with a ride up an elevator, with a robust city landscape behind us. Our run-through begins with a quick look at this cityscape, and then we proceed through the level until we reach the far door, as seen in the above screenshot.

Gigabyte’s Super Overclock has consistently remained ahead of the GTX 275 in each game and resolution so far, but a noticeable trend is that the overall gain shrinks as the resolution increases. Still, Gigabyte’s card costs less than a stock-clocked GTX 275. Faster performance for less money? No reasonable person would complain about that.

| Graphics Card | Best Playable | Min FPS | Avg. FPS |
| --- | --- | --- | --- |
| NVIDIA GTX 295 1792MB (Reference) | 2560×1600 – Max Detail, 4xAA, 16xAF | 45 | 95.767 |
| NVIDIA GTX 285 1GB (EVGA) | 2560×1600 – Max Detail, 4xAA, 16xAF | 39 | 62.014 |
| NVIDIA GTX 260 896MB (GBT SOC) | 2560×1600 – Max Detail, 4xAA, 16xAF | 18 | 57.418 |
| NVIDIA GTX 275 896MB (Reference) | 2560×1600 – Max Detail, 4xAA, 16xAF | 37 | 57.266 |
| ATI HD 4890 1GB (Sapphire) | 2560×1600 – Max Detail, 4xAA, 16xAF | 38 | 56.726 |
| ATI HD 4870 1GB (Reference) | 2560×1600 – Max Detail, 4xAA, 16xAF | 34 | 50.555 |
| NVIDIA GTX 260 896MB (XFX) | 2560×1600 – Max Detail, 4xAA, 16xAF | 29 | 48.110 |
| NVIDIA GTX 250 1GB (EVGA) | 2560×1600 – Max Detail, 4xAA, 16xAF | 24 | 36.331 |
| ATI HD 4770 512MB (Gigabyte) | 2560×1600 – Normal Detail, 0xAA, 4xAF | 30 | 43.215 |

Once again, the best playable setting is the same one we used for our 2560×1600 test above, at 4xAA and 16xAF. An oddity is that Gigabyte’s card fell behind in minimum FPS, which was caused by a longer pause during an automatic save. There was no such issue in our previous GPU runs, so I’m unsure whether it was tied to the card or the game. Either way, it occurred only at this save point, and it isn’t really noticeable, since a lag spike is usually expected during an automatic save.
