Intel Arc Multi-GPU Rendering, Encoding & Math Performance

We recently established that Intel’s Arc graphics cards perform well in many creative tasks, but have you wondered how combining the forces of two Arc GPUs might improve things? We’re finding that out here, with the help of Blender, LuxMark, BRAW, and others.

It’s been a couple of weeks since Intel launched its long-awaited top-end Arc GPUs, the A770 and A750, both of which we put through our gauntlet of tests, including rendering, encoding, photogrammetry, AI, and math. That might sound exhaustive, but in truth, it feels like we’ve only scratched the surface of the performance angles worth tackling. This article covers one more of them.

While we covered encoding in our Arc launch articles, we still plan to tackle Hyper Encode soon – the mode that accelerates encoding by combining the forces of Arc discrete GPUs and Intel’s integrated graphics. We plan to dive into this advanced encoding testing after the 13th-gen launch, when we can stop stressing over upcoming embargoes and spend quality time evaluating things. Of course, we also intend to tackle gaming once our initial creator angles are covered. That will include a look at Linux gaming, as we’ve seen a number of requests for it, both sent directly to us and around the web.

In this article, we’re taking a look at a more unusual angle of Arc: multi-GPU. We’re not sure how much focus Intel has put on multi-GPU performance for Arc, but the results below will help paint a clear picture. In putting together this article, we also had a chance to double-check which of the creative applications we test with can actually use multiple GPUs. While it’s effectively a given that rendering workloads will support multi-GPU, they are not the only workloads that can benefit.

We tested every single one of the workloads from our Arc launch articles in a dual-GPU setup, and you may find yourself surprised by some of the results. Before getting to those results and some discussion on tests, here’s a quick look at our test PC’s configuration:

| Techgage Workstation Test System | |
|---|---|
| Processor | AMD Ryzen 9 5950X (16-core; 3.4GHz) |
| Motherboard | ASRock X570 TAICHI (EFI: P4.80 03/02/2022) |
| Memory | Corsair Vengeance RGB Pro (CMW32GX4M4C3200C16) 8GB x 4<br>Operates at DDR4-3200 16-18-18 (1.35V) |
| AMD Graphics | AMD Radeon RX 6900 XT (16GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6800 XT (16GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6800 (16GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6700 XT (12GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6600 XT (8GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6600 (8GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6500 XT (4GB; Adrenalin 22.9.1) |
| Intel Graphics | Intel Arc A770 (16GB; Arc 31.0.101.3435)<br>Intel Arc A750 (8GB; Arc 31.0.101.3435)<br>Intel Arc A380 (6GB; Arc 31.0.101.3430) |
| NVIDIA Graphics | NVIDIA GeForce RTX 4090 (24GB; GeForce 521.90)<br>NVIDIA GeForce RTX 3090 (24GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3080 Ti (12GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3080 (10GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3070 Ti (8GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3070 (8GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3060 Ti (8GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3060 (12GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3050 (8GB; GeForce 516.94) |
| Audio | Onboard |
| Storage | Samsung 500GB SSD (SATA) (x3) |
| Power Supply | Corsair RM850X |
| Chassis | Fractal Design Define C Mid-Tower |
| Cooling | Corsair Hydro H100i PRO RGB 240mm AIO |
| Et cetera | Windows 11 Pro build 22000 (21H2)<br>AMD chipset driver 4.08.09.2337 |
| All product links in this table are affiliated, and help support our work. | |

Which Applications Support Multi-GPU?

As mentioned above, rendering is one type of workload where you can safely expect that multiple CPUs or GPUs will be utilized to great effect. As these are straightforward compute workloads, there are no issues of weak (or worsened) performance like we’ve seen in games when utilizing multiple GPUs via AMD’s CrossFire or NVIDIA’s SLI. Adding a second GPU should always dramatically reduce render times.

Even so, the fact that multi-GPU should work doesn’t mean that Intel has added the proper polish to its driver alongside the major launch of its first-gen Arcs. Thankfully, it has:

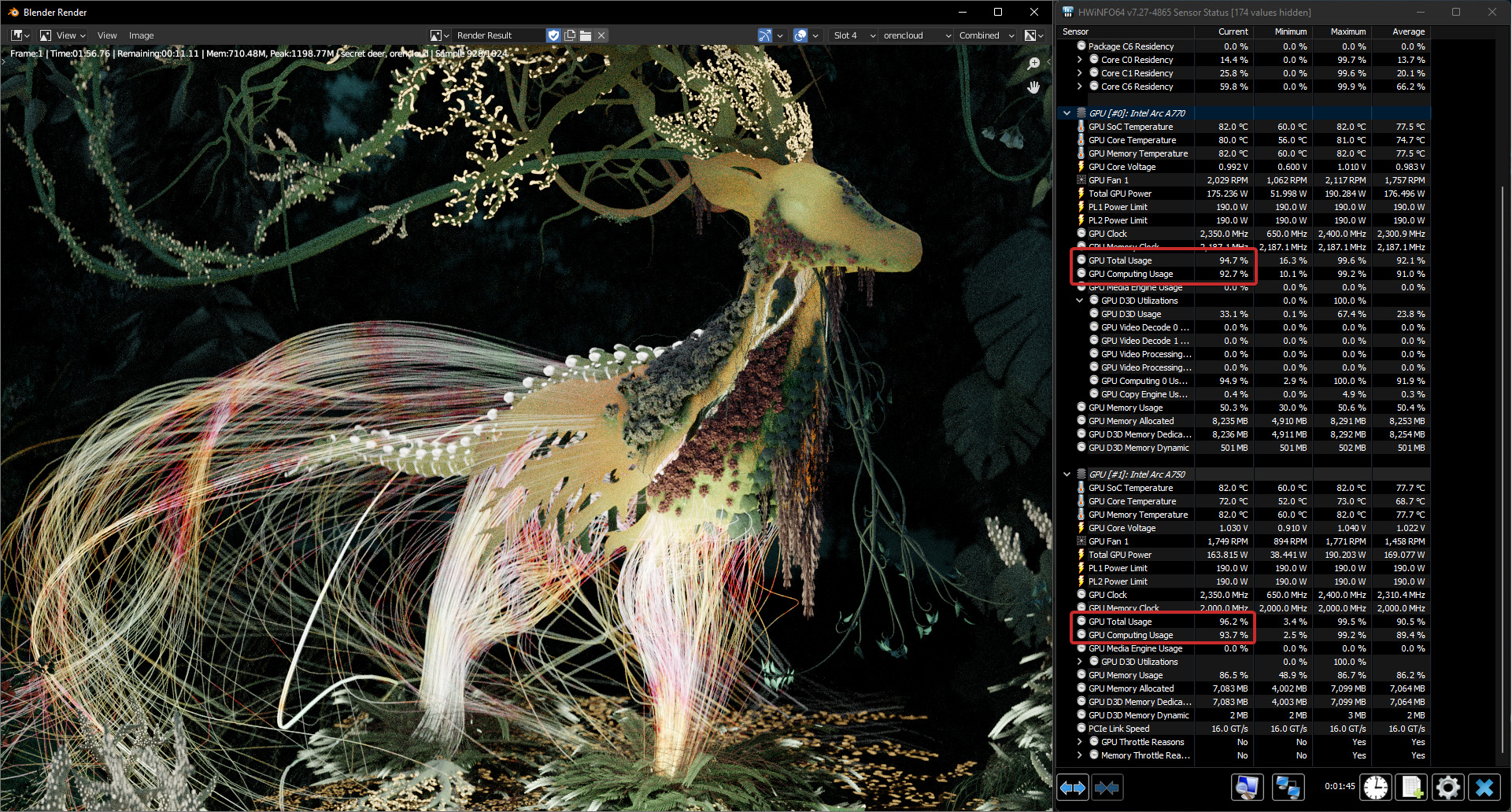

In the shot above, you can see the Secret Deer project being rendered in Blender, and via HWiNFO, we can see that both chips are being utilized well. As we’ll see in the results, LuxMark benefits just the same from multiple Arc GPUs. Encoding, on the other hand, is iffier:

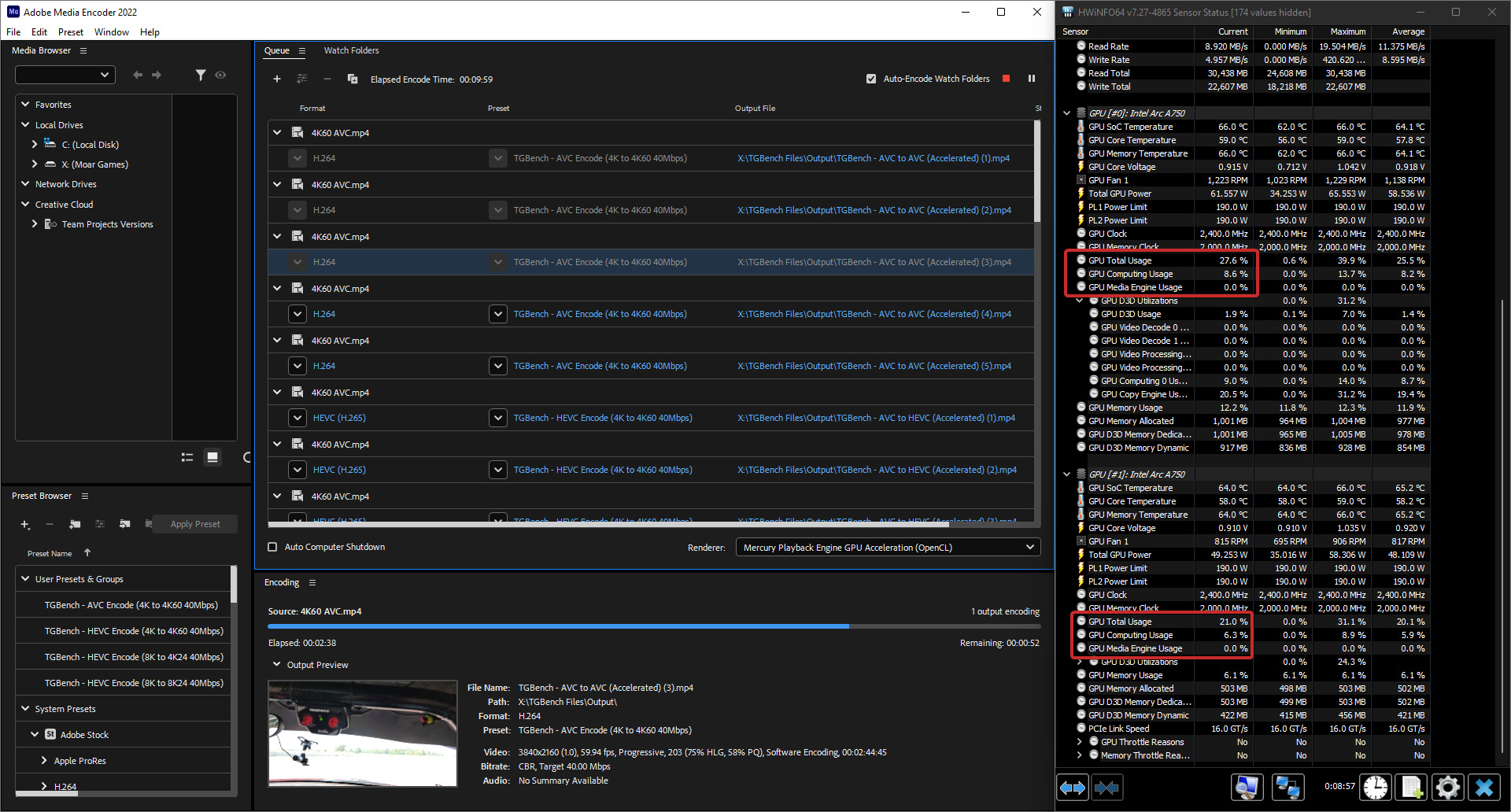

Having two discrete Arc GPUs installed in a machine seems to confuse Adobe’s Premiere Pro (and thus Media Encoder), as neither card’s video engine ends up being used. Instead, all work is relegated to the CPU and GPU cores. This means that transcodes are slower, and that advanced projects with effects added will outright fail. In this respect, dual Arc behaves similarly to AMD’s encoderless Radeons (e.g. RX 6400 and RX 6500 XT).

We’re not sure whether Adobe ever plans to have Premiere Pro utilize multiple GPUs for encoding, but we hope a future update will at least ensure that one Arc GPU’s video engine is properly used when multiple are installed.

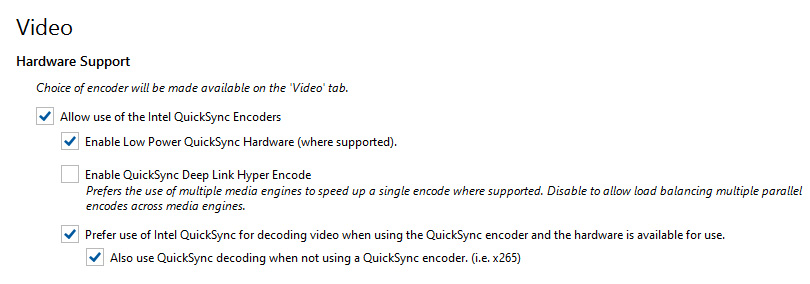

HandBrake doesn’t collapse when multiple Arc GPUs are present, but we encountered another anomaly. When a second Arc is added to the system, an option for “Enable QuickSync Deep Link Hyper Encode” appears. That gave us the impression that both GPUs would in fact be used, but that’s not the case. We believe the option appearing here is just a bug, as “Hyper Encode” refers explicitly to encoding with both an Arc discrete GPU and integrated graphics. If that kind of accelerated encoding is possible, though, we’d hope to see the option extended to multiple discrete cards in the future.

The odd behavior with HandBrake doesn’t end there, as we noticed that encoding performance actually dropped merely from having two Arc GPUs installed. With a single A750, we hit 148 FPS in our test HEVC encode. With two Arc GPUs present, that performance dropped to 128 FPS, despite the fact that only the first card’s video engine was being utilized.
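To put that drop in perspective, the relative change can be worked out from the two FPS figures quoted above. This is only a minimal sketch; the function and variable names are ours, chosen for illustration.

```python
def percent_change(before: float, after: float) -> float:
    """Return the relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# FPS figures quoted above: a single A750 vs. two Arc GPUs installed.
single_gpu_fps = 148.0
dual_gpu_fps = 128.0

change = percent_change(single_gpu_fps, dual_gpu_fps)
print(f"HEVC encode throughput change: {change:.1f}%")
```

That works out to a loss of roughly 13.5% in encode throughput from simply having the second card in the system.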

As for other types of encoding, we were hopeful that Adobe’s Lightroom Classic would support multiple GPUs, but that’s unfortunately not the case. In fact, depending on the GPU you have, you may be lucky if the software even decides to automatically engage it. To ensure you’re getting proper acceleration, you’ll need to head into the options and make sure it states “full” instead of “limited”. If it’s the latter, then selecting the Custom option and manually enabling acceleration should work. The reason some GPUs work out-of-the-box and others don’t boils down to an annoyance called “whitelists”.

Lastly, we gave dual Arc a test in Topaz’s Gigapixel AI, and while that software fully supports multiple GPUs, we saw the exact same performance with two GPUs as we originally did with one. We’ll revisit that tool down the road to see if anything’s changed.

Arc Performance, Times Two

When using multiple GPUs to accelerate your creative work, it’s ideal that each one is the exact same make and model, although a different version of the same specific GPU is good enough (even if resulting clocks are a bit different). You’re still able to mix-and-match two different cards together and see performance uplifts, but the overall efficiency might not be to the same level.
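As a rough way to reason about mixed pairings, here is a minimal sketch of the best-case math, assuming the renderer splits one job perfectly in proportion to each card’s speed (real software rarely manages this). The function name and the solo render times are hypothetical placeholders, not results from our charts.

```python
def ideal_combined_time(solo_times_s: list[float]) -> float:
    """Best-case time when GPUs with the given solo times share one job perfectly."""
    if not solo_times_s or any(t <= 0 for t in solo_times_s):
        raise ValueError("each GPU needs a positive solo render time")
    # Each GPU completes 1/t of the job per second; the rates add, the times do not.
    combined_rate = sum(1.0 / t for t in solo_times_s)
    return 1.0 / combined_rate


# Hypothetical solo render times in seconds (placeholders, not measured results).
matched_pair = ideal_combined_time([100.0, 100.0])  # two identical cards
mixed_pair = ideal_combined_time([80.0, 100.0])     # one faster card, one slower

print(f"Matched pair, best case: {matched_pair:.1f} s")
print(f"Mixed pair, best case:   {mixed_pair:.1f} s")
```

Any gap between this best case and a measured result reflects overhead, such as uneven work distribution, where the faster card ends up waiting on the slower one.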

For these results, we’ve tackled both angles: combining two A750s for a proper multi-GPU look, as well as testing A770 + A750 to see if any oddities arise from having dissimilar GPUs. We produced a similar sort of article following the NVIDIA Ampere GeForce launch, where we put an RTX 3080 alongside the Turing generation to see how scaling fared – it’s worth checking out if you want a wider view of what to expect when going that route.

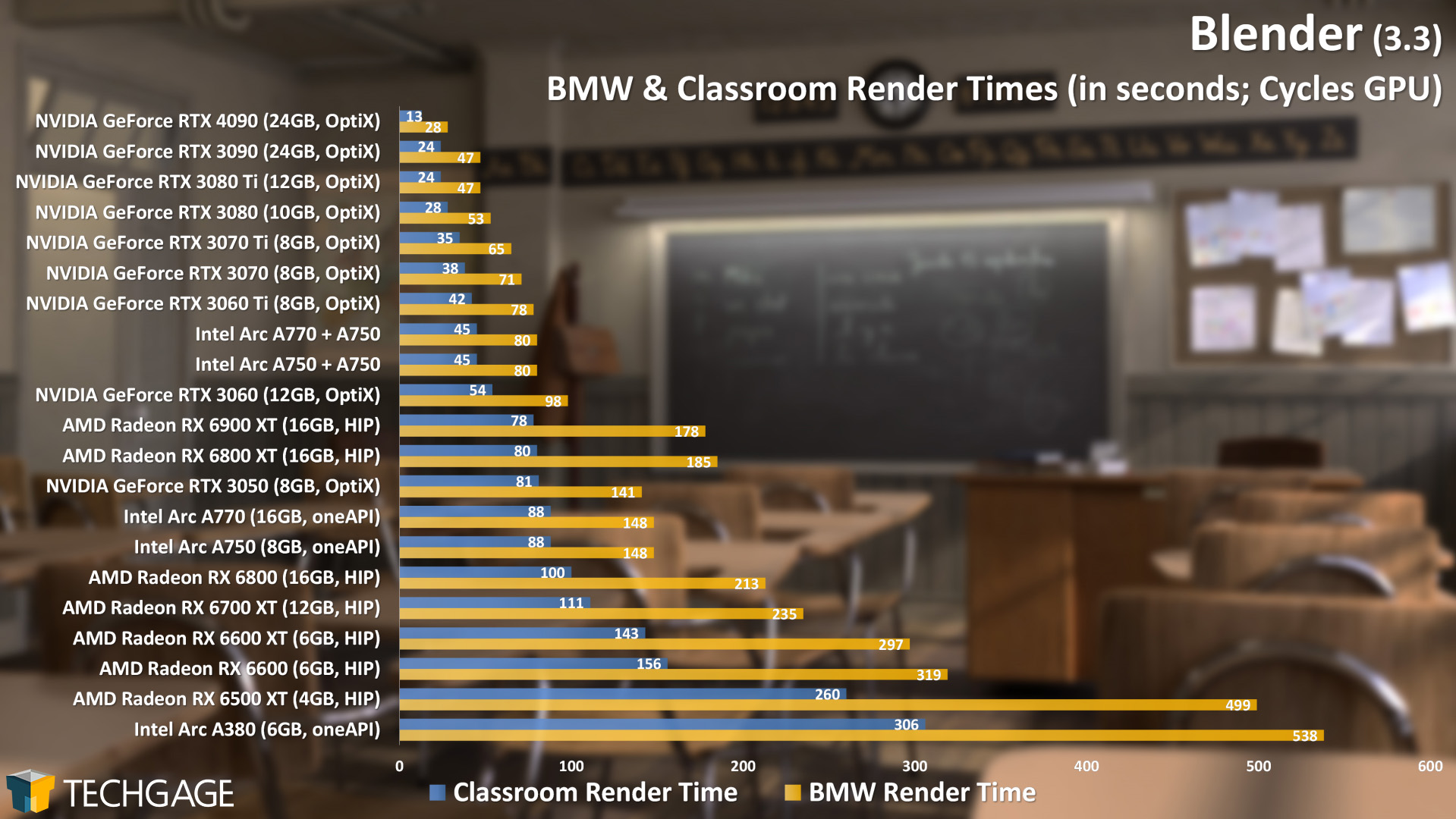

Let’s start with a look at Blender:

Please note that the BMW, Classroom, and Secret Deer projects have all been modified (vs. the native file on Blender’s demo files page) to run longer and warm each GPU up better. You can see our changes in our previous Blender deep-dive article.

This type of performance scaling is exactly what we hoped to see. Render times aren’t quite cut in half, but perfect scaling isn’t typical to begin with, and both the BMW and Classroom projects still came close to achieving it.
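For anyone wanting to quantify scaling from their own render times, a minimal sketch follows. It computes speedup and per-GPU efficiency, where perfect scaling would be 2.0x and 100% for two cards. The function name and the example times are hypothetical placeholders, not values from our charts.

```python
def scaling(single_time_s: float, multi_time_s: float, gpu_count: int) -> tuple[float, float]:
    """Return (speedup, efficiency) for a multi-GPU render vs. a single-GPU render."""
    if single_time_s <= 0 or multi_time_s <= 0 or gpu_count < 1:
        raise ValueError("times must be positive and gpu_count at least 1")
    speedup = single_time_s / multi_time_s
    efficiency = speedup / gpu_count  # 1.0 means the time was cut exactly by gpu_count
    return speedup, efficiency


# Hypothetical render times in seconds (placeholders, not measured results).
speedup, efficiency = scaling(single_time_s=120.0, multi_time_s=66.0, gpu_count=2)
print(f"Speedup: {speedup:.2f}x, efficiency: {efficiency:.0%}")
```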

The elephant (or deer) in the room is that NVIDIA’s OptiX is so strong in Blender that GeForce is going to be the best choice. It’s notable just how strong Intel is against AMD, though. Radeon support for Blender has been refined again and again, and yet Intel comes out of (relatively) nowhere and delivers far better performance, with lower-end Arcs outperforming higher-end Radeons. It’s something to see.

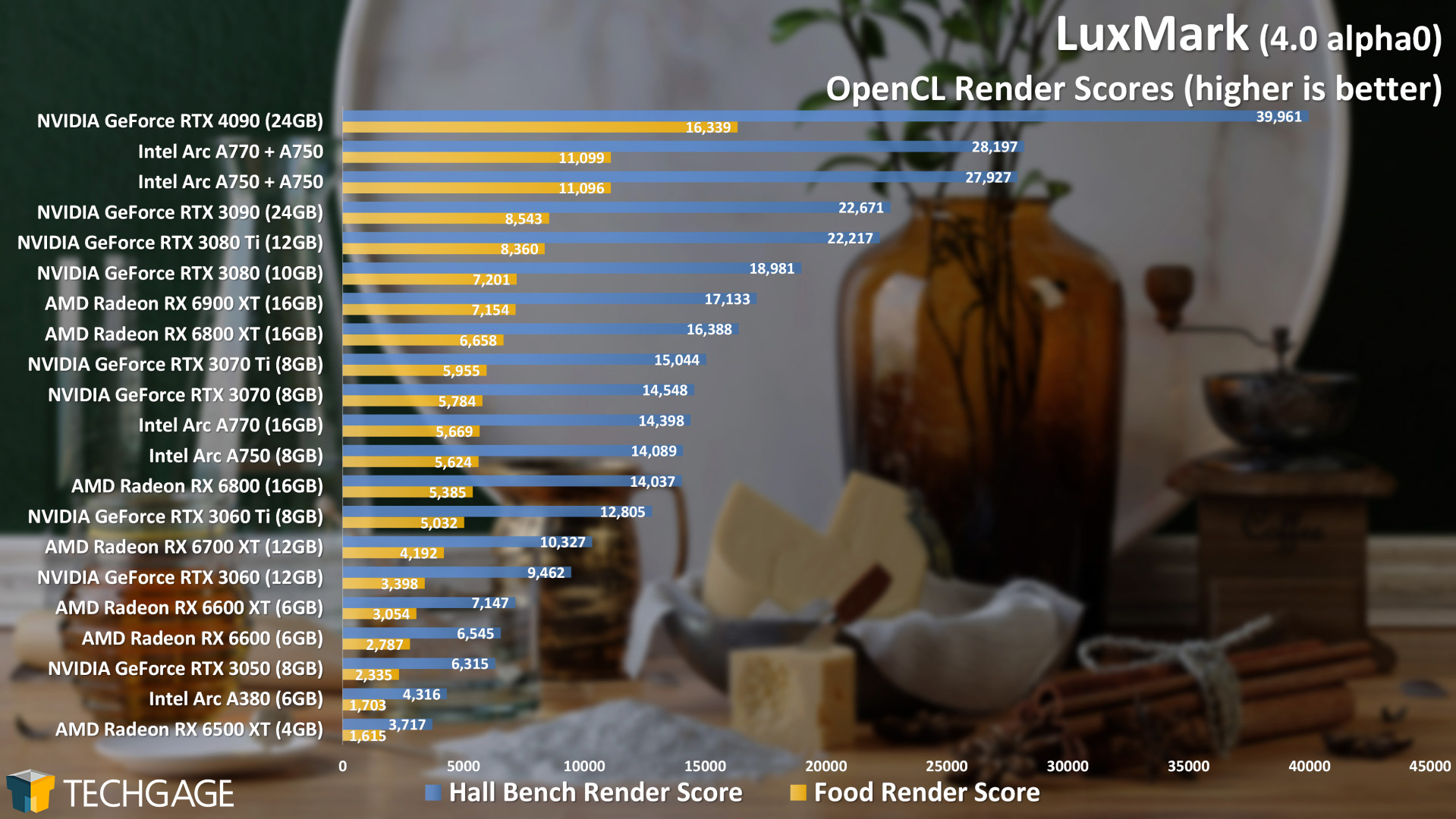

Similar gains can be seen in LuxMark, which represents LuxCoreRender performance:

It’s humorous to see Arc sit behind the GeForce RTX 4090 here – or, it at least would be without context. Still, it’s neat to see dual Arc GPUs soar to the top of certain charts.

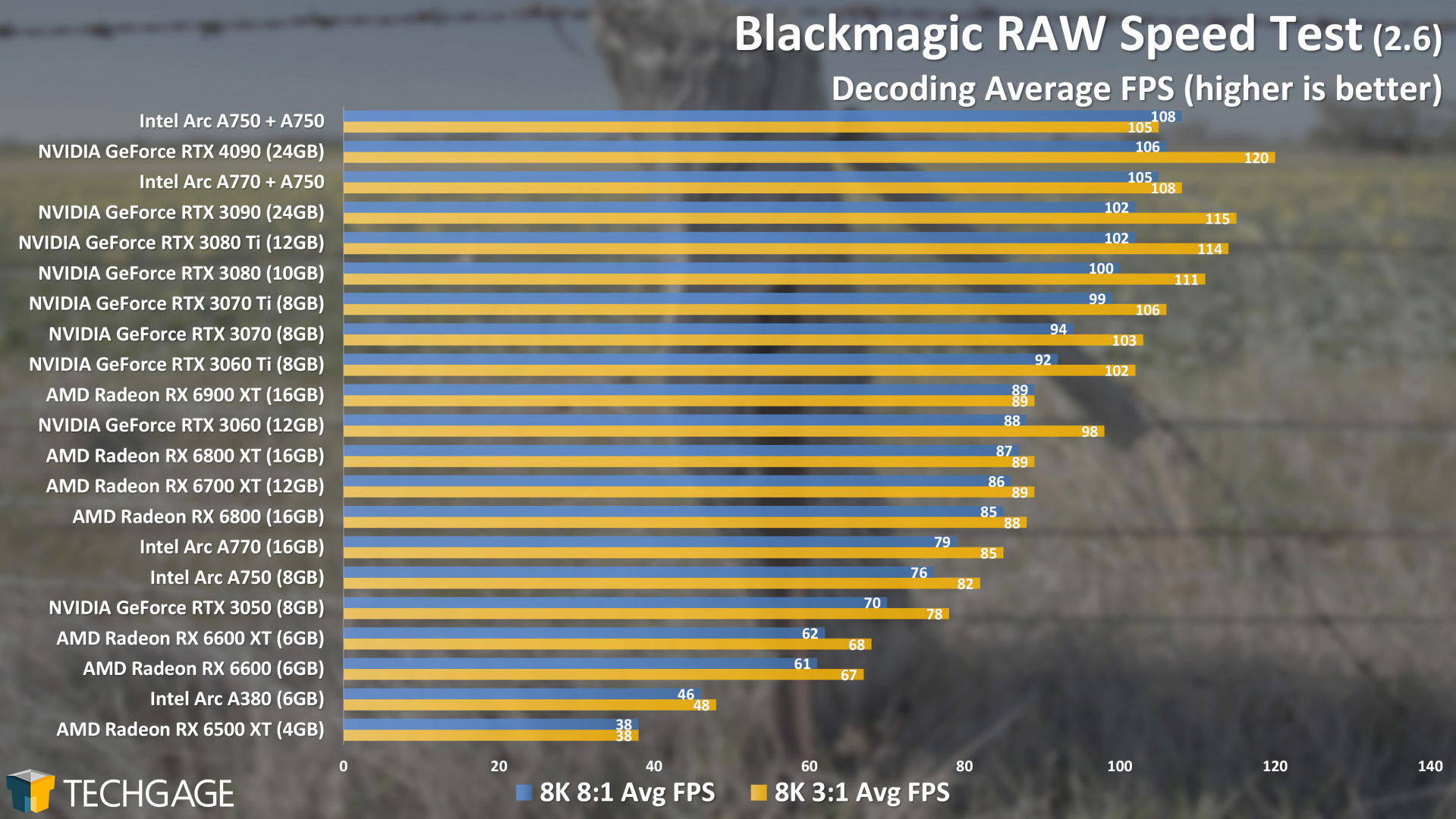

As we covered before, Premiere Pro does not agree with having two Arc GPUs installed, but BRAW Speed Test does. This might not be a big surprise to Blackmagic Design DaVinci Resolve users, as that software has long touted support for multiple GPUs – although it doesn’t improve every performance aspect. It obviously does improve decode performance, however:

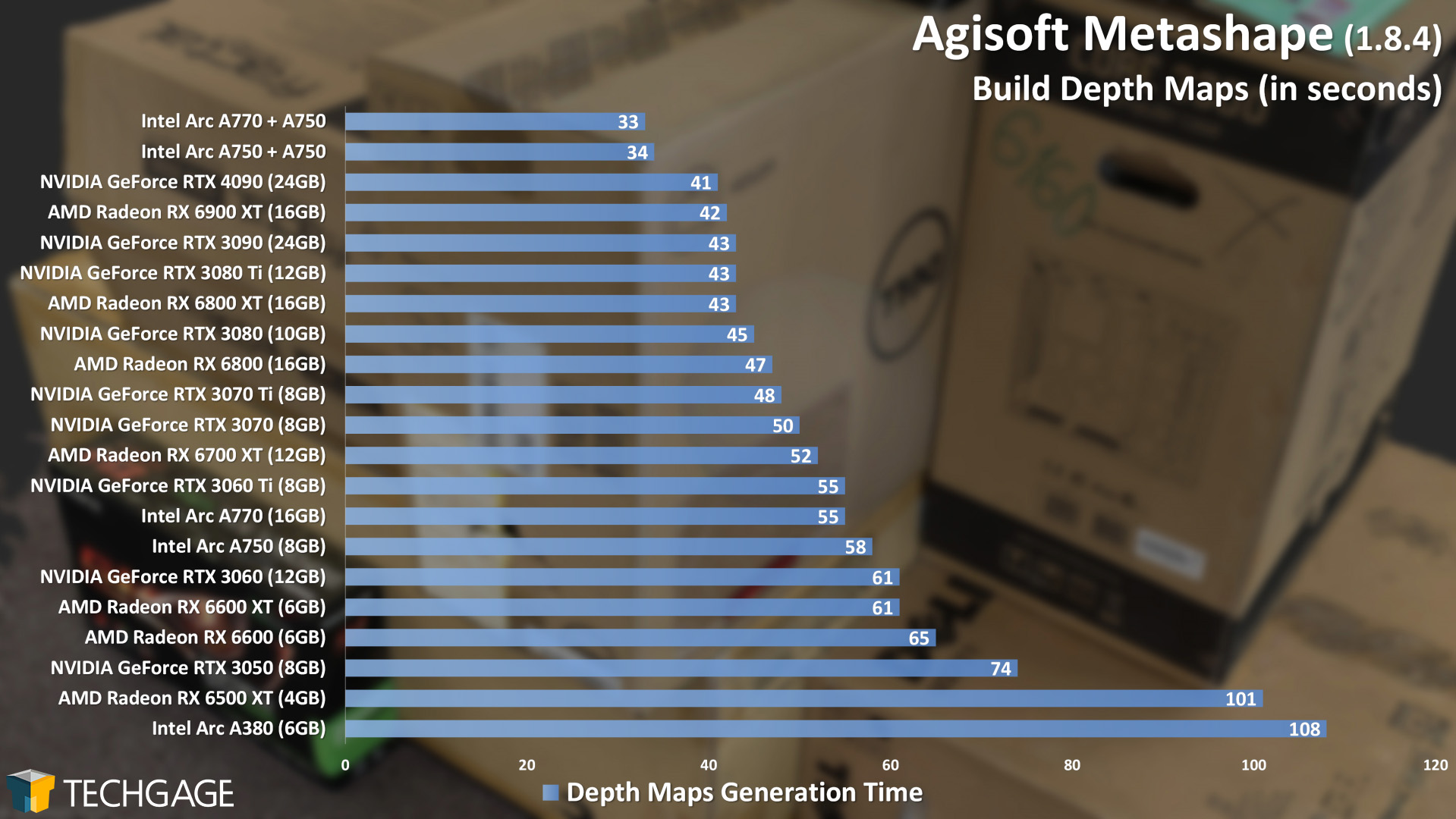

With our Agisoft Metashape photogrammetry test, we also saw a performance uplift with the Build Depth Maps part of the process:

We’ve never tested multiple GPUs in Metashape before, so we were pleased to see these sorts of results. Most of a photogrammetry process utilizes the CPU, but when the GPU is called upon, multiple GPUs can shave precious seconds off of the entire process. Both AMD and NVIDIA perform well in Metashape in general, so we’d assume these same sorts of gains would also be seen with GeForces and Radeons.
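Because only part of the pipeline runs on the GPU, the gain for the whole process is smaller than the gain for the GPU-bound step. Amdahl’s law gives a quick estimate, sketched below. The GPU-bound fraction and the GPU-step speedup are hypothetical placeholders, not values we measured in Metashape.

```python
def overall_speedup(gpu_fraction: float, gpu_step_speedup: float) -> float:
    """Amdahl's law: whole-job speedup when only `gpu_fraction` of the time gets faster."""
    if not 0.0 <= gpu_fraction <= 1.0 or gpu_step_speedup <= 0:
        raise ValueError("gpu_fraction must be in [0, 1] and gpu_step_speedup positive")
    cpu_fraction = 1.0 - gpu_fraction  # this portion is unaffected by a second GPU
    return 1.0 / (cpu_fraction + gpu_fraction / gpu_step_speedup)


# Hypothetical inputs: 30% of the job is GPU-bound, and a second GPU makes
# that portion 1.8x faster (placeholders, not measured results).
total = overall_speedup(gpu_fraction=0.3, gpu_step_speedup=1.8)
print(f"Whole-process speedup: {total:.2f}x")
```

The takeaway is that the more CPU-bound a workload is, the less a second GPU can help, no matter how well the GPU-accelerated step itself scales.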

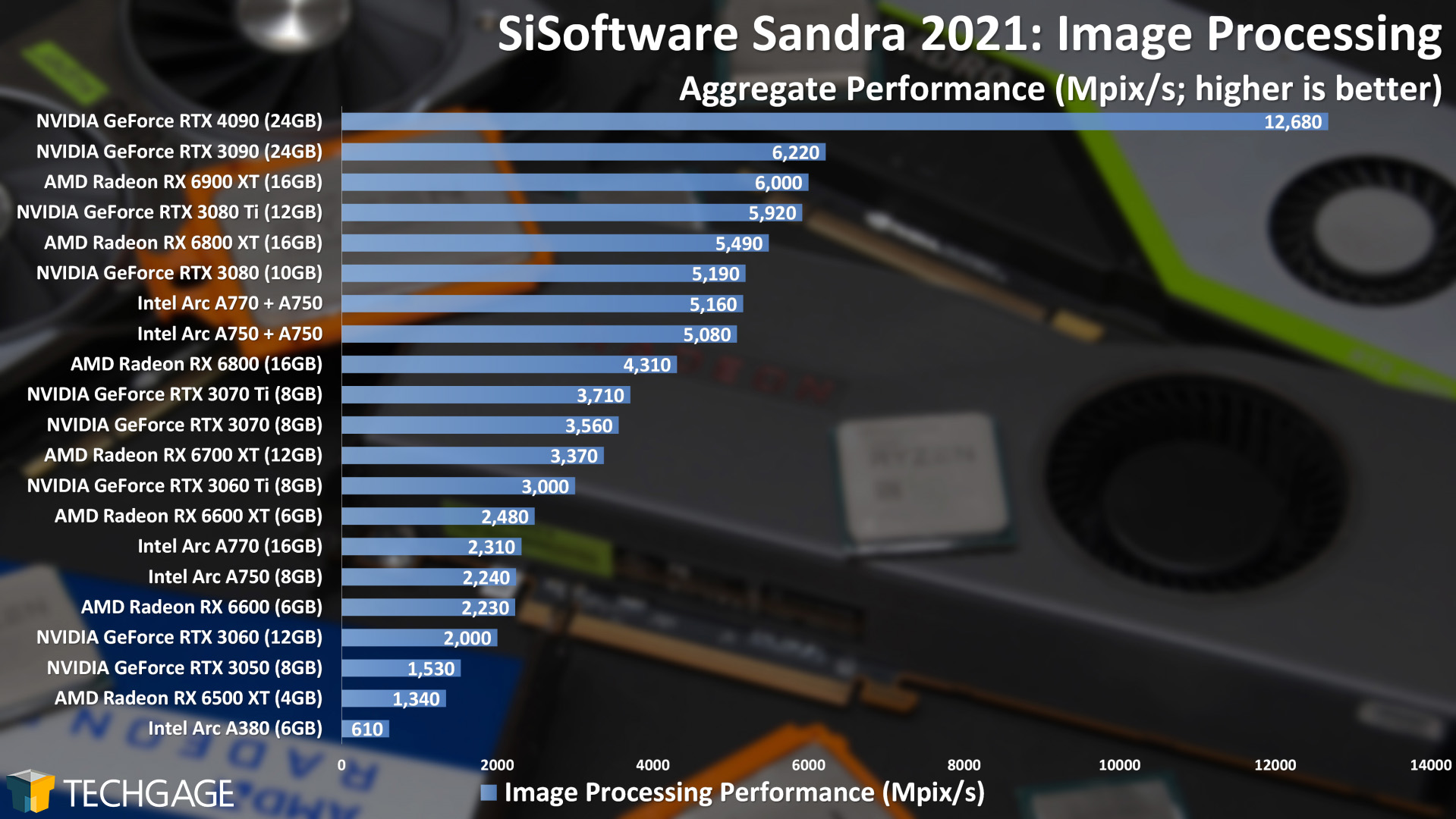

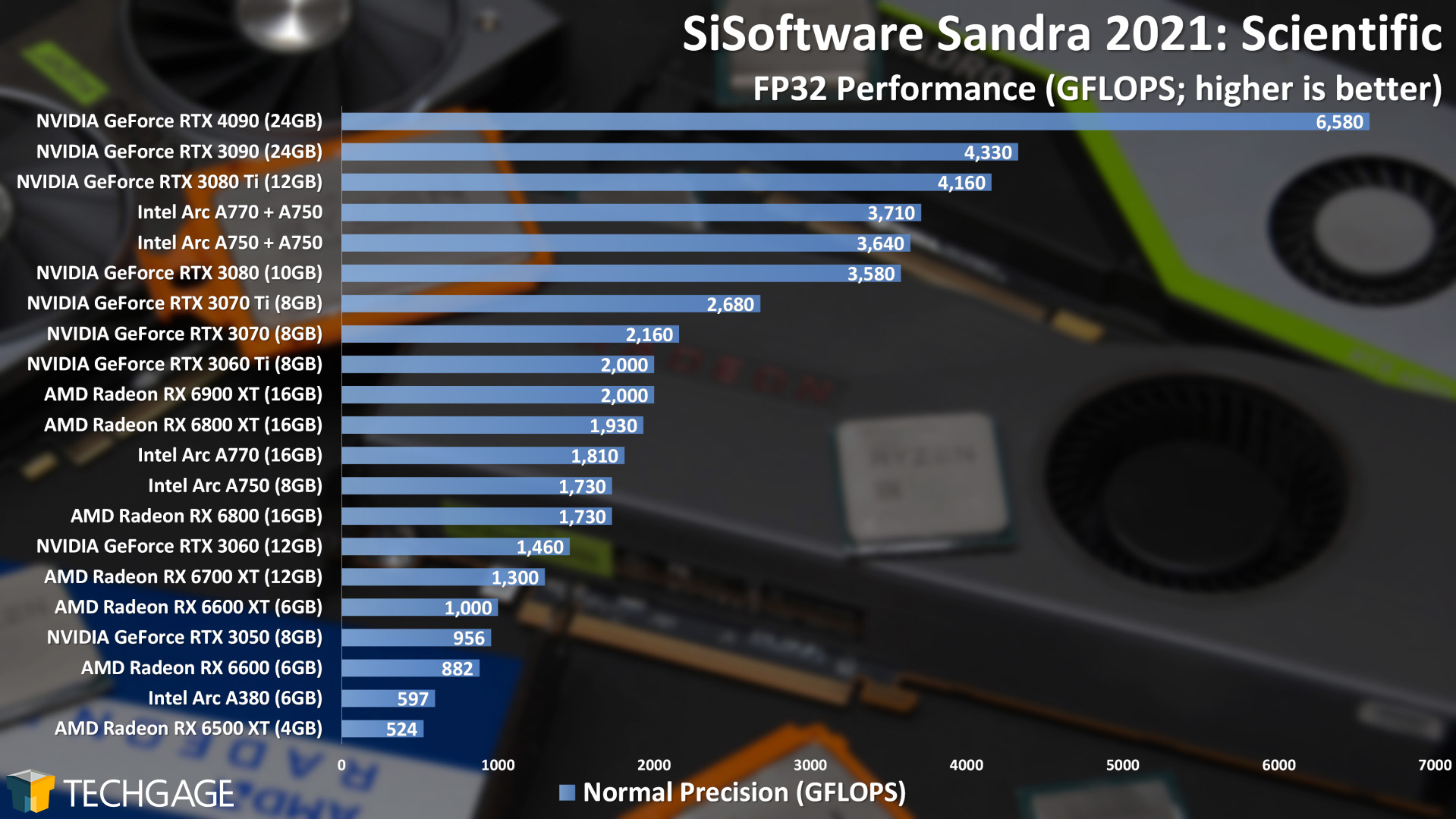

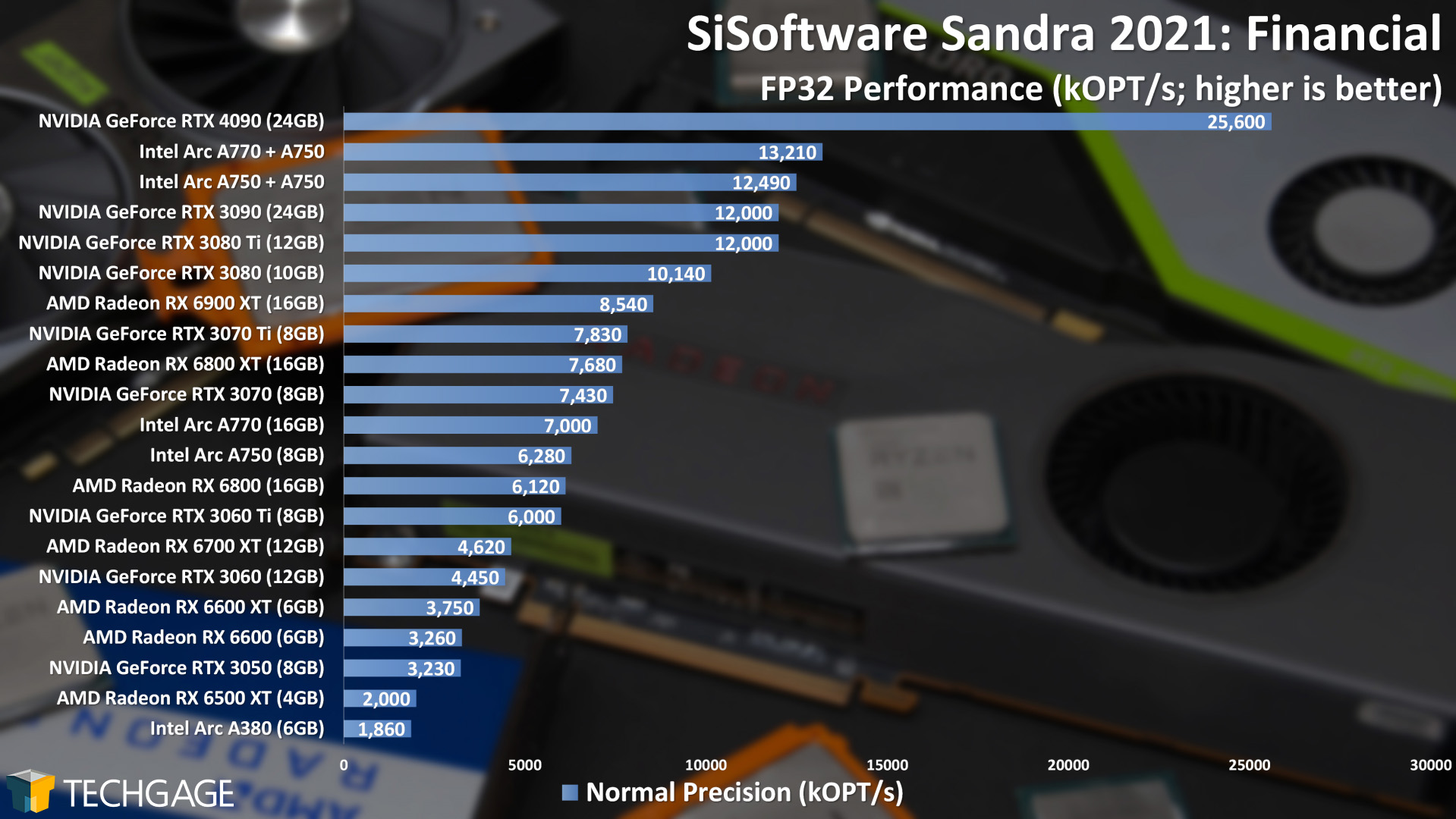

To wrap up, we wanted to re-test the last piece of software that we knew would show clear performance boosts from having multiple GPUs: SiSoftware’s Sandra. Across all four of our benchmarked tests, the combined Arc cards saw massive gains in the charts:

The most notable result here might be the Financial test, as both dual-GPU Arc configurations sit behind only the RTX 4090. That performance seen out of the RTX 4090 is something else, it must be said. It more than doubled the throughput of the RTX 3090. If only we saw gains like those every single generation!

Final Thoughts

We still can’t speak to the stability or performance-factor of Arc’s drivers for gaming, but we can say that throughout all of our exhaustive creator testing, we’ve not experienced a single driver issue – no crashes, no stalls, nothing that ever stood out. Instead, the current state of things continues to impress us.

Would you have been surprised if multi-GPU on Arc weren’t working correctly? Ahead of the recent launch, we honestly wouldn’t have been. After all, we’re talking about a third vendor entering a hotly contested market, and making drivers both reliable and performant for most workloads is not a trivial task. Yet, we’ve had great luck so far in all of our testing – even when it comes to combining two GPUs.

So… the big question. Are we suggesting going the multi-GPU route? As with all things creator, it’s impossible to make that kind of suggestion as a blanket statement. As we’ve seen proof of in our results so many times, you can’t look at the performance from one workload and assume your other workloads will scale the same way. You need to prioritize your most important workloads, and go from there.

With solutions like Blender, it doesn’t make sense to go the multi-GPU Arc route, because a single lower-end NVIDIA card with OptiX enabled will beat it. LuxCoreRender is different, where dual Arcs soar to the top to sit just behind the RTX 4090.

We also only tested multiple Arc GPUs here. We don’t have duplicate versions of any of the others, but maybe in time we’ll make that happen and see how all three vendors scale with multiple GPUs. With Intel new to the discrete desktop market, we simply wanted to see how the company’s drivers fared in such a niche configuration. We’re happy to be able to say that we’re left more impressed than we expected to be.
