Intel Opens Up About Larrabee

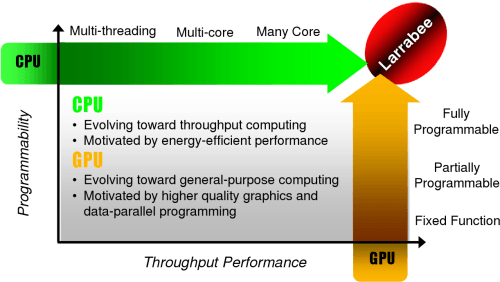

Intel today lifts part of the veil on its upcoming Larrabee architecture. This gives us a better understanding of its implementation, how it differs from a typical GPU, why it benefits from taking the ‘many cores’ route, how its performance scales and, of course, what else it has in store.

Introduction

During a press briefing late last week, Intel discussed its upcoming Larrabee ‘visual computing’ architecture in far greater detail than ever before. Some of what was discussed has been known for a while, but exactly how the architecture works and what it offers had not been explored in much depth until now.

The briefing serves as a fitting preface to the SIGGRAPH conference, held next week in Los Angeles, and to Intel’s own Developer Forum (IDF), taking place in San Francisco in two weeks. Although much was unveiled during last week’s briefing, even more may be covered at IDF.

As a quick refresher, Larrabee is Intel’s architectural solution for visual computing. While a Larrabee-equipped card could be considered a ‘graphics card’, in that it can render your game’s 3D graphics, Intel is careful to keep the term ‘visual computing’ attached to the name. It’s no secret that GPUs excel in specific non-gaming scenarios, and as a result, both Intel and NVIDIA have been pursuing their own projects to push the GPU far beyond gaming.

Architecturally, Larrabee cannot be directly compared to current GPUs on the market, as its internals are vastly different. While ATI’s and NVIDIA’s solutions place one or two large GPU cores on a graphics card, Larrabee goes in a different direction, offering a single processor made up of many smaller cores.

As previously leaked and now confirmed, the cores inside Larrabee use a pipeline derived from the Pentium processor: a short execution pipeline with a fully coherent cache structure. Larrabee does carry its own improvements, however, including 64-bit extensions, multi-threading, a wide vector processing unit and an advanced pre-fetcher.

How many of these cores Larrabee will feature is currently unknown, although the count is sure to vary from model to model, just as with current desktop CPUs. The graphs Intel provided, shown in an image later in this brief article, cover configurations ranging from 8 cores all the way up to 48.

Why Intel decided to go this route is a common question, but the simplified answer is that this direction simply makes sense. Before dual-core processors arrived, the problem we faced was that processor cores were topping out at a certain clock frequency, and no immediate improvements could push it higher. Even where frequency could be raised further, the gains would have been small relative to the engineering work required.

To address this, dual-core and eventually quad-core processors were released. We’ve been fortunate to get the best of both worlds with the Core architecture: it proved faster overall than the previous NetBurst-based CPUs, and we also gained the ability to fit more than one core under the hood.

Larrabee follows the same reasoning: it’s easier to scale a number of smaller cores than it is to build one mammoth core. NVIDIA’s latest GTX core, with its enormous die, illustrates the point.

GPUs have followed a progression similar to that of CPUs. They began as fixed-function hardware, limited in what they could execute, then became partially programmable, and with Larrabee, they are set to become fully programmable. It should be noted that NVIDIA’s CUDA architecture is similar to Larrabee in some respects, but until we see raw performance data from Larrabee, it’s impossible to say who has the better product.

Support our efforts! With ad revenue at an all-time low for written websites, we're relying more than ever on reader support to help us continue putting so much effort into this type of content. You can support us by becoming a Patron, or by using our Amazon shopping affiliate links listed through our articles. Thanks for your support!