Introduction to Consumer Virtualization

With some companies putting such a focus on virtualization, have you ever stopped to wonder what it is? Better yet, have you ever thought about how it could benefit you? This primer aims to answer those very questions by taking a look at what virtualization is, why it exists and what its limitations are, and by discussing scenarios where it could benefit the regular consumer.

Page 2 – How Does it Work?

To help simplify things, let’s get a few popular terms taken care of before we jump into our explanations. In virtualization, a “host” is your real OS, while a “guest” is the OS running inside of your host. So, if Windows is installed directly onto your hard drive, and you are running Ubuntu through VirtualBox inside of that Windows installation, then Windows is the host, and Ubuntu the guest.

One of the most common terms used in virtualization is “hypervisor”, which refers to the application you’re using to handle your virtualized machines. There are two types of hypervisors, classified as “Type 1” and “Type 2”. The latter is what we’re focusing on, and it refers to a hypervisor that runs inside of an operating system. Type 1, on the other hand, is a specialized hypervisor that requires no installed OS, as it runs directly on the hardware. Lastly, VM stands for “virtual machine”, which is the virtual computer your guest OS runs on.

To understand how a hypervisor works, picture again game emulation. In order for a game to run on its original console, certain hardware factors must match up, else it isn’t going to run. Developers of these emulators, then, must design their application in such a way that it emulates the original hardware, and as we’ve seen over the years, the idea behind it all works well, as I’m not sure there exists a console that hasn’t been emulated (aside from the current-gen, perhaps).
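To make the emulation idea a little more concrete, here is a minimal sketch of what an emulator does at its core: a loop on the host that fetches an instruction, decodes it, and updates a software copy of the machine's state. The three-register machine and its instruction names (`LOAD`, `ADD`, `DEC`, `JNZ`, `HALT`) are made up for illustration and don't correspond to any real console or hypervisor.

```python
"""Toy emulator: a host-side loop that plays the role of a made-up CPU."""


def run(program, max_steps=1000):
    """Interpret `program` for a hypothetical three-register machine."""
    regs = {"A": 0, "B": 0, "C": 0}  # emulated registers, stored in host memory
    pc = 0                           # emulated program counter
    steps = 0
    while pc < len(program) and steps < max_steps:
        op, *args = program[pc]      # fetch and decode
        steps += 1
        if op == "LOAD":             # LOAD reg, constant
            regs[args[0]] = args[1]
        elif op == "ADD":            # ADD dst, src  (dst = dst + src)
            regs[args[0]] += regs[args[1]]
        elif op == "DEC":            # DEC reg
            regs[args[0]] -= 1
        elif op == "JNZ":            # JNZ reg, target  (jump if reg != 0)
            if regs[args[0]] != 0:
                pc = args[1]
                continue             # skip the normal pc increment
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
        pc += 1
    return regs


# Multiply 6 by 7 using repeated addition.
PROGRAM = [
    ("LOAD", "A", 0),    # accumulator
    ("LOAD", "B", 6),    # value to add each pass
    ("LOAD", "C", 7),    # loop counter
    ("ADD", "A", "B"),   # index 3: loop body starts here
    ("DEC", "C"),
    ("JNZ", "C", 3),
    ("HALT",),
]

if __name__ == "__main__":
    print(run(PROGRAM))
```

The "program" never touches the real CPU's registers; everything it sees is state the loop maintains on its behalf. Real emulators and hypervisors are vastly more sophisticated, but the principle of presenting software with a machine that exists only in software is the same.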

In a similar vein, the most common virtualization tools on the market for the regular consumer are all going to emulate an entire PC, so in a sense, you’ll not only be controlling a secondary OS, but another computer as well. Because these hypervisors act so much like a real PC, you’ll be able to enter a BIOS as you boot your guest OS up, and even change boot devices.

This is so much the case that if you choose to boot up with a CD designed for a specific task, it will treat the PC (as in, that particular virtual machine) as normal. There are of course limitations, but there’s still a huge amount you can do. Picture, for example, booting up with Acronis True Image. Even though all it will see is the virtualized installation of whatever OS you have installed, it’ll still see a “real” partition, so you’ll be able to back it up as normal.

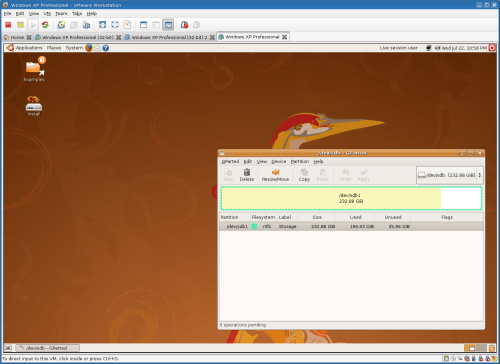

Another possibility is testing out a new Linux distro. Rather than install it on your machine, you could easily start up any of your virtual machines and choose the CD-ROM as the boot device. Because the hypervisor is essentially the PC, the distro should boot up just fine, with graphics, audio and other various support intact. Or in a similar scenario, say you want to partition a storage device. Easy. Boot up with Ubuntu or some other Linux distro, start up GParted, change to the proper device, and take care of what you need to. See? Endless possibilities!

I should give out a warning, though. Although it’s possible to alter partitions on installed disks through a VM, it’s not advised. Altering partitions on USB-based devices would be fine, however, as they are not integral to the PC.

Because a hypervisor emulates a PC, there will be various aspects of your computer that won’t be fully utilized in a VM, such as the graphics card and audio card. This is because the VM won’t have direct access to these components like the host OS does. Instead, each hypervisor presents its own virtual version of each such component to the guest. Where VMware is concerned, for example, the graphics will be installed as “VMware SVGA II”, and the audio will be “Creative AudioPCI”.

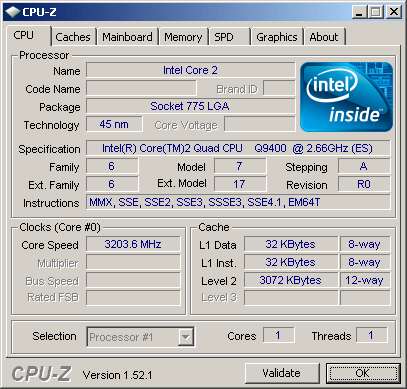

Not all of the components have their attributes completely hidden from your virtual machine, though. The processor is one example of this, as you can see in the screenshot below. Although a lot of fields are blank in CPU-Z, the processor’s name is seen, along with an accurate reading of the frequency. It doesn’t stop there, though, as even the most common instruction sets are also listed, meaning you’re able to take advantage of those from within the virtual machine if you need to.

It’s up to the hypervisor to properly allocate your real system resources to each VM, and as you’d expect, the better your system, the better your virtual machines will run. CPU frequencies will matter, as will your RAM speed and capacity. There are a few other factors that come into play, though, namely the processor extensions designed to boost virtualization performance: AMD-V and Intel VT-x.

To have desirable VM performance today, it’s important to make sure your machine is equipped with a processor that offers this virtualization acceleration. Intel first rolled VT-x support into its later Netburst-based processors, while AMD shipped its first supported processor mid-2006. If you’re unsure whether your CPU includes such an extension, the best thing to do is to head on over to the processor vendor’s website and look it up there. But most likely, if your PC was built within the last three years, it will have such functionality.
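If your host happens to run Linux, there's also a quick way to check locally. The sketch below assumes a Linux system that exposes `/proc/cpuinfo` and relies on the flag names the kernel reports there: `vmx` for Intel VT-x and `svm` for AMD-V. The function name `virtualization_flags` and the `SAMPLE` text are my own, purely for illustration. Note that this only shows what the CPU advertises; the feature can still be switched off in the BIOS.

```python
"""Check cpuinfo-style text for hardware virtualization flags (Linux only)."""

# Flag names as reported by the Linux kernel in /proc/cpuinfo.
WANTED = {"vmx": "Intel VT-x", "svm": "AMD-V"}


def virtualization_flags(cpuinfo_text):
    """Return {flag: friendly name} for each virtualization flag present."""
    found = {}
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() != "flags":
            continue
        flags = set(value.split())
        for flag, name in WANTED.items():
            if flag in flags:
                found[flag] = name
    return found


# Made-up sample so the function can be tried on any OS.
SAMPLE = """\
model name : Example CPU
flags      : fpu vme sse sse2 vmx
"""

if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo", encoding="utf-8") as handle:
            text = handle.read()
    except OSError:
        # Not on Linux (or /proc is unavailable): fall back to the sample.
        text = SAMPLE
    result = virtualization_flags(text)
    if result:
        print("Supported:", ", ".join(sorted(result.values())))
    else:
        print("No VT-x / AMD-V flag found")
```

On Windows or macOS the script simply falls back to the sample text, so the vendor's website remains the more dependable route there.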

Most recently, both AMD and Intel have released updates to their respective extensions, which AMD calls RVI, and Intel EPT. RVI and EPT are essentially marketing terms for the same thing: NPT, or nested page tables. Without it, the hypervisor has to step in and translate the guest’s memory addresses into real ones in software. With nested paging, the processor handles that translation in hardware, which largely removes the hypervisor as the middleman. The result is faster memory access, which means less overhead. AMD’s RVI can be found on Phenom and newer processors (including Opteron), while Intel’s EPT is included on Core i7 (and Nehalem server CPUs).
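For the curious, here is a rough sketch of the address translation that nested paging is all about. A guest's memory address has to be mapped twice: once through the guest's own page table, and once more through the host's. The flat dictionaries, the table contents and the function names below are simplifications I've made up for illustration; real page tables are multi-level structures walked by the processor.

```python
"""Toy model of two-stage address translation (nested paging)."""

PAGE_SIZE = 4096  # bytes per page, as on typical x86 systems


def translate(address, page_table):
    """Map an address through one page table (page number -> frame number)."""
    page, offset = divmod(address, PAGE_SIZE)
    if page not in page_table:
        raise KeyError(f"page fault at page {page}")
    return page_table[page] * PAGE_SIZE + offset


def nested_translate(guest_virtual, guest_table, host_table):
    """Two-stage walk: guest virtual -> guest physical -> host physical."""
    guest_physical = translate(guest_virtual, guest_table)
    return translate(guest_physical, host_table)


def build_shadow_table(guest_table, host_table):
    """Pre-combine both tables, as a hypervisor does in software
    when the CPU has no nested paging support."""
    return {page: host_table[frame] for page, frame in guest_table.items()}


# Hypothetical mappings, chosen only for the example.
GUEST_TABLE = {0: 5, 1: 2}    # guest virtual page -> guest physical frame
HOST_TABLE = {5: 40, 2: 17}   # guest physical frame -> host physical frame

if __name__ == "__main__":
    address = 0x1234
    via_hardware_style_walk = nested_translate(address, GUEST_TABLE, HOST_TABLE)
    via_shadow_table = translate(
        address, build_shadow_table(GUEST_TABLE, HOST_TABLE)
    )
    print(hex(via_hardware_style_walk), hex(via_shadow_table))
```

Both routes land on the same host address. The difference is who does the work: without nested paging, the hypervisor has to keep something like the combined "shadow" table up to date every time the guest changes its own mappings, and that bookkeeping is the overhead RVI and EPT are meant to cut.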

The entire process of virtualization might sound simple at first, but there’s incredible magic going on here to create such possibilities. To be able to run a second, third or even more OSes inside of your main OS is simply incredible. But what about the limitations? We’ll tackle those next.
