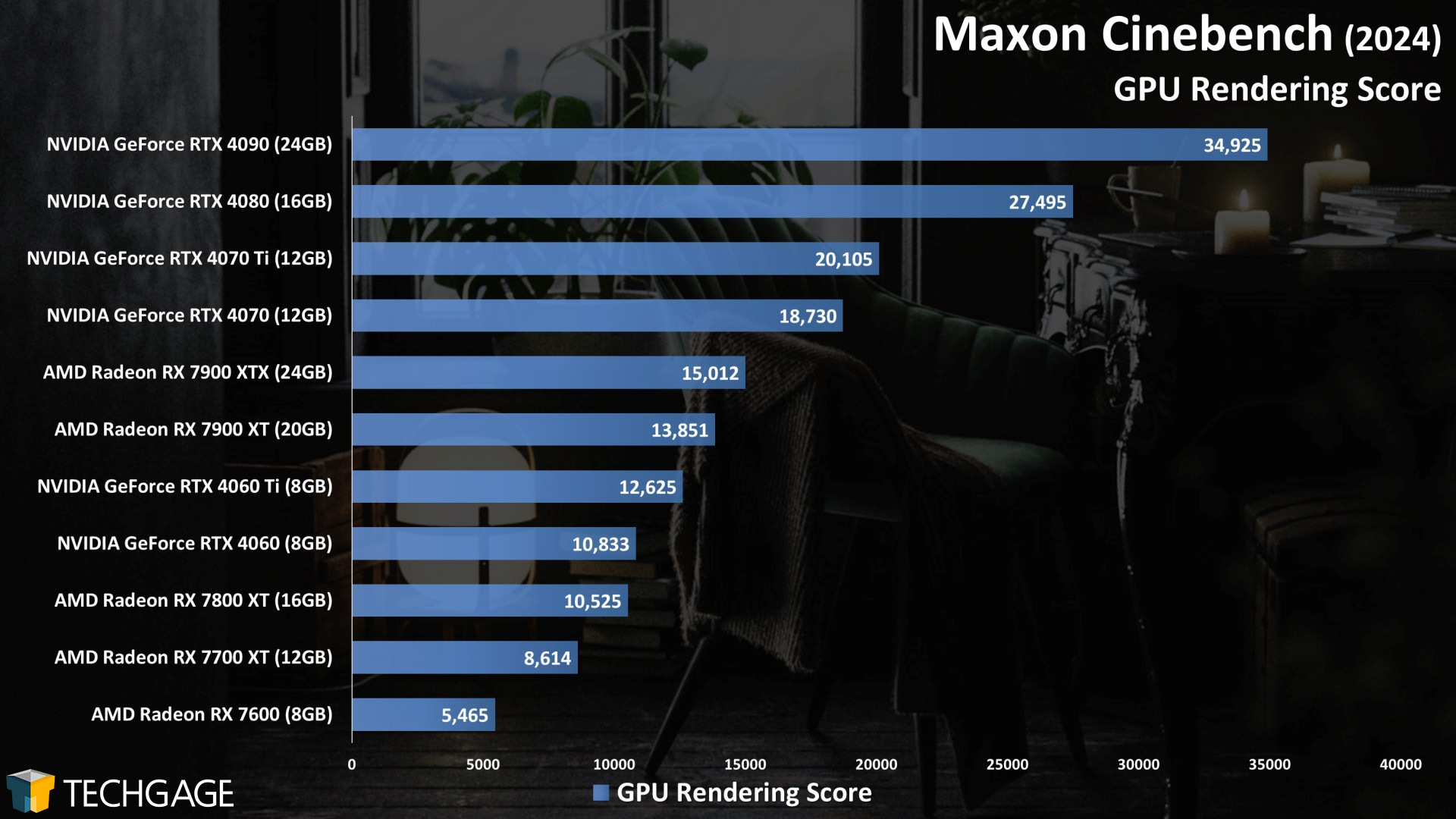

Maxon Cinebench 2024 & Redshift GPU Rendering Performance

Maxon has recently rolled out major updates to both Cinebench and Redshift, so we wanted to take a fresh look at GPU rendering performance in each using AMD's and NVIDIA's current product stacks. With Redshift having recently gained support for AMD Radeon, Intel's Arc series is now the odd one out.

One of the most popular performance benchmarks on the planet is Maxon’s Cinebench. We’ve been taking advantage of it for CPU-bound rendering tests for ages – it’s actually difficult to recall a time when we didn’t use it. So, when a new version launches, it tends to grab the attention of anyone who loves performance-testing hardware.

The latest version of Cinebench was released last month, and it brings some major changes. Among them is wider platform support, with Arm64 builds enabling testing on Apple Mac and Qualcomm Snapdragon computers. It also introduces a GPU rendering test, which at the moment supports only AMD and NVIDIA graphics cards on PC.

Earlier versions of Cinebench included a GPU viewport test, but because the usefulness of its results was often questionable (they didn't scale like our Blender viewport tests do), the feature was dropped. Four years ago, Maxon acquired Redshift, and fast-forward to today, it's Redshift that acts as the backend for Cinebench's new GPU rendering test.

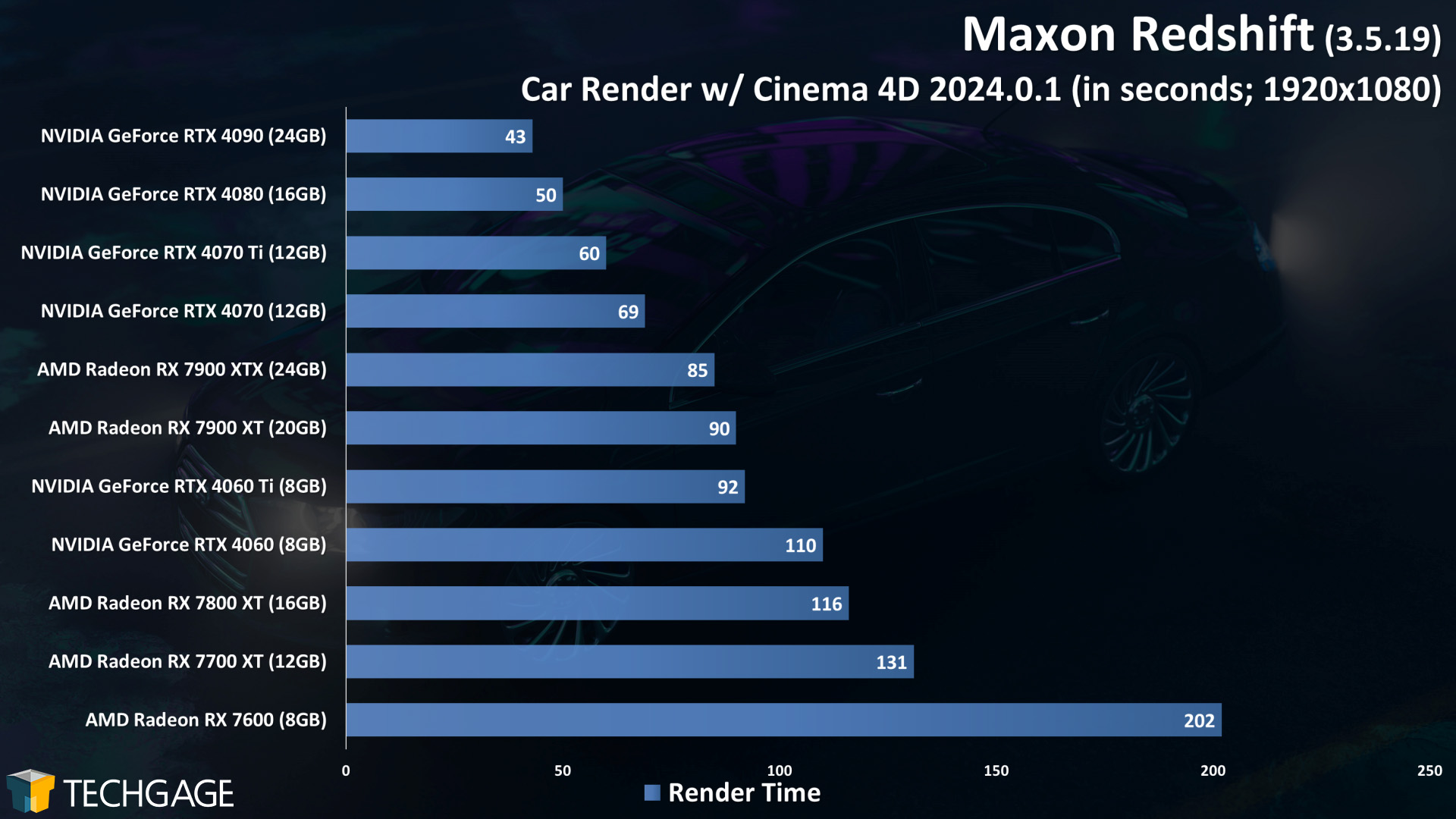

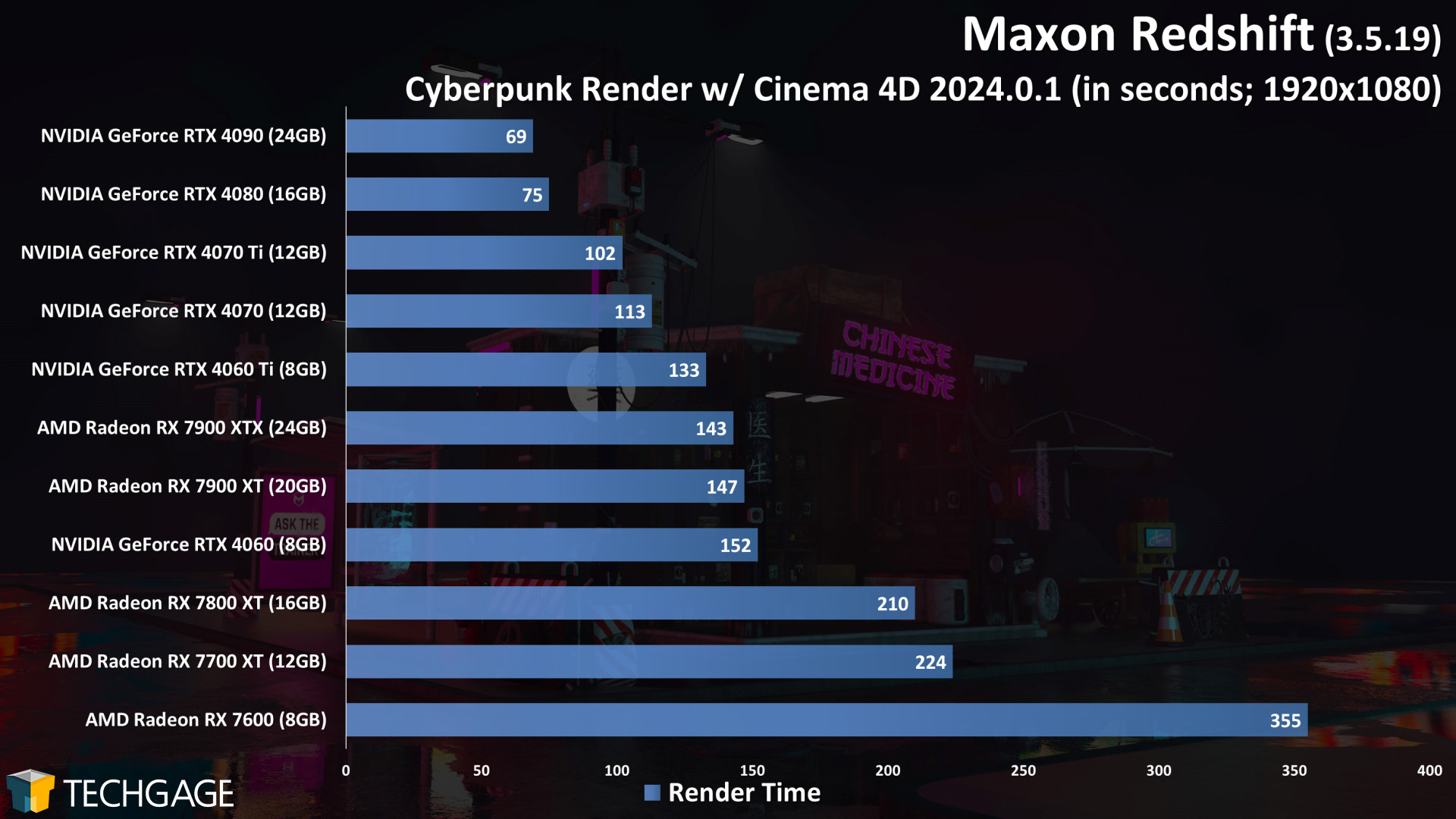

In this article, we're going to post performance results from our Cinebench testing, as well as performance for two separate Redshift scenes, tested through Cinema 4D 2024. While we love the simplicity of Cinebench, we wish it were designed to test more than one scene, because not all scenes scale the same way across the board. Our Redshift results help pad things out.

| Techgage Creator GPU Testing PC | |
|---|---|
| Processor | Intel Core i9-13900K (3.0GHz, 24C/32T) |
| Motherboard | ASUS ROG STRIX Z690-E GAMING WIFI (CPUs tested with 2305 BIOS, March 10, 2023) |
| Memory | G.SKILL Trident Z5 RGB (F5-6000J3040F16G) 16GB x2, XMP-enabled with frequency set to DDR5-6000 (30-40-40-96, 1.35V) |
| AMD Graphics | AMD Radeon RX 7900 XTX (24GB), RX 7900 XT (20GB), RX 7800 XT (16GB), RX 7700 XT (12GB), RX 7600 (8GB); all on Adrenalin 23.9.2 |
| NVIDIA Graphics | NVIDIA GeForce RTX 4090 (24GB), RTX 4080 (16GB), RTX 4070 Ti (12GB), RTX 4070 (12GB), RTX 4060 Ti (8GB), RTX 4060 (8GB); all on Studio 537.42 |
| Storage | WD Blue 3D NAND 1TB (SATA 6Gbps) |
| Power Supply | Corsair RM1000x (1000W) |
| Chassis | Corsair 4000X Mid-tower |
| Cooling | Corsair H150i ELITE CAPELLIX (360mm) |
| Et cetera | Windows 11 Pro 22H2 (Build 22621); Intel Chipset Driver 10.1.19199.8340; Intel ME Driver 2306.4.10.0 |


All of the benchmarking conducted for this article was completed using an up-to-date Windows 11 (22H2), the latest Intel chipset driver, as well as the latest (as of the time of testing) graphics driver.

Here are some general guidelines we follow:

- Disruptive services are disabled, e.g. Search, Cortana, User Account Control, Defender, etc.

- Overlays and/or other extras are not installed with the graphics driver.

- Vsync is disabled at the driver level.

- OSes are never transplanted from one machine to another.

- We validate system configurations before kicking off any test run.

- Testing doesn’t begin until the PC is idle (keeps a steady minimum wattage).

- All tests are repeated until there is a high degree of confidence in the results.
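The last guideline, repeating tests until there is a high degree of confidence, can be made concrete in several ways. The sketch below is one hypothetical approach, not a description of our exact procedure: it reruns a benchmark until the coefficient of variation (standard deviation divided by mean) across all runs drops below a chosen threshold. The `run_once` callable and the default `min_runs`, `max_runs`, and `max_cv` values are assumptions for illustration.

```python
import statistics
from typing import Callable, List


def run_until_confident(
    run_once: Callable[[], float],
    min_runs: int = 3,
    max_runs: int = 10,
    max_cv: float = 0.01,
) -> List[float]:
    """Repeat a benchmark until its results agree closely enough.

    run_once is a hypothetical callable that performs one benchmark pass and
    returns its score. Stops once at least min_runs results exist and their
    coefficient of variation (stdev / |mean|) is at or below max_cv, or once
    max_runs passes have been made.
    """
    if min_runs < 2:
        # statistics.stdev needs at least two samples.
        raise ValueError("min_runs must be at least 2")
    if max_runs < min_runs:
        raise ValueError("max_runs must be >= min_runs")

    results: List[float] = []
    while len(results) < max_runs:
        results.append(run_once())
        if len(results) >= min_runs:
            mean = statistics.mean(results)
            # Guard against a zero mean to avoid division by zero.
            cv = statistics.stdev(results) / abs(mean) if mean else float("inf")
            if cv <= max_cv:
                break
    return results
```

Comparing against all runs (rather than a sliding window) keeps the sketch simple; a persistent outlier keeps the loop going until `max_runs`, which is usually the point where a human should investigate what went wrong.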

Based on the sheer amount of cross-vendor render testing we've done in the past, the results here scale largely as we expected. NVIDIA's top-end GeForce RTX 4090 has no problem dominating the top of the chart, and AMD's Radeon GPUs struggle to keep up with less-expensive competition.

As proof of just how far ahead NVIDIA is in rendering, note that the $299 RTX 4060 and the $499 RX 7800 XT score effectively the same. AMD's top-end Radeon RX 7900 XTX, available for around $900, falls a fair bit behind NVIDIA's $600 RTX 4070.
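To put that pricing comparison in numbers: when two cards score the same, the more expensive one simply costs more per point of performance. The hypothetical helper below computes how many times more card A costs per unit of score than card B. Because the chart data isn't reproduced here, the example normalizes both scores to 1.0, which reflects only our observation that the RTX 4060 and RX 7800 XT scored effectively the same; it is not measured data.

```python
def relative_cost_per_point(
    price_a: float, score_a: float, price_b: float, score_b: float
) -> float:
    """Return how many times more card A costs per unit of score than card B.

    Hypothetical helper: cost per point is price / score, so the ratio is
    (price_a / score_a) / (price_b / score_b).
    """
    if score_a <= 0 or score_b <= 0:
        raise ValueError("scores must be positive")
    return (price_a / score_a) / (price_b / score_b)


# RX 7800 XT ($499) vs. RTX 4060 ($299), with both scores normalized to 1.0
# because the two cards scored effectively the same (not measured values).
ratio = relative_cost_per_point(499, 1.0, 299, 1.0)
print(f"{ratio:.2f}")  # prints 1.67
```

By the same logic, the RX 7900 XTX costs 1.5x as much as the RTX 4070 ($900 vs. $600) while scoring lower, so its cost per point is more than 1.5x that of the RTX 4070.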

Let’s see how Redshift with our own chosen projects fares:

We've only tested with two Redshift projects, but each highlights what we mean when we say there is value in testing more than one scene. As modeling projects go, the Car project we use in testing isn't nearly as complex as the Cyberpunk one. If you look at the bottom three results in each graph, it's easy to see how Radeon begins to struggle a bit more once scene complexity increases.
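One way to quantify how a card "struggles more" as complexity rises is to normalize each card's render time against a reference card in each scene, then compare those relative speeds between the simpler and the more complex scene. This is our own framing for illustration, not necessarily how the charts were built; it assumes results are recorded as render times in seconds, and the function names, card keys, and choice of reference card are hypothetical.

```python
from typing import Dict


def relative_performance(render_times: Dict[str, float], reference: str) -> Dict[str, float]:
    """Express each card's speed relative to a reference card (reference = 1.0).

    render_times maps a card name to its render time in seconds for one scene.
    Lower time is faster, so relative speed is reference_time / card_time.
    """
    ref_time = render_times[reference]
    return {card: ref_time / t for card, t in render_times.items()}


def scaling_gap(
    simple_scene: Dict[str, float],
    complex_scene: Dict[str, float],
    reference: str,
) -> Dict[str, float]:
    """Ratio of each card's relative speed in the complex scene to the simple one.

    A value below 1.0 means the card falls further behind the reference as
    scene complexity rises; only cards present in both scenes are included.
    """
    simple = relative_performance(simple_scene, reference)
    complex_ = relative_performance(complex_scene, reference)
    return {card: complex_[card] / simple[card] for card in simple if card in complex_}
```

Normalizing against a single reference card removes each scene's absolute render length from the comparison, so only the relative gap between cards is compared.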

Final Thoughts

First things first: cheers to Maxon for implementing a GPU rendering test in Cinebench. It seemed inevitable that it was going to happen, and now that it has, it's great to have. We would have loved to see a viewport test as well, although in our experience of trying to create our own with Cinema 4D, none of the available viewport modes result in interesting scaling from top to bottom.

After poring over the performance results above, nothing we've seen surprises us much. AMD has talked a lot about its newfound support in Cinema 4D and Redshift, but as our results highlight, Radeon should not be your choice if Redshift is central to your workflow. NVIDIA's rendering prowess is just too much for the competition.

This leads us to two thoughts. First, even if performance between AMD and NVIDIA were on par, we still wouldn't recommend Radeon at this point in time, because our testing experience suggests that NVIDIA is going to be more stable in the long run. With Radeon, we encountered the occasional hung test in Cinebench that had us reboot and try again. Across all testing, the Radeon RX 7600 gave us the most hassle. We'd suggest going with a card that has more than 8GB of memory to begin with, but this result could mean it's even more important to have lots of VRAM on Radeon.

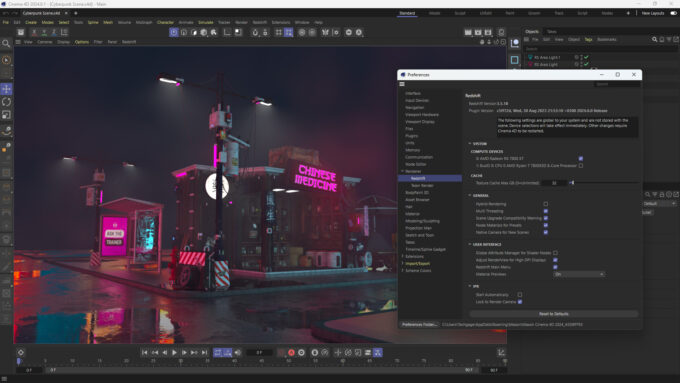

Our experience with Radeon in Cinema 4D itself was better, although we still had some hiccups that had us questioning things. Rendering inside Cinema 4D with Radeon seemed stable for the most part, but there were still occasions when oddities forced us to reboot before running the second render test (e.g. the Windows UI misbehaving or the desktop crashing).

If you love Radeon and intend to use Redshift, you should seek out the real-world experiences of users who've put serious time in. We can only performance-test the software, not use it the way it's meant to be used. But the fact that we encountered a few hiccups while doing so little makes us uneasy about recommending the pairing. All of that said, Maxon is continually updating Redshift, as is AMD with its Radeon GPU driver, so stability should improve over time.

With Intel now offering discrete graphics solutions, we hope that Maxon plans to add support for Arc in the future, because it deserves it. We've talked before about Arc's strong rendering performance, so considering what we see out of Radeon, it seems only fair that Intel be supported. It's not as though Maxon rushed to add Radeon support because its performance was better than GeForce's, after all. The day when all major render engines support all of the big vendors is going to be a good one.
