Move Aside, GTX 680: NVIDIA GeForce GTX 770 Review

Though it might seem a bit unusual to see NVIDIA let loose its GTX 770 a mere week after its 780 launch, here’s something to clear things up: $399. Built on GK104 (not GK110, like the 780), the GTX 770 is in effect a beefed-up GTX 680. It boasts 700 series features, NVIDIA’s latest cooler, and of course, a savings of about $100.

Page 8 – Temperatures & Power

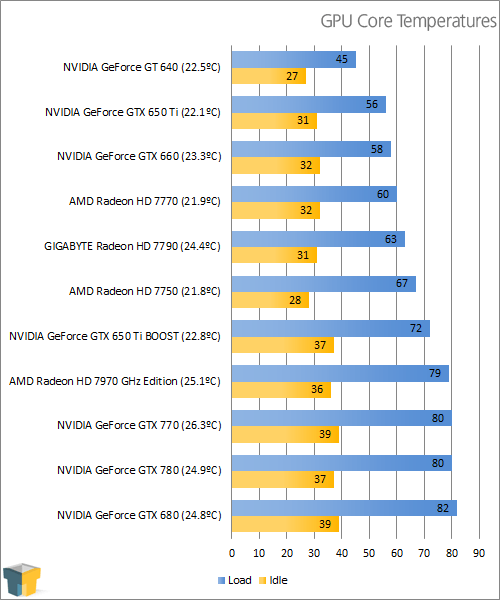

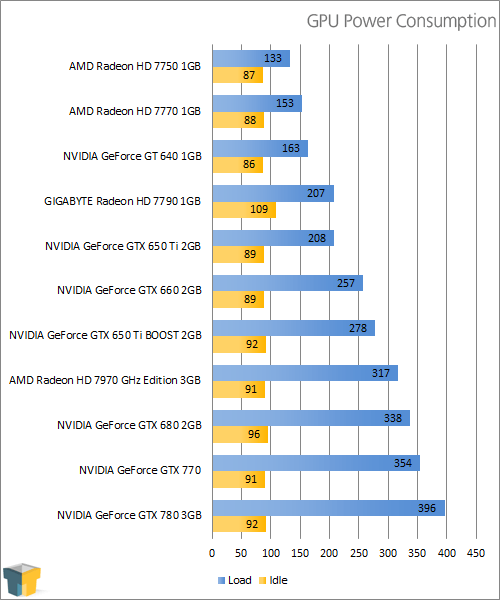

To test graphics cards for both their power consumption and temperature at load, we utilize a couple of different tools. On the hardware side, we use a trusty Kill-a-Watt power monitor which our GPU testing machine plugs directly into. For software, we use Futuremark’s 3DMark 11 to stress-test the card, and techPowerUp’s GPU-Z to monitor and record the temperatures.

To test, the general area around the chassis is checked with a temperature gun, with the average temperature recorded (and noted in brackets next to the card name in the temperature chart). Once that’s established, the PC is turned on and left to sit idle for five minutes. At this point, GPU-Z is opened along with 3DMark 11. We then kick off an Extreme run of 3DMark 11 and immediately begin monitoring the Kill-a-Watt for the peak wattage reached. We only monitor the Kill-a-Watt during the first two tests, as we’ve found that’s where the peak is always attained.

Note: (xx.x°C) refers to ambient temperature in our charts.

As with the GTX 780 and TITAN, the GTX 770 utilizes GPU Boost 2.0 to limit its temperature to 80°C, which we can see in the chart above. While the GTX 770 did idle 2°C warmer than the GTX 780, that’s likely attributable to the 1.4°C increase in ambient room temperature.
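For those who like to see the arithmetic, here’s a rough sketch of how an ambient difference can be factored out of a temperature comparison. The function names are our own invention for illustration and aren’t part of GPU-Z or any other tool; the only inputs taken from our testing are the 2°C idle gap and the 1.4°C ambient gap mentioned above.

```python
def delta_over_ambient(gpu_temp_c: float, ambient_c: float) -> float:
    """Return how far a GPU reading sits above the room temperature."""
    return round(gpu_temp_c - ambient_c, 1)


def ambient_adjusted_gap(temp_gap_c: float, ambient_gap_c: float) -> float:
    """Return the part of a card-to-card gap not explained by ambient."""
    return round(temp_gap_c - ambient_gap_c, 1)


# Figures quoted in the text: 2°C idle gap, 1.4°C ambient gap.
residual = ambient_adjusted_gap(2.0, 1.4)
print(f"Idle gap after ambient adjustment: {residual}°C")
```

With the ambient difference removed, the remaining idle gap between the two cards comes to roughly half a degree, which is small enough to fall within what a temperature gun and a software sensor can reasonably resolve.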

In the intro, we talked about the GTX 770’s TDP being 35W higher than the GTX 680’s. In real-world testing, the increase we observed was much smaller, at just 16W.
