NVIDIA GeForce RTX 4080 GPU Rendering Performance Review

The second GPU to grace NVIDIA’s Ada Lovelace GeForce lineup has landed: RTX 4080. Like the previously-released RTX 4090, the RTX 4080 continues to show us that the Ada Lovelace generation offers huge upticks in rendering performance over the previous-gen Ampere.

When NVIDIA launched its Ada Lovelace-based GeForce RTX 4090 last month, it delivered what we were hoping for in creator tasks: a notable leap in ray tracing performance over the previous generation. That made a GPU like the RTX 4090 soar far ahead of the rest of the stack, and gave a GPU like the RTX 4080 a good chance to strut its stuff well against the last-gen top-end RTX 3090.

Well, after testing the RTX 4080, we admit we were left surprised in some cases, as the $1,199 option from NVIDIA today easily outpaces the last-gen’s $1,499 RTX 3090 – and even manages to draw less power.

With a name like RTX 4080, it might be easy to assume that it’d be a dual-slot design like every other X080 GPU – leaving the top-end X090 cards to go three-slot. That assumption would be wrong, as the RTX 4080 is about the same size as the RTX 3090. The larger size means that smaller mid-towers will not be able to hold the card, but if you can fit it in, it will deliver strong performance and decently quiet operation (and temperatures) given its large cooler.

You might recall that the Ada Lovelace generation launched with three models. Since that announcement, NVIDIA unlaunched the RTX 4080 12GB, and is expected to relaunch it later as a different model. That leaves us with two total models gracing the current Ada Lovelace lineup:

NVIDIA’s GeForce Gaming & Creator GPU Lineup

| GPU | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
| --- | --- | --- | --- | --- | --- | --- | --- |
| RTX 4090 | 16,384 | 2,520 | 82.6 TFLOPS | 24GB GDDR6X | 1,008 GB/s | 450W | $1,599 |
| RTX 4080 | 9,728 | 2,510 | 48.8 TFLOPS | 16GB GDDR6X | 717 GB/s | 320W | $1,199 |
| RTX 3090 Ti | 10,752 | 1,860 | 40 TFLOPS | 24GB GDDR6X | 1,008 GB/s | 450W | $1,999 |
| RTX 3090 | 10,496 | 1,700 | 35.6 TFLOPS | 24GB GDDR6X | 936 GB/s | 350W | $1,499 |
| RTX 3080 Ti | 10,240 | 1,670 | 34.1 TFLOPS | 12GB GDDR6X | 912 GB/s | 350W | $1,199 |
| RTX 3080 | 8,704 | 1,710 | 29.7 TFLOPS | 10GB GDDR6X | 760 GB/s | 320W | $699 |
| RTX 3070 Ti | 6,144 | 1,770 | 21.7 TFLOPS | 8GB GDDR6X | 608 GB/s | 290W | $599 |
| RTX 3070 | 5,888 | 1,730 | 20.4 TFLOPS | 8GB GDDR6 | 448 GB/s | 220W | $499 |
| RTX 3060 Ti | 4,864 | 1,670 | 16.2 TFLOPS | 8GB GDDR6 | 448 GB/s | 200W | $399 |
| RTX 3060 | 3,584 | 1,780 | 12.7 TFLOPS | 12GB GDDR6 | 360 GB/s | 170W | $329 |
| RTX 3050 | 2,560 | 1,780 | 9.0 TFLOPS | 8GB GDDR6 | 224 GB/s | 130W | $249 |

Notes: RTX 3000 = Ampere; RTX 4000 = Ada Lovelace.

How NVIDIA has priced this generation so far is worthy of discussion, because many could assume that the company has structured things to nudge buyers toward the top chip. The RTX 4090 costs 33% more than the RTX 4080, but includes 50% more memory, 41% more bandwidth, and 68% more cores.
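Those percentages can be reproduced from the spec table with some quick arithmetic. Here is a minimal Python sketch; the dictionary layout and the `pct_more` helper are our own illustrative choices, and the figures are simply copied from the table.

```python
# Spec figures copied from the lineup table in this article.
specs = {
    "RTX 4090": {"price_usd": 1599, "memory_gb": 24, "bandwidth_gbs": 1008, "cores": 16384},
    "RTX 4080": {"price_usd": 1199, "memory_gb": 16, "bandwidth_gbs": 717, "cores": 9728},
}


def pct_more(a, b):
    """Return how much larger a is than b, as a percentage."""
    return (a / b - 1) * 100


# Percentage advantage of the RTX 4090 over the RTX 4080 for each spec,
# rounded to the nearest whole percent.
deltas = {
    key: round(pct_more(specs["RTX 4090"][key], specs["RTX 4080"][key]))
    for key in specs["RTX 4080"]
}

for key, value in deltas.items():
    print(f"{key}: +{value}%")
```

The price gap is the smallest of the four figures, which is the imbalance the pricing discussion is pointing at.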

NVIDIA could have no doubt launched the RTX 4080 at $999 and given AMD’s upcoming Radeon RX 7900 XTX a serious fight at the same price-point, because the price gap between both top GPUs in the current GeForce lineup just doesn’t follow what we’ve seen in the past. If AMD’s top next-gen card is competitive, we might see RTX 4080 pricing sway a bit.

That all said, those pricing concerns apply from an overall standpoint. In rendering, even if NVIDIA commands a notable price premium, chances are that the performance per dollar is still going to be in the green team’s favor – something our many rendering performance graphs here will highlight.

We’ll leave more discussion to the conclusion. For now, here’s the test rig we used for all of our benchmarking, followed by the test results (we’ll post another article looking at encoding, photogrammetry, math, and viewport performance soon).

Techgage Workstation Test System

| Component | Details |
| --- | --- |
| Processor | AMD Ryzen 9 5950X (16-core; 3.4GHz) |
| Motherboard | ASRock X570 TAICHI (EFI: P4.80 03/02/2022) |
| Memory | Corsair Vengeance RGB Pro (CMW32GX4M4C3200C16) 8GB x 4<br>Operates at DDR4-3200 16-18-18 (1.35V) |
| AMD Graphics | AMD Radeon RX 6900 XT (16GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6800 XT (16GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6800 (16GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6700 XT (12GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6600 XT (8GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6600 (8GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6500 XT (4GB; Adrenalin 22.9.1) |
| Intel Graphics | Intel Arc A770 (16GB; Arc 31.0.101.3435)<br>Intel Arc A750 (8GB; Arc 31.0.101.3435)<br>Intel Arc A380 (6GB; Arc 31.0.101.3430) |
| NVIDIA Graphics | NVIDIA GeForce RTX 4090 (24GB; GeForce 521.90)<br>NVIDIA GeForce RTX 4080 (16GB; GeForce 526.72)<br>NVIDIA GeForce RTX 3090 (24GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3080 Ti (12GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3080 (10GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3070 Ti (8GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3070 (8GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3060 Ti (8GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3060 (12GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3050 (8GB; GeForce 516.94) |
| Audio | Onboard |
| Storage | Samsung 500GB SSD (SATA) (x3) |
| Power Supply | Corsair RM850X |
| Chassis | Fractal Design Define C Mid-Tower |
| Cooling | Corsair Hydro H100i PRO RGB 240mm AIO |
| Et cetera | Windows 11 Pro build 22000 (22H1)<br>AMD chipset driver 4.08.09.2337 |


Our look at rendering performance for NVIDIA’s GeForce RTX 4080 will be revolving around six software solutions – or seven, if you count Blender as two, as both Cycles and Eevee are tested. Unfortunately, a bug with the review GPU driver prevented Luxion’s KeyShot from being tested, so when a new driver comes out, we’ll get that tested and added in.

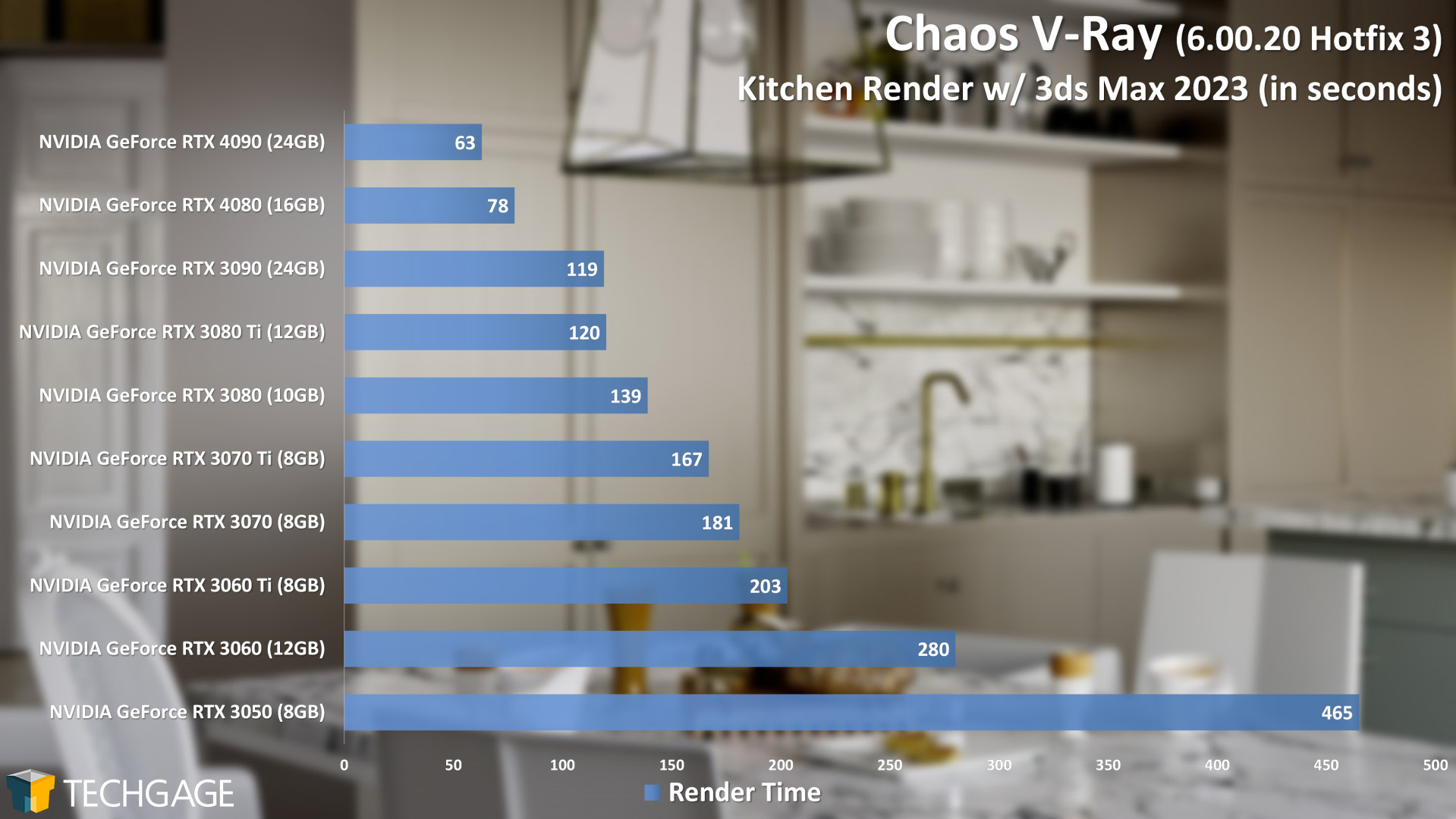

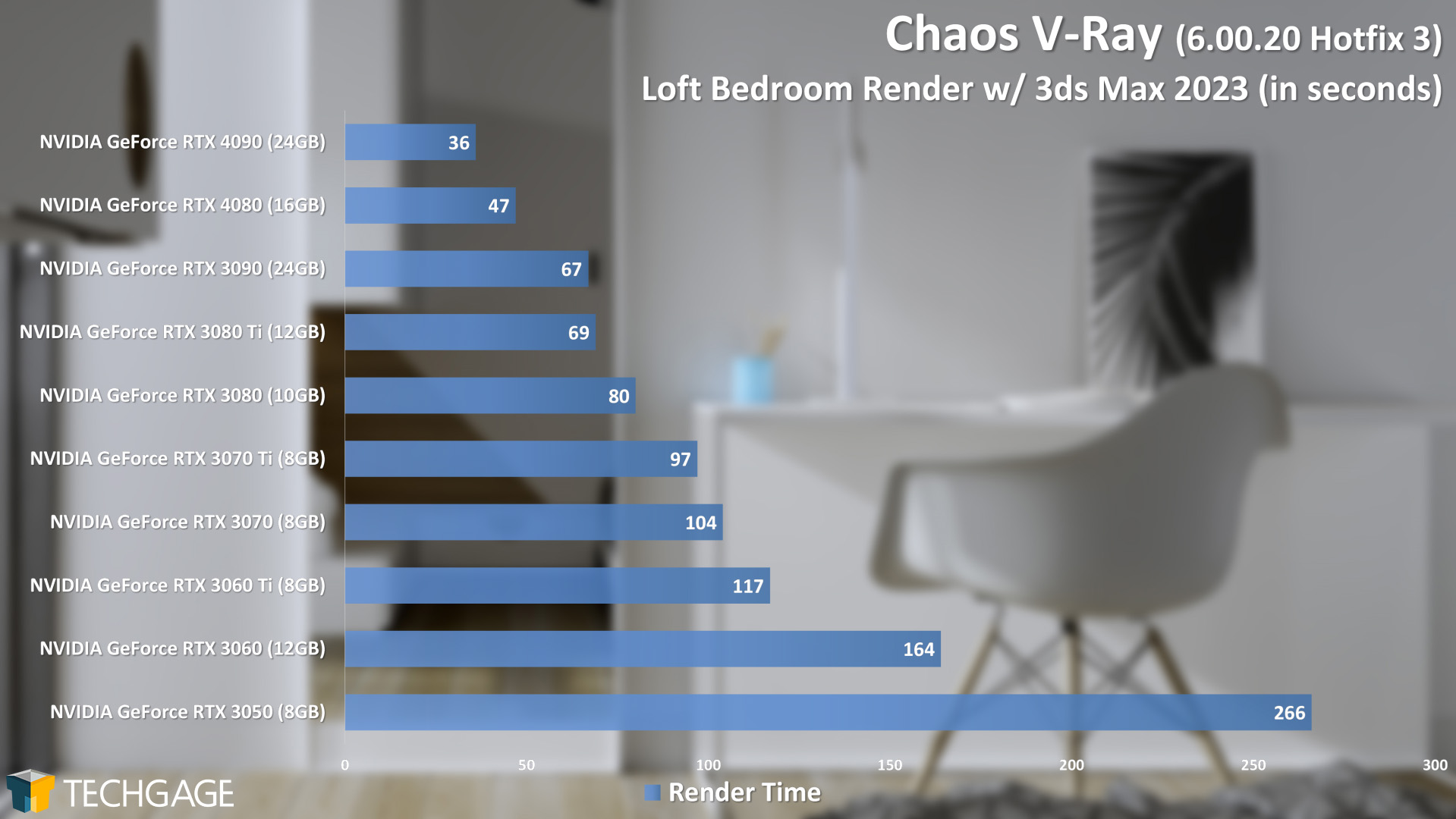

As we did with the 4090 launch article, let’s start with V-Ray:

From the get-go, the RTX 4080 makes it clear that it’s strong at rendering. On one hand, it doesn’t fall too far behind the RTX 4090, but on the other, it soars ahead of a top-end option from the Ampere generation. We don’t have an RTX 3090 Ti to include results for, but its lead over the RTX 3090 wouldn’t be enough to change much in this overall picture.

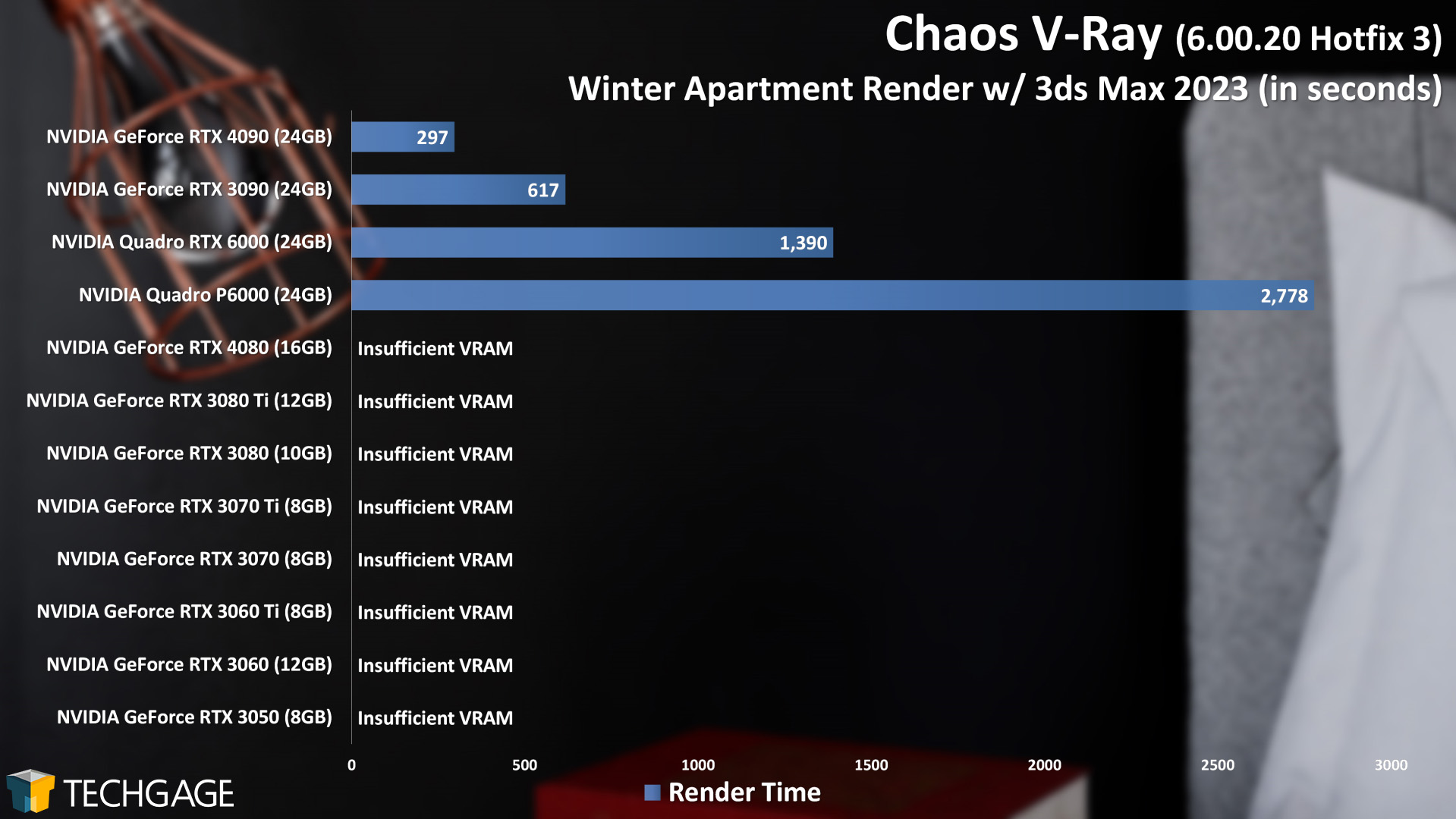

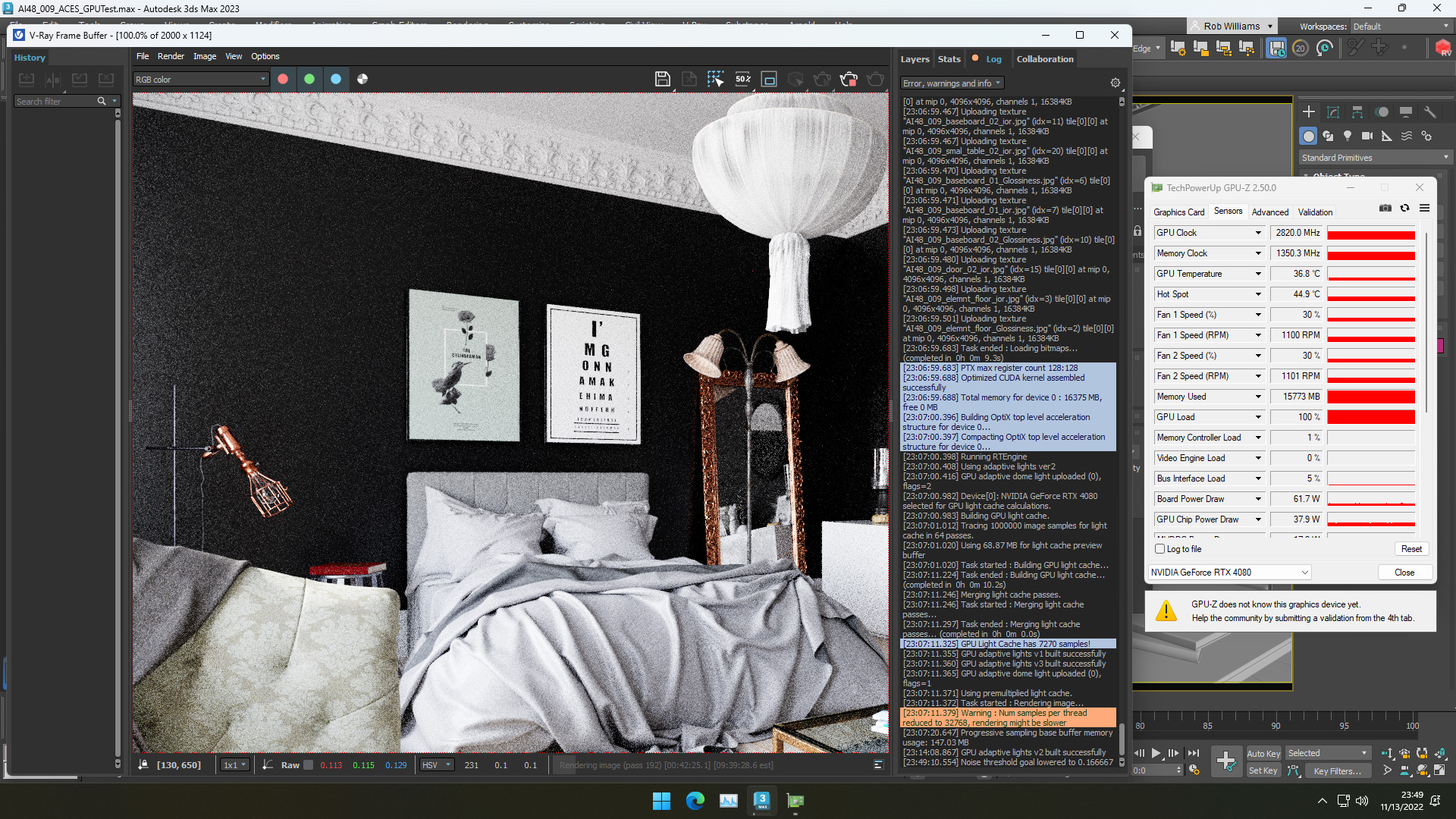

In our RTX 4090 launch article, we took a look at the demanding Winter Apartment project across multiple generations. This is a project that requires more than 16GB of VRAM, but we still couldn’t help but want to see how a GPU of the current Ada Lovelace generation without enough VRAM would actually behave when trying to render the project. Welp:

This seems like a non-result, but it’s an interesting one to us for the fact that the project did actually begin to render – it just would have taken ages to complete. We assume that’s because of the limited frame buffer, and if there were a few gigs more on tap, the entire render would have been more efficient and delivered a result that would slide in nicely.

We say that this project requires more than 16GB of memory, but it may very well require closer to 20GB. Ignoring the 24GB SKUs, the top previous-gen NVIDIA model had 12GB of VRAM, and every GPU from that lineup aside from the RTX 3090 failed the Winter Apartment render immediately. The RTX 4080 actually did try to do the work, but its maxed-out memory held it back:

In the above shot, you can look at the GPU-Z window to see full GPU utilization, as well as maxed-out VRAM. To give a clear idea of how inefficient this project was rendering: the RTX 4090 took 297 seconds to clear it, whereas our run implied that the RTX 4080 would take close to 10 hours.
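To put that gap in perspective, a quick back-of-the-envelope calculation helps. This is only a rough sketch: the RTX 4090 time is our measured result, while the RTX 4080 figure is the "close to 10 hours" projection, so the ratio is an estimate.

```python
rtx_4090_seconds = 297            # measured render time quoted in the text
rtx_4080_seconds = 10 * 60 * 60   # "close to 10 hours" is a projection, not a completed run

# How many times longer the VRAM-starved render would have taken.
slowdown = rtx_4080_seconds / rtx_4090_seconds
print(f"Estimated slowdown: ~{slowdown:.0f}x")
```

That works out to a render roughly two orders of magnitude slower, which is far more than the spec differences between the two cards would suggest.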

The lesson? If you are planning to work with truly demanding 3D projects, you’ll want to make sure your frame buffer is not going to hold you back, because clearly, not even 16GB of memory is enough in all cases.
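If you want to keep an eye on VRAM headroom yourself, `nvidia-smi` (installed alongside the NVIDIA driver) can report memory use in CSV form. The sketch below is one possible way to wrap it, assuming `nvidia-smi` is on your PATH; the function names are our own, and the required-memory figure is something you would have to estimate for your own project.

```python
import subprocess

# Ask nvidia-smi for each GPU's name plus used and total memory (in MiB).
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_vram(csv_text):
    """Parse nvidia-smi CSV lines into (name, used_mib, total_mib) tuples."""
    rows = []
    for line in csv_text.strip().splitlines():
        # Split from the right so the two numeric fields are always last.
        name, used, total = [field.strip() for field in line.rsplit(",", 2)]
        rows.append((name, int(used), int(total)))
    return rows


def vram_headroom_gib(required_gib, total_mib):
    """Return spare VRAM in GiB; a negative value means the project will not fit."""
    return total_mib / 1024 - required_gib


def query_vram():
    """Run nvidia-smi and return the parsed rows (requires an NVIDIA GPU and driver)."""
    output = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_vram(output)
```

For example, `vram_headroom_gib(20, 16 * 1024)` returns `-4.0`, matching the situation described here: a project that may need around 20GB on a 16GB card.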

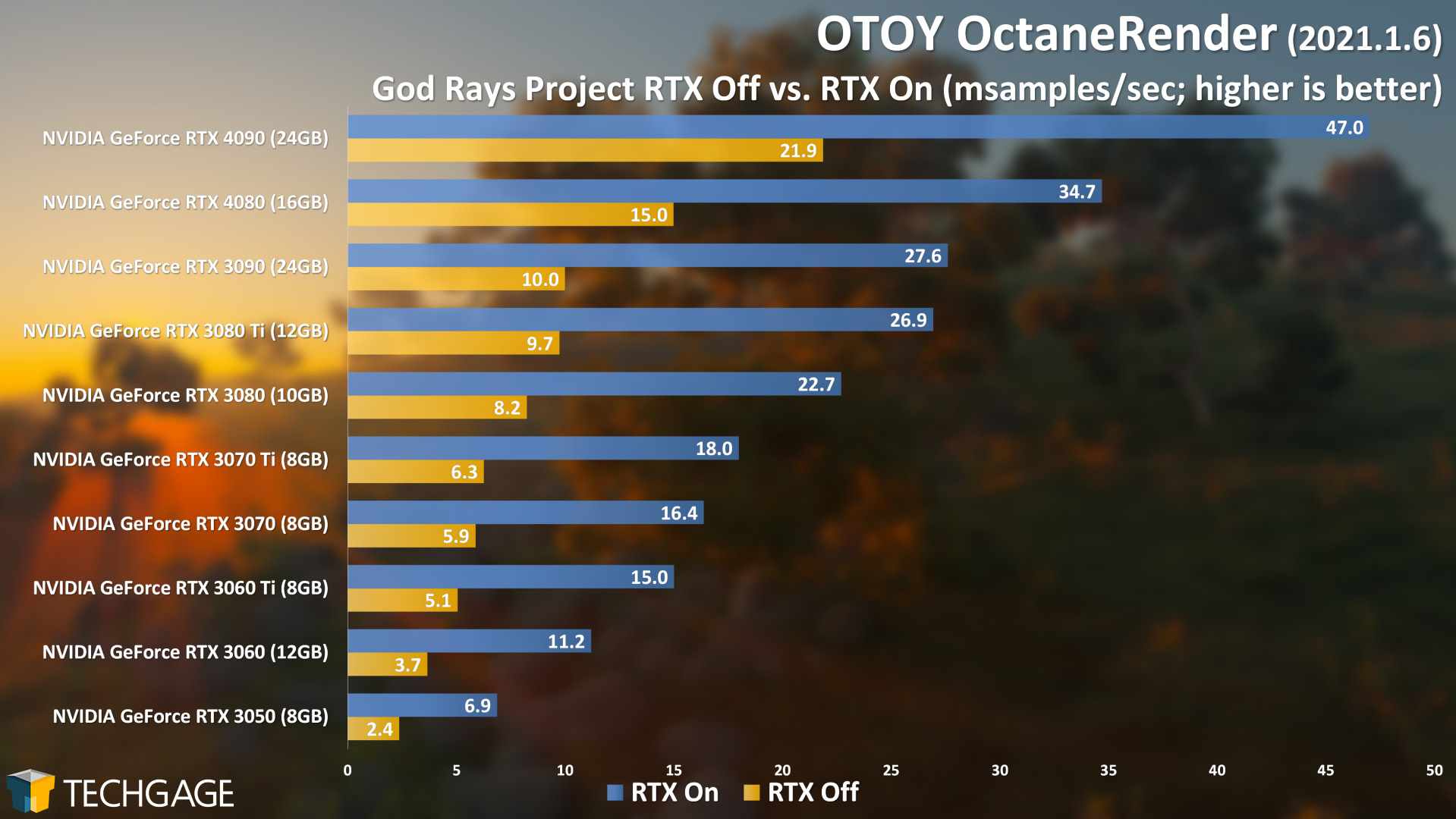

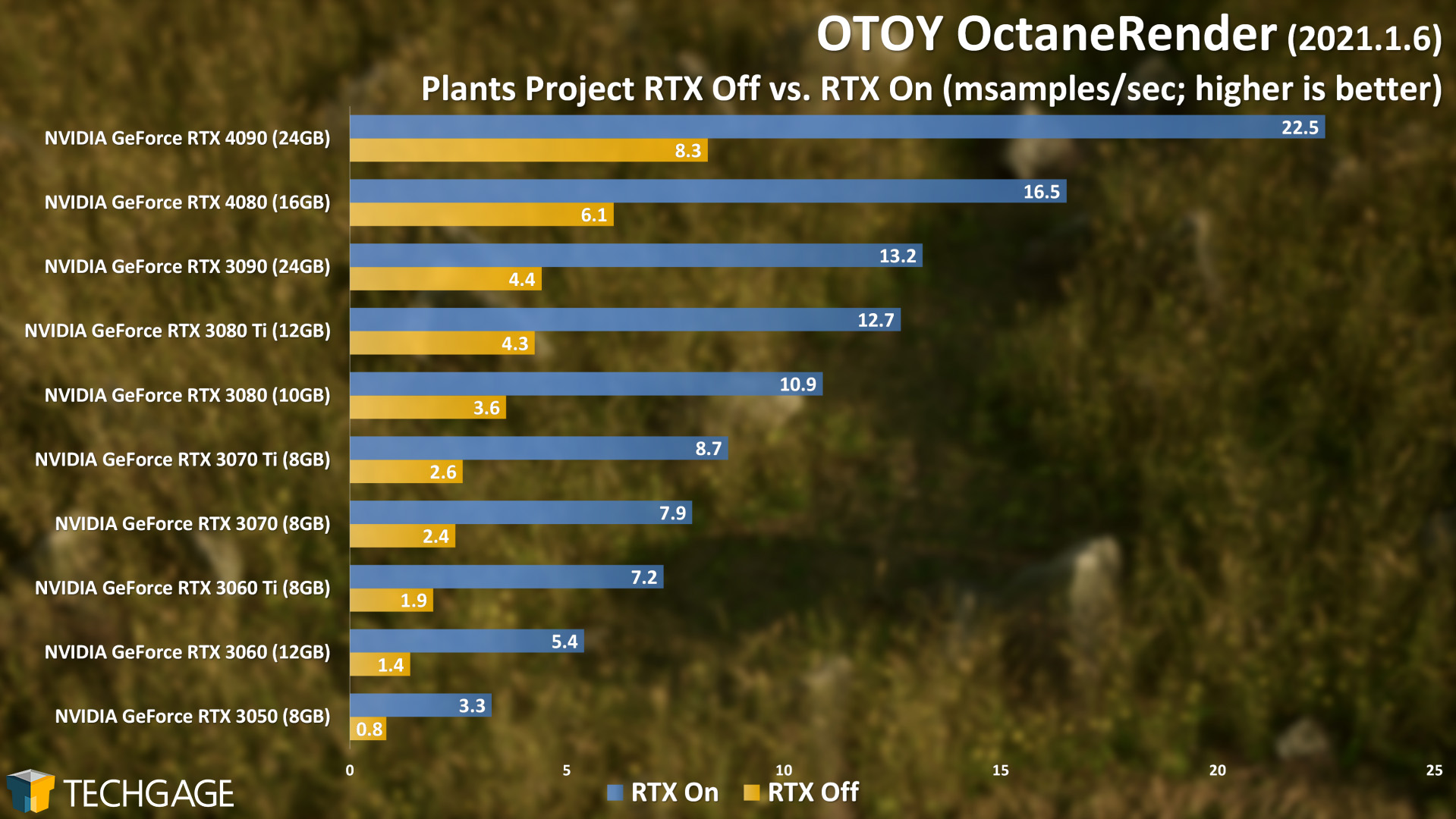

One of our favorite rendering engines to test with, Octane, continues to put the RTX 4080 in a good light:

From a generational stand-point, it’s nice to see strong gains on this gen’s $1,199 RTX 4080 over last-gen’s $1,499 RTX 3090.

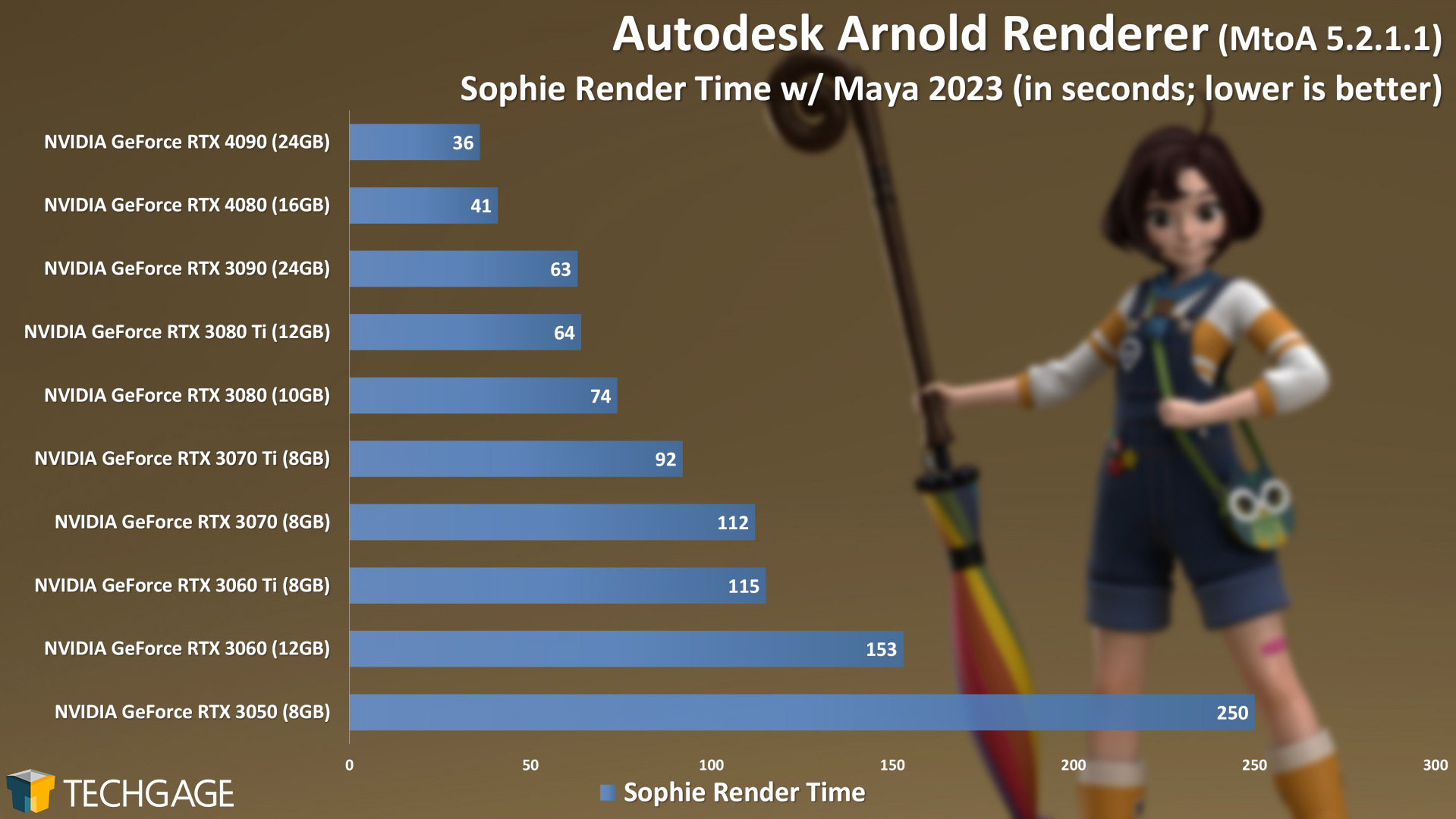

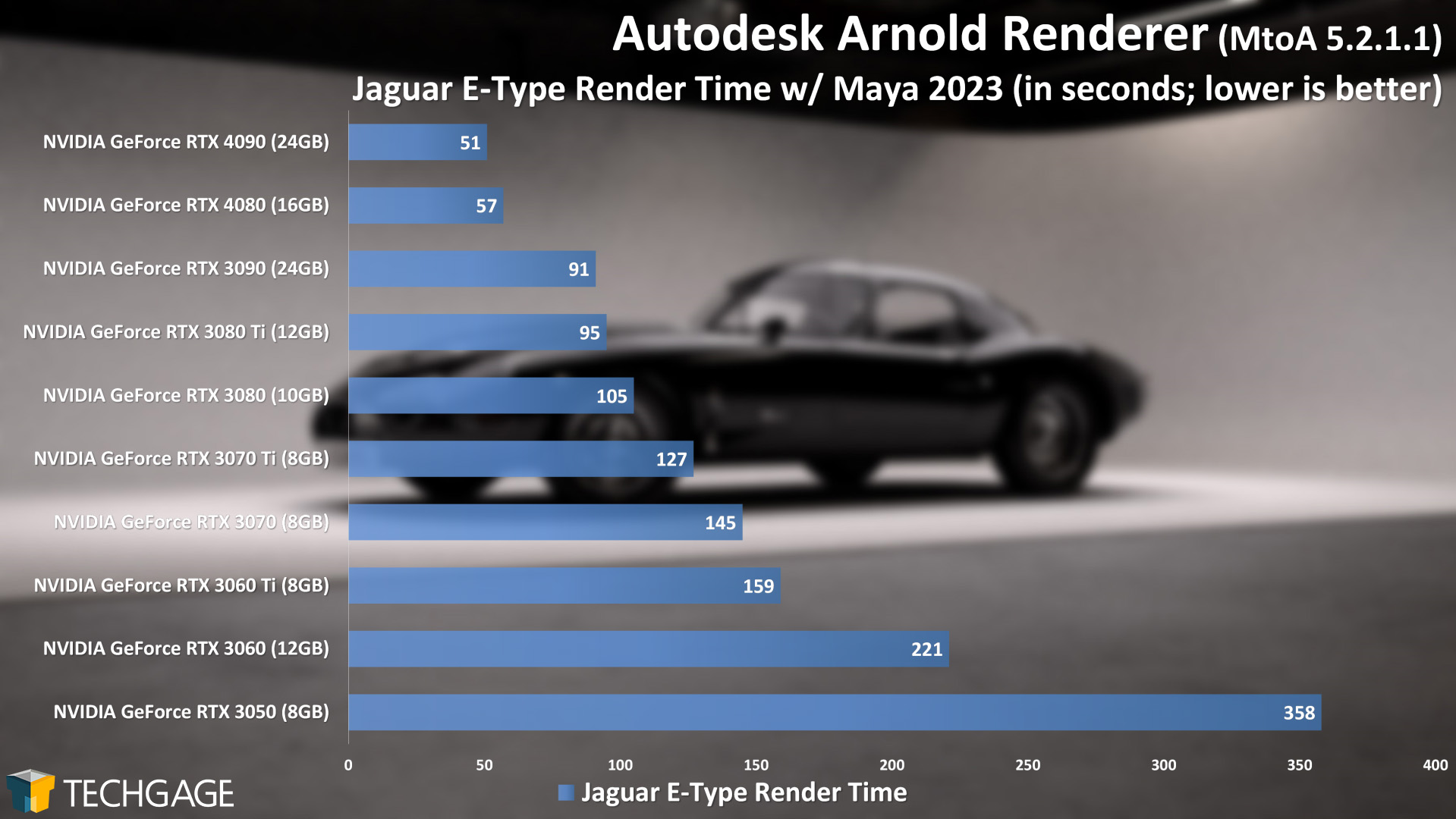

Hey Arnold! How does thee fare?

After seeing notable performance differences between RTX 4080 and RTX 4090 in the previous tests, it’s really interesting to see modest separations here. This performance does make the RTX 4080 look particularly attractive, as despite not placing too far behind the RTX 4090, it still soars ahead of the RTX 3090 and remaining Ampere lineup.

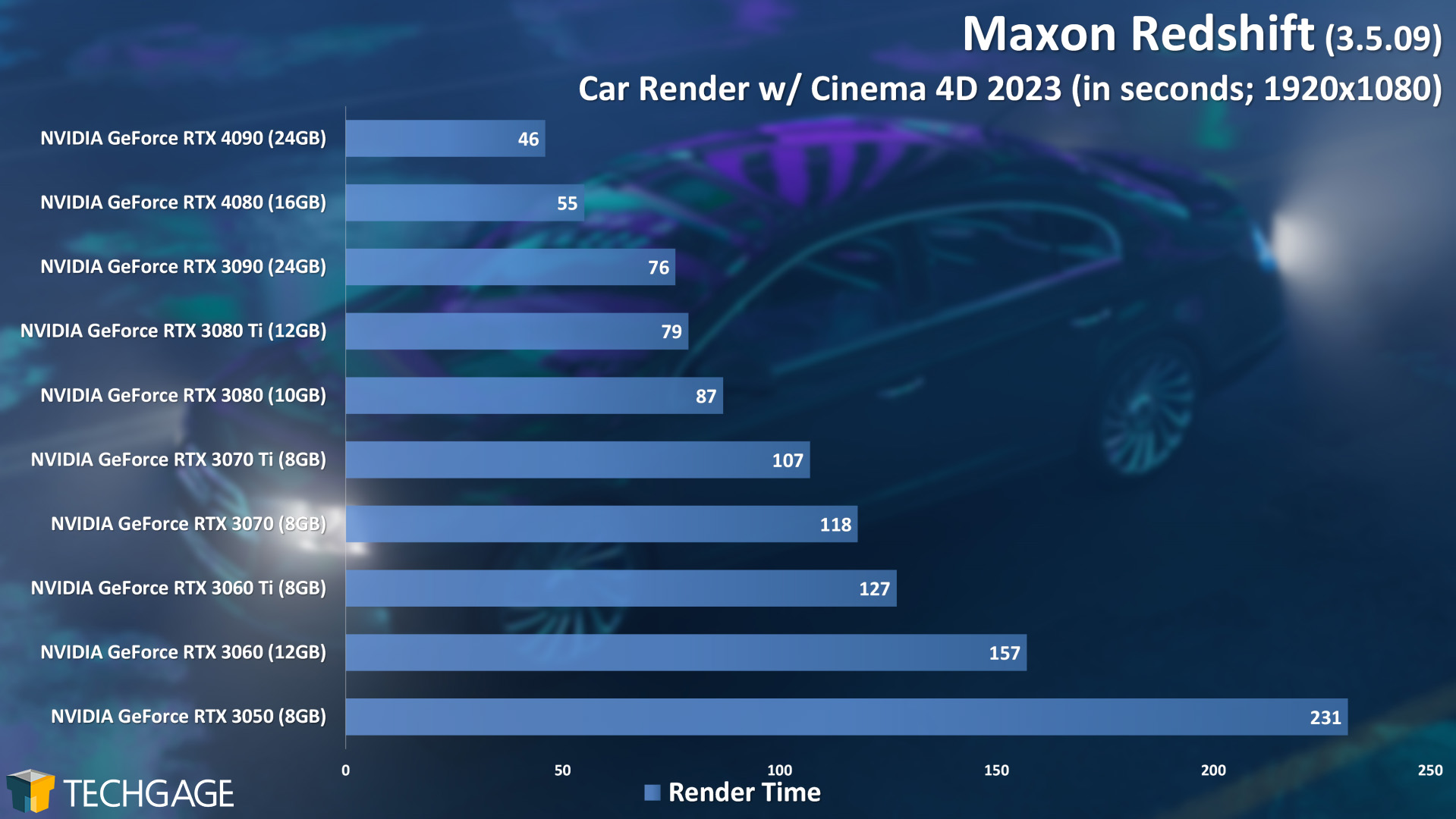

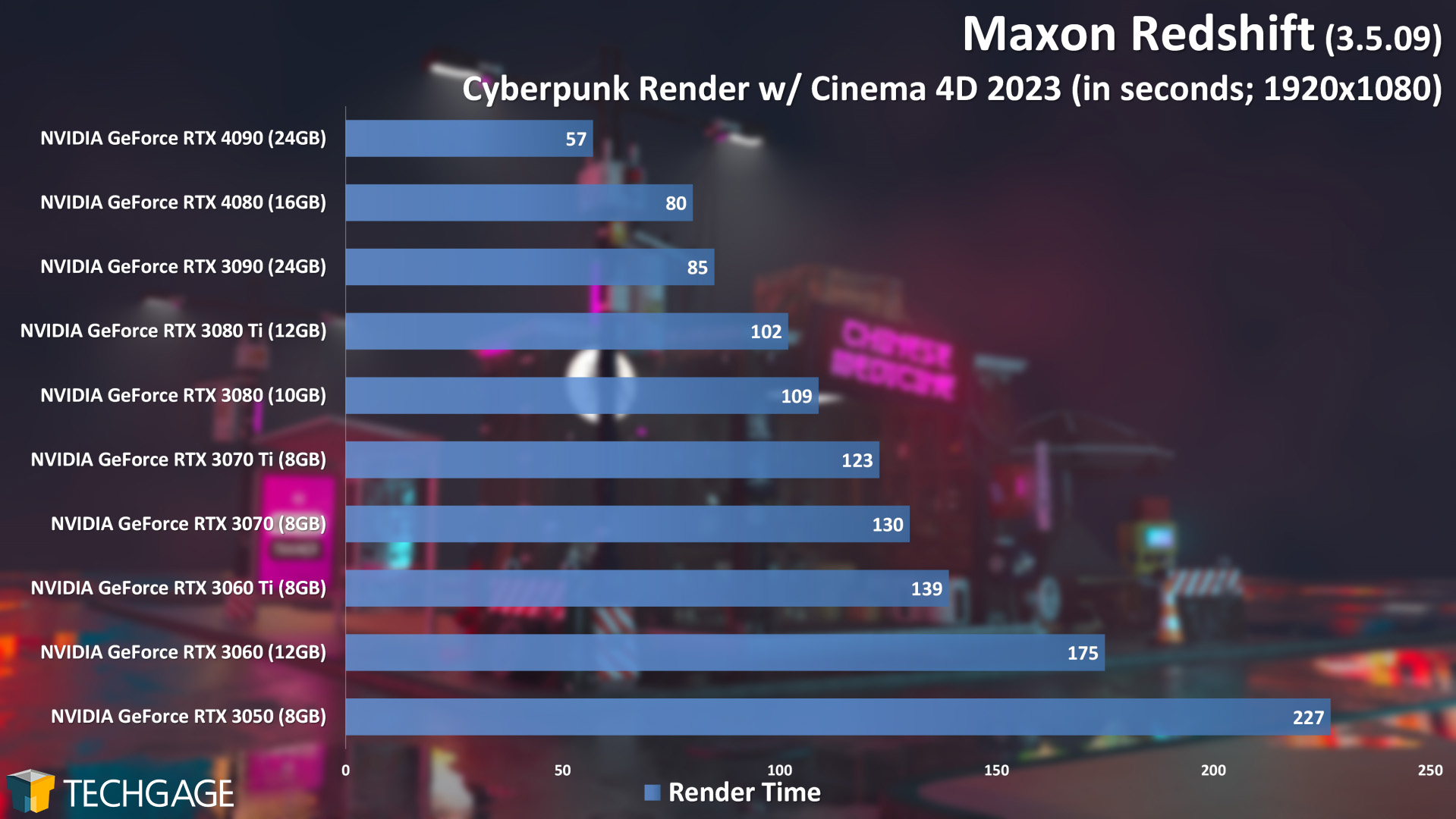

With Maxon’s Redshift, we see similarly modest scaling differences with the simpler Car project, but much wider scaling in Cyberpunk:

We’re not entirely sure why the performance gap between the 4090 and 4080 differs so much across these two tests, but we’ll have to chalk it up to the fact that the Cyberpunk scene is more complex, and thus the beefier GPU handles it more efficiently. We don’t believe the 24GB frame buffer on the 4090 has anything to do with this, as we found both projects to use the same amount of VRAM on the 4080 (about 14GB).

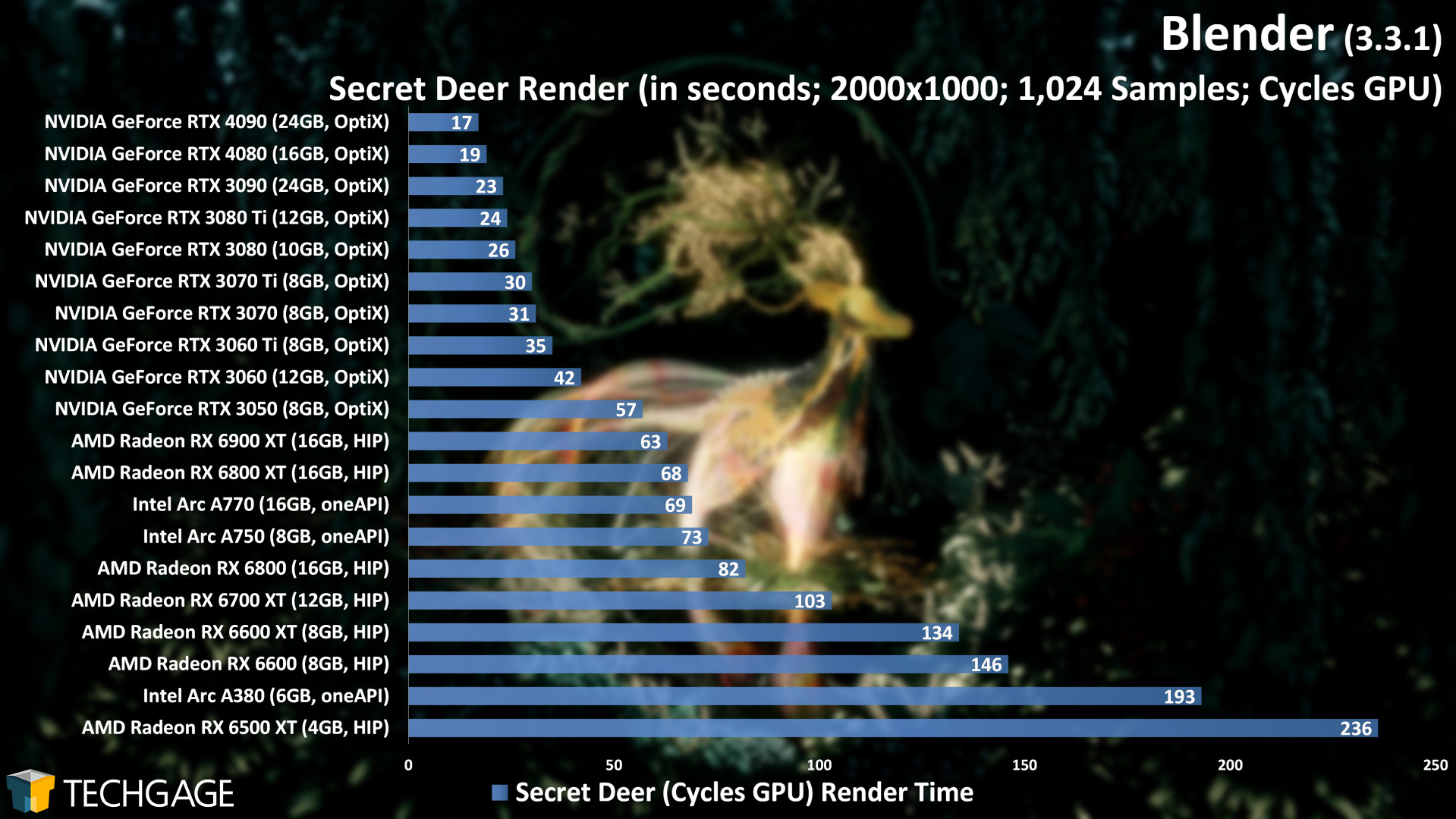

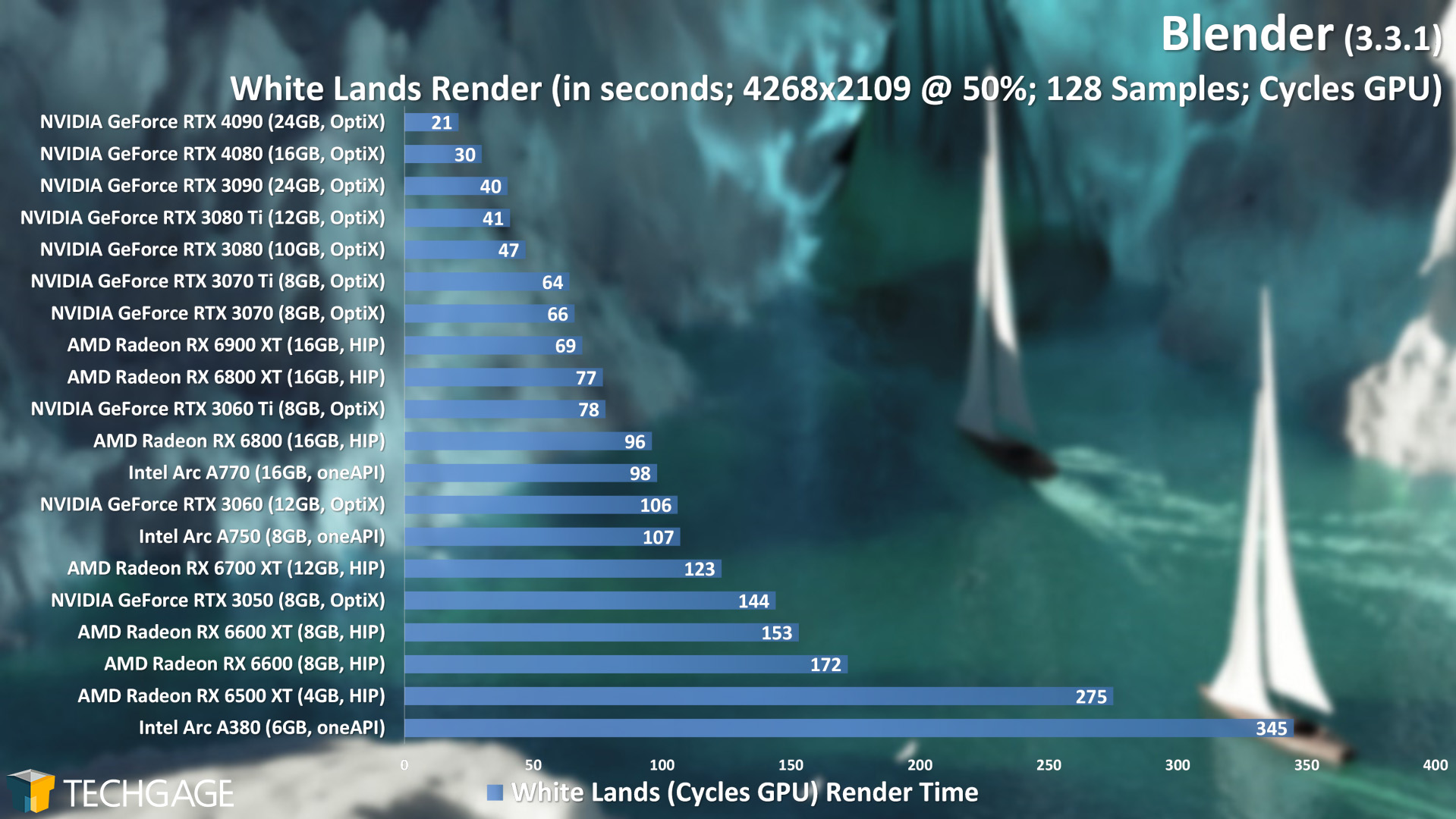

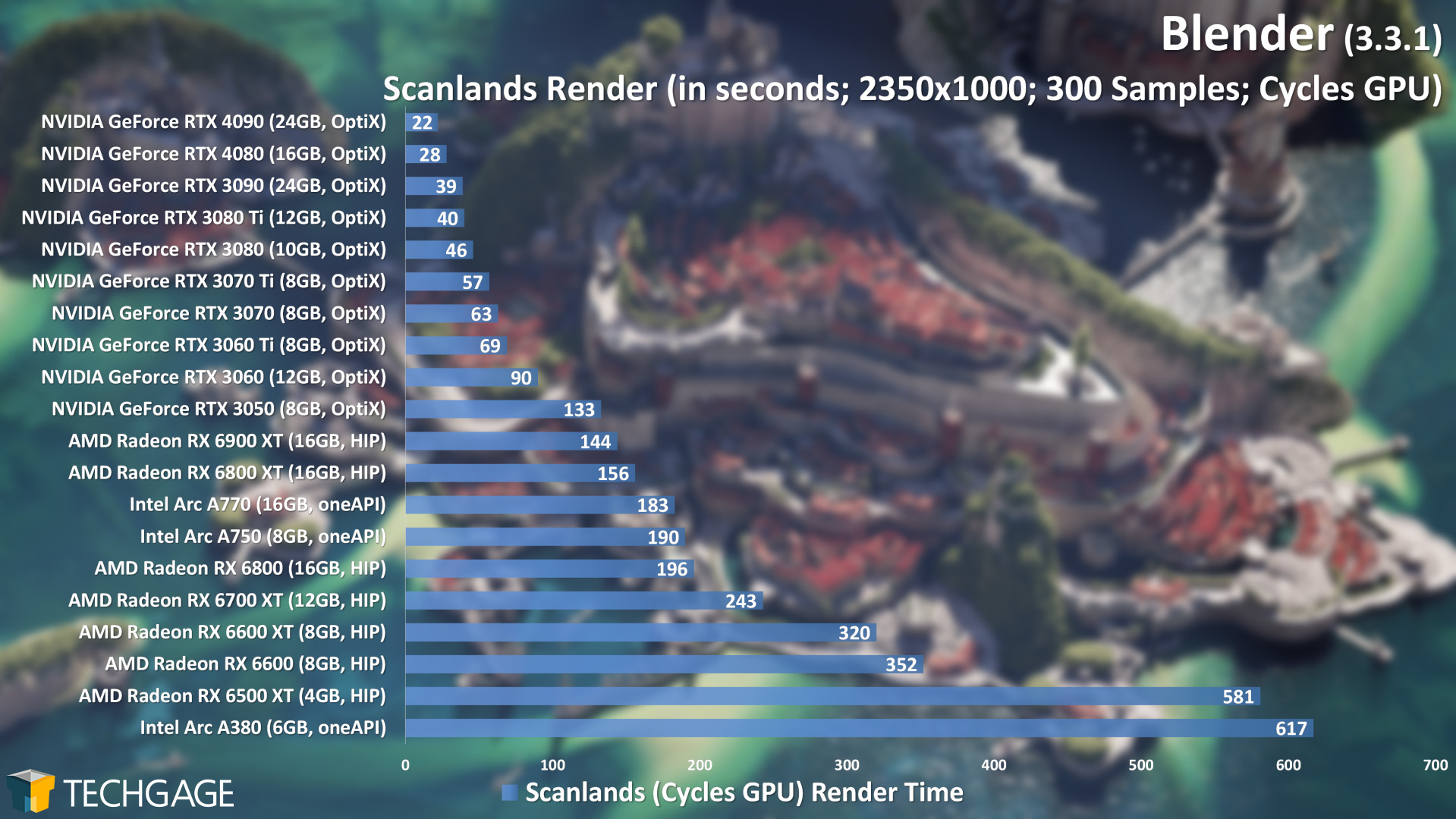

The Blender tests we’re including in our testing here differ a wee bit from those found in the RTX 4090 launch article. Since that article was posted, we published our Blender 3.3 deep-dive, which got rid of the classic BMW and Classroom projects, and replaced them with three modern projects (which are tested as-is, so feel free to test your performance against our numbers).

Let’s kick off our Blender testing with a look at Cycles:

In the quicker-to-render Secret Deer scene, there isn’t much of a gap between the two Ada Lovelace cards, but the other two projects which take longer to render do reveal wider ones – especially White Lands.

Overall, one of the most obvious takeaways here is that NVIDIA’s OptiX is so effective that AMD and Intel currently cannot match its fast render times. Eevee is a bit different, as it doesn’t take advantage of accelerated RT, and thus levels the playing field a bit:

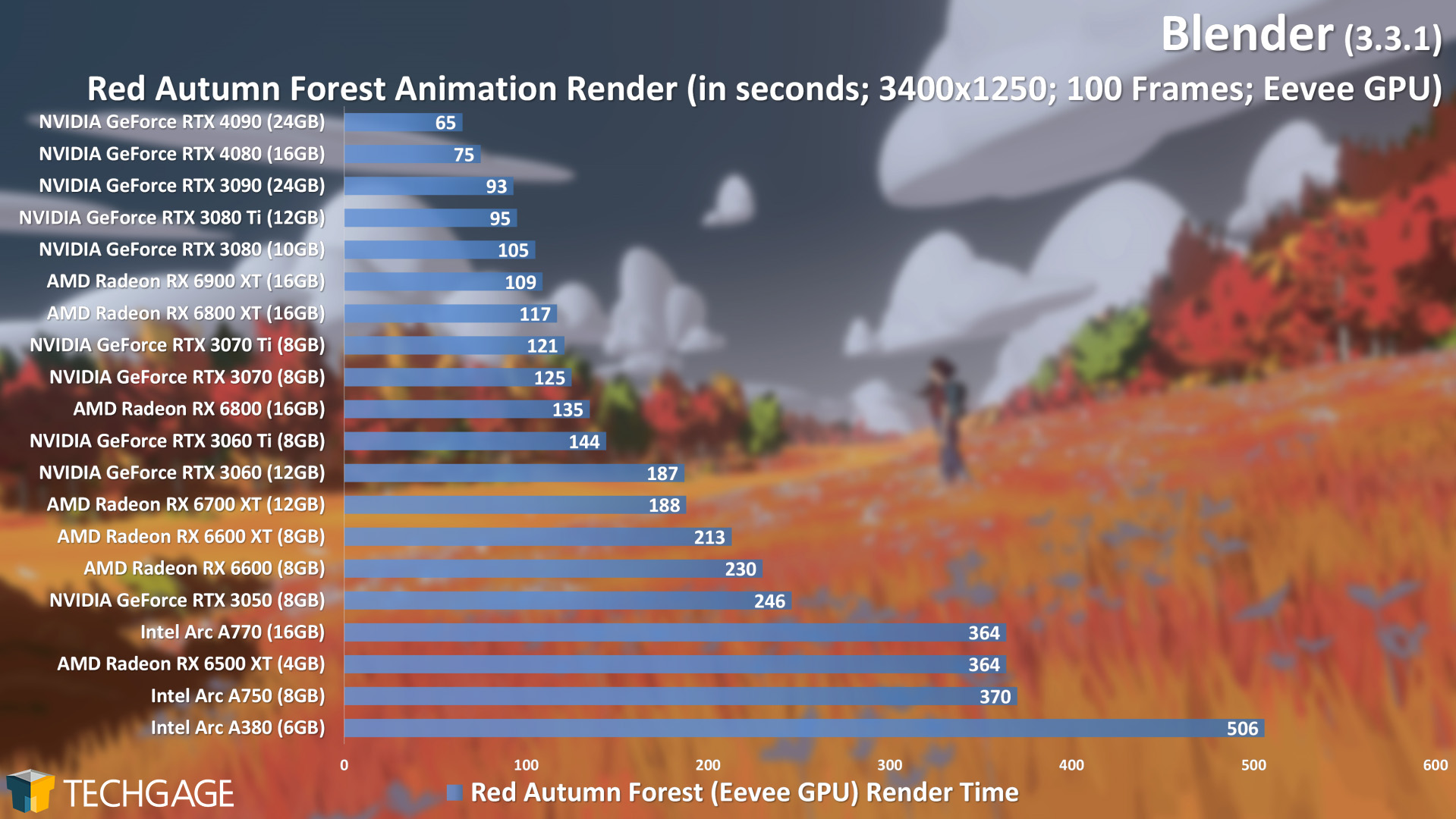

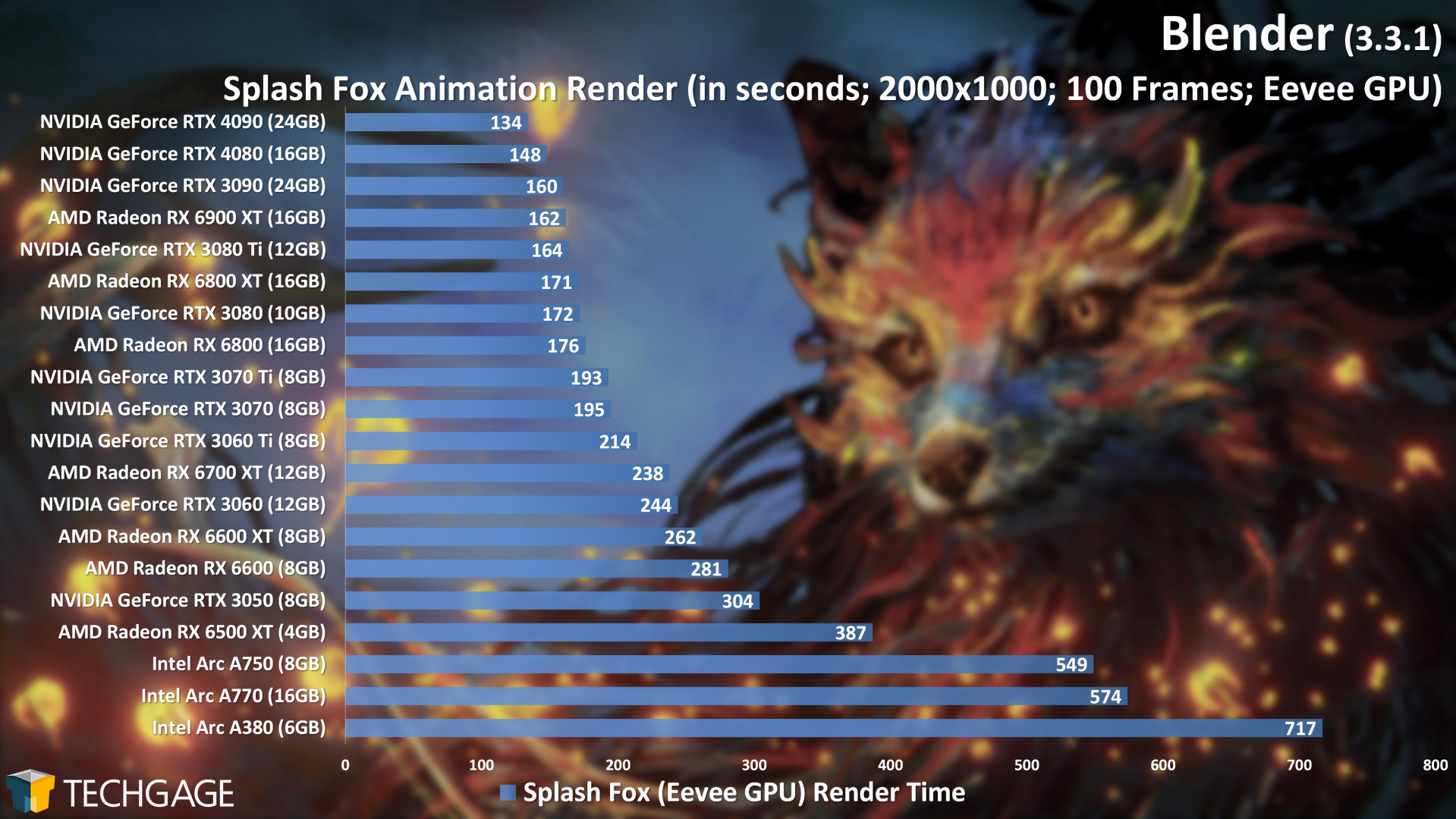

As we saw in the Cycles tests, Ada Lovelace offers huge gains over the Ampere generation, just as Ampere did over Turing. Eevee is more neutral, as stated, so the Ampere to Ada Lovelace generational gains are more modest than we’d expect.

As we’ve covered before, Splash Fox is an interesting project in that there is a lot of CPU work going on in between each frame render. This is an unfortunate case where the CPU intervenes so often, that the resulting scaling isn’t too interesting, but it still represents a real project some will create. Red Autumn Forest is simpler in design, using the CPU very little, resulting in slightly more interesting scaling.

Overall, the Ada Lovelace GPUs top the charts here, but depending on the project design, a last-gen GPU could still serve you very well – either GeForce or Radeon. Unfortunately, Intel’s current Eevee performance leaves a lot to be desired.

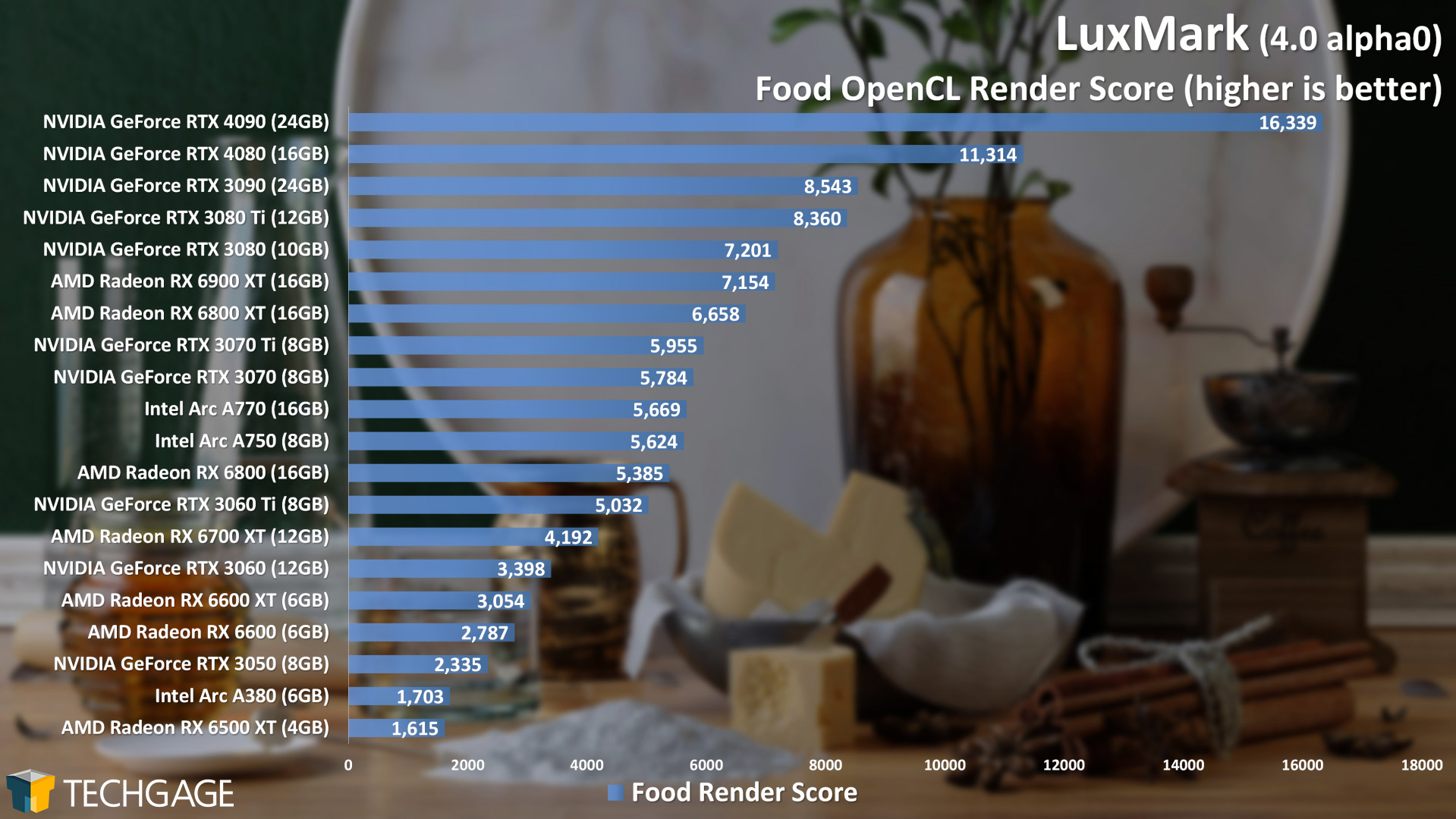

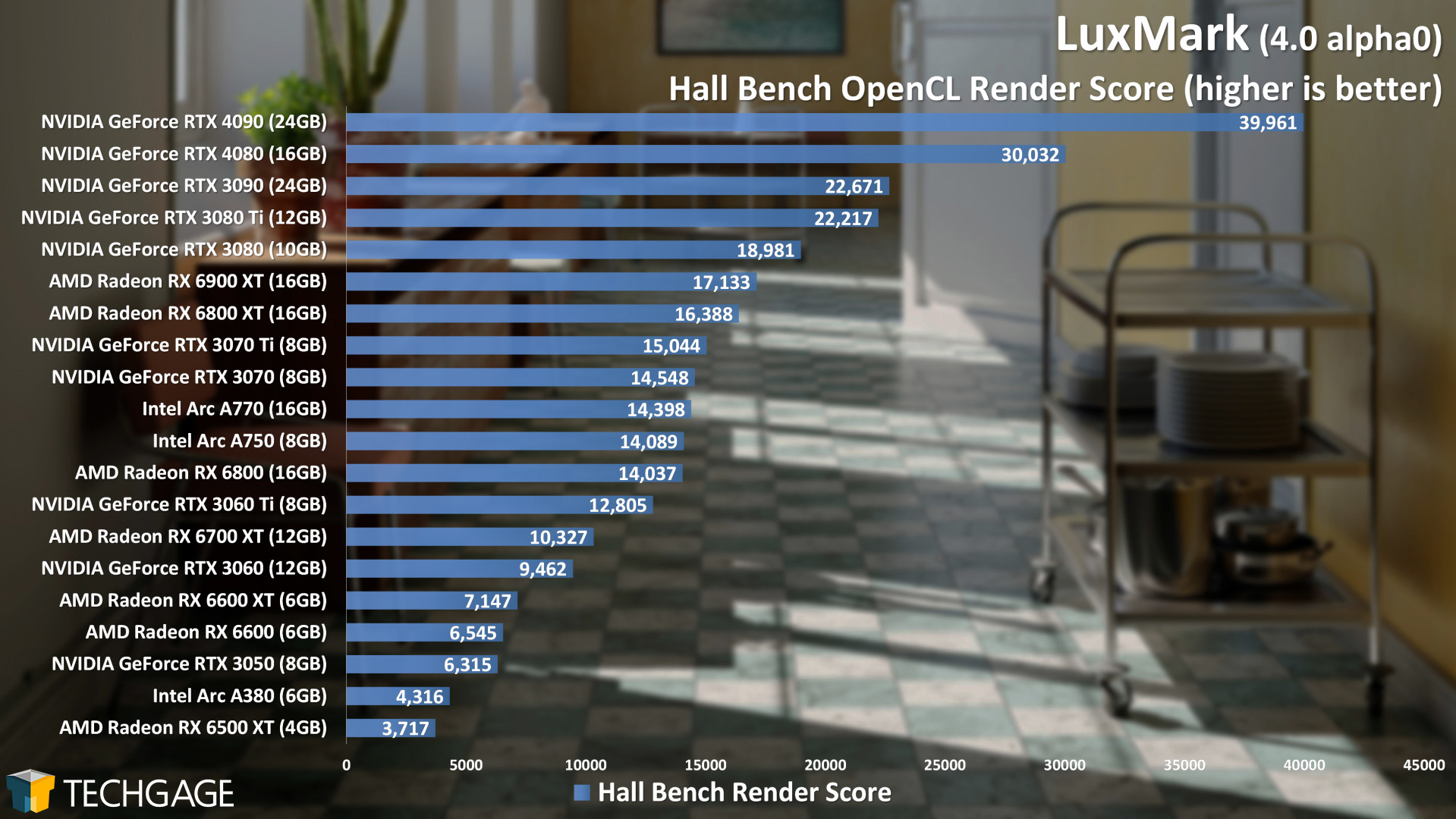

As mentioned earlier, Luxion KeyShot crashes on the RTX 4080 review driver, so to wrap things up here, we’ll take a look at LuxMark – another simple benchmark that you yourself can run easily enough if you want to compare your performance to ours.

When we posted our RTX 4090 launch article, LuxMark was a shining example of its generational performance gains. While the Food project saw a score of 8.5K on the RTX 3090, the RTX 4090 boosted that to a staggering 16.3K – almost double. With the scaled-back RTX 4080, we can still easily see just how much Ada Lovelace has improved rendering performance gen-over-gen, as 4080 again easily soars past the previous flagship, RTX 3090.
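As a rough illustration of what "almost double" means in practice, the two LuxMark Food scores quoted above can be turned into a speedup and a score-per-dollar figure. This is a minimal sketch using the rounded scores from the text and the SRPs from our lineup table; the variable names are our own, and street prices will shift the per-dollar numbers.

```python
# Approximate LuxMark Food scores quoted in the text (rounded to the nearest 100).
luxmark_food = {"RTX 3090": 8500, "RTX 4090": 16300}

# Suggested retail prices from the lineup table.
srp_usd = {"RTX 3090": 1499, "RTX 4090": 1599}

# Generational speedup of the RTX 4090 over the RTX 3090.
speedup = luxmark_food["RTX 4090"] / luxmark_food["RTX 3090"]

# LuxMark points per dollar of SRP for each card.
points_per_dollar = {gpu: luxmark_food[gpu] / srp_usd[gpu] for gpu in luxmark_food}

print(f"Speedup: {speedup:.2f}x")
for gpu, value in points_per_dollar.items():
    print(f"{gpu}: {value:.1f} points per dollar")
```

With these inputs the speedup comes out to about 1.92x, and the score per dollar rises from roughly 5.7 to 10.2 despite the higher SRP.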

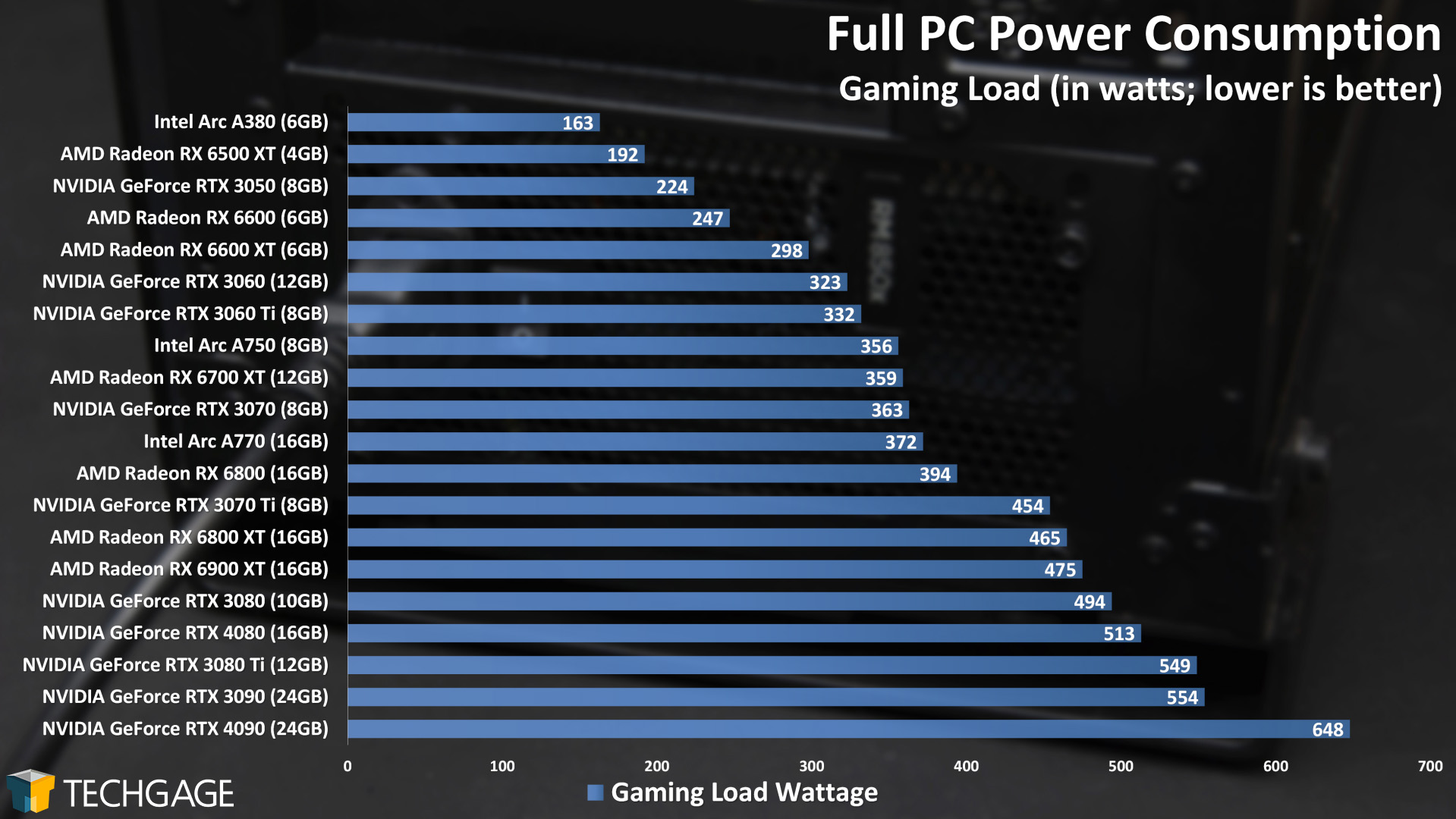

Power Consumption

To test the power consumption of our selected graphics cards, we utilize a Kill-a-Watt hardware device plugged into a power socket, and the entire test PC plugged into it. While this article focuses on a creator angle, gaming tests tend to be more intensive on the overall GPU, thus drawing the most power. So, to test here, we take advantage of UL 3DMark’s Fire Strike 4K (Ultra) test, looped for five minutes before the power usage is monitored.

The on-paper specs show that the RTX 3090 draws more power than the RTX 4080, and our testing here shares that view. That’s nice to see, since 4080 is quite a bit faster at rendering overall than the RTX 3090. Meanwhile, the RTX 4090 is in a league of its own – drawing 135W more than the RTX 4080.
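For context, the measured gap can be set against the on-paper TDPs from our lineup table. The short sketch below does only that subtraction; keep in mind that our readings are whole-system draw at the wall, so they include power supply losses and the rest of the PC, and are not expected to match the TDP gap exactly.

```python
# TDP figures from the lineup table, in watts.
tdp_watts = {"RTX 4090": 450, "RTX 4080": 320, "RTX 3090": 350}

# Whole-system difference between RTX 4090 and RTX 4080 quoted in the text.
measured_gap_4090_vs_4080 = 135

# On-paper differences relative to the RTX 4080.
paper_gap_4090_vs_4080 = tdp_watts["RTX 4090"] - tdp_watts["RTX 4080"]
paper_gap_3090_vs_4080 = tdp_watts["RTX 3090"] - tdp_watts["RTX 4080"]

print(f"RTX 4090 vs 4080: {paper_gap_4090_vs_4080}W on paper, {measured_gap_4090_vs_4080}W measured")
print(f"RTX 3090 vs 4080: {paper_gap_3090_vs_4080}W on paper")
```

The 130W on-paper gap between the two Ada Lovelace cards lines up closely with the 135W we measured at the wall.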

Final Thoughts

This concludes our first look at NVIDIA’s GeForce RTX 4080, and it’s far from being our last. We’ll eventually take a look at the gaming aspect of the card (and the 4090, for that matter), but because creator angles are so scarce in the review game, we wanted to again kick off our coverage with a focus on that.

It might go without saying, but we must proclaim the caveat that all of our opinions thus far on this GPU relate strictly to creator workloads. That said, what we’ve seen so far is really impressive – at least with regards to rendering.

As mentioned above, we’re kicking off with an exclusive rendering look for now, but we’ve already tested the GPU in many other tests, and just need to put words to paper. We can say right now that rendering is the one angle that will show such a dramatic uptick in performance (ignoring gaming); most encode and photogrammetry test improvements are modest, which is pretty well expected, since they don’t utilize the GPU like a render does. Viewport and math tests will make that follow-up look pretty alluring, though.

It’s still a little hard to believe that a $1,000 top-end GPU seems to be the new normal. The GTX 1080 Ti was an amazing GPU when it launched, and little did we realize that its $699 price tag would be so drool-worthy all this time later. The RTX 2080 Ti released at $1,199, the RTX 3090 at $1,499, and now, the RTX 4090 is $1,599.

We covered it a bit earlier, but the pricing of this current Ada Lovelace generation requires some digging into. The RTX 4090 costs 33% more than the RTX 4080, but its overall specs far exceed that 33%. Usually the opposite is true, and because it’s not in this case, it’s as though NVIDIA is banking on most people simply upgrading to the top-end chip.

As we saw in our rendering tests, though, the differences between the two Ada Lovelace GPUs are not quite as stark as you might imagine. Depending on the render engine, the gaps can either be really notable, or truly modest. In Arnold and Redshift in particular, the performance deltas were uninteresting, but they were the stark opposite in V-Ray, Octane, Blender, and LuxMark. Once a new GPU driver releases that fixes a Luxion KeyShot issue, we’ll get that solution tested, and slipped in here.

Overall, it’s not really too difficult to conclude on a GPU like the RTX 4080. At $1,199, it’s not inexpensive, but if you use it in the right workloads, the performance leaps over the previous generation can be huge. The fact that the current-gen $1,199 GPU is much faster overall than the last-gen $1,499 RTX 3090 is a good sign, although we still wish that given the top-end performance gap, the RTX 4080 had even more attractive pricing – but if you were to talk to the company over a marketplace website, it’d surely say, “No bartering. I know what I have.”

We’re not sure where the RTX 4080 stands overall in gaming, but in rendering, it follows in the footsteps of the RTX 4090 and can only be summed up as an absolute screamer. Both Ada Lovelace GPUs top the charts, which makes us really eager to see what AMD’s next-gen RDNA GPUs can muster. At least with weaker rendering competition, we can still relish the fact that NVIDIA continues to push things forward, and continues to make our jaws drop after pushing the “Render” button.
