NVIDIA GeForce GTX 680 Preview

NVIDIA’s GeForce GTX 680 is here. Based on Kepler, it’s an interesting beast. It features a reworked architecture, is produced on a 28nm process, introduces things like GPU Boost, Adaptive VSync and TXAA, has a redesigned cooler, and perhaps most important of all, aims to perform better than the competition.

Page 1 – Introduction

NVIDIA today launches its long-awaited Kepler architecture with the GeForce GTX 680. For the past couple of weeks, we’ve been working towards posting a robust launch article on time, but due to various problems that have plagued us, we’re able to post just a small preview for now. You can expect our full review, with much more detail, on Monday.

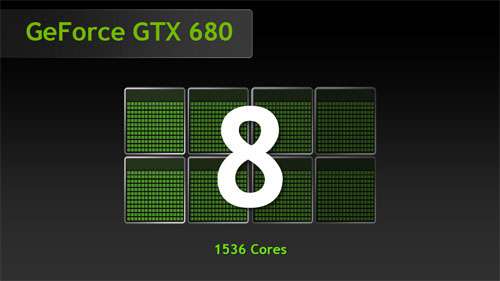

Without wasting time, let’s get to the good stuff. The GTX 680 is NVIDIA’s first card built on a 28nm process, and also its first to use a PCI-E 3.0 bus (rather insignificant at this point in time). The card features 1,536 CUDA cores (3x the GTX 580’s count), a 1,006MHz core clock and 2GB of 6Gbit/s GDDR5.

None of that is too interesting, however. What is interesting is that the GTX 680 ships with a 195W TDP and dual 6-pin power connectors. That’s right – no 8-pin needed here, a fact I am sure NVIDIA will love to flaunt. I’ll talk more about features in a moment, but before that, feel free to check out the card in all of its glory:

If there’s one thing we’re told every single time a new GPU architecture launches, it’s that the cooler has been redesigned and is the highest-performing and quietest to date. The cooler with the GTX 680 is no exception, and NVIDIA is confident that even at max load, it’ll be much quieter than the competition.

Since this is more of a preview than a review, I’m going to barrel-roll through some other niceties that NVIDIA brings with its Kepler architecture. I’ll be going into much more detail next week, but am able to answer questions you’re dying to throw at me before then.

With its Kepler architecture, NVIDIA “fixes” something that has bugged me for a while: the inability to run three monitors off of a single GPU. In fact, the company has gone one step further by offering support for up to four monitors (a typical configuration might be 3×1 with another monitor up top, center). Given just how powerful today’s GPUs are, it’s nice to have the option to stick to just one for multi-monitor gaming.

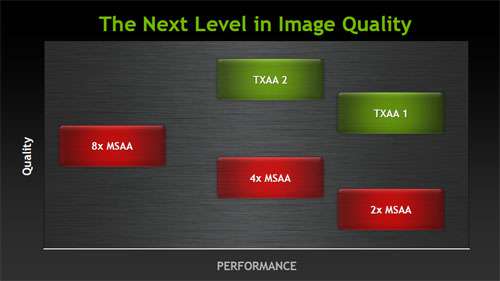

At a press conference held in San Francisco earlier this month, NVIDIA showed off everything that Kepler brings, with one of the most touted features being TXAA – yet another new anti-aliasing option. With it, the company claims that it can achieve better AA results than MSAA with improved performance. There are two modes, TXAA1 and TXAA2, where the former matches or surpasses 8x MSAA quality but offers improved performance, and the latter results in even better quality than 8x MSAA at far less cost. The following image sums that up:

To put TXAA into perspective, NVIDIA brought Epic Games VP Mark Rein on stage to give an example. After the GTX 580 launch, Epic released a video of its “Samaritan” demo, arguably the most technologically impressive demo to date. At that time, three GTX 580s were required to get the quality the company wanted. But with the help of architecture improvements along with TXAA, the same demo can be run on a single GTX 680, at the same quality. Ideally, we’d receive access to the demo to test this for ourselves, but for now we’ll just have to take NVIDIA’s and Epic’s word for it.

In the previous generation, each “SM” module consisted of 32 cores and the control logic. With the GTX 680, NVIDIA introduces the SMX, which packs 192 cores into each module and, according to NVIDIA, delivers a 2x increase in performance per watt. Eight of these modules can be found in the GTX 680, for a total of 1,536 cores.
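The core counts above are easy to sanity-check. The short sketch below multiplies out the SMX figures and compares the result to the GTX 580. The GTX 580’s 512-core total is not stated in this preview; it is an assumption brought in from that card’s published specifications, and it is consistent with the “3x” figure quoted earlier.

```python
# Figures quoted in the article for the GTX 680.
CORES_PER_SMX = 192
SMX_COUNT = 8

# Assumption: the GTX 580's core count is not given in the article;
# 512 comes from that card's published specifications.
GTX_580_CORES = 512
CORES_PER_SM = 32  # previous-generation SM size, from the article

gtx_680_cores = CORES_PER_SMX * SMX_COUNT
core_ratio = gtx_680_cores / GTX_580_CORES
gtx_580_sm_count = GTX_580_CORES // CORES_PER_SM

print(f"GTX 680 cores: {gtx_680_cores}")
print(f"Ratio over GTX 580: {core_ratio:.1f}x")
print(f"GTX 580 SM modules (derived): {gtx_580_sm_count}")
```

In other words, the GTX 680 reaches three times the core count with half as many (much larger) modules.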

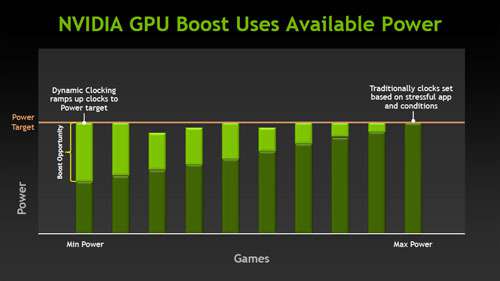

The GTX 580’s TDP was 244W, so NVIDIA has made some obvious strides in delivering a better-featured and faster card at just 195W. Thanks in part to these major power savings, NVIDIA is introducing “GPU Boost” with Kepler, a technology similar to Intel’s Turbo Boost. Whenever the card has power headroom to spare, the core clock is boosted to improve game performance. The opposite can also be true – if a game can’t take advantage of the stock performance, the card can underclock itself. Here’s a graph showcasing that:

When overclocking, GPU Boost still applies. Your maximum overclock has to take this into account, so to find a true “max overclock”, use a game or benchmarking tool that properly stresses the card (such as 3DMark 11).
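To make the idea of “boosting into power headroom” concrete, here is a deliberately simplified sketch. It is not NVIDIA’s algorithm, which this preview does not describe. The base clock and the 195W budget come from the article; the step size, the clock ceiling, the linear power model and every function name are hypothetical values chosen only for illustration. The sketch covers only the boost side, not the underclocking case.

```python
BASE_CLOCK_MHZ = 1006    # from the article
POWER_BUDGET_W = 195     # the article's TDP, used here as the power budget
STEP_MHZ = 13            # hypothetical clock step
MAX_CLOCK_MHZ = 1110     # hypothetical boost ceiling


def estimated_power(clock_mhz: float, power_at_base_w: float) -> float:
    """Hypothetical model: board power scales linearly with core clock."""
    return power_at_base_w * clock_mhz / BASE_CLOCK_MHZ


def next_clock(clock_mhz: int, power_at_base_w: float) -> int:
    """Step the clock up only if the next step still fits the power budget."""
    if estimated_power(clock_mhz, power_at_base_w) > POWER_BUDGET_W:
        # Over budget: back off, but never below the base clock in this sketch.
        return max(clock_mhz - STEP_MHZ, BASE_CLOCK_MHZ)
    candidate = min(clock_mhz + STEP_MHZ, MAX_CLOCK_MHZ)
    if estimated_power(candidate, power_at_base_w) <= POWER_BUDGET_W:
        return candidate
    return clock_mhz


def settle(power_at_base_w: float, iterations: int = 50) -> int:
    """Run the controller until it has had time to reach a steady clock."""
    clock = BASE_CLOCK_MHZ
    for _ in range(iterations):
        clock = next_clock(clock, power_at_base_w)
    return clock


for workload_w in (150, 190, 220):
    print(f"{workload_w}W at base clock -> settles at {settle(workload_w)}MHz")
```

In this toy model, a light workload climbs to the ceiling, a heavier one stops a few steps above base, and a workload that already exceeds the budget gets no boost at all. That also illustrates why a lightly loaded card can appear to overclock further than it will under a benchmark that properly stresses it.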

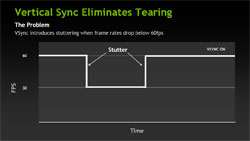

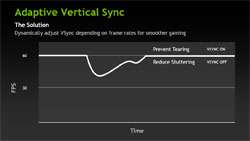

Whenever possible, enabling VSync while gaming is recommended, as it’ll reduce or eliminate the tearing that can occur when the frame rate and the monitor’s refresh rate are out of sync. However, in a more demanding game, the card might not always be able to hold a constant 60 or 120 FPS with VSync on, resulting in the most noticeable stutter you’ll likely encounter. This is because the frame rate immediately drops from one fixed value to a lower one, normally from 60 to 30 FPS, to stay in step with the monitor’s refresh rate. To help solve this issue, NVIDIA has invented “Adaptive VSync”.

To explain this technology, I have to provide two graphs. The first shows standard VSync, while the second shows Adaptive VSync.

With Adaptive VSync enabled, the GeForce driver tries to detect as soon as possible when the frame rate is about to dip below the monitor’s refresh rate, and then disables VSync on the fly to reduce the stutter. So rather than a sudden drop from 60 FPS to 30 FPS, the frame rate follows a curve, as if you were not using VSync at all. Once performance returns to normal, VSync is re-enabled.
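The difference between the two modes can be sketched in a few lines. This is a simplified model, not NVIDIA’s driver logic: it assumes double-buffered VSync, where each frame must wait for a whole number of refresh intervals, and it treats Adaptive VSync as an instant switch. The function names are hypothetical.

```python
import math


def vsync_fps(render_fps: float, refresh_hz: float = 60.0) -> float:
    """Displayed frame rate with standard (double-buffered) VSync.

    Each frame occupies a whole number of refresh intervals, so the
    displayed rate snaps to refresh_hz / 1, / 2, / 3, and so on.
    """
    intervals_per_frame = max(math.ceil(refresh_hz / render_fps), 1)
    return refresh_hz / intervals_per_frame


def adaptive_vsync_fps(render_fps: float, refresh_hz: float = 60.0) -> float:
    """Displayed frame rate with the simplified Adaptive VSync model.

    VSync stays on while the GPU keeps up with the display and is
    switched off as soon as it cannot, so the rate degrades smoothly.
    """
    if render_fps >= refresh_hz:
        return refresh_hz
    return render_fps


for fps in (90, 60, 55, 45, 25):
    print(f"GPU renders {fps:>2} FPS -> "
          f"VSync: {vsync_fps(fps):.0f} FPS, "
          f"Adaptive: {adaptive_vsync_fps(fps):.0f} FPS")
```

On a 60Hz display, a GPU that can render only 55 FPS is held to 30 FPS by standard VSync in this model, while the adaptive version shows all 55. That gap is the sudden drop described above.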

That about wraps up the major features of Kepler. To recap, we have improved FXAA performance, an added TXAA mode, GPU Boost, Adaptive VSync, improved tessellation performance, a redesigned SM module (dubbed SMX), a redesigned cooler aiming to be the quietest ever and, perhaps best of all, single-GPU multi-monitor support.

As mentioned earlier, more will be discussed in next week’s full review. To help wrap up this quick preview, however, we’re posting a couple of performance results on the next page, pitting the GTX 680 against AMD’s current high-end champion, the Radeon HD 7970.
