Redshift 3.0 Massive Performance Boost Tested – Comparing Redshift 2.6 & NVIDIA OptiX

With the release of Redshift 3.0 set in the not-so-distant future, we’ve decided to finally dive in and take a look at its performance improvements over the current 2.6 version. As we did with V-Ray last week, we’re also taking this opportunity to render with NVIDIA’s OptiX, and see what further performance gains might be in store.

Last week, we took a performance look at Chaos Group’s V-Ray 5, across CUDA, OptiX, and heterogeneous rendering workloads. In that article, our inclusion of OptiX was long overdue, since it’s been supported for some time. Similarly, we’ve been overdue in testing the same API in Redshift, and in trying out the upcoming 3.0 version. As you likely suspect, this article takes care of that.

We couldn’t find a master list of everything Redshift 3.0 brings to the table, but we know for certain that “performance improvements” is one of the bullet-points. One of the better places to look for what’s upcoming is the developer’s Trello board. Looking there, we can see that OptiX 7.0 and Redshift RT were recently implemented, as was support for the latest software suites.

Once in a while, a reader will ask us when one of these CUDA-only solutions will support Radeon, and a couple of years ago, it really felt like it’d never happen. But times change, and Apple having killed off CUDA on macOS has really spurred some movement. Fortunately, Radeon support for Redshift is still in development, and will target both Windows and macOS when it becomes available.

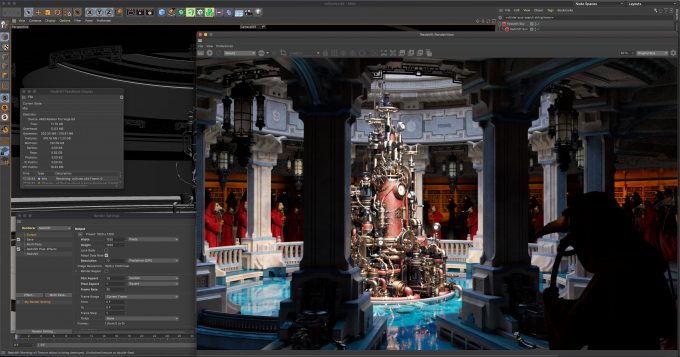

For a quick tease, the above shot was posted to the Trello board by Redshift developer Saul Espinosa, highlighting one of the same projects we test with, running inside Cinema 4D on macOS. Naturally, Metal is the API being used there. We’d assume Vulkan will be the target on PC.

Equipped with that Age of Vultures project seen above, as well as a simpler project of an old-time radio, we’re testing performance across a range of NVIDIA GTX and RTX graphics cards, with both version 2.6 and 3.0.22. Note that while Redshift 3 is still in development, it recently lost its “experimental” tag, so we feel safe doing this testing at this particular time. In addition to the version comparison, we’ll also be seeing if OptiX can further improve render times.

But first, here’s the test rig used for all of our benchmarking in this article:

| Techgage Workstation Test System | |
| --- | --- |
| Processor | Intel Core i9-10980XE (18-core; 3.0GHz) |
| Motherboard | ASUS ROG STRIX X299-E GAMING |
| Memory | G.SKILL FlareX (F4-3200C14-8GFX) 4x16GB; DDR4-3200 14-14-14 |
| Graphics | NVIDIA TITAN RTX (24GB, GeForce 446.14)<br>NVIDIA GeForce RTX 2080 Ti (11GB, GeForce 446.14)<br>NVIDIA GeForce RTX 2080 SUPER (8GB, GeForce 446.14)<br>NVIDIA GeForce RTX 2070 SUPER (8GB, GeForce 446.14)<br>NVIDIA GeForce RTX 2060 SUPER (8GB, GeForce 446.14)<br>NVIDIA GeForce RTX 2060 (6GB, GeForce 446.14)<br>NVIDIA GeForce GTX 1660 (6GB, GeForce 446.14)<br>NVIDIA GeForce GTX 1660 SUPER (6GB, GeForce 446.14)<br>NVIDIA GeForce GTX 1660 Ti (6GB, GeForce 446.14)<br>NVIDIA Quadro RTX 6000 (24GB, GeForce 446.14)<br>NVIDIA Quadro RTX 4000 (8GB, GeForce 446.14)<br>NVIDIA Quadro P2200 (5GB, GeForce 446.14) |
| Audio | Onboard |
| Storage | Kingston KC1000 960GB M.2 SSD |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | Corsair Carbide 600C Inverted Full-Tower |
| Cooling | NZXT Kraken X62 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 19041.329 (2004) |
| All product links in this table are affiliated, and help support our work. | |

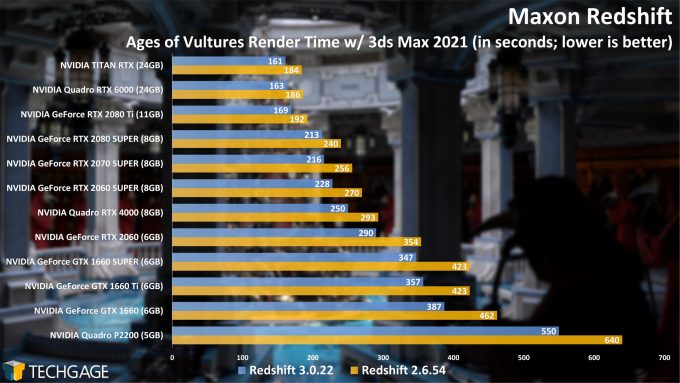

Let’s get started with a look at how CUDA performance compares between the 2.6 and 3.0 versions of Redshift:

It never ceases to amaze us when we see performance increases like these driven entirely by software. For the end-user, a mere software upgrade gives the same impact as upgrading their GPU in some cases. Naturally, performance aside, you’d still never want to go below 8GB VRAM for a workstation GPU nowadays, but it’s still great to see solid performance at the bottom-end.
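For readers who want to put a number on a comparison like this with their own renders, here is a minimal Python sketch. The function names are our own for illustration, and the times in the example are hypothetical placeholders, not measurements from this article.

```python
def speedup(old_seconds: float, new_seconds: float) -> float:
    """Return how many times faster the new render is (old time / new time)."""
    if old_seconds <= 0 or new_seconds <= 0:
        raise ValueError("render times must be positive")
    return old_seconds / new_seconds


def time_saved_percent(old_seconds: float, new_seconds: float) -> float:
    """Return the percentage of render time removed by the upgrade."""
    if old_seconds <= 0 or new_seconds <= 0:
        raise ValueError("render times must be positive")
    return (old_seconds - new_seconds) / old_seconds * 100.0


# Hypothetical placeholder times in seconds, NOT results from our testing.
old_time, new_time = 200.0, 100.0
print(f"{speedup(old_time, new_time):.2f}x faster, "
      f"{time_saved_percent(old_time, new_time):.1f}% less render time")
```

Only compare times captured with identical scene and sampling settings; changing the sample count changes the workload, so the ratio would no longer reflect the software or hardware alone.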

Even the lowbie Quadro P2200, a card that doesn’t even have a power connector, saw a mammoth improvement to performance with the simple move from Redshift 2.6 to 3.0. Again, we’d never suggest choosing a GPU like this for rendering, but that level of performance improvement on a lower-end last-generation card is great to see.

It does become clear that one of these scenes is more intensive than the other. The biggest improvements are seen in the Radio project for some reason, whereas the improvements are a little more modest with Age of Vultures. And this is where OptiX and accelerated ray tracing can come in, and spice things up.

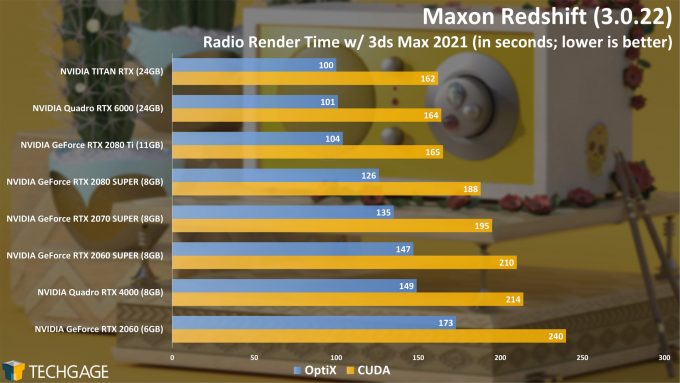

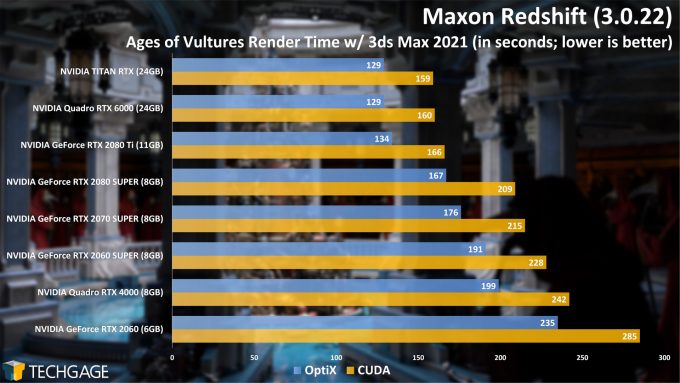

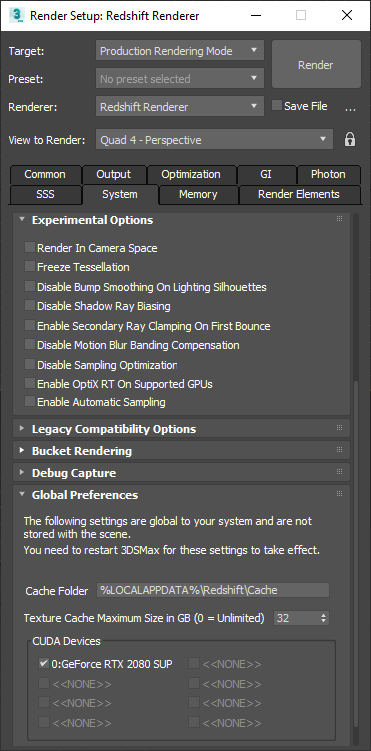

It’s important to note that OptiX in Redshift is used for both AI denoising and rendering, where the Tensor cores would be used for the former, and RT (ray tracing) cores for the latter. Neither of the OptiX options is enabled by default; you will need to head into the Experimental Options section to enable them for the renderer. If you have an RTX GPU, you can effectively call it yet another free performance boost.

Please note that when we tested OptiX, performance was a bit too quick in some cases, so the below results are not comparable to the above ones. We increased the amount of sampling each scene does, so that we can try and get more useful scaling. And that said, here are those results:

From the get-go, the advantage from enabling OptiX RT is clear. Even at the top-end, the GPUs managed to shave a ton of time off of their renders, and again, while the effect was less pronounced with the Age of Vultures scene, we’re still seeing such major jumps that it’s almost like you’re getting a free GPU upgrade. It’s important to note that the end renders here are the same regardless of whether you choose CUDA or OptiX, although your level of success may depend on your scene.

The fact that OptiX RT isn’t enabled by default isn’t a good enough reason to not give it a try. Some competing renderers, like Arnold, use OptiX by default, but most commonly, it is currently offered as a switch, at least until it’s considered definitively stable and can be made a default. The gains seen above from OptiX are nothing new; we saw the same sort of increases in Blender and in V-Ray.

The past year has been pretty great for software upgrades that tremendously help render times, and clearly, Redshift has done its part in making sure those same sorts of enhancements are making it into 3.0. If you’d rather err on the side of caution and wait for the final release of 3.0, you should be able to expect to see it in about two months. As always, we’ll upgrade to the final release as soon as it drops, and use it going forward in our CUDA testing.
