Ryzen For The Masses: A Look At AMD’s Ryzen 5 1600X & 1500X Processors

With our Ryzen 7 review, we found that AMD had released three powerhouse CPUs, chips able to do proper battle against the competition – and in many cases, win. Now, we have Ryzen 5. Will we see the same kind of bang-for-the-buck with these chips as we did with Ryzen 7? Obviously, there’s only one way to find out!

Page 6 – Gaming: 3DMark, Ashes, Battlefield 1, GR: Wildlands, RotTR, TW: ATTILA & Watch Dogs 2

(All of our tests are explained in detail on page 2.)

It’s been easy to highlight the performance differences across our collection of CPUs on the previous pages, since most of the tests used take advantage of every thread we give them. But now, it’s time to move on to testing that’s a different beast entirely: gaming.

In order for a gaming benchmark to be useful in a CPU review, the workload on the GPU needs to be as mild as possible; otherwise, it could become a bottleneck. Since the entire point of a CPU review is to evaluate the performance of the CPU, running high detail and high resolutions in games won’t give us the most useful results.

As such, our game testing revolves around 1080p and 1440p, with games being equipped with moderate graphics detail (not low-end, but not high-end, either). These settings shouldn’t prove to be much of a burden for the GeForce GTX 1080 GPU. For those interested in the settings used for each game, hit up page 2 (a link is found at the top of this page).

In addition to 3DMark, our gauntlet of tests includes six games: Ashes of the Singularity (CPU only), Battlefield 1 (Fraps), Ghost Recon: Wildlands (built-in benchmark), Rise of the Tomb Raider (built-in benchmark), Total War: ATTILA (built-in benchmark), and Watch Dogs 2 (Fraps).
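For readers curious how minimum and average FPS fall out of a frame-time capture, here is a minimal sketch. It assumes the capture is a list of cumulative frame timestamps in milliseconds; the function name `fps_stats` and the sample numbers are hypothetical, not taken from our test runs. Benchmark tools differ in how they define "minimum"; this sketch simply uses the slowest single frame.

```python
def fps_stats(timestamps_ms):
    """Return (average_fps, minimum_fps) from cumulative frame timestamps in ms.

    Assumption: timestamps are strictly increasing, one entry per rendered frame.
    """
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two frame timestamps")

    # Time between consecutive frames, in milliseconds.
    frame_times = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]

    # Average FPS: frames rendered divided by total elapsed time.
    duration_ms = timestamps_ms[-1] - timestamps_ms[0]
    average_fps = len(frame_times) * 1000.0 / duration_ms

    # Minimum FPS here is the rate implied by the slowest single frame.
    minimum_fps = 1000.0 / max(frame_times)
    return average_fps, minimum_fps


# Hypothetical capture: five frames, one of which took 20 ms.
avg, low = fps_stats([0, 10, 20, 40, 50])
print(f"average: {avg:.1f} FPS, minimum: {low:.1f} FPS")
```

A single slow frame drags the minimum down much further than the average, which is why both values are worth reporting.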

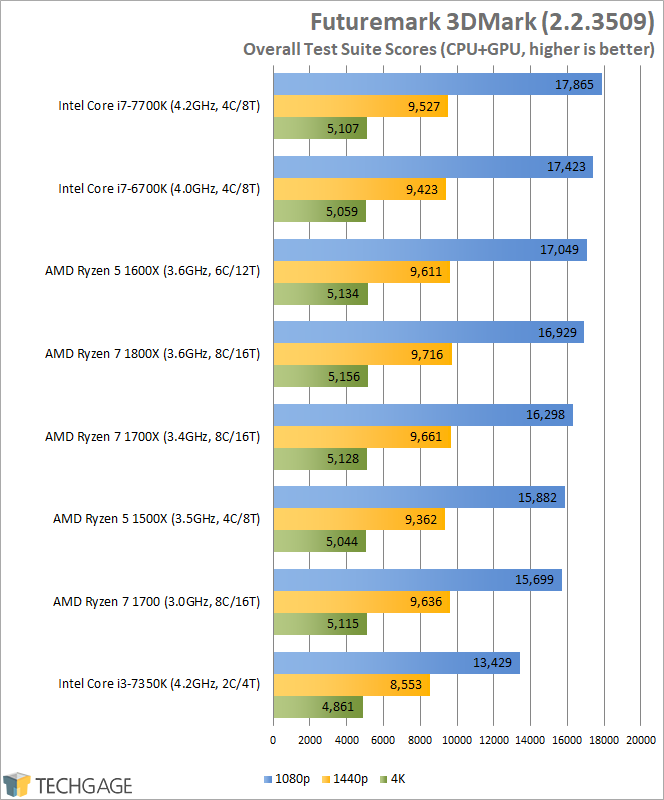

Futuremark 3DMark

As mentioned above, it’s going to be hard to see performance differences between CPUs if the GPU is a bottleneck, and these results explain why. At 1080p, there are grand differences between the bottom and top of the stack, but at 4K, where the GPU becomes the biggest bottleneck, there’s effectively no difference at all (except for the dual-core, but even that difference is mild).
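The bottleneck effect can be illustrated with a deliberately simplified model, in which the delivered frame rate is whichever of the CPU or GPU limits is lower. The caps below are made-up numbers chosen purely for illustration, not measured results, and `effective_fps` is a hypothetical helper.

```python
def effective_fps(cpu_fps_cap, gpu_fps_cap):
    """Simplified model: the slower component sets the delivered frame rate."""
    return min(cpu_fps_cap, gpu_fps_cap)


# Hypothetical limits (illustrative only, not benchmark data).
cpu_caps = {"slower CPU": 90.0, "faster CPU": 140.0}
gpu_caps = {"1080p": 160.0, "4K": 60.0}

for resolution, gpu_cap in gpu_caps.items():
    results = {name: effective_fps(cap, gpu_cap) for name, cap in cpu_caps.items()}
    spread = max(results.values()) - min(results.values())
    print(f"{resolution}: {results} (spread between CPUs: {spread:.0f} FPS)")
```

In this toy model the two CPUs are 50 FPS apart at 1080p, where the GPU has headroom, and identical at 4K, where the GPU cap sits below both CPU caps.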

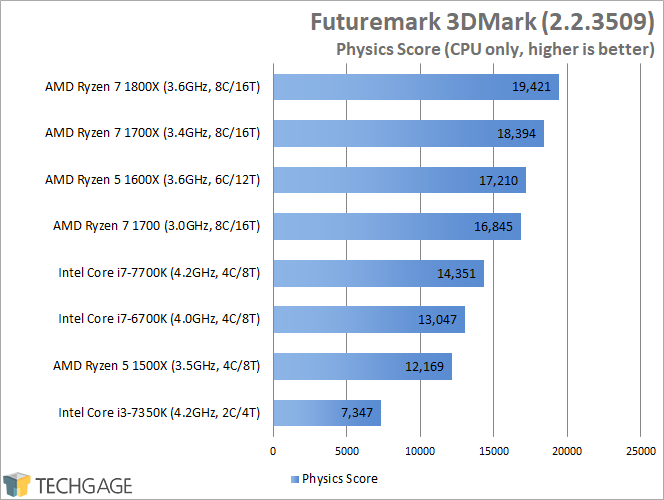

When we separate the physics score, which explicitly uses the CPU, we can see why the overall scores above managed to get their boost:

As with most of the tests prior to this page, huge differences can be seen when the CPU is specifically singled out in a gaming test. But just how useful is this data? It’s really only useful if you consider it to represent theoretical gaming performance. On this page, the 8-cores place at the top of the chart in the CPU-specific tests, but in actual gaming, the higher-clocked (but lower-core) chips perform better.

So, theoretically, if a game can take advantage of 8+ core chips, the graph above would represent the benefits of many-core CPUs. The closest I think we have to hitting the mark comes up next:

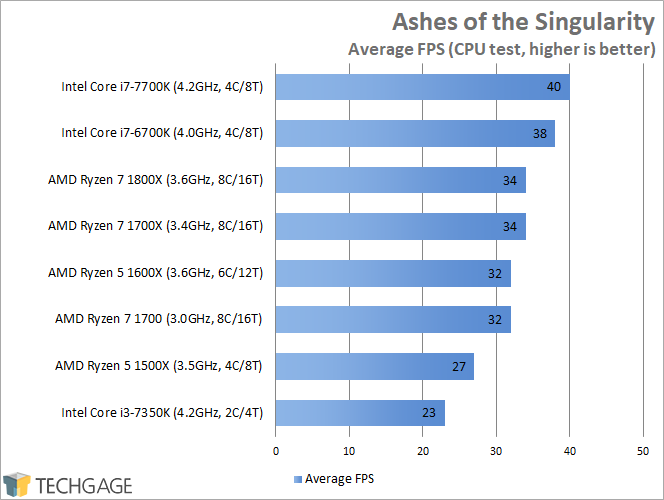

Ashes of the Singularity

It’s important to note that this is the original Ashes, and not the Escalation DLC which includes specific Ryzen optimizations. However, it acts as a good bare-bones CPU test, as the graphics are not involved, just like with 3DMark’s physics test. If a game can take advantage of more than 4 cores, then the bigger chips stand to deliver the best performance. Or, if a game adjusts its AI based on CPU horsepower available, the bigger chips would be ideal.

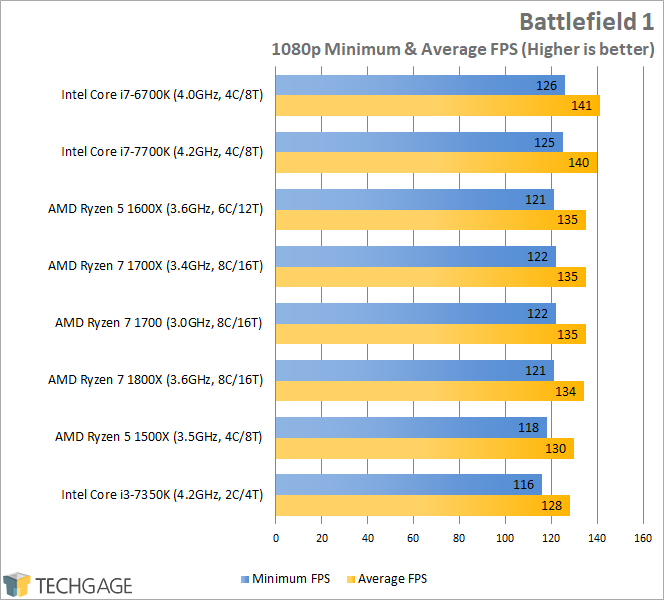

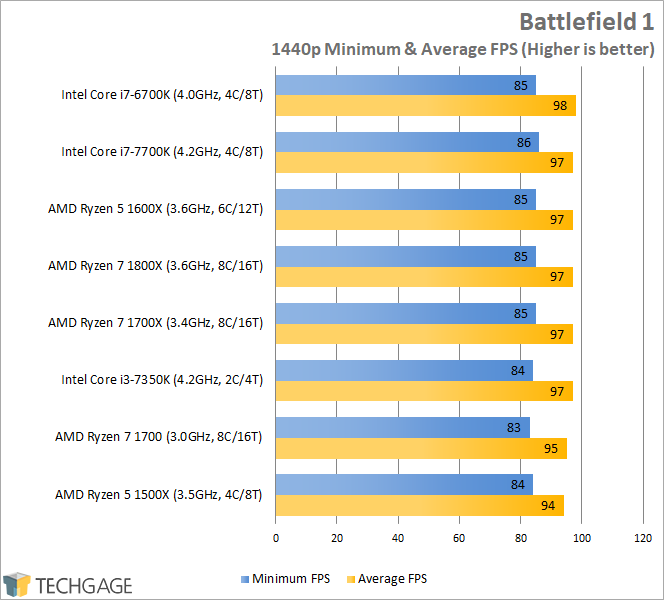

Battlefield 1

BF1 is one of the more recent examples of games that can actually take decent advantage of today’s processors, but as we see here, the overall differences are not that stark. At 1080p, the delta between the top and bottom of the stack is a mere 10 FPS (for the minimum; and we’re talking a 126 FPS peak value), and at 1440p, there’s no effective winner on the minimum side, and for the average, there’s just a 5 FPS difference from the bottom to top.

I should stress the fact that this testing represents offline play, and that online play will likely give you different results. But because of the variable nature of the gameplay, real-world testing is complicated, especially for someone who’s not familiar with the game and would drag an unsuspecting team down. Thus, I stick to offline tests for the sake of sanity.

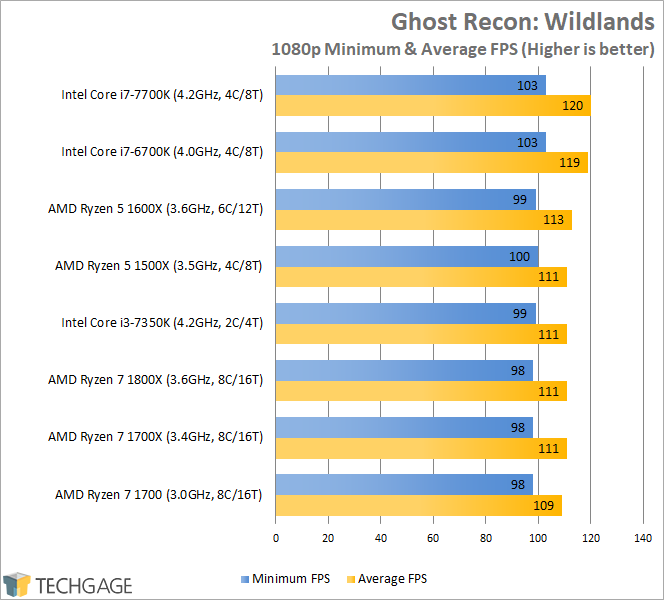

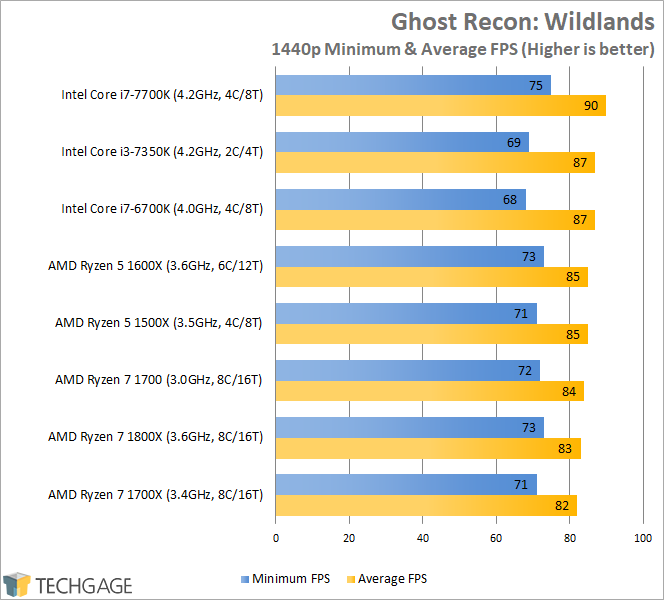

Ghost Recon: Wildlands

We’re seeing more of the same here, with very little overall difference between the top and bottom. Interestingly, all of the Ryzen chips managed to deliver a better minimum FPS at 1440p than the 4GHz 6700K and 4.2GHz 7350K (but fall short of the 7700K). Overall, rather minimal differences.

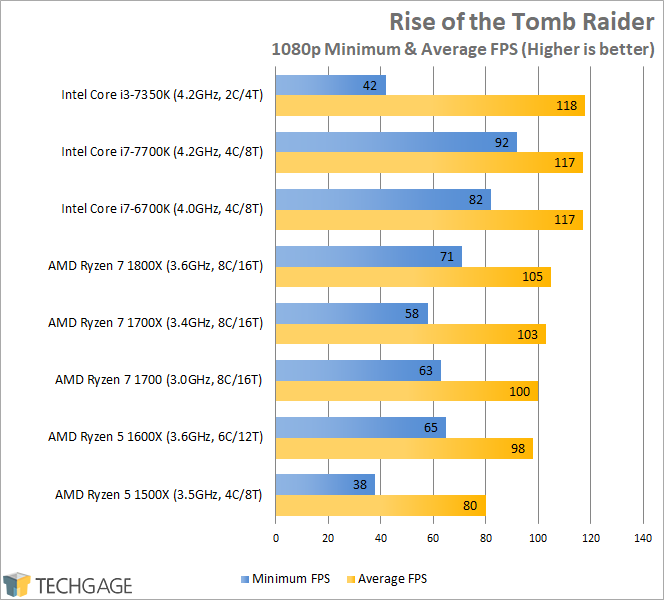

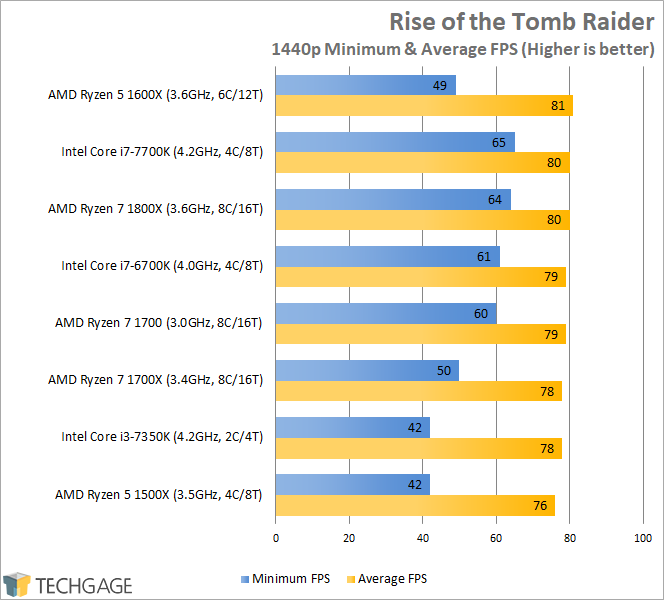

Rise of the Tomb Raider

Compared to the previous results on this page, Rise of the Tomb Raider’s are downright sporadic. Based on both the 7350K and 1500X, this game doesn’t like dual-cores, or quad-cores with sub-4GHz clocks. Intel tops the charts here, which based on previous performance suggests the game itself is optimized for the blue team. That leads the 1500X to fall below the 7350K at both resolutions, but at 1440p, the difference in average FPS is minimal.

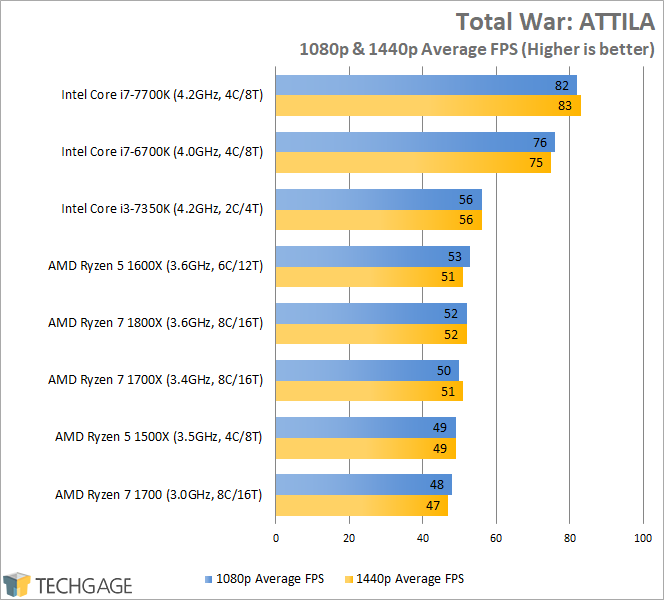

Total War: ATTILA

ATTILA, like RotTR, doesn’t seem to like non-Intel chips too much. In most cases throughout this review, Ryzen’s extra cores have helped it do proper battle against Intel, but these results highlight that in some cases, Intel optimizations mean a lot.

Take the 7350K, for example. Despite having half the cores and threads of the 1500X, it beat out even the eight-core 1800X, and likewise, the 1800X couldn’t match a single Intel chip here.

The fact that the 1080p and 1440p results are largely the same proves that ATTILA is a fantastic CPU benchmark, but only for Intel.

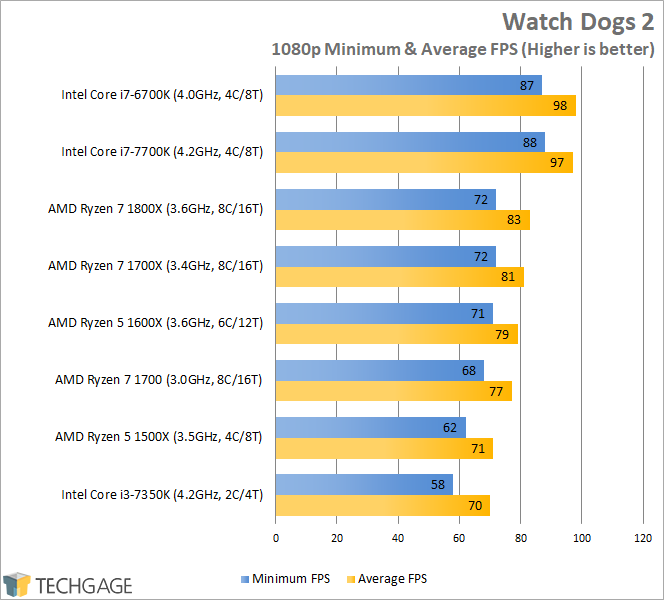

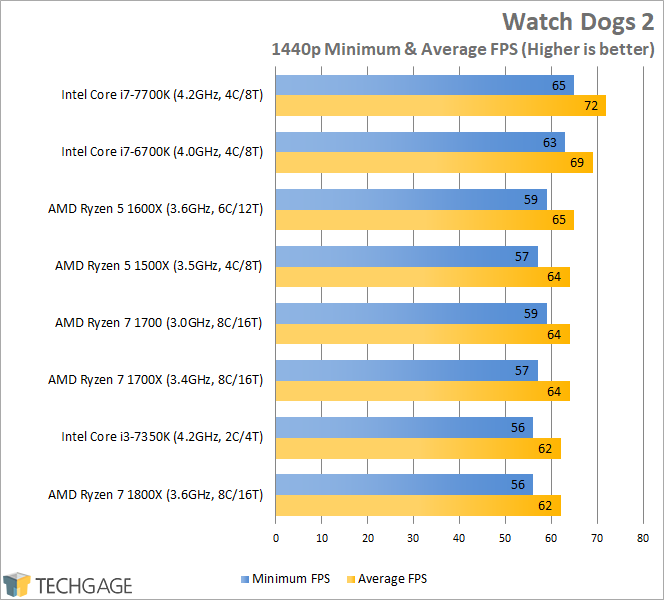

Watch Dogs 2

Compared to the rest of the games on this page, Watch Dogs 2 seems to scale the fairest between processors, giving an edge to no one. Because it’s a game that doesn’t need more than a quad-core, the highest-clocked chip that’s at least a quad-core wins.

As seen a few times with other games, 1080p isn’t too kind to Ryzen, but the gap tightens at 1440p.
