SysAdmin Corner: Demystifying RAID

Interested in RAID, but not sure which option is right for you? This article aims to clear up any confusion you may have. We discuss what RAID is, what it isn’t, its potential dangers, the differences between popular RAID levels, and, last but not least, what you need to get up and running with a RAID of your own.

Choosing Your RAID Controller

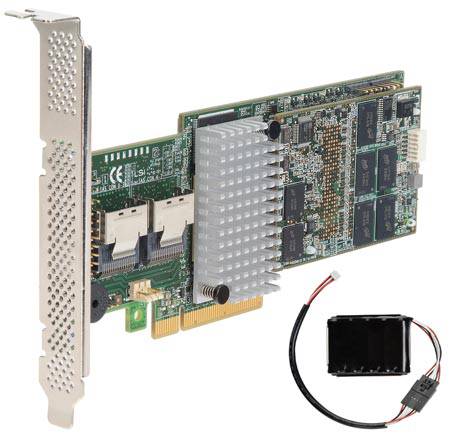

To set up a RAID array, you need a controller that manages the member drives and presents them to the system as a single array. Controllers come in two types: hardware and software. Which one is right for you? That depends on your needs and your budget, but you need to think ahead.

I’ll start by putting my position out there: I personally (and it is a personal choice) do not advocate hardware RAID. Even Intel’s onboard controller is a risky gamble to me. I once had a motherboard fail with nothing on hand but an AMD board to switch to; had I been using hardware RAID, I might not have been able to boot that array again until I bought a new Intel board and chipset. A PCIe RAID card is an even bigger gamble in my view: such cards are costly, and they are one more component that can fail and may or may not be replaceable when it does.

That being said, why use hardware RAID? Simple: performance. Parity calculations for RAID 5/6 are handled by the controller rather than the OS, so they are fast and there is no software overhead, and the OS sees the entire array as a single drive. Another benefit is that most RAID cards come with their own SATA ports, so you can attach more drives than your motherboard supports.
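For readers wondering what that parity calculation actually is: in RAID 5 it boils down to a byte-wise XOR across the data chunks in each stripe, which is why losing any single chunk is recoverable. The Python sketch below is a simplified illustration, assuming one stripe of a four-disk RAID 5 array (three tiny data chunks plus one parity chunk); it ignores how real arrays rotate parity across disks, and the helper names `compute_parity` and `rebuild_missing` are hypothetical.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def compute_parity(chunks: list[bytes]) -> bytes:
    """RAID 5-style parity: the XOR of every data chunk in the stripe."""
    return reduce(xor_bytes, chunks)


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover one lost chunk by XOR-ing the parity with the surviving chunks."""
    return reduce(xor_bytes, surviving, parity)


# One stripe: three data chunks (4 bytes each for readability).
stripe = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
parity = compute_parity(stripe)

# Pretend the disk holding chunk 1 died: rebuild it from the rest plus parity.
recovered = rebuild_missing([stripe[0], stripe[2]], parity)
print(recovered == stripe[1])
```

A hardware card runs this kind of computation on every write itself; with software RAID, the host CPU does the same work.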

The downside to hardware is that every RAID controller is different, and controllers don’t have to work with each other at all. Manufacturers do not support each other’s products, and there is no true standard. Sometimes they don’t even support their own products. Need more drives? You may need a new card. Maybe the new one and the old one will work together, or maybe the manufacturer only offers that on a far more expensive model. And what if your card dies? The replacement might not be backwards compatible with your old array. That last scenario is rarer, but it happens.

Software RAID carries a performance penalty, which is the main argument against it. Don’t let that fool you, though: my home server’s three-disk RAID 5 array delivers more than enough data to saturate a gigabit home network, streaming 1080p to my home theater without a hitch while running several background tasks. On server versions of Windows, or with a properly configured Linux array, the performance hit is barely measurable, let alone noticeable.

With Windows 7, Microsoft finally joined the realm of common sense and built a software RAID worthy of some recognition into its desktop OS. However, unless you’re running a server version of Windows, RAID 5 still isn’t an option, only RAID 0 and 1. Given this, many users opt for Intel’s chipset RAID instead. That’s a shame, but as hardware RAIDs go, it is one of the best supported and most widely available options, and it requires no additional equipment.

Those of us running a more server-oriented environment like Linux can use MDADM (multiple-device administration). MDADM is available in nearly every Linux distribution and is fairly simple to set up and manage. If you’re running Linux, I strongly advocate using MDADM over any hardware controller unless you have a very specific need. And if you disagree with my recommendation, you need only look at the big-name NAS solutions out there: both Drobo and Synology use modified versions of MDADM as their foundation.
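To give a feel for how little is involved, the Python sketch below only assembles the argument list for a typical `mdadm --create` call; it does not run anything. The device names (`/dev/md0`, `/dev/sdb1` and so on), the 64KB chunk, and the helper name `mdadm_create_args` are assumptions for the example. Check `man mdadm` on your distribution before running the real command as root, since it writes new RAID metadata to the member partitions.

```python
import shlex


def mdadm_create_args(md_device: str, level: int, members: list[str],
                      chunk_kib: int = 64) -> list[str]:
    """Build (but do not run) an mdadm command that creates a new array.

    mdadm interprets --chunk in kilobytes when no unit suffix is given.
    """
    return [
        "mdadm", "--create", md_device,
        f"--level={level}",
        f"--raid-devices={len(members)}",
        f"--chunk={chunk_kib}",
        *members,
    ]


# Hypothetical three-disk RAID 5, like the home server described above.
args = mdadm_create_args("/dev/md0", 5, ["/dev/sdb1", "/dev/sdc1", "/dev/sdd1"])
print(shlex.join(args))
# To actually create the array (as root): subprocess.run(args, check=True)
```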

The nicest thing about software RAID is flexibility: you are limited only by the number of drives you can connect to your board. Swapping out any other hardware is completely transparent, and there’s no expensive card to buy. You can even put two or more RAID partitions on the same drive (if your array is built from 1TB drives and you add a 2TB drive, you needn’t let the extra space go to waste), though that’s not recommended, of course. Let’s remember why we’re doing this in the first place!
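To make the mixed-size example concrete, here is a minimal Python sketch of the usual capacity arithmetic: a RAID 5 array uses only as much of each member as its smallest member provides, and gives up one member’s worth of that space to parity. The helper name `raid5_layout` and the drive sizes are illustrative assumptions, and the sketch ignores the small amount of space real arrays spend on metadata.

```python
def raid5_layout(drive_sizes_tb: list[int]) -> tuple[int, list[int]]:
    """Return (usable RAID 5 capacity, leftover space per drive), in TB.

    Every member contributes only as much as the smallest drive; one
    member's worth of that space holds parity. Metadata is ignored.
    """
    per_member = min(drive_sizes_tb)
    usable = per_member * (len(drive_sizes_tb) - 1)
    leftover = [size - per_member for size in drive_sizes_tb]
    return usable, leftover


# Three existing 1TB drives plus a newly added 2TB drive.
usable, leftover = raid5_layout([1, 1, 1, 2])
print(usable)    # 3
print(leftover)  # [0, 0, 0, 1]
```

The spare 1TB on the new drive is the space that a second partition could put to use.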

No matter what they tell you, size matters…

…Block size, that is. Hard drives are divided into sectors (4KB each on most modern drives), the smallest units the drive reads or writes. The filesystem you format the drive with groups these sectors into blocks (NTFS calls them clusters), each containing a fixed number of sectors. For instance, the Windows NTFS file system defaults to 4KB blocks on volumes up to 16TB, which means the OS stores every file in 4KB pieces; you can, however, choose a different size when you format the drive. Raising the block size breaks every file into larger pieces, keeping its data closer together. The downside is that files can take up more space, because a file always occupies whole blocks: even a single byte spilling into the next block claims that entire block.
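The wasted-space trade-off comes from rounding every file up to a whole number of blocks. A minimal Python sketch of that rounding, assuming the filesystem always allocates whole blocks (it ignores tricks such as NTFS storing very small files directly in its Master File Table); the helper name `allocated_size` is illustrative:

```python
def allocated_size(file_bytes: int, block_bytes: int) -> int:
    """Space a file occupies when it must use whole blocks (ceiling division)."""
    blocks = -(-file_bytes // block_bytes)  # round up without using floats
    return blocks * block_bytes


KiB = 1024
for block in (4 * KiB, 64 * KiB):
    # A file one byte longer than 4KB spills into a second 4KB block.
    used = allocated_size(4 * KiB + 1, block)
    print(f"{block // KiB}KB blocks: a 4097-byte file occupies {used} bytes")
```

With 4KB blocks the 4097-byte file occupies 8192 bytes; with 64KB blocks it occupies 65536.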

Though 4KB blocks are great for drives with lots of small files (OS drives, for instance), they are quite inefficient for large ones, and the overhead becomes excessive if you’re storing 4TB of 1GB MKVs, or even games with an average file size of 100-200MB. Each of those blocks can require a separate read/write operation, meaning your controller has to issue more commands, and the filesystem is more likely to break the files up (fragment them) wherever there’s free space.
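To put numbers on the “more commands” argument, the sketch below counts how many blocks a single 1GB file spans at different block sizes. It is plain arithmetic; in practice the OS often merges adjacent blocks into larger requests, so treat the block count as a worst case rather than a measurement. The helper name `blocks_needed` is illustrative.

```python
KiB = 1024
GiB = 1024 ** 3


def blocks_needed(file_bytes: int, block_bytes: int) -> int:
    """Number of filesystem blocks a file spans (ceiling division)."""
    return -(-file_bytes // block_bytes)


# One 1GB MKV at a few different block sizes.
for block_kib in (4, 32, 64):
    count = blocks_needed(1 * GiB, block_kib * KiB)
    print(f"{block_kib:>2}KB blocks -> {count:,} blocks")
```

At 4KB the file spans 262,144 blocks; at 64KB it spans 16,384, sixteen times fewer.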

Along with the filesystem’s block size, RAID adds a “chunk size” that works in a similar way but sits one layer below the filesystem. The chunk size is the amount of data written to one disk before the array moves on to the next disk. A typical default for RAID 5 is a 32KB chunk (the exact default varies between controllers and software), meaning each disk gets 32KB written to it before the array moves to the next disk. Combined with a 4KB filesystem block size, each chunk on a disk holds 8 filesystem blocks. This does not mean all 8 have to be accessed sequentially each time, though that is the usual behavior.
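The relationship between blocks and chunks can be sketched as a simple address calculation. The Python example below uses the paragraph’s numbers (4KB filesystem blocks, 32KB chunks) on an assumed three-disk array, and models a plain striped, RAID 0-style layout: it deliberately leaves out RAID 5’s rotating parity chunk. The function name `locate_block` is hypothetical.

```python
BLOCK_KIB = 4    # filesystem block size
CHUNK_KIB = 32   # RAID chunk size
DISKS = 3        # disks in the stripe (no parity modeled here)

BLOCKS_PER_CHUNK = CHUNK_KIB // BLOCK_KIB  # 8 blocks per chunk


def locate_block(block: int) -> tuple[int, int, int]:
    """Map a filesystem block number to (disk, stripe row, block within chunk)."""
    chunk = block // BLOCKS_PER_CHUNK
    disk = chunk % DISKS              # consecutive chunks rotate across disks
    row = chunk // DISKS              # full stripes that precede this chunk
    offset = block % BLOCKS_PER_CHUNK
    return disk, row, offset


for b in (0, 7, 8, 16, 24):
    print(b, locate_block(b))
```

Blocks 0 to 7 all land in the first chunk on disk 0, block 8 starts the chunk on disk 1, block 16 the chunk on disk 2, and block 24 wraps back to disk 0 in the next stripe row.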

The efficiency and speed of RAID 0, 5, 6 and 10 all depend on splitting data across multiple disks chunk by chunk. If you have three disks, the controller can read from all three at once. You might conclude that small 4KB pieces are therefore the most efficient thing to pull, but tuning your filesystem to use a larger block size reduces the number of separate read commands needed, leaving that bandwidth for more useful things, like your data. Balance this against chunk size as well: more drives can get away with smaller chunks, though each drive’s access time will rise a little. A sensible pairing of block and chunk sizes reduces seek time and wear, because the array organizes its data into more coherent chunks and keeps files much less fragmented.

The rule of thumb for a single drive is to increase your block size as the average file size on the disk increases, and RAID benefits from this as well. Moving up to 32KB or even 64KB blocks tends to be a much better option when storing fewer, larger files. Your RAID chunk size should never be smaller than your block size, but beyond that, finding the right value is trial and error that depends on your disks, your data, and your array size. The default of 32KB is usually great for a RAID 5/6 array. Just remember to change it if you increase your block size to 64KB!
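On Linux, the block/chunk pairing is also something you can tell an ext3/ext4 filesystem about: `mkfs.ext4` accepts `-E stride=...,stripe_width=...`, where the stride is the chunk size measured in filesystem blocks and the stripe width is the stride multiplied by the number of data-bearing disks. The Python sketch below only does that arithmetic and enforces the “chunk never smaller than block” rule (it additionally requires a whole multiple, so the stride comes out as an integer). The helper name `raid_stride`, the `/dev/md0` device, and the example three-disk RAID 5 (two data disks per stripe) are assumptions.

```python
def raid_stride(block_kib: int, chunk_kib: int, disks: int,
                parity_disks: int = 1) -> tuple[int, int]:
    """Return (stride, stripe_width), both measured in filesystem blocks.

    stride       = chunk size / block size
    stripe_width = stride * number of data-bearing disks
    """
    if chunk_kib < block_kib or chunk_kib % block_kib:
        raise ValueError("chunk size must be a whole multiple of the block size")
    stride = chunk_kib // block_kib
    data_disks = disks - parity_disks
    return stride, stride * data_disks


# Three-disk RAID 5 with 32KB chunks and 4KB filesystem blocks.
stride, width = raid_stride(block_kib=4, chunk_kib=32, disks=3)
print(f"mkfs.ext4 -E stride={stride},stripe_width={width} /dev/md0")

# Raising the block size to 64KB without raising the chunk size breaks the rule.
try:
    raid_stride(block_kib=64, chunk_kib=32, disks=3)
except ValueError as err:
    print("rejected:", err)
```

For RAID 6, pass `parity_disks=2`, since two disks’ worth of each stripe holds parity.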