
Testing AMD Radeon VII Double-Precision Scientific And Financial Performance

AMD whetted the appetites of those hoping for strong FP64 performance from Radeon VII when it said the card’s FP64 rate would be double what RX Vega offered. People clapped, and AMD apparently enjoyed the response: just before launch, the company decided to double FP64 performance once again, bringing it to 1:4 of FP32. Let’s see where that puts the card in financial and scientific tests.

With this week’s release of AMD’s Radeon VII, we’ve taken a look at its performance in both gaming and professional workloads. While the card traded blows with NVIDIA’s comparable GeForce RTX 2080, there were a few cases where the Radeon VII really came into its own.

Due to time constraints, we couldn’t fit all of the tests we wanted into our launch articles. Now that the rush to meet the embargo is over, we can look into some of the quirkier aspects of Radeon VII.

When AMD announced the Radeon VII at CES, it was obvious that the card shared many characteristics with its bigger brother, the Radeon Instinct MI50. That’s an enterprise-grade GPU with uncapped double-precision floating-point compute. At the time, it was widely speculated that the VII would inherit this FP64 performance, but that was shot down after we contacted AMD’s head of marketing.

During testing, we noticed some oddities with the claim of unaccelerated FP64 performance, as we were seeing results much higher than we expected. Fast-forward to the day before the embargo, and we got an email from AMD about a boost to FP64: from the 1:16 of FP32 we were told about last month to an impressive 1:4 of FP32 at launch. AMD originally told us that this amounted to 3.52 TFLOPS, but the company lowered that slightly to 3.46 TFLOPS after the embargo lifted.
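Those ratios translate into throughput with simple arithmetic. The sketch below is a minimal back-of-the-envelope calculation, assuming Radeon VII’s commonly published spec of 3,840 stream processors at a peak 1,800 MHz engine clock, with each stream processor retiring one fused multiply-add (two FLOPs) per clock in FP32. Those hardware figures are assumptions, not numbers taken from this article.

```python
# Back-of-the-envelope peak throughput for Radeon VII.
# ASSUMPTIONS (not from the article): 3,840 stream processors, 1,800 MHz
# peak engine clock, one FMA (2 FLOPs) per stream processor per clock in FP32.

STREAM_PROCESSORS = 3840   # assumed shader count
PEAK_CLOCK_HZ = 1.8e9      # assumed peak engine clock
FLOPS_PER_FMA = 2          # one multiply plus one add


def peak_fp32_tflops(shaders: int, clock_hz: float) -> float:
    """Peak FP32 TFLOPS = shaders x 2 FLOPs (FMA) x clock."""
    return shaders * FLOPS_PER_FMA * clock_hz / 1e12


def fp64_tflops(fp32_tflops: float, ratio_denominator: int) -> float:
    """FP64 peak for a 1:N FP64-to-FP32 rate."""
    return fp32_tflops / ratio_denominator


fp32 = peak_fp32_tflops(STREAM_PROCESSORS, PEAK_CLOCK_HZ)
print(f"FP32 peak: {fp32:.2f} TFLOPS")
for label, n in (("1:16 (pre-launch)", 16), ("1:4 (launch VBIOS)", 4)):
    print(f"FP64 at {label}: {fp64_tflops(fp32, n):.2f} TFLOPS")
```

Under those assumptions, FP32 peaks at about 13.8 TFLOPS, the 1:16 rate works out to under 0.9 TFLOPS, and the 1:4 rate lands at about 3.46 TFLOPS, in line with AMD’s revised figure. These are theoretical ceilings, not predictions of benchmark results.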

After our original articles were posted, we went through our data a bit more carefully and threw together a few charts to highlight Radeon VII’s strengths in different high-precision workloads. While we were at it, we also wanted to take a look at FP16 half-precision results, since AI and deep learning are quickly becoming ubiquitous in our computing, even outside of research. These results will also let us see whether anything is going on under the hood with the Tensor cores in NVIDIA’s Turing-based RTX cards.

If you are in research, simulation, or financial analysis, or are generally in need of high precision, this unexpected boost is going to make the Radeon VII a very enticing card, since fast FP64 is typically found only on very expensive GPUs, namely NVIDIA’s Tesla series and the TITAN V, or AMD’s Radeon Instinct range.

If you’re not in those high-precision fields, what does FP64 performance bring to the table for us common folk, the gamers and content creators? Sadly, not a lot. In fact, FP64 is very rare in any kind of day-to-day software; even the various CAD and simulation projects rarely use it. Maybe its lack of use is simply because so little hardware supports it, but in truth, very little actually needs that level of precision outside of the sciences.
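To make “that level of precision” concrete, here is a small sketch that repeatedly adds 0.1 and rounds the running total to half, single, or double precision after every step, using Python’s standard `struct` module to emulate each IEEE 754 storage format on the CPU. It only illustrates rounding error in a naive running sum; it does not model how any GPU, driver, or the Sandra benchmarks actually accumulate values.

```python
import struct

# struct format codes for IEEE 754 binary16, binary32, and binary64.
FORMATS = {"FP16": "e", "FP32": "f", "FP64": "d"}


def round_to(value: float, fmt: str) -> float:
    """Round a Python float (binary64) to the given storage format and back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def accumulate(step: float, count: int, fmt: str) -> float:
    """Add `step` `count` times, rounding the running total to `fmt` after each add."""
    step = round_to(step, fmt)
    total = 0.0
    for _ in range(count):
        total = round_to(total + step, fmt)
    return total


if __name__ == "__main__":
    for name, fmt in FORMATS.items():
        result = accumulate(0.1, 10_000, fmt)
        print(f"{name}: sum = {result!r}, error vs 1000 = {abs(result - 1000.0):.3g}")
```

The FP16 total stops growing long before it reaches the true value of 1,000: once the sum gets large enough, 0.1 is less than half the gap between neighboring representable numbers, so each addition rounds straight back to the old total. FP32 drifts noticeably, while FP64 stays accurate to many decimal places. Whether that drift matters depends entirely on the workload.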

In our compute-focused look at the Radeon VII, we included only single-precision results, since at that time we hadn’t been able to dig into the others (remember, AMD dropped the FP64 bombshell just last night). The graphs below include those same FP32 results, as well as half- and double-precision results, helping us appreciate what AMD’s kind VBIOS update has given us.

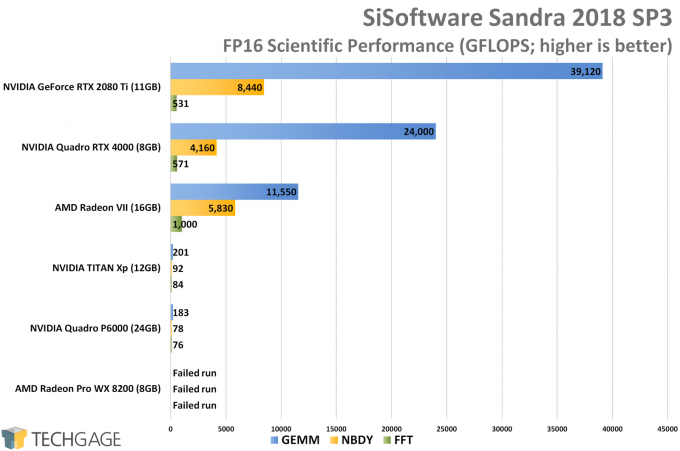

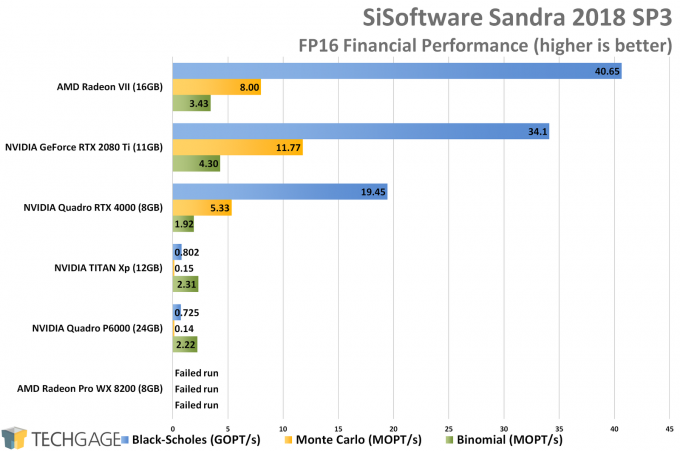

FP16 (Half-precision)

NVIDIA’s obsession with deep learning has led it to dominate the FP16 charts here, thanks to the RTX series’ inclusion of Tensor cores. With RX Vega, AMD offered uncapped FP16 performance, while NVIDIA did no such thing on Pascal GeForce. That’s easily seen when you look at the TITAN Xp and Quadro P6000 results. Against those, the Radeon VII performs extremely well. It doubles the Quadro RTX 4000’s fast Fourier transform performance, and sees another big boost in the N-Body simulation.

The financial results paint a better picture for the TITAN Xp and P6000, as long as we’re only talking about Binomial. NVIDIA’s Tensor cores clearly don’t do much acceleration here. The Black-Scholes result is extremely favorable towards the VII, and from a cost perspective, the Monte Carlo scaling is strong with the VII as well.

For the curious, we’re not sure why the Radeon Pro WX 8200 failed to run any of the FP16 tests, but based on the terse error “GPGPU call failed”, it seems the driver simply didn’t like this test on this particular card. We tested on a brand-new OS install to double-check, and it still failed.

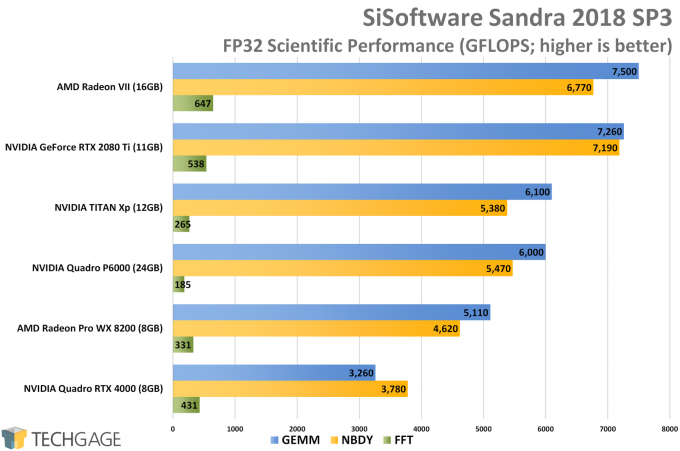

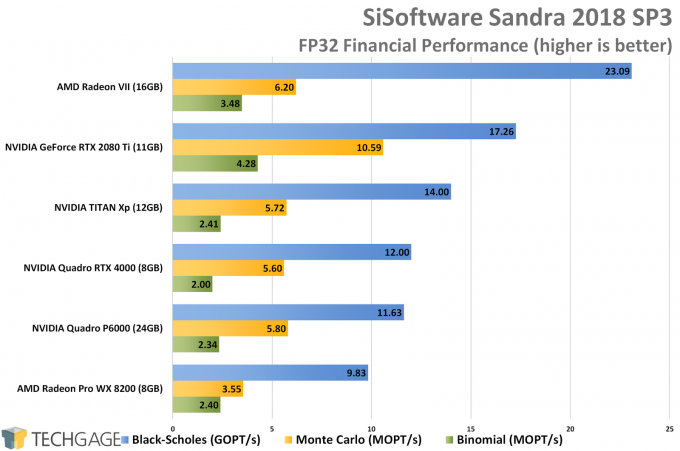

FP32 (Single-precision)

NVIDIA’s GeForce RTX 2080 Ti is an unbeatable card in gaming, but in compute, AMD’s strengths are clear. The Radeon VII tops most of the single-precision charts, with NVIDIA once again taking the lead in Monte Carlo and Binomial. This is all very neat scaling, and it helps prove that you can’t predict workload performance from something as simple as a TFLOPS spec.
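One common way to see why a TFLOPS spec alone can’t predict results is the roofline model: a kernel’s attainable throughput is capped either by peak compute or by memory bandwidth multiplied by its arithmetic intensity (FLOPs performed per byte moved). The sketch below is a hypothetical illustration, not an analysis of these benchmarks. It assumes roughly 13.8 TFLOPS of FP32 peak and 1 TB/s of HBM2 bandwidth for Radeon VII, and the arithmetic intensities are made-up examples. Driver maturity and kernel optimization matter too, and the model ignores both.

```python
# Hypothetical roofline sketch.
# ASSUMED figures (not from the article): ~13.8 TFLOPS FP32 peak, ~1 TB/s bandwidth.

PEAK_TFLOPS = 13.8     # assumed FP32 compute ceiling
BANDWIDTH_TBPS = 1.0   # assumed memory bandwidth in TB/s


def attainable_tflops(flops_per_byte: float,
                      peak_tflops: float = PEAK_TFLOPS,
                      bandwidth_tbps: float = BANDWIDTH_TBPS) -> float:
    """Roofline: min(compute ceiling, bandwidth x arithmetic intensity).

    TB/s multiplied by FLOPs/byte gives TFLOPS, so the units line up.
    """
    return min(peak_tflops, bandwidth_tbps * flops_per_byte)


# Illustrative intensities only; none of these are measured from Sandra's tests.
for label, intensity in (("streaming kernel", 0.25),
                         ("moderate reuse", 4.0),
                         ("dense GEMM-like", 64.0)):
    print(f"{label:>16}: {attainable_tflops(intensity):.2f} TFLOPS attainable")
```

A bandwidth-bound kernel never gets near the advertised peak no matter how many shaders a card has, which is one reason two GPUs with similar TFLOPS ratings can rank very differently from test to test.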

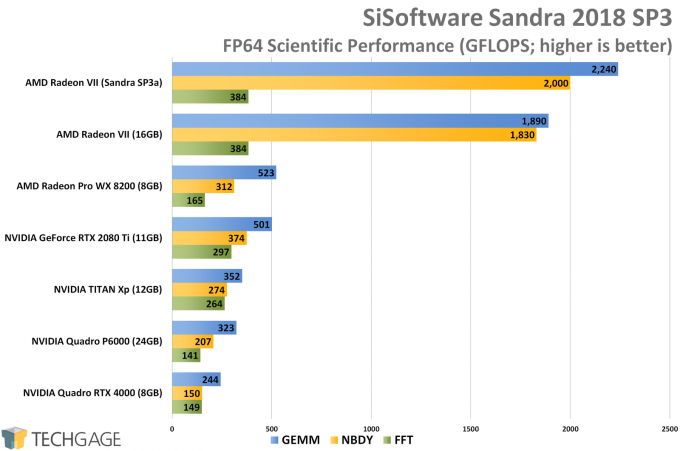

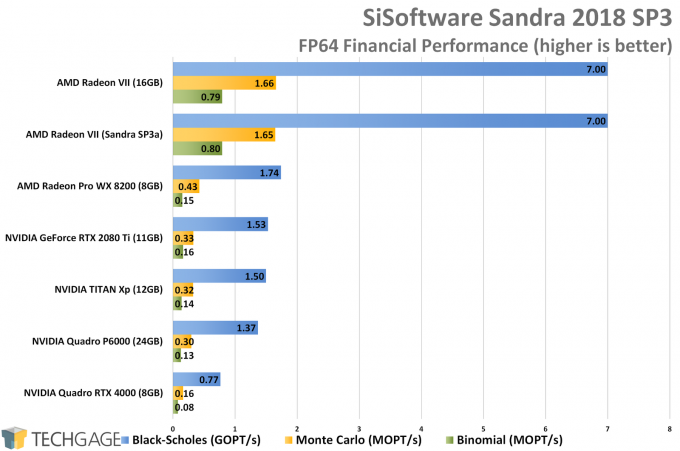

FP64 (Double-precision)

(Note: SiSoftware released SP3a five minutes after this article went live. For VII, a vectorized kernel is now used instead of a scalar one, with FP64 performance increasing a bit on the Scientific test as a result (sans FFT). Financial performance remains identical, along with both the FP16 and FP32 results.)

These double-precision results are interesting, and not only because of Radeon VII. The Radeon Pro WX 8200 also performs very strongly here in some cases, highlighting clear optimizations AMD has built into its Vega architecture. But as interesting as the WX 8200 might be, it doesn’t hold a rendered candle to the double-precision performance of the VII.

With the promised 1:4 FP64-to-FP32 rate, the VII soars to the top of the charts in GEMM and N-Body, as well as Monte Carlo and Black-Scholes. The Radeon Instinct MI50 runs FP64 at 1:2 of FP32, so if crafty reverse engineers ever manage to unlock the VII’s BIOS, that could cause quite a disruption to NVIDIA’s Tesla line. But that assumes stock for the VII is going to be plentiful, and at this point, we have no idea what the next few weeks are going to look like.

What we do know is that AMD is basically catering to everyone with the Radeon VII, but it’s best targeted at creative pros and, now, researchers. The card is solid for gaming, but with 16GB of memory, it’ll shine brightest in intensive compute workloads, and when just two of the cards can get close to a multi-thousand-dollar Tesla or Quadro, it’s not only gamers who will seek it out.
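The “two cards” comparison is easy to check with back-of-the-envelope math. This sketch starts from the 3.46 TFLOPS FP64 figure AMD settled on and compares it against an assumed 7.0 TFLOPS FP64 peak for a Tesla V100 PCIe card, a published NVIDIA spec that is not from this article. It also shows what a purely hypothetical 1:2 unlock, matching the MI50’s rate, would mean. Both totals are ideal peaks that assume perfect multi-GPU scaling, which real workloads rarely achieve.

```python
# Hypothetical FP64 peak comparison.
# 3.46 TFLOPS is AMD's revised Radeon VII figure (1:4 of FP32).
# ASSUMPTION: 7.0 TFLOPS FP64 for a Tesla V100 PCIe (NVIDIA spec, not from the article).

RADEON_VII_FP64_TFLOPS = 3.46
TESLA_V100_PCIE_FP64_TFLOPS = 7.0


def aggregate(per_card_tflops: float, cards: int) -> float:
    """Ideal aggregate peak, assuming perfect scaling across cards."""
    return per_card_tflops * cards


two_cards = aggregate(RADEON_VII_FP64_TFLOPS, 2)
# Purely hypothetical: a 1:2 rate like the MI50's would double the per-card figure.
hypothetical_unlocked = RADEON_VII_FP64_TFLOPS * 2

print(f"Two Radeon VIIs at 1:4: {two_cards:.2f} TFLOPS "
      f"({two_cards / TESLA_V100_PCIE_FP64_TFLOPS:.0%} of one V100 PCIe)")
print(f"One hypothetically unlocked VII at 1:2: {hypothetical_unlocked:.2f} TFLOPS")
```

On paper, two stock cards and one hypothetically unlocked card land at the same 6.92 TFLOPS, just shy of the assumed V100 figure.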

If you landed on this article directly, you may be interested in our gaming look, which tackles three resolutions and five competitor GPUs, as well as our workstation performance look, which covers creative and compute scenarios like encoding, rendering, and viewport performance.
