The Path To Real-Time Ray Tracing: Q&A With J. Turner Whitted – Creator Of Graphical Ray Tracing

With the release of DirectX Raytracing and NVIDIA’s RTX API, it’s time we took a look at the history behind ray tracing, and how it came about. With SIGGRAPH just around the corner, we speak to Turner Whitted, who used his knowledge of sonar to pioneer ray tracing as a method for rendering computer graphics back in 1979.

Real-time ray tracing has been the holy grail of games and simulations for as long as they’ve existed. With each new generation of hardware and software, there’s been a nagging question: will this be the start? Photorealism is something many game developers and graphics artists strive for, but achieving it has proven extremely difficult.

We’ve come a long way, and real-time rasterization engines continue to impress, imperfect as they may be. However, there are only so many shortcuts and approximations you can make before you’re left thinking, “we might as well do this properly.” With the introduction of DirectX Raytracing and NVIDIA’s RTX API, it might be safe to say that we are finally at the start of a real-time ray tracing revolution.

The question is, why has it taken so long? It’s hard to believe that the history of ray tracing in computer graphics goes back more than 40 years. Despite advances such as ray casting and Phong shading, it wasn’t until 1979 that recursive ray tracing arrived, allowing reflections, refractions, and shadows to be rendered; these remain building blocks of modern rendering engines. It’s somewhat ironic that the path to real-time ray tracing began not as a way to improve performance, but as an idea that slowed rendering down and added complexity.

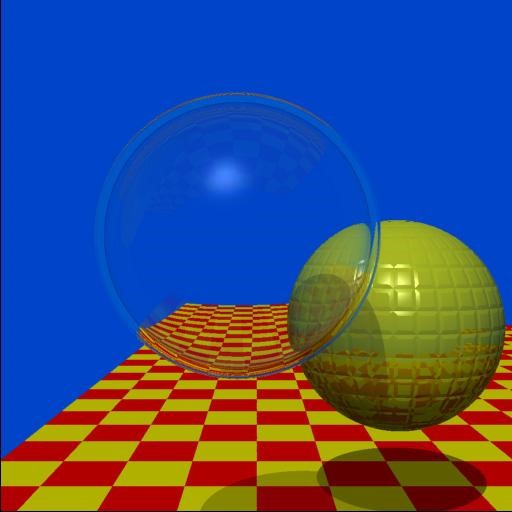

J. Turner Whitted, one of the pioneers of modern ray-traced rendering, recounts how he stumbled upon these recursive techniques in an article on NVIDIA’s website (he now works for NVIDIA). While some early mistakes hindered performance, Turner built a scene of inter-reflecting spheres using processes similar to those he had learned while working on sonar, which let him use a computer to render lighting far more complex than environment mapping could provide. He presented his findings at SIGGRAPH in 1979, and again in 1980.

Ray tracing as a concept in physics predates computers; it was used to calculate how waves or particles propagate through different media. By using a computer to trace rays of light, modeling different material properties and calculating reflection and refraction, it became possible to render scenes far more realistically than the local shading models of the day allowed.
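The reflection and refraction step can be sketched with the standard vector forms of the law of reflection and Snell’s law. This is a minimal Python sketch, not Whitted’s original code; the helper names (`reflect`, `refract`) and the conventions that `n` is a unit normal facing the incoming ray and `eta` is the ratio of refractive indices n1/n2 are assumptions for illustration.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def scale(v: Vec, s: float) -> Vec:
    return (v[0] * s, v[1] * s, v[2] * s)


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def reflect(d: Vec, n: Vec) -> Vec:
    """Mirror the direction d about the unit normal n: r = d - 2(d.n)n."""
    return add(d, scale(n, -2.0 * dot(d, n)))


def refract(d: Vec, n: Vec, eta: float) -> Optional[Vec]:
    """Bend the unit direction d through a surface with unit normal n
    (facing against d), where eta = n1 / n2. Returns None when Snell's law
    has no solution, i.e. total internal reflection."""
    cos_i = -dot(d, n)                          # cosine of the incidence angle
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)  # Snell: sin_t = eta * sin_i
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return add(scale(d, eta), scale(n, eta * cos_i - cos_t))
```

For example, a ray entering glass from air (`eta = 1 / 1.5`) bends towards the normal, while a ray leaving glass at a grazing angle (`eta = 1.5`) has no transmitted direction and `refract` returns `None`; a tracer would then follow only the reflected ray.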

It was the framebuffer that made the project possible, something modern graphics cards take for granted. A framebuffer lets an image be stored in memory while it is being constructed, rather than having to be generated in time for each screen refresh; when rendering takes minutes or hours, the image can stay in memory until it’s ready and then be displayed. Another concept that will be familiar to modern developers is branching: each ray that strikes a surface can spawn new reflected and refracted rays, which bounce off other objects in the scene, and their contributions are combined recursively to build up the final color of the pixel.
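The branching idea can be illustrated with a small recursive tracer that writes into an in-memory framebuffer. This is a hedged Python sketch, not Whitted’s 1979 program: the grayscale `Sphere` type, the fixed directional light `LIGHT`, the `BACKGROUND` value, and the `max_depth` cutoff are all assumptions, and only the shadow and reflection branches are shown (a refraction branch would spawn a further child ray in the same way).

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]

def add(a: Vec, b: Vec) -> Vec: return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a: Vec, b: Vec) -> Vec: return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def mul(a: Vec, s: float) -> Vec: return (a[0] * s, a[1] * s, a[2] * s)
def dot(a: Vec, b: Vec) -> float: return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def norm(a: Vec) -> Vec: return mul(a, 1.0 / math.sqrt(dot(a, a)))

LIGHT = norm((1.0, 1.0, -1.0))  # hypothetical: direction towards a distant light
BACKGROUND = 0.1                # hypothetical: brightness of rays that escape
EPS = 1e-4                      # stops a ray re-hitting the surface it left


@dataclass
class Sphere:
    center: Vec
    radius: float
    albedo: float        # grayscale diffuse reflectance, 0..1
    reflectivity: float  # weight given to the mirror-reflection branch, 0..1

    def hit(self, o: Vec, d: Vec) -> Optional[float]:
        """Nearest distance t > EPS along the unit ray o + t*d, or None."""
        oc = sub(o, self.center)
        b = dot(oc, d)
        disc = b * b - (dot(oc, oc) - self.radius ** 2)
        if disc < 0:
            return None
        root = math.sqrt(disc)
        for t in (-b - root, -b + root):
            if t > EPS:
                return t
        return None


def nearest(scene: List[Sphere], o: Vec, d: Vec) -> Optional[Tuple[float, Sphere]]:
    best = None
    for s in scene:
        t = s.hit(o, d)
        if t is not None and (best is None or t < best[0]):
            best = (t, s)
    return best


def trace(scene: List[Sphere], o: Vec, d: Vec, depth: int) -> float:
    """Brightness seen along a ray; each hit branches into child rays."""
    found = nearest(scene, o, d)
    if found is None:
        return BACKGROUND
    t, s = found
    p = add(o, mul(d, t))
    n = norm(sub(p, s.center))
    # Shadow branch: is the light visible from the hit point?
    in_light = nearest(scene, p, LIGHT) is None
    local = s.albedo * max(0.0, dot(n, LIGHT)) if in_light else 0.0
    if depth <= 0 or s.reflectivity == 0.0:
        return local
    # Reflection branch: spawn a child ray and blend its result recursively.
    r = sub(d, mul(n, 2.0 * dot(d, n)))
    return (1.0 - s.reflectivity) * local + s.reflectivity * trace(scene, p, r, depth - 1)


def render(scene: List[Sphere], width: int, height: int, max_depth: int = 3) -> List[List[float]]:
    """Fill an in-memory framebuffer; it is only 'displayed' once complete."""
    fb = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            u = (x + 0.5) / width * 2.0 - 1.0
            v = (1.0 - (y + 0.5) / height * 2.0) * height / width
            fb[y][x] = trace(scene, (0.0, 0.0, 0.0), norm((u, v, 1.0)), max_depth)
    return fb
```

The `max_depth` cutoff is a design choice: without it, two facing mirrors would keep spawning child rays indefinitely.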

Turner’s work didn’t gain mainstream appeal or practical application until some time later, as it was computationally expensive even for simple scenes (like two spheres reflecting and refracting a checkerboard), such were the limitations of the monstrous DEC computers he was using at the time. The results were undeniable, though, and this approach, now known as Whitted-style ray tracing, laid the foundation for many of the visual effects that began appearing in TV shows and movies from the mid-1990s onwards. You can read more in the article linked earlier.

We managed to bounce some questions off Turner to get a better understanding of how this renderer came about, how it compares to modern systems, and what he’s been up to recently:

Techgage: The jump from ocean acoustics to 3D rendering is quite stark. What made you decide to jump into computer graphics?

J. Turner Whitted: It was a natural transition. Sonar is all about signal processing. With a background in hardware for signal processing, I wanted to pursue graduate studies in image processing. My transition from image processing to computer graphics was pretty smooth because a lot of the math is common to both subjects.

TG: What hardware were you using to do the first renders, and how long did it take to produce the first full resolution image of the two spheres?

JTW: The original platform was a DEC PDP-11/45 running Unix connected to a 9-bit-per-pixel frame buffer. The initial images were monochrome and did not have all the features of the published images. The VAX-11/780 was considerably faster and took only 74 minutes to render the spheres and checkerboard. At a resolution of 640×480, this worked out to roughly 1/70th of a second per pixel on a machine advertised to run at 1 MIPS. A more detailed look at execution times revealed that my naively written code was wasting about one-quarter of its time converting from float to double and back. In hindsight, declaring all variables to be doubles would have actually shortened the run time.
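The per-pixel figure follows directly from the render time and resolution quoted above. The instruction count in the second line assumes, for illustration only, that the machine sustained its advertised 1 MIPS for the whole run:

$$
t_{\text{pixel}} = \frac{74 \times 60\ \text{s}}{640 \times 480} = \frac{4440\ \text{s}}{307\,200} \approx 0.0145\ \text{s} \approx \frac{1}{69}\ \text{s}
$$

$$
10^{6}\ \frac{\text{instructions}}{\text{s}} \times 0.0145\ \text{s} \approx 1.4 \times 10^{4}\ \text{instructions per pixel}
$$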

TG: After the SIGGRAPH presentation in 1979, was there a great deal of interest in your recursive rendering engine, or did it take time for it to pick up steam?

JTW: Ray tracing remained a novelty for a few years. Steve Rubin, my colleague at Bell Labs at the time, and I wrote a paper on ray tracing acceleration structures for the 1980 SIGGRAPH conference, but it wasn’t until 1984 when Rob Cook, Thomas Porter, and Loren Carpenter published “Distributed Ray Tracing” that the full potential of the technique started to emerge.

TG: Do you ever recreate your iconic two spheres scene with modern hardware and software to see how quickly it renders?

JTW: Not exactly on modern hardware, but on my desktop PC. In 2007, Judy Brown and Steve Cunningham published “A History of ACM SIGGRAPH” in Communications of the ACM. They asked for a copy of the spheres and checkerboard image to include in the article, but I didn’t have one handy.

However, I did happen to have a copy of the original C program in an archive. After changing a couple of lines of code to make it run on MS-DOS, I compiled the code, started it running, and got up to get some coffee while waiting for it to complete. As I was standing up, the execution terminated, in less than 6 seconds. Assuming it had crashed, I immediately started debugging, but could not find any error that would cause it to crash in 6 seconds. I recompiled and started execution again. Same result. Eventually I took the trouble to see if any image had been generated. It had. There were no bugs; the image was perfect. The only difference was that the code executed 740 times faster than it had in 1979, and on a computer that cost 100 times less.
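The speedup quoted here is consistent with the 74-minute VAX-11/780 render time mentioned earlier; since the rerun took less than 6 seconds, 740 is effectively a lower bound:

$$
\text{speedup} = \frac{74 \times 60\ \text{s}}{6\ \text{s}} = \frac{4440\ \text{s}}{6\ \text{s}} = 740
$$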

TG: Have you done any ray tracing work since 1980, or anything related to computer science? Have you worked on computer graphics since starting at NVIDIA?

JTW: In my university teaching I’ve helped students write their own ray tracers, but there are so many other topics to be explored. In the past 38 years I’ve started a graphics software company, helped to engineer a consumer VR system, managed a hardware devices research group at Microsoft, and spent most of the past 10 years (including the past 4 years at NVIDIA) trying to inject graphics processors into displays.

TG: Some 40 years later, did the journey to real-time ray tracing take longer than you thought?

JTW: It took the aviation industry 44 years to move from the Wright Flyer to breaking the sound barrier. The graphics industry is not only ahead of this schedule but can place supersonic GPUs on your desktop.

I’m still awed by the fact that real-time ray tracing can be done at all. For decades we have seen improvements in both performance and realism. This is common in graphics as algorithmic sophistication brings simple but impractical techniques into the real world. What has caught me by surprise is the recent evolution of graphics processing capabilities that have brought ray tracing into everyday use.

TG: With photon mapping and ray tracing, do you think there are still areas that could be improved for more realistic rendering, or are we at a point where image quality is good enough that the industry can focus more on efficiency?

JTW: The industry continues to make forward steps on both realism and efficiency. To me the real question is whether there is some grand simplifying principle yet to be found that will open up an even more promising pathway to realism and efficiency. I don’t believe that computer graphics rendering research is finished yet.

TG: If it isn’t said enough, thank you for your efforts in laying the foundation for a technology that is used to entertain billions of people, used in simulations that save lives, and provides jobs for millions of people. While you may have been one of many who contributed to the industry, you played an important role. Thank you for your time.

The term “embarrassingly parallel” is often used to describe ray tracing, as the calculations for each pixel are independent of every other pixel. This makes the massively parallel architecture of GPUs an ideal fit for accelerating ray tracing workloads. With the arrival of the RTX API and other ongoing work, we can harness the power of the GPU: instead of a server room filled with Cray computers, a single chip in a little box hidden under a desk is all that’s required.
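Because no pixel reads another pixel’s result, an image can be split into rows and handed to independent workers in any order. The Python sketch below illustrates that structure under stated assumptions: `shade_pixel` is a hypothetical one-sphere shader standing in for a full tracer, and `concurrent.futures.ThreadPoolExecutor` is used for portability. In CPython the global interpreter lock prevents threads from speeding up pure-Python math, so a real speedup would need `ProcessPoolExecutor`, native code, or, as described above, a GPU.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List

WIDTH, HEIGHT = 64, 48  # hypothetical image size


def shade_pixel(x: int, y: int) -> float:
    """Hypothetical stand-in for a full tracer: cast one primary ray from the
    origin through pixel (x, y) at a unit sphere centred at (0, 0, 3). It reads
    only its own coordinates and constant scene data."""
    dx = (x + 0.5) / WIDTH * 2.0 - 1.0
    dy = (1.0 - (y + 0.5) / HEIGHT * 2.0) * HEIGHT / WIDTH
    inv_len = 1.0 / math.sqrt(dx * dx + dy * dy + 1.0)
    d = (dx * inv_len, dy * inv_len, inv_len)
    # Ray-sphere test with oc = origin - centre = (0, 0, -3) and radius 1.
    b = -3.0 * d[2]           # dot(oc, d)
    disc = b * b - 8.0        # b^2 - (|oc|^2 - r^2)
    if disc < 0.0:
        return 0.0            # miss: black background
    t = -b - math.sqrt(disc)  # nearer of the two intersections
    # Unit normal at the hit point (radius 1, so no normalisation needed).
    n = (d[0] * t, d[1] * t, d[2] * t - 3.0)
    # Brightness: cosine between the normal and the direction back to the eye.
    return max(0.0, -(n[0] * d[0] + n[1] * d[1] + n[2] * d[2]))


def render_row(y: int) -> List[float]:
    return [shade_pixel(x, y) for x in range(WIDTH)]


def render_serial() -> List[List[float]]:
    return [render_row(y) for y in range(HEIGHT)]


def render_parallel(workers: int = 4) -> List[List[float]]:
    # Rows share no state, so workers may compute them in any order;
    # Executor.map still returns the results in row order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_row, range(HEIGHT)))
```

Because the rows are independent, `render_parallel()` produces exactly the same framebuffer as `render_serial()`, regardless of the order in which the workers finish.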

All this means it won’t be long before we start to see real-time ray tracing in the home. Currently, only certain parts of a scene are calculated using ray tracing, and the results are then combined with a rasterized image. Demonstrations of this can be seen in the updated Time Spy demo from UL (formerly Futuremark) and the Star Wars Unreal Engine 4 demo, which is rendered in real time. When the latter was presented at GDC, edits were made to the scene live on stage.

While modern rendering engines are far different from what Whitted created 40 years ago, he laid the groundwork for what was to come, showing that it was possible to use ray tracing to generate realistic lighting.
