WD Black 4TB Hard Drive Review

WD’s Black 4TB is the sort of product that doesn’t need much of an introduction – it speaks for itself. We’re dealing with a standard-sized desktop hard drive that sports a market-leading 4TB of storage. That’s 4,000GB, for those not paying enough attention. It’s impressive on paper, so let’s see how it fares in our benchmarks.

Page 4 – Synthetic: HD Tune Pro 5.0 & Iometer 1.1.0

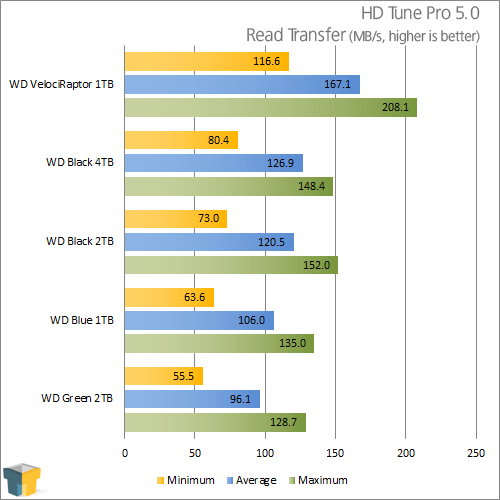

One of the best-known storage benchmarking tools is HD Tune, as it’s easy to run, covers a wide range of testing scenarios, and can do other things such as test for errors and report SMART information. For our testing with the program, we run the default benchmark, which gives us a minimum, average and maximum read speed, along with an access time result.

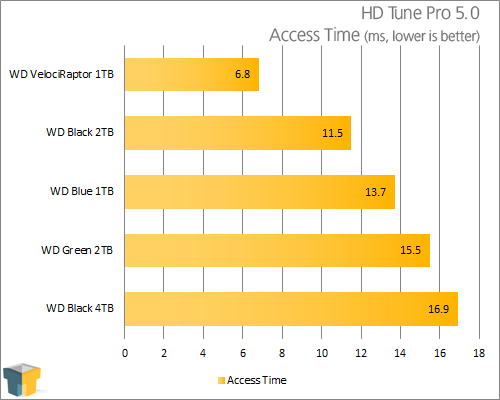

On the last page, we saw the 4TB Black score 9% less than the 2TB model, and the reasons for that become clear with the help of HD Tune. Whereas the 2TB model boasts a read access time of 11.5ms, the 4TB falls to the back of the pack with 16.9ms. This is rather striking, because it means that WD’s massive 4TB 7,200 RPM model has a slower read access time than its ~5,300 RPM Green drives (albeit the difference is small). This is not something you expect to see from a drive that’s targeted at the performance crowd. Again, had WD employed 1TB platters here, we’d be seeing much different results.

On the upside, WD’s Black 4TB did manage to outperform the 2TB model throughput-wise, with the exception of the “Maximum” result.
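To put the access-time figures above in perspective, here is a minimal Python sketch of the arithmetic. It is our own illustration, not something HD Tune reports: the function names are made up for this example, and the IOPS ceiling assumes purely random reads with a single outstanding request, which is a simplification.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half of one revolution, in milliseconds."""
    return 0.5 * 60_000.0 / rpm


def percent_increase(old: float, new: float) -> float:
    """How much larger `new` is than `old`, as a percentage."""
    return (new - old) / old * 100.0


def rough_iops_ceiling(access_time_ms: float) -> float:
    """Assumption: one request at a time, each costing one full access time."""
    return 1000.0 / access_time_ms


# Read access times quoted in the text above.
ACCESS_2TB_MS = 11.5
ACCESS_4TB_MS = 16.9

if __name__ == "__main__":
    print(f"7,200 RPM latency:  {avg_rotational_latency_ms(7200):.2f} ms")
    print(f"5,300 RPM latency:  {avg_rotational_latency_ms(5300):.2f} ms")
    print(f"4TB vs 2TB access:  +{percent_increase(ACCESS_2TB_MS, ACCESS_4TB_MS):.0f}%")
    print(f"2TB rough ceiling:  {rough_iops_ceiling(ACCESS_2TB_MS):.0f} IOPS")
    print(f"4TB rough ceiling:  {rough_iops_ceiling(ACCESS_4TB_MS):.0f} IOPS")
```

Spindle speed alone gives the 7,200 RPM drive about 4.2ms of average rotational latency versus about 5.7ms at 5,300 RPM, so the 4TB model's roughly 47% longer access time has to come from somewhere other than rotation, most likely seek behavior.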

Iometer 1.1.0

Originally developed by Intel, and since given to the open-source community, Iometer (pronounced “eyeawmeter”, like thermometer) is one of the best storage-testing applications available, for a couple of reasons. The first, and primary, is that it’s completely customizable, and if you have a specific workload you need to hit a drive with, you can easily accomplish it here. Also, the program delivers results in IOPS (input/output operations per second), a common metric used in enterprise and server environments.
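For readers more used to MB/s than IOPS, the two are related through the size of each request. The Python sketch below shows the conversion; the helper names and the sample figure of 100 IOPS are purely illustrative and are not results from our testing. It assumes a fixed request size and decimal megabytes (1MB = 1,000,000 bytes).

```python
KIB = 1024  # bytes in one binary kilobyte, as used for "8KB" chunk sizes


def iops_to_mb_per_s(iops: float, request_bytes: int) -> float:
    """Throughput implied by a given IOPS figure at a fixed request size."""
    return iops * request_bytes / 1_000_000


def mb_per_s_to_iops(mb_per_s: float, request_bytes: int) -> float:
    """IOPS implied by a given throughput at a fixed request size."""
    return mb_per_s * 1_000_000 / request_bytes


if __name__ == "__main__":
    sample_iops = 100  # hypothetical value, for illustration only
    print(f"{sample_iops} IOPS at 8KB = {iops_to_mb_per_s(sample_iops, 8 * KIB):.2f} MB/s")
```

This is why a hard drive that looks respectable in sequential MB/s can post very small numbers in a random small-block test: each operation moves only a few kilobytes.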

The level of customization cannot be overstated. Aside from choosing the obvious figures, like chunk sizes, you can choose the percentage of the time that each respective chunk size will be used in a given test. You can also alter the percentages for read and write, and also how often either the reads or writes will be random (as opposed to sequential). We’re just scratching the surface here, but what’s most important is that we’re able to deliver a consistent test on all of our drives, which increases the accuracy of our results.

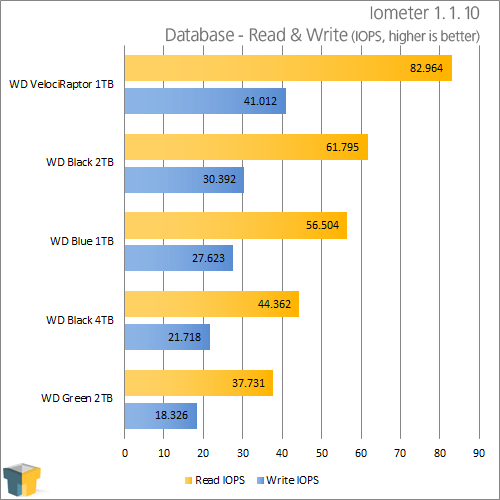

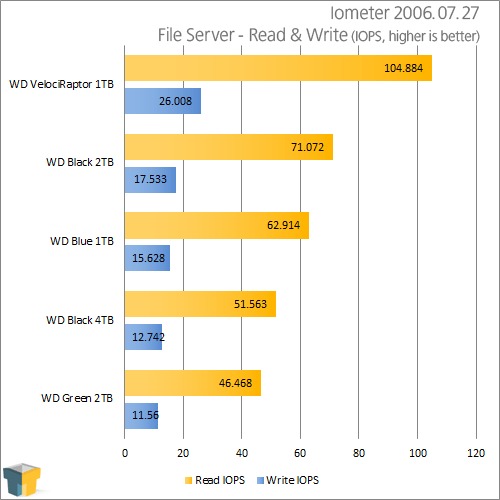

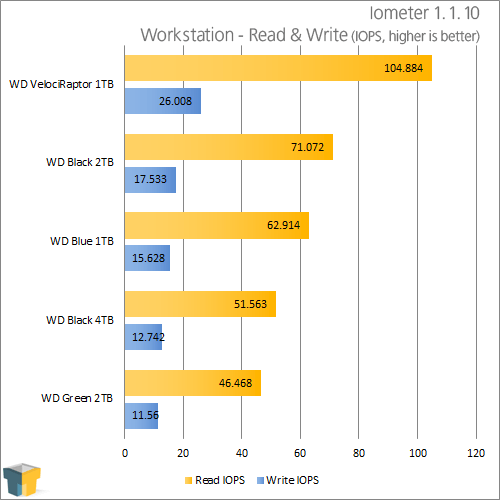

Because of the level of control Iometer offers, we’ve created profiles for three of the most popular workloads out there: Database, File Server and Workstation. Database uses chunk sizes of 8KB, with 67% read, along with 100% random coverage. File Server is the most robust of the group, as it features chunk sizes ranging from 512B to 64KB, in varying levels of access, but again with 100% random coverage. Lastly, Workstation focuses on 8KB chunks with 80% read and 80% random coverage.
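To make the read/write and random/sequential percentages more concrete, here is a small Python sketch that turns such a specification into a stream of operations. It is not Iometer's configuration format or its actual algorithm; `AccessSpec` and `generate_ops` are names we invented for illustration. Only the Database and Workstation profiles are modeled, since the exact File Server mix isn't spelled out above.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessSpec:
    name: str
    block_bytes: int
    read_pct: int    # share of operations that are reads
    random_pct: int  # share of operations that jump to a random offset


# Values taken from the profile descriptions in the text.
DATABASE = AccessSpec("Database", 8 * 1024, read_pct=67, random_pct=100)
WORKSTATION = AccessSpec("Workstation", 8 * 1024, read_pct=80, random_pct=80)


def generate_ops(spec: AccessSpec, count: int, span_blocks: int, seed: int = 0):
    """Return a list of (kind, byte_offset, was_random) tuples for the spec."""
    rng = random.Random(seed)  # seeded so every drive sees the same stream
    ops = []
    next_block = 0
    for _ in range(count):
        kind = "read" if rng.random() * 100 < spec.read_pct else "write"
        was_random = rng.random() * 100 < spec.random_pct
        # Random ops pick any block; sequential ops continue from the last one.
        block = rng.randrange(span_blocks) if was_random else next_block % span_blocks
        next_block = block + 1
        ops.append((kind, block * spec.block_bytes, was_random))
    return ops


if __name__ == "__main__":
    for spec in (DATABASE, WORKSTATION):
        ops = generate_ops(spec, count=10_000, span_blocks=1_000_000)
        reads = sum(1 for kind, _, _ in ops if kind == "read") / len(ops)
        rand = sum(1 for _, _, r in ops if r) / len(ops)
        print(f"{spec.name}: {reads:.0%} read, {rand:.0%} random")
```

Seeding the generator mirrors the point made earlier: every drive should be hit with the same sequence of requests, otherwise the results aren't comparable.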

Because neither these profiles nor their exact structure is easy to find on the Web, we’re hosting the profile here (right-click, save as) for those who want to benchmark their own drives with the exact same profiles we use. Once Iometer is loaded, you can import our profile.

We should note that as a whole, hard drive vendors are not that concerned with Iometer testing, and some even recommend against it. The reasons are simple: SSDs will clean house where raw IOPS performance is concerned, so it makes hard drive performance look poor. But we still value results gained with the program because when hard drives are as IOPS-strapped as they are, seeing one drive deliver higher numbers than another means that it’ll better handle the heaviest of workloads.

Continuing an obvious theme here, Iometer performance on the 4TB drive is, without question, lackluster in comparison to the 2TB. While not “slow”, per se, it doesn’t sit too far ahead of the energy-efficient Green model.

As mentioned briefly on the first page of this article, we’re likely to drop Iometer from our hard drive testing going forward. The reason boils down to the fact that it doesn’t properly support unpartitioned GPT hard drives, which means any drive larger than 2TB cannot be benchmarked in the same way as all of the other drives.

Why Iometer lacks such basic support, I’m unsure, but it forces us to choose between two methods of testing – neither of which is ideal. For the best results, Iometer should always be run on an unpartitioned drive, but when the drive uses GPT, the program doesn’t pick up on it. The first option is to benchmark the drive using MBR, but that splits the entire drive into two separate sections, and we can’t verify that this would give us reliable results. The second option is to format the drive, fill it up 100% with a test file, and then run things that way. The problem here is that it can take upwards of 8 hours for a test file to be created on a 4TB model – hardly ideal. Even then, we don’t like running Iometer on a partitioned drive.
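The numbers behind both workarounds are easy to check. The Python sketch below is a back-of-the-envelope calculation with names of our own choosing; it assumes MBR's 32-bit sector addressing with 512-byte logical sectors, and it treats the 8-hour figure above as exact to derive an implied average write rate.

```python
SECTOR_BYTES = 512           # assumption: 512-byte logical sectors
MBR_ADDRESS_BITS = 32        # MBR stores sector addresses in 32 bits
DRIVE_BYTES = 4 * 10**12     # 4TB as marketed (decimal terabytes)


def mbr_max_bytes(sector_bytes: int = SECTOR_BYTES) -> int:
    """Largest span addressable through a single MBR partition entry."""
    return (2 ** MBR_ADDRESS_BITS) * sector_bytes


def implied_write_mb_per_s(total_bytes: int, hours: float) -> float:
    """Average write rate needed to fill `total_bytes` in `hours`."""
    return total_bytes / (hours * 3600) / 1_000_000


if __name__ == "__main__":
    limit = mbr_max_bytes()
    print(f"MBR limit: {limit:,} bytes (~{limit / 10**12:.1f}TB)")
    print(f"Left over on a 4TB drive: ~{(DRIVE_BYTES - limit) / 10**12:.1f}TB")
    print(f"Filling 4TB in 8 hours: ~{implied_write_mb_per_s(DRIVE_BYTES, 8):.0f} MB/s average")
```

Under those assumptions, the MBR ceiling works out to roughly 2.2TB, which is why a 4TB drive ends up split in two, and an 8-hour fill corresponds to an average of about 139 MB/s sustained across the whole platter.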

Unless we discover a more suitable workaround, we’re likely to replace Iometer entirely with HD Tune’s Random Benchmark, as it also gives us results in IOPS (and MB/s). The fact that it tests multiple common cluster sizes makes it a much more suitable benchmark in our minds than Iometer. Iometer is still suitable for SSD testing, however. By the time SSDs weighing in at 2TB or higher are released, we can only hope that Iometer will finally support GPT.
