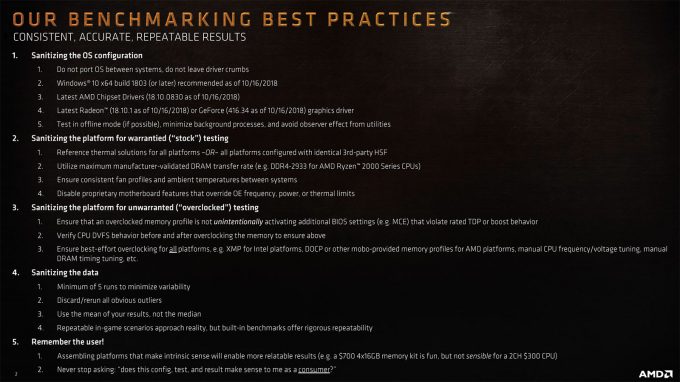

Still Not Sure How To Benchmark? AMD Has Your Set Of Testing Guidelines

Before the weekend hit, AMD shot over some slides that discuss its thoughts on the recent Intel + Principled Technologies performance debacle. I saw some AMD employees make comments on the matter after it happened, but it didn’t seem like the company was going to offer an official statement until now.

I’ll wager that overall, this isn’t too interesting. AMD essentially has the same thoughts that most others do. The testing that Intel paid for was conducted in such a way that the final report failed to inspire confidence in those who read it, something that didn’t improve even after Principled Technologies went public with its own thoughts (I’d still contend that it’s a misstep from the company, not something that should diminish its other work).

What I found interesting about AMD’s comments wasn’t what it thought about the matter, but that it offered up its own testing suggestions (seen above) to make sure reviewers and everyone else alike understand what it takes to conduct accurate testing. As someone who takes benchmarking very seriously, I scanned the list wondering if there would be anything I wouldn’t see eye-to-eye with AMD on, but overall, I can’t find anything worth tearing apart.

AMD suggests that an OS install should never be ported between vendors, and while that’s something we’ve adhered to, it’s good to hear that it’s standard practice. It might not be obvious that driver crumbs get left behind, but they can be, and they can waste your time once you’re forced to troubleshoot the problems they cause.

Of course, it’s suggested that drivers be up to date, and that thermal issues be ruled out. It’s also suggested that tests be done in offline mode, but in our case, we can’t do that because some applications require online access to authenticate (V-Ray, for example, will not work without an internet connection). That said, our tests have shown that if you prepare the OS properly, net access is not going to be a problem. Windows is the primary beast that must be contained, which means things like Windows Update need to be paused or disabled.

There are a few things I think were missed in these guidelines, but most of them are tied to taming Windows. It is mentioned that you should “minimize background practices”, but that’s putting it a little too simply. Having experienced issues first-hand, I’ve become accustomed to uninstalling as much bloatware as I can from the OS, with my PowerShell script including some 25+ entries. That includes Photos, which I’ve caught on multiple occasions using too much CPU power, even though the app had never been opened. Windows Search and Defender also need to be disabled, because both have a tendency to hog I/O at unexpected times.
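To give a rough idea of what that kind of cleanup can look like, here is a minimal sketch in Python that builds PowerShell removal commands around the `Get-AppxPackage` and `Remove-AppxPackage` cmdlets. It is not the script mentioned above: the `PACKAGE_PATTERNS` list and both function names are hypothetical, and the single `*Photos*` pattern is only an illustrative stand-in for a longer list. It defaults to a dry run that prints the commands rather than executing them.

```python
import subprocess

# Hypothetical package-name patterns for illustration only; the real
# 25+ entry list from the article is not shown, so none is assumed here.
PACKAGE_PATTERNS = ["*Photos*"]


def build_removal_command(pattern: str) -> list[str]:
    """Return the PowerShell invocation that removes packages matching `pattern`.

    Assumes the pattern contains no single quotes, since it is placed
    inside a single-quoted PowerShell string.
    """
    script = f"Get-AppxPackage -Name '{pattern}' | Remove-AppxPackage"
    return ["powershell", "-NoProfile", "-Command", script]


def remove_packages(patterns, dry_run=True):
    """Build one command per pattern; execute them only when dry_run is False."""
    commands = [build_removal_command(p) for p in patterns]
    for cmd in commands:
        if dry_run:
            # Show what would be run without touching the system.
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    remove_packages(PACKAGE_PATTERNS)
```

Running it as-is only prints the command. Passing `dry_run=False` would actually remove the matching packages for the current user, so it is worth reviewing the printed output on a test install first.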

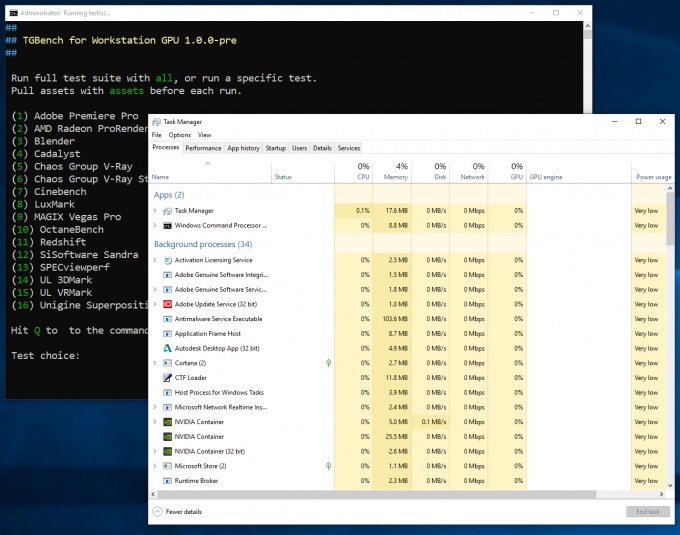

You should also hit up the “Startup” tab in Task Manager and disable every non-essential application. Even though ours is populated with seemingly required startup software from Adobe and Autodesk, neither is actually required for its related applications to work as normal.

Generally speaking, if you are completely set up and ready to benchmark, and your Task Manager looks similar to ours above, where there is no usage across the board, then you should be able to feel confident in your testing. It never hurts to check Task Manager on occasion, though. That’s how I ended up weeding out little issues over time. There are few things quite as unpredictable as software. It really makes you wish Windows simply had a proper benchmarking mode built in.
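In the absence of a built-in benchmarking mode, the "is the system quiet?" check can be approximated in a script. The Python sketch below waits until several consecutive CPU readings fall under a threshold before giving the go-ahead. Everything in it is an assumption made for illustration: the function names, the 2% threshold, and the five-sample window are arbitrary, and the CPU reading is supplied by the caller rather than taken from any particular tool.

```python
import time


def is_idle(samples, threshold=2.0):
    """True when there is at least one sample and all are at or below `threshold` (percent CPU)."""
    return bool(samples) and max(samples) <= threshold


def wait_until_idle(read_cpu_percent, needed=5, threshold=2.0,
                    interval=1.0, max_samples=60):
    """Poll `read_cpu_percent` until `needed` consecutive readings are idle.

    Returns True once the system looks quiet, or False after
    `max_samples` readings without a quiet window.
    """
    window = []
    for _ in range(max_samples):
        window.append(read_cpu_percent())
        window = window[-needed:]  # keep only the most recent readings
        if len(window) == needed and is_idle(window, threshold):
            return True
        time.sleep(interval)
    return False
```

One way to feed it real readings, assuming the third-party `psutil` package is installed, is `wait_until_idle(lambda: psutil.cpu_percent(interval=None))`. CPU usage alone says nothing about disk or network activity, so this is only a partial stand-in for glancing at Task Manager.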