AMD Introduces Radeon Instinct Machine-learning Accelerator Series

AMD has long offered graphics cards suitable for machine- (or deep-) learning, but as with other changes made since the introduction of the Radeon Technologies Group, the company has decided to launch future cards with such a specialized focus in their own lineup rather than attach them to its preexisting Radeon Pro line.

The result is Radeon Instinct, a rather bad-ass sounding series that couldn’t be more serious about what it sets out to do. Machine-learning requires a lot of horsepower – that can’t be overstated – and it also needs optimizations designed for that particular market. Those who purchase Radeon Instinct set out to crunch complex math problems as quickly and as reliably as possible.

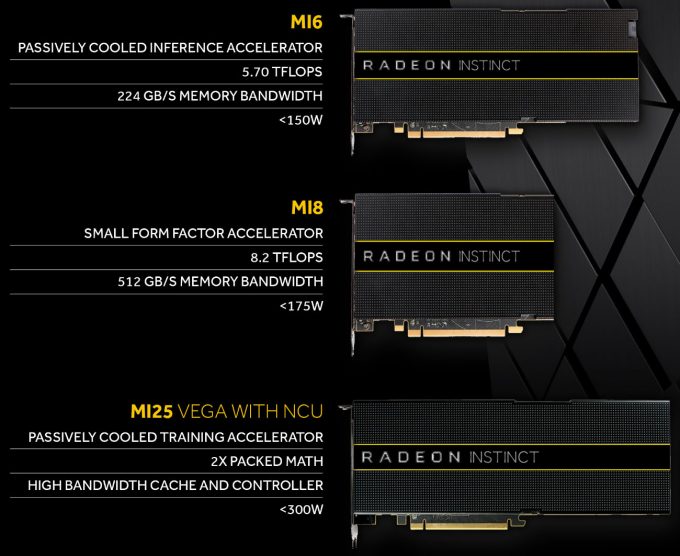

The Radeon Instinct line at this point in time includes three different models, and while the Radeon Pro’s three models are similar in architecture to one another, that can’t be said about Instinct: the three cards couldn’t be more different.

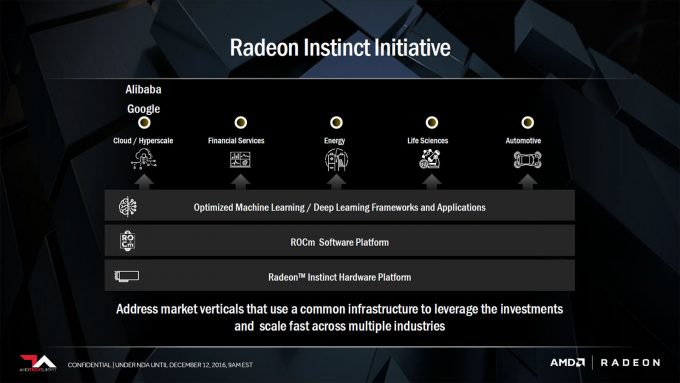

Radeon Instinct is designed 100% in-house by AMD, and it’s built to work with common workflows in the machine-learning space. At the forefront, it supports AMD’s own (and new) MIOpen GPU-accelerated library, which will allow developers to fine-tune their code to work at peak efficiency with Radeon Instinct. There’s also tight integration with ROCm frameworks, so users can expect Instinct to complement their workloads in Caffe, Google’s TensorFlow, and Torch 7.

So about those “different” models. As if the series didn’t sound cool enough, AMD’s gone ahead and named one of its new GPUs “MI6”, which might mean it’d be perfectly suitable for undercover agents. This card is built on the Polaris architecture, and based on the 5.7 TFLOPS peak FP16 rating, I’d wager that this is a customized RX 480 (much like the Radeon Pro WX 7100). Unlike the RX 480, the MI6 will include 16GB of VRAM.

The second card is the Radeon Instinct MI8, which takes advantage of the last-gen Fiji architecture to deliver a small form-factor GPU that boasts huge performance: 8.2 TFLOPS FP16, to be exact. This card is built on the same architecture as the Radeon R9 Nano, a card that’s perfectly suited for ITX systems. This does bring with it the odd design choice of including a meager 4GB of VRAM, although its HBM design caters to those who need huge bandwidth but can survive on the limited framebuffer.

Finally, the third card is likely to be the last one to be released (unless all release at once). It’s called the Radeon Instinct MI25, and it will take advantage of AMD’s next-generation Vega architecture. Notice a trend? The MI6 is about 6 TFLOPS, and the MI8 is just over 8 TFLOPS. Does that mean the MI25 is 25 TFLOPS? As hard as it is to believe: yes. How is that possible? We’re going to have to wait and see, apparently. Color us intrigued.

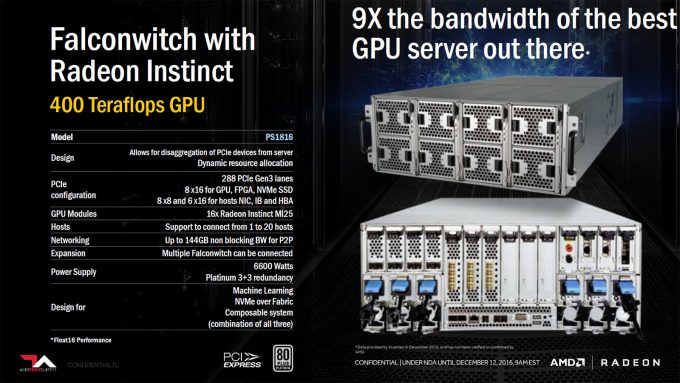

To give an idea of what Instinct users can expect, AMD gave some example builds that revolve around the cards. A server using Inventec’s K888 2U chassis could be equipped with 4x Radeon Instinct MI25 to deliver 100 TFLOPS of throughput. For those with even grander needs, 16x Radeon Instinct MI25s could be installed into an Inventec PS1816 chassis to push a ridiculous 400 TFLOPS of GPU throughput, with a total power draw of ~6.6kW.

If that somehow isn’t enough, you could combine 120 Radeon Instinct MI25s into a 6x 2U Inventec server (combined with 6x 4U PCIe switches) to enjoy performance of 3 petaflops. That should do the trick, right?
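For anyone who wants to check the math behind those builds, here's a minimal Python sketch. It assumes each MI25 delivers the roughly 25 TFLOPS implied by its name and simply multiplies by the card count, so the totals are theoretical peaks, not measured results. The function names and the `MI25_TFLOPS` constant are made up for illustration.

```python
# Assumed peak FP16 rating per MI25, in TFLOPS (implied by the model name,
# not a confirmed spec).
MI25_TFLOPS = 25.0


def aggregate_tflops(cards: int, per_card_tflops: float = MI25_TFLOPS) -> float:
    """Theoretical peak throughput: card count times per-card rating."""
    return cards * per_card_tflops


def gflops_per_watt(total_tflops: float, power_kw: float) -> float:
    """Peak efficiency in GFLOPS per watt (TFLOPS -> GFLOPS, kW -> W)."""
    return (total_tflops * 1000.0) / (power_kw * 1000.0)


if __name__ == "__main__":
    # Card counts taken from the example builds described above.
    for label, cards in [("4x MI25", 4), ("16x MI25", 16), ("120x MI25", 120)]:
        total = aggregate_tflops(cards)
        print(f"{label}: {total:.0f} TFLOPS ({total / 1000.0:.1f} PFLOPS)")

    # The 16-card build is quoted at roughly 6.6kW total power draw.
    efficiency = gflops_per_watt(aggregate_tflops(16), 6.6)
    print(f"16x MI25 at ~6.6kW: about {efficiency:.1f} GFLOPS per watt")
```

The numbers line up with AMD's figures: 4 cards give 100 TFLOPS, 16 give 400 TFLOPS, and 120 give 3,000 TFLOPS, or 3 petaflops. Real-world throughput would depend on how well a given workload scales across cards, which this sketch ignores.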

AMD anticipates that its Instinct GPU series will become available for purchase in the first half of 2017. You can read a bit more on the models here. While there, you might want to sign up for the newsletter (at the bottom) to make sure you don’t miss out on your chance to acquire one of these beauts as soon as they become available.