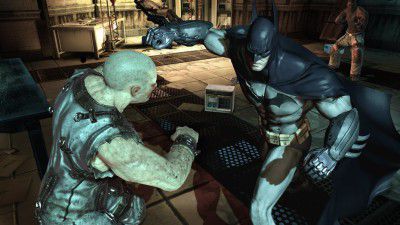

AMD vs. NVIDIA: Anti-Aliasing in Batman: Arkham Asylum

There’s an ongoing war of words between AMD and NVIDIA, and in some cases also Eidos and Rocksteady, over the recent PC hit, Batman: Arkham Asylum. The story surfaced well before the game’s launch, but spread like wildfire once the game reached consumers and gamers running an ATI card discovered a downside: no in-game anti-aliasing. AA is still possible on ATI cards, but applying it is far more complicated than flipping an in-game setting.

With all the details boiled down, it appears that NVIDIA is the one in the hot seat, as multiple sources, including developer Rocksteady, claim that the company blocked the in-game anti-aliasing code from being applied on non-NVIDIA cards. This of course enraged AMD and gamers alike. Adding even more fuel to the fire, simply changing an ATI card’s vendor ID to match NVIDIA’s enables the in-game anti-aliasing once again.
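To see why spoofing the vendor ID would be enough, here is a minimal sketch in Python of what a vendor-based gate might look like. This is not the game’s actual code: the function name is hypothetical and the logic is an assumption made purely for illustration. The only hard facts used are the PCI vendor IDs themselves (0x10DE for NVIDIA, 0x1002 for ATI/AMD).

```python
# PCI vendor IDs as registered with PCI-SIG.
NVIDIA_VENDOR_ID = 0x10DE
ATI_VENDOR_ID = 0x1002


def in_game_aa_offered(vendor_id: int) -> bool:
    """Hypothetical gate: offer the in-game AA option only to NVIDIA hardware.

    Assumption for illustration only; the real check in the game is not public.
    """
    return vendor_id == NVIDIA_VENDOR_ID


# An ATI card reporting its real vendor ID is refused the option...
print(in_game_aa_offered(ATI_VENDOR_ID))
# ...while any card reporting NVIDIA's ID passes the check.
print(in_game_aa_offered(NVIDIA_VENDOR_ID))
```

A check like this looks only at the reported ID, not at what the hardware can actually do, which is why changing the ID is enough to get past it.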

The story is long and complicated, but Theo Valich of Bright Side of News* has taken an exhaustive look at the situation from various angles, and has even gathered comments from developers with no connection to the game. Some have praise for NVIDIA, stating that its dedication to game developers is unparalleled. In some cases, NVIDIA has been known to provide developers with not only hardware, but also support, at no cost. AMD, on the other hand, seemingly does the bare minimum.

The case has a sticking point, though. Half a year before the game’s release, Rocksteady approached both AMD and NVIDIA regarding Unreal Engine 3’s lack of native anti-aliasing support. NVIDIA went ahead and wrote some code, while AMD decided to focus more on DirectX 11 titles, as the company knew it would be way ahead of the curve (and it is, although we’ve yet to see such titles). The argument is that if NVIDIA wrote the code required, why should it allow AMD’s graphics cards to take advantage of it? NVIDIA states that AMD didn’t do anything to help with the development of AA in the title, and that AMD is therefore the one at fault, not NVIDIA.

Believe it or not, although Unreal Engine 3 is one of the most robust engines on the market in terms of features and performance, it doesn’t natively support anti-aliasing. This is evident in almost any UE3-built game, including Unreal Tournament III. Players do have the option of forcing AA in the graphics driver’s control panel, but that’s a less-than-elegant solution.

Not much is likely to come from this, but two things do seem clear. For one, Unreal Engine should include native anti-aliasing support. It’s kind of absurd that the engine has been around for years and still lacks a feature that has existed for well over ten. Second, AMD really has to step up its game (no pun intended) when it comes to catering to game developers’ needs. I’ve heard this from game developers first-hand in the past, so it does seem to be a real issue.

What got AMD seriously aggravated was the fact that the first step of this code is done on all AMD hardware: “‘Amusingly’, it turns out that the first step is done for all hardware (even ours) whether AA is enabled or not! So it turns out that NVidia’s code for adding support for AA is running on our hardware all the time – even though we’re not being allowed to run the resolve code! So… They’ve not just tied a very ordinary implementation of AA to their h/w, but they’ve done it in a way which ends up slowing our hardware down (because we’re forced to write useless depth values to alpha most of the time…)!”

Source: Bright Side of News*
