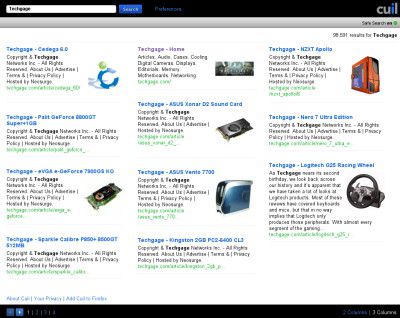

Cuil Taking Down Some Websites?

As far as search engines go, Cuil just can’t catch a break. It launched in late July to harsh criticism, and for good reason: almost any search query shot at the site was sure to provoke a ‘WTH?’ expression quickly. I do have to admit that the results have improved since then, at least for the queries I’m running, so things may be getting better.

Any newfound goodness in the search engine is likely to be overshadowed, though, since Cuil is apparently knocking websites offline, one by one, thanks to an ineffective but overzealous site crawler. Normally, a crawler follows the real URLs it finds on a site and makes sure they are spidered (as in, searchable from the engine). Cuil’s seems to work differently: it makes up its own URLs on the site to see if they exist, just in case.
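To make the "normal" behaviour concrete, here is a minimal sketch of link-based discovery, where the only URLs queued are ones a page actually links to. It is an illustration under stated assumptions, not how Cuil or any other engine is implemented: the `LinkCollector` class, the `discover_links` helper, and the `site.example` URLs are all hypothetical, and nothing is fetched over the network.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Hypothetical helper: collects the href of every <a> tag in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.add(urljoin(self.base_url, value))


def discover_links(base_url, html):
    """Return the same-site URLs that the page really links to, sorted."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    host = urlparse(base_url).netloc
    return sorted(u for u in collector.links if urlparse(u).netloc == host)


# Made-up page content, used only for illustration.
page = (
    '<a href="/news/">News</a> '
    '<a href="reviews/gpu.html">GPU review</a> '
    '<a href="http://other.example/">Elsewhere</a>'
)
found = discover_links("http://site.example/index.html", page)
print(found)
```

A crawler built this way only ever requests pages the site itself advertises. One that guesses URLs has no such limit on how many requests it can generate, which would fit the kind of load site owners are describing.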

That method might be fine if we all had monster servers and no bandwidth bills to worry about, but it has apparently been pummelling a few servers so hard that it’s taking them offline. One site admin was quoted as saying that the crawler hit their site upwards of 70,000 times! Ouch. Cuil is not so cool right now, and it desperately needs to fix things before people write it off entirely (if they haven’t already).

Website owners are also saying that the way Cuil indexes sites isn’t scientific in any way and is actually quite “amateurish.” According to those who experienced the onslaught from Twiceler, Cuil’s crawler, the bot seems to “randomly hit a site and continue to guess and generate pseudo-random URLs in an attempt to find pages that aren’t accessible by links. And by doing this, they completely bring a site down to where it’s not functional.”