Intel’s MLAA Techniques Use the CPU, not GPU

Graphics buzzwords – love ’em or hate ’em, we’re sure treated to a lot of them. One of the more recent, and most prevalent, is ‘Morphological Anti-Aliasing’. This is a unique technique that aims to provide quality AA effects in games where AA is either not possible in the engine, or just wasn’t a concern to the developer.

Unlike most other AA techniques, MLAA acts as a post-processing effect rather than one that executes at the same time as the frame rendering itself. That means that once a frame is rendered without AA, MLAA steps in and analyzes the entire picture and then works its magic. Sometimes the effect is less-than-ideal, but other times the result can be quite nice. Plus, as mentioned above, it can be forced in games where AA is not supported as an option in the menu.
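To make the "post-processing" idea concrete, here is a minimal sketch in Python. It is not Intel's or AMD's MLAA: real MLAA classifies the shapes of edges and computes coverage-based blend weights, while this toy filter only detects sharp brightness steps in a finished frame and softens the pixels that sit on them. The function names, the grayscale list-of-lists frame format, and the threshold value are all assumptions made for illustration.

```python
def find_edges(frame, threshold=0.1):
    """Flag pixels whose brightness differs sharply from any 4-neighbour."""
    h, w = len(frame), len(frame[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w:
                    if abs(frame[y][x] - frame[ny][nx]) > threshold:
                        edges[y][x] = True
    return edges


def smooth_edges(frame, threshold=0.1):
    """Post-process pass: blend each edge pixel with the mean of its neighbours.

    Works only on the already-rendered image; no scene geometry is needed.
    """
    h, w = len(frame), len(frame[0])
    edges = find_edges(frame, threshold)
    out = [row[:] for row in frame]  # copy so reads always see the original
    for y in range(h):
        for x in range(w):
            if not edges[y][x]:
                continue  # flat regions are left untouched
            neighbours = [
                frame[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < h and 0 <= nx < w
            ]
            mean = sum(neighbours) / len(neighbours)
            out[y][x] = 0.5 * frame[y][x] + 0.5 * mean
    return out


# A hard-edged diagonal "staircase": 1.0 is white, 0.0 is black.
staircase = [
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
]
smoothed = smooth_edges(staircase)
```

Because the filter only ever looks at the finished image, it can be applied to any game's output, which is why this family of techniques can be forced on where the engine offers no AA option.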

Though not the creator of the technique, AMD was the first to push MLAA with the launch of its Radeon HD 6000 graphics cards, and NVIDIA has also stepped into the game with its spin called ‘Subpixel Reconstruction Anti-Aliasing’. Intel, not to be left twiddling its thumbs, is also getting in on the MLAA action, and demonstrated its solution at the GDC Europe event, held earlier this week.

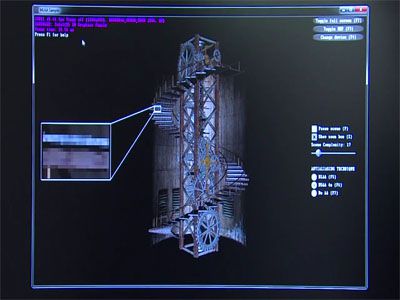

The difference with Intel’s MLAA solution should be somewhat obvious… the CPU is involved. In the company’s demonstration, the GPU handles the actual rendering while the CPU handles the AA calculations. When the two work as a team like this, performance is much improved, though raw FPS figures were not provided.
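One way to picture this division of labor is as a two-stage pipeline, where one stage produces frames and the other applies AA to them as they arrive. The sketch below is only an illustration of that idea, not Intel's implementation: `render_frame` and `antialias` are hypothetical stand-ins for the GPU and CPU work, and the hand-off through a small queue is an assumption about how such a pipeline might be arranged.

```python
import queue
import threading


def render_frame(index):
    # Hypothetical stand-in for the GPU: produce a frame with no AA applied.
    return {"index": index, "antialiased": False}


def antialias(frame):
    # Hypothetical stand-in for the CPU-side AA pass on a finished frame.
    return {"index": frame["index"], "antialiased": True}


def run_pipeline(frame_count):
    handoff = queue.Queue(maxsize=2)  # small buffer between the two stages
    finished = []

    def gpu_stage():
        for i in range(frame_count):
            handoff.put(render_frame(i))  # blocks if the CPU stage falls behind
        handoff.put(None)  # sentinel: no more frames

    def cpu_stage():
        while True:
            frame = handoff.get()
            if frame is None:
                break
            finished.append(antialias(frame))

    workers = [
        threading.Thread(target=gpu_stage),
        threading.Thread(target=cpu_stage),
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return finished


frames = run_pipeline(5)
```

The point of the arrangement is that the rendering stage can start on the next frame while the AA stage is still working on the previous one, so neither processor sits idle waiting for the other. Python threads are used here only to show the hand-off, not to measure performance.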

It could be surmised that Intel is jumping on a bandwagon it shouldn’t, given that the company deals only with integrated graphics, but the potential goes much further. In an article published to Intel’s Software Network website last month, full details about its MLAA implementations were given, along with the source code for those who want to investigate things further.

As AA in general is kind of a lost feature on an IGP, we asked Intel if this CPU+GPU tag-team technique could be done with a discrete GPU, and the company confirmed that it can. Unlike a technology such as QuickSync, Intel’s solution works through the normal DirectX pipeline, allowing both the CPU and GPU to work together in an efficient manner.

While Intel has proofs of concept of its CPU+GPU MLAA rendering in place, neither NVIDIA nor AMD is taking advantage of it… yet. With AMD also able to offer CPUs in addition to discrete GPUs, though, we might well see the company use that to its advantage in the future, which could force NVIDIA to team up with Intel so that we can get two truly competitive MLAA techniques out there.