NVIDIA’s Support Of FreeSync Seems Well Received So Far

Following years of gamer outcry, NVIDIA finally released a GeForce driver earlier this week that opens up support for VESA’s Adaptive-Sync, used by every AMD FreeSync monitor. From the get-go, the company was clear that it didn’t think most FreeSync monitors fit the bill, and backed that up by certifying only 12 of the 400 monitors it had tested by the time of the announcement.

As soon as NVIDIA let GeForce 417.71 loose, GeForce owners with FreeSync monitors rushed to test the feature. Almost immediately, the reaction online seemed to be very good. Even days later, we haven’t come across many issues, though many are bummed that HDMI isn’t supported right now. NVIDIA has said before that it’s evaluating adding HDMI support in the future (let’s hope it happens).

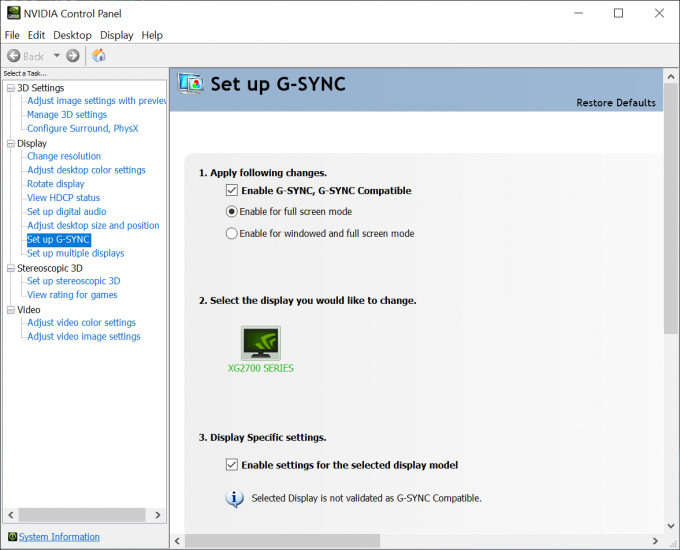

To use FreeSync on GeForce, you need at least a Pascal-based graphics card, and of course a FreeSync-compatible monitor. After installing the latest driver, you should see a G-SYNC menu on the left side of the NVIDIA Control Panel. If not, it likely means that the FreeSync functionality is disabled by default on your monitor, which would require you to scour through the on-screen display to rectify it.
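If you’d rather confirm the driver requirement from a script than by eyeballing the control panel, here is a minimal sketch. It assumes 417.71 is the minimum version (the release mentioned above) and that `nvidia-smi` is on your PATH; the function names are our own and purely illustrative. It only checks the driver version, not whether the GPU is Pascal or newer, nor whether FreeSync is enabled in the monitor’s on-screen display.

```python
import subprocess

# Assumption: 417.71 is the first GeForce driver with Adaptive-Sync support,
# as described in the article.
MIN_DRIVER = (417, 71)


def parse_driver_version(text):
    """Turn a version string such as '417.71' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_supports_adaptive_sync(version_text, minimum=MIN_DRIVER):
    """Return True if the given driver version is at least the minimum."""
    return parse_driver_version(version_text) >= minimum


def installed_driver_version():
    """Ask nvidia-smi for the installed driver version (first GPU only)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.splitlines()[0].strip()
```

On a machine with a GeForce card, `driver_supports_adaptive_sync(installed_driver_version())` should tell you whether the G-SYNC menu has a chance of appearing at all.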

Before getting to testing, you can also enable the G-SYNC indicator in the “Display” menu of the NVIDIA Control Panel, which will show you a simple overlay whenever G-SYNC is active and working. In our testing, this indicator did not show up in DirectX 12 games, but worked just fine in DX11 games. After you confirm that G-SYNC works, you’ll want to disable this setting.

We’ve only tested a single FreeSync monitor at this point, since our second one is buried too deep in storage to get at easily. The monitor in question is ViewSonic’s XG2700, a 4K panel capped at 60Hz. That refresh rate means this monitor isn’t the best one for this kind of testing, since tearing can get worse at higher frame rates, but it’s proven suitable enough for quick testing.

We started with NVIDIA’s pendulum demo, but the results on this particular monitor were not so hot. Even with G-SYNC active, and enabled in the demo, noticeable tearing could be seen. The V-Sync option, conversely, looked pretty great.

We also loaded up Forza Horizon 4, Battlefield V, Deus Ex: Mankind Divided, and Shadow of the Tomb Raider, with the last two proving to be the best examples of the bunch for this testing. In Tomb Raider, the beginning of the game has Lara climbing up through a narrow cave, and for whatever reason, it’s a great segment for catching tearing as it happens. In DE: MD, the simple panning of the scene also highlights the issue fairly well.

Ultimately, it became clear after testing that leaving G-SYNC enabled is the smart move. It’s sometimes hard to catch the improvements, but that’s because tearing is worse in some scenes than in others. Leaving G-SYNC on should mean that you simply don’t see tearing at all; instead, you’d just see smoother gameplay.

So far, we haven’t been able to find any complaints about G-SYNC on FreeSync going awry, so if you own a FreeSync monitor as well as a GeForce GPU, you should feel safe in testing the feature out. More information can be had in this Reddit thread, and also this community support document, which includes submitted information spanning a range of tested FreeSync monitors.