SPEC’s CPU2006 Added to Our CPU Test Suite

Alongside Intel’s launch of its Sandy Bridge-E processors next week, we’ll be unveiling something of our own: an overhauled CPU test suite. Our last major update occurred in late 2008, so there was quite a bit to tweak, add, remove, or replace in this iteration. Over the course of the coming week, I’ll be making a few posts in our news section like this one, explaining some of the new tests we’re introducing, why they make for a great CPU benchmark, and of course, why they’re relevant.

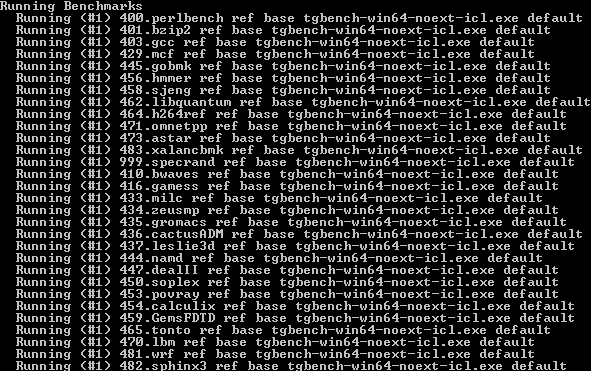

The first benchmark I wanted to talk about is also the most time-consuming and complicated: SPEC’s CPU2006. Don’t let the five-year-old name fool you; the benchmark received its latest update a mere two months ago. Because the suite is compiled from source before it runs, the results reflect both the compiler’s generated code and the machine’s execution speed. In addition to stressing the CPU, CPU2006 takes full advantage of the memory subsystem and the compiler.

You might not have heard of SPEC before, and if so, it’s likely because the non-profit group creates benchmarks targeted more towards the enterprise than the desktop. The folks responsible for each of SPEC’s benchmarks take things extremely seriously, and nothing gets released without extensive review. Many companies belong to SPEC as members, offering input and other insight. These include AMD, Intel, Apple, ASUS, HP, Fujitsu, IBM, Lenovo, Microsoft, NEC, NVIDIA, Novell, Red Hat, Super Micro, VMware, Dell and EMC.

SPEC’s CPU2006

I mentioned that SPEC’s focus is more on the enterprise, so why are we benchmarking with it? The reason is twofold. First, we want to cater more to our enterprise readers and deliver benchmark results relevant to their line of work. Second, the results can also benefit our regular readers, as CPU2006 specifically tests the integer and floating-point performance of our processors, and even the effect of select optimizations.
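For readers new to SPEC, it may help to know how those integer and floating-point results are expressed. SPEC reports CPU2006 results as ratios rather than raw run times: each benchmark’s run time on SPEC’s reference machine is divided by the time measured on the system under test, and the overall integer and floating-point scores are the geometric mean of those per-benchmark ratios. The short Python sketch below illustrates that arithmetic under simplifying assumptions. The helper names `spec_ratio` and `geometric_mean` are our own, the benchmark names and timings are hypothetical placeholders rather than any of our results, and it assumes the median of three runs is taken for each benchmark, as SPEC’s rules do for reportable runs.

```python
import math
from statistics import median


def spec_ratio(reference_seconds: float, run_seconds: list[float]) -> float:
    """Ratio for one benchmark: reference-machine time divided by the
    median of the measured run times (higher is better)."""
    if not run_seconds:
        raise ValueError("need at least one measured run")
    return reference_seconds / median(run_seconds)


def geometric_mean(ratios: list[float]) -> float:
    """Geometric mean of per-benchmark ratios, computed via logarithms
    so that multiplying many ratios together cannot overflow."""
    if not ratios:
        raise ValueError("need at least one ratio")
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


# Hypothetical timings in seconds, for illustration only (not measured results):
# name -> (reference-machine time, three measured run times)
hypothetical_runs = {
    "benchmark_a": (1000.0, [100.0, 102.0, 98.0]),
    "benchmark_b": (2000.0, [400.0, 410.0, 395.0]),
}

ratios = {name: spec_ratio(ref, runs) for name, (ref, runs) in hypothetical_runs.items()}
for name, ratio in ratios.items():
    print(f"{name}: ratio {ratio:.2f}")
print(f"overall (geometric mean): {geometric_mean(list(ratios.values())):.2f}")
```

With these placeholder numbers the two ratios work out to 10 and 5, for an overall score of roughly 7.07. Using a geometric mean rather than an arithmetic one keeps a single unusually high ratio from dominating the final figure.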

CPU2006 is about as complicated to explain as it is to run. With the extensive help of our code master Mario Figueiredo, we’ve prepared what we feel is the best possible configuration for use with the tool. Since we began evaluating the benchmark, we’ve switched compilers, altered our configuration files extensively, and finally settled on Intel Compiler v12 with Microsoft Visual Studio 2008 for our testing.

While many results on SPEC’s website were produced using per-architecture optimizations, we’ve decided to stick to a single profile that’s fair to all architectures, whether from AMD or Intel. In the future, we may do special runs to showcase what’s possible with SSE4.2 or AVX optimizations, but because very few developers would need to target either of these, we feel such runs could give one architecture a major boost that isn’t representative of real-world use.

CPU2006 is without question the most thorough benchmark we’ve ever used, but that’s not all that makes it unique; it also takes the longest to run. On an Intel Core i7-2600K, the entire suite took just over 13 hours to complete. However, we’ve opted to skip the “peak” portion of the test, as its usefulness for our purposes isn’t clear, and doing so cuts the benchmarking time almost in half.

Because lower-end CPUs would take well over an entire day to complete the suite, and because no one interested in CPU2006 results is likely to be considering low-end processors, we’ll likely skip this benchmark for mainstream and low-end parts.

Of course, SPEC’s CPU2006 isn’t the only benchmark we’re introducing to our revamped suite, so stay tuned as we discuss the others throughout the week. If you want to learn more about this benchmark, hit up SPEC’s site, and feel free to post any questions in our related comment thread.