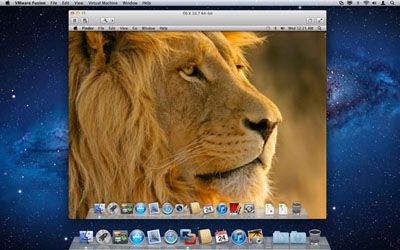

VMware Fusion Gains Ability to Run OS X 10.5-10.7 in a VM

When Apple released OS X 10.7 ‘Lion’ this past summer, I didn’t consider it to be the ‘major’ release the company was touting. There was, however, a rather significant feature that I somehow completely missed: the ability to run OS X in a virtual machine. If you’re a Windows or Linux user, don’t get too excited – Apple has a rule that OS X VMs can run only under an OS X host. As such, both VMware and Parallels updated their respective clients to support Lion not long after its launch.

Last week, though, VMware issued a Fusion update that allows both Leopard and Snow Leopard to be installed as well. This was a decision the company made itself, without the “a-OK” from Apple. Whether Apple will step in is anyone’s guess, but given that Leopard and Snow Leopard are aging, the company might just not care.

I do have to admit that I find Apple’s stance on VMs a little bizarre, however. It wasn’t until now that I realized OS X VMs have never been an option in the past – and if you’re a developer, or at least know what goes into being one, having no VM option can be a little maddening. There’s quite simply nothing like being able to test your software in a virtual machine – be it for bug-testing or simply trying your creation on different versions of the OS.

If OS X can be used as a guest under VMware Fusion, then it could also be used under Windows or Linux if Apple allowed it. Given that VMware Fusion and Parallels Desktop are produced only as Mac binaries, the chances of someone porting the ability to run OS X under a Windows or Linux host are not good. Nor is there much of a need for that among developers – only end-users, and where those are concerned, Apple is going to make sure you own a Mac if you want to use OS X.

Still, the ability to run OS X in a VM under OS X is a rather major move by Apple, though the saying, “It’s about time!” couldn’t be more true here.