From Rome To Milan: AMD’s Zen 3 EPYC 7003 CPUs Performance Tested

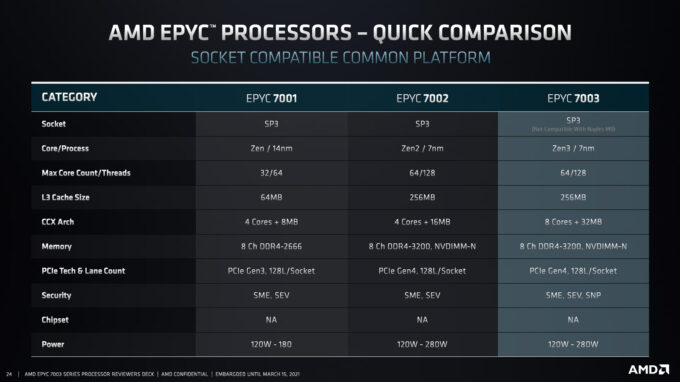

AMD has just launched its third-generation EPYC server processor series, codenamed Milan. This EPYC update brings AMD’s Zen 3 architecture to the data center, with improved efficiency, faster performance, and bolstered security. With some of the new chips in hand, we’re going to explore how they handle our most demanding workloads.

Page 1 – Introduction; A Look At Zen 3 Improvements

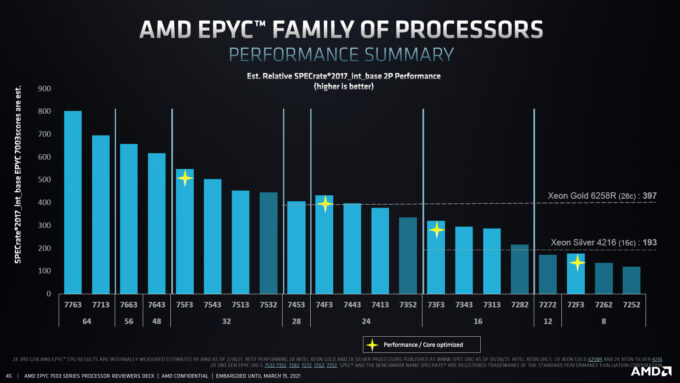

It was four years ago this month that AMD announced its EPYC processor series, marking the company’s triumphant return to the battle at the heart of the data center. Out of the gate, AMD offered 32-core options with dual-socket (2P) support, giving customers up to 128 threads in one node. In all this time, Intel’s mainstream Xeon line has been stuck with a 28-core top end, although its upcoming Ice Lake is set to finally shake that up.

Since the first-gen Naples launch in 2017, AMD has refined its Zen architecture with Rome, reducing latencies, improving performance, and delivering chips with twice as many cores. Today’s launch of third-gen EPYC (Milan) brings AMD’s most advanced architecture, Zen 3, to server chips for the first time.

When AMD launched its Rome parts in 2019, it didn’t have a 280W option from the get-go, but did deliver one later with the EPYC 7H12. The new third-gen line-up launches with not just one high-performance 280W part, but two. As with the previous generation, AMD has special “F” series SKUs that focus on not just reaching higher clock speeds, but also bundling more cache.

AMD EPYC 7003 Processor Line-up

| Model | Cores (Threads) | Base Clock (Peak) | L3 Cache | Min cTDP | Max cTDP | 1Ku Price |
|---|---|---|---|---|---|---|
| 7763 | 64C (128T) | 2.45 GHz (3.5) | 256MB | 225W | 280W | $7,890 |
| 7713 | 64C (128T) | 2.0 GHz (3.675) | 256MB | 225W | 240W | $7,060 |
| 7713P | 64C (128T) | 2.0 GHz (3.675) | 256MB | 225W | 240W | $5,010 |
| 7663 | 56C (112T) | 2.0 GHz (3.5) | 256MB | 225W | 240W | $6,366 |
| 7643 | 48C (96T) | 2.3 GHz (3.6) | 256MB | 225W | 240W | $4,995 |
| 7543 | 32C (64T) | 2.8 GHz (3.7) | 256MB | 225W | 240W | $3,761 |
| 7543P | 32C (64T) | 2.8 GHz (3.7) | 256MB | 225W | 240W | $2,730 |
| 7513 | 32C (64T) | 2.6 GHz (3.65) | 128MB | 165W | 200W | $2,840 |
| 7453 | 28C (56T) | 2.75 GHz (3.45) | 64MB | 225W | 240W | $1,570 |
| 7443 | 24C (48T) | 2.85 GHz (4.0) | 128MB | 165W | 200W | $2,010 |
| 7443P | 24C (48T) | 2.85 GHz (4.0) | 128MB | 165W | 200W | $1,337 |
| 7413 | 24C (48T) | 2.65 GHz (3.6) | 128MB | 165W | 200W | $1,825 |
| 7343 | 16C (32T) | 3.2 GHz (3.9) | 128MB | 165W | 200W | $1,565 |
| 7313 | 16C (32T) | 3.0 GHz (3.7) | 128MB | 155W | 180W | $1,083 |
| 7313P | 16C (32T) | 3.0 GHz (3.7) | 128MB | 155W | 180W | $913 |
| “F” Series Processors | | | | | | |
| 75F3 | 32C (64T) | 2.95 GHz (4.0) | 256MB | 225W | 280W | $4,860 |
| 74F3 | 24C (48T) | 3.2 GHz (4.0) | 256MB | 225W | 240W | $2,900 |
| 73F3 | 16C (32T) | 3.5 GHz (4.0) | 256MB | 225W | 240W | $3,521 |
| 72F3 | 8C (16T) | 3.7 GHz (4.1) | 256MB | 165W | 200W | $2,468 |

All EPYC 7003 SKUs support 128 PCIe 4.0 lanes and 8-channel memory, with up to 4TB of DDR4-3200 (using 128GB DIMMs).
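The table lists 1Ku prices (the per-unit price when ordered in 1,000-unit quantities) but not a per-core cost, which is easy to derive. The minimal Python sketch below does that for a handful of SKUs; the `SKUS` dictionary and `price_per_core` helper are our own illustrative names, and list pricing is not necessarily what any given buyer pays.

```python
# Per-core list price for a few EPYC 7003 SKUs.
# (cores, 1Ku price in USD), copied from the line-up table.
SKUS = {
    "7763": (64, 7890),
    "7713": (64, 7060),
    "7713P": (64, 5010),
    "7543": (32, 3761),
    "7543P": (32, 2730),
    "75F3": (32, 4860),
    "72F3": (8, 2468),
}


def price_per_core(cores: int, price_usd: int) -> float:
    """Return the 1Ku list price divided evenly across all cores."""
    return price_usd / cores


for model, (cores, price) in SKUS.items():
    print(f"{model:>6}: ${price_per_core(cores, price):,.2f} per core")
```

Run against the table, this highlights how aggressively the single-socket P models are priced: the 7713P works out to about 29% less per core than the otherwise identical 7713.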

AMD had more eight-core SKUs last generation, but the one that remains is more interesting than it first appears. All of the “F” series chips prioritize clock speed, but the eight-core 72F3 also packs 256MB of cache under its hood, or 32MB per core. That doubles the cache of the best eight-core options from last generation, making the 72F3 a genuinely intriguing chip.
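The per-core figure is simple division, and it shows how the cache-to-core ratio climbs as the “F” series core count drops. This is a minimal sketch with our own illustrative names; it computes a plain average, whereas in hardware the L3 is shared within each CCX, so treat it as a rough comparison rather than a model of how any one core sees the cache.

```python
# L3 cache per core for the "F" series SKUs.
# (cores, total L3 in MB), copied from the line-up table.
F_SERIES = {
    "75F3": (32, 256),
    "74F3": (24, 256),
    "73F3": (16, 256),
    "72F3": (8, 256),
}


def cache_per_core_mb(cores: int, l3_mb: int) -> float:
    """Average L3 per core: total cache divided evenly across active cores."""
    return l3_mb / cores


for model, (cores, l3) in F_SERIES.items():
    print(f"{model}: {cache_per_core_mb(cores, l3):.2f} MB of L3 per core")
```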

At the top of AMD’s line-up, the EPYC 7763 effectively succeeds the 7H12 as AMD’s most powerful offering, sharing its peak TDP of 280W. All of these EPYC chips have configurable TDPs, and both 280W SKUs in the current line-up can be tuned down to 225W. While many TDPs carry over from last generation, AMD has improved clock speeds in every case we can see.

Another interesting SKU in this line-up is the 7663, the first 56-core EPYC model. With 112 threads, the 7663 matches the number of threads available in a top-spec 2P Intel server, but with just a single processor. You could say AMD has a bit of a stranglehold on the core count game right now.
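The thread-count comparison comes down to cores × sockets × threads per core. A minimal sketch, assuming 2-way SMT on both platforms (AMD’s SMT and Intel’s Hyper-Threading each expose two threads per core); the helper name is our own.

```python
SMT_THREADS_PER_CORE = 2  # 2-way SMT on both EPYC and Xeon


def threads_per_node(cores_per_socket: int, sockets: int) -> int:
    """Hardware threads in one node with every socket populated."""
    return cores_per_socket * sockets * SMT_THREADS_PER_CORE


epyc_7663_1p = threads_per_node(56, 1)   # one 56-core EPYC 7663
xeon_28c_2p = threads_per_node(28, 2)    # two 28-core Xeons
naples_32c_2p = threads_per_node(32, 2)  # two first-gen 32-core EPYCs

print(epyc_7663_1p, xeon_28c_2p, naples_32c_2p)
```

The same helper reproduces the 128-thread first-gen figure from the introduction, and shows that one 7663 already equals a fully populated 2P 28-core Xeon node.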

A huge number of cores may be great for certain workloads, but AMD naturally fills out its EPYC line-up with a wide range of SKUs to suit many different needs.

As with the previous-gen EPYC processors, these latest models support up to 32x 128GB L/RDIMMs for a total of 4TB of DDR4-3200 memory. The eight-channel memory controller returns as well, delivering some seriously impressive bandwidth, as we’ll see later. Lastly, all non-P third-gen EPYC SKUs can be used in either single- or dual-socket configurations, while P models are restricted to single-socket use.
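Here is the arithmetic behind the 4TB ceiling and the theoretical peak bandwidth of an eight-channel DDR4-3200 controller. This is a back-of-the-envelope sketch, not a measurement: it takes the 32 × 128GB figure as quoted, assumes a 64-bit (8-byte) data path per channel, ignores ECC bits and protocol overheads, and gives a per-socket peak. Sustained bandwidth in practice is always lower than this figure.

```python
# Figures quoted in the text; decimal units (1 GB/s = 1,000 MB/s).
DIMM_COUNT = 32            # "up to 32x 128GB L/RDIMM"
DIMM_SIZE_GB = 128
CHANNELS = 8               # eight-channel memory controller per socket
TRANSFER_RATE_MT_S = 3200  # DDR4-3200: 3,200 mega-transfers per second
BUS_WIDTH_BYTES = 8        # 64-bit data bus per DDR4 channel (ECC excluded)

capacity_gb = DIMM_COUNT * DIMM_SIZE_GB                  # 4,096 GB, i.e. 4TB
per_channel_mb_s = TRANSFER_RATE_MT_S * BUS_WIDTH_BYTES  # bytes per transfer x rate
peak_mb_s = CHANNELS * per_channel_mb_s                  # theoretical, per socket

print(f"Capacity: {capacity_gb:,} GB")
print(f"Per channel: {per_channel_mb_s / 1000} GB/s")
print(f"Theoretical peak: {peak_mb_s / 1000} GB/s per socket")
```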

EPYC Milan Architecture Updates

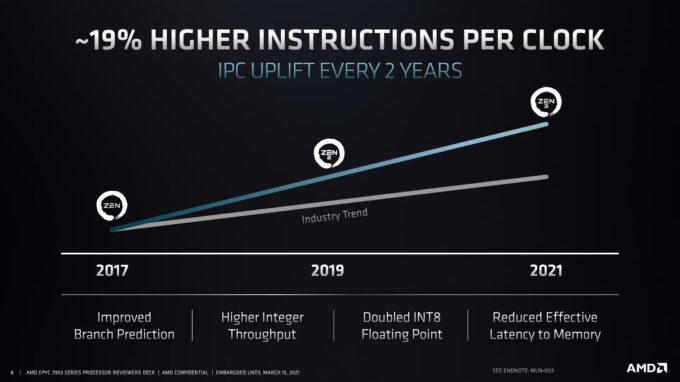

Since the launch of its Zen architecture in 2017, AMD has made great strides in improving its chips’ instructions-per-clock (IPC) performance, with notable gains from generation to generation. This third-gen Zen core, redesigned “from the ground up,” delivers the biggest improvement yet, with AMD now stomping on Intel’s previous IPC leadership.

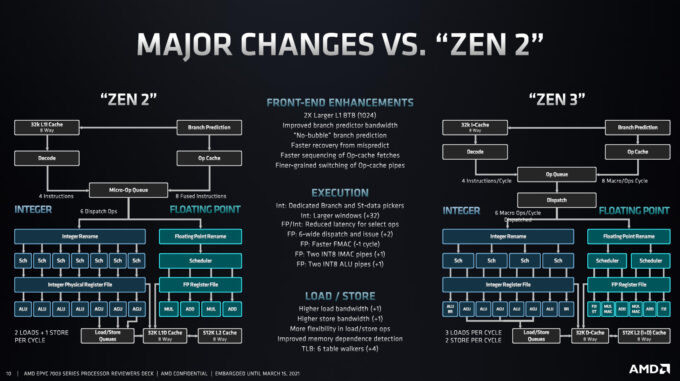

As with the previous generation of EPYC chips, third-gen Milan gives its beefier models 256MB of L3 cache, with up to 32MB available to a single core (as with the 72F3). That’s made possible by AMD’s move to put eight cores (up from four) into each CCX, so every core can reach the full 32MB of L3 shared within its CCX.

AMD says that Milan brings a 19% IPC improvement, and based on what we’ve seen previously from AMD’s desktop and workstation Zen 3 CPUs, we believe that to be a pretty accurate statement. Much has been polished with this new design, including faster integer throughput, improved TAGE branch prediction, and a notable reduction in memory latencies.

AMD made some big improvements to its branch predictor between Zen 1 and 2, and Zen 3 continues these enhancements. For starters, the L1 BTB has doubled in size to 1,024 entries, and thanks to a new “no bubble” mechanism, branch targets can be pulled every single cycle. Further, improvements have been made to reduce latency penalties from branch mispredictions.
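A branch target buffer is essentially a small cache that maps the address of a taken branch to the address it jumped to, so the front end can fetch from the target before the branch is even decoded. The toy Python model below is purely illustrative: the 1,024-entry default matches the L1 BTB size quoted above, but the direct-mapped indexing, tagging, and replacement are our own simplifications and not a description of AMD’s design.

```python
from typing import Optional


class ToyBTB:
    """Toy direct-mapped branch target buffer (illustrative, not AMD's design)."""

    def __init__(self, entries: int = 1024) -> None:
        if entries <= 0 or entries & (entries - 1):
            raise ValueError("entries must be a positive power of two")
        self.entries = entries
        self.tags: list[Optional[int]] = [None] * entries
        self.targets: list[int] = [0] * entries

    def _index_and_tag(self, pc: int) -> tuple[int, int]:
        # Low bits of the branch address pick a slot; the remaining
        # high bits are kept as a tag to detect aliasing branches.
        return pc % self.entries, pc // self.entries

    def predict(self, pc: int) -> Optional[int]:
        """Return the cached target for the branch at `pc`, or None on a miss."""
        idx, tag = self._index_and_tag(pc)
        return self.targets[idx] if self.tags[idx] == tag else None

    def update(self, pc: int, target: int) -> None:
        """Record a taken branch's resolved target, evicting any previous entry."""
        idx, tag = self._index_and_tag(pc)
        self.tags[idx] = tag
        self.targets[idx] = target


btb = ToyBTB()
btb.update(0x4010, 0x5000)
print(hex(btb.predict(0x4010)))  # hit: the cached target
btb.update(0x4010 + 1024, 0x6000)  # a different branch mapping to the same slot
print(btb.predict(0x4010))       # miss: the first entry was evicted
```

In a scheme like this, a larger table means fewer hot branches collide in the same slot, which is the intuition behind doubling the L1 BTB.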

On the execution side, both the integer and floating-point units have seen many improvements. The integer side gains dedicated branch and data store pickers, while the re-order buffer gains a larger window for more instructions in flight. On the floating-point side, throughput doubles for the IMAC and ALU pipes, FMAC latency drops by one cycle (from five to four), and dispatch and issue have been bumped from 2- to 6-wide compared to Zen 2.
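To see why a single cycle of FMAC latency matters, consider a loop where each fused multiply-add needs the result of the one before it, such as a running accumulation. The sketch below is a first-order model under stated assumptions: the chain is purely latency-bound, and pipelining of independent operations, other execution pipes, and loop overhead are all ignored. Code with many independent FMAs in flight would see a smaller benefit.

```python
def dependent_chain_cycles(num_ops: int, latency_cycles: int) -> int:
    """Cycles for a chain of FMAs where each op waits on the previous result.

    Latency-bound model only: ignores throughput, other pipes, and overheads.
    """
    return num_ops * latency_cycles


zen2_cycles = dependent_chain_cycles(1_000, 5)  # five-cycle FMAC
zen3_cycles = dependent_chain_cycles(1_000, 4)  # four-cycle FMAC

print(zen2_cycles, zen3_cycles, f"{1 - zen3_cycles / zen2_cycles:.0%} fewer cycles")
```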

The load/store unit on Zen 3 can now handle more memory operations per cycle: three loads and two stores. The TLB has also seen a big improvement, with four additional page table walkers (six in total) that reduce latency for sparse and random memory requests.

All of these changes contribute to the +19% IPC boost, highlighting how much work across many different parts of an architecture it takes to accomplish such a feat.

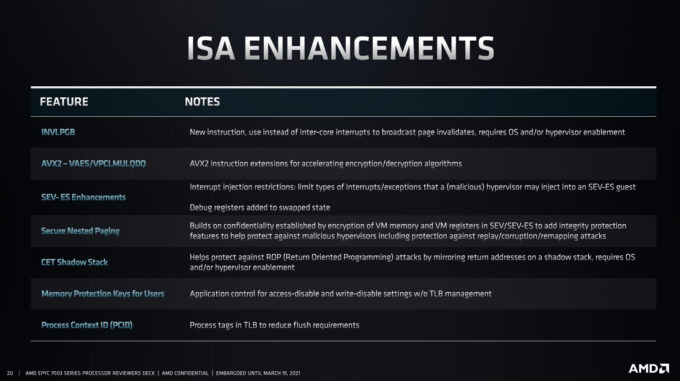

One of the most notable additions to EPYC’s Milan generation is Secure Nested Paging, which AMD delivers as SEV-SNP, an extension of its Secure Encrypted Virtualization (SEV) technology. SEV-SNP adds memory integrity protection that helps shield guest instances from untrusted hypervisors. The company has also added Shadow Stack to protect against ROP (return-oriented programming) attacks.

AMD has also introduced a new instruction, Invalidate Page Broadcast (INVLPGB), which avoids using inter-processor interrupts (IPIs) for TLB shootdowns; instead, the invalidation is broadcast across the fabric, ultimately increasing efficiency. Encryption acceleration has been bolstered as well, with the VAES and VPCLMULQDQ extensions now supporting 256-bit (AVX2-width) data.
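VPCLMULQDQ is the vector form of PCLMULQDQ, a carry-less multiply widely used to accelerate GHASH in AES-GCM and CRC calculations. Carry-less multiplication works like schoolbook binary multiplication, except partial products are combined with XOR instead of addition, so no carries propagate. The pure-Python sketch below shows the operation itself; it models none of the hardware, the function names are our own, and representing each 128-bit lane by the single 64-bit operand the instruction’s immediate would select is a simplification.

```python
def clmul64(a: int, b: int) -> int:
    """Carry-less product of two unsigned 64-bit values (up to 127 bits wide)."""
    if not (0 <= a < 1 << 64 and 0 <= b < 1 << 64):
        raise ValueError("operands must be unsigned 64-bit integers")
    result = 0
    for bit in range(64):
        if (b >> bit) & 1:
            # XOR in a shifted copy of `a` instead of adding it: no carries.
            result ^= a << bit
    return result


def vclmul_256(a_lanes: list[int], b_lanes: list[int]) -> list[int]:
    """256-bit style: one independent carry-less multiply per 128-bit lane.

    Each lane is represented here by the one 64-bit operand selected for it.
    """
    if len(a_lanes) != 2 or len(b_lanes) != 2:
        raise ValueError("a 256-bit register holds two 128-bit lanes")
    return [clmul64(a, b) for a, b in zip(a_lanes, b_lanes)]


print(clmul64(0b11, 0b11))  # ordinary 3 * 3 = 9, carry-less gives 0b101
print(vclmul_256([3, 2], [3, 3]))
```

With 256-bit support, one instruction performs two of these multiplies at once, which is where the throughput gain for encryption workloads comes from.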

Over the next three pages, we’re going to explore performance from a few of AMD’s new EPYC chips in a variety of tests, comparing them against the last-gen 64-core EPYC 7742 and Intel’s 28-core Xeon Platinum 8280. Let’s move on:
