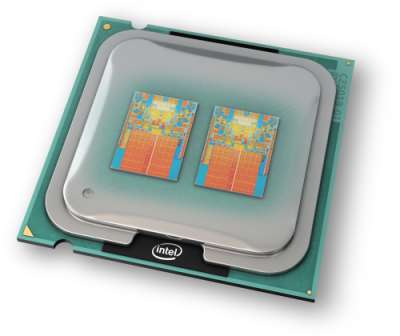

Intel Core 2 Quad Q9450 2.66GHz

The wait for an affordable 45nm Quad-Core is now over, and the Q9450 promises to become the ultimate choice of the new offerings. It’s not much slower than the QX9650, offers 12MB of cache and as expected, has some fantastic overclocking ability. How does 3.44GHz stable sound?

Page 1 – Introduction

When Intel launched their first 45nm processor last November, the QX9650, people might have been excited, but not everyone wanted to shell out a premium to have the fastest piece of hardware available. So, most sat around and waited in the hope of seeing more affordable Quad-Cores hit the market.

January came, and at CES we found out that the new CPUs were still not ready for launch. This was a blow to those who were already holding off on their upgrade or new build. But fast forward almost four months, and finding a 45nm Quad-Core is easier than ever.

It just might not be the Q9450.

Because the new CPUs are in such high demand, it’s hard to find the top two mid-range models in stock anywhere. If you’re looking for an entry point into 45nm Quad-Core territory, you’ll be pleased to know that the Q9300 is readily available at most popular e-tailers.

But we’re here today to take a look at the Q9450, the mid-range offering of the mid-range offerings. Clocked at a healthy 2.66GHz, it looks to be an ideal chip for those looking to piece together a fast computer without breaking the bank. For those curious about overclocking, don’t worry, we’ve got you covered.

Closer Look at the Core 2 Quad Q9450

As mentioned above, the Q9450 is one popular chip right now, and because of that, prices tend to be inflated, which is unfortunate. The official price from Intel is $316, but many e-tailers are selling it for well over $400, when it should be closer to $350 – $360. The situation is even worse for the Q9550, which seems to be even rarer.
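To put those numbers in perspective, here is a quick back-of-the-envelope sketch of the premium over Intel's 1Ku price. The prices are the ones quoted above; the function and variable names are made up for illustration, and since the street price is "well over $400", the first figure is only a lower bound.

```python
# Prices quoted in the paragraph above (USD).
OFFICIAL_1KU = 316           # Intel's official 1,000-unit price
STREET_LOW = 400             # "well over $400", so treat this as a lower bound
EXPECTED_RANGE = (350, 360)  # where the article says retail should sit


def markup_pct(retail: float, base: float = OFFICIAL_1KU) -> float:
    """Return the retail premium over the base price, as a percentage."""
    return (retail - base) / base * 100


for label, price in [
    ("street (at least)", STREET_LOW),
    ("expected low", EXPECTED_RANGE[0]),
    ("expected high", EXPECTED_RANGE[1]),
]:
    print(f"{label:>18}: ${price} -> {markup_pct(price):.1f}% over 1Ku")
```

By this rough math, a $400 street price is a premium of at least 26% or so, against roughly 11% to 14% for the $350 – $360 range.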

If you want a new Quad-Core and happen to want it now, then the Q9300 would make for a great choice. I haven’t touched one personally, but I know what kind of performance it pushes out, and given that it has good overclocking ability, it’s hard to go wrong. Plus, because it’s not suffering any sort of shortage, prices are ideal, at around $285.

| Processor Name | Cores | Clock | Cache | FSB | TDP | 1Ku Price | Available |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Intel Core 2 Extreme QX9775 | 4 | 3.20GHz | 2 x 6MB | 1600MHz | 150W | $1,499 | Now |
| Intel Core 2 Extreme QX9770 | 4 | 3.20GHz | 2 x 6MB | 1600MHz | 136W | $1,399 | Now |
| Intel Core 2 Extreme QX9650 | 4 | 3.0GHz | 2 x 6MB | 1333MHz | 130W | $999 | Now |
| Intel Core 2 Quad Q9550 | 4 | 2.83GHz | 2 x 6MB | 1333MHz | 95W | $530 | Now |
| Intel Core 2 Quad Q9450 | 4 | 2.66GHz | 2 x 6MB | 1333MHz | 95W | $316 | Now |
| Intel Core 2 Quad Q9300 | 4 | 2.5GHz | 2 x 3MB | 1333MHz | 95W | $266 | Now |
| Intel Core 2 Duo E8500 | 2 | 3.16GHz | 6MB | 1333MHz | 65W | $266 | Now |
| Intel Core 2 Duo E8400 | 2 | 3.00GHz | 6MB | 1333MHz | 65W | $183 | Now |
| Intel Core 2 Duo E8200 | 2 | 2.66GHz | 6MB | 1333MHz | 65W | $163 | Now |
| Intel Core 2 Duo E8190 | 2 | 2.66GHz | 6MB | 1333MHz | 65W | $163 | Now |
| Intel Core 2 Duo E7200 | 2 | 2.53GHz | 3MB | 1066MHz | 65W | ~$133 | May 2008 |

Where the Q9450 sits well, though, is with its robust cache, at 12MB. The Q9300, on the other hand, cuts that in half. The benefits of all that extra cache are difficult to judge from a simple specs standpoint, but it’s a topic I’d like to delve into in a future article.
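For a rough on-paper view of what the extra money buys, the sketch below compares the Q9450 and Q9300 using only the clock, cache and 1Ku price figures from the table above. The dictionary layout and function name are illustrative assumptions, and this says nothing about real-world performance, which is what the benchmarks are for.

```python
# Specs copied from the table above; the dict layout and names are illustrative only.
CHIPS = {
    "Q9450": {"clock_ghz": 2.66, "l2_mb": 2 * 6, "price_1ku": 316},
    "Q9300": {"clock_ghz": 2.50, "l2_mb": 2 * 3, "price_1ku": 266},
}


def compare(a: str, b: str) -> dict:
    """Return how chip `a` differs from chip `b` in cache, clock and price."""
    x, y = CHIPS[a], CHIPS[b]
    return {
        "cache_ratio": x["l2_mb"] / y["l2_mb"],
        "clock_gain_pct": (x["clock_ghz"] - y["clock_ghz"]) / y["clock_ghz"] * 100,
        "price_gain_pct": (x["price_1ku"] - y["price_1ku"]) / y["price_1ku"] * 100,
    }


diff = compare("Q9450", "Q9300")
print(f"Cache:     {diff['cache_ratio']:.1f}x")
print(f"Clock:     +{diff['clock_gain_pct']:.1f}%")
print(f"1Ku price: +{diff['price_gain_pct']:.1f}%")
```

On paper, that works out to twice the cache and about 6% more clock speed for roughly 19% more money at 1Ku pricing.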

So, let’s get right to some benchmarking! On the following page, we explain our testing methodology in depth, then we’ll jump into our SYSmark and PCMark tests, followed by many more.
