NVIDIA GeForce GTX 660 2GB Review

With the release of its GeForce GTX 660, NVIDIA has delivered what we feel to be one of the most attractive Kepler offerings to date. It may be a step down from the Ti edition released last month, but the GTX 660 delivers great performance across the board, and priced at $229, it won’t break the bank.

Page 1 – Introduction

NVIDIA is good at many different things, but where the company truly excels is in its ability to fill every conceivable void in the GPU market. Have $100? There’s a card for you. Have $150? Ditto. Have $1,000? Can I have it?

Just a month ago, NVIDIA released its GeForce GTX 660 Ti, which was received with considerable critical acclaim. Specs-wise, the card isn’t too far off from the GTX 670, which costs $100 more. But at $300, the card wasn’t exactly “affordable” by all standards. NVIDIA knew it had a duty to finally deliver a mainstream Kepler part as close to $200 as possible, and that’s resulted in the $229 non-Ti GTX 660.

The GTX 660 is equipped with 960 cores, vs. 1344 with the Ti. That comparison alone could give us an idea of what to expect here. Well, it would be that easy if NVIDIA, in its usual way, hadn’t given the core clock a nice boost on the non-Ti edition. Fewer cores, but +65MHz to the clock. An interesting move, and not one that anyone will complain about.
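As a rough, back-of-the-envelope way to read those two numbers together, core count can be multiplied by core clock to get a relative shader-throughput figure. This is only a sketch under a simplifying assumption: that performance scales linearly with cores × clock, which ignores boost behavior, memory bandwidth and the differences between the two chips. The function name is purely illustrative; the core counts and base clocks are the ones quoted in this review.

```python
def relative_throughput(cores: int, core_mhz: int) -> int:
    """Crude proxy for shader throughput: core count times base clock (MHz).

    Assumption: linear scaling with cores x clock. Real-world results
    will differ, so treat this as an on-paper comparison only.
    """
    return cores * core_mhz


gtx_660 = relative_throughput(960, 980)      # non-Ti: 960 cores at 980MHz
gtx_660_ti = relative_throughput(1344, 915)  # Ti: 1344 cores at 915MHz

core_ratio = 960 / 1344          # cores alone
ratio = gtx_660 / gtx_660_ti     # cores x clock

print(f"Cores only:    {core_ratio:.1%}")
print(f"Cores x clock: {ratio:.1%}")
```

On paper, then, the non-Ti has about 71% of the Ti's cores, and the clock bump lifts that to roughly 76.5% once frequency is factored in. The benchmarks are what actually settle the question.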

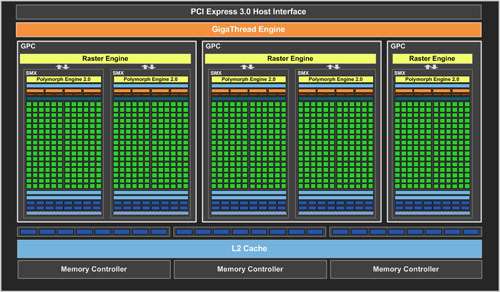

Memory density and general architecture layout remain similar between the two cards, but where the 660 Ti is based on the GK104 chip, this non-Ti version uses GK106. Whereas a typical GPC (Graphics Processing Cluster) holds two SMX units, GK106 splits one right down the middle, as the following diagram shows:

This is an odd design, but won’t result in some sort of bottleneck as all SMX modules interface with their respective raster engine rather than each other.

Though this non-Ti edition of the GTX 660 drops the core count quite significantly, its increase in core frequency negates some of the additional power savings we would have seen. For that reason, the non-Ti is rated at 140W, vs. 150W of the Ti.

| Model | Cores | Core MHz | Memory | Mem MHz | Mem Bus | TDP |
| --- | --- | --- | --- | --- | --- | --- |
| GeForce GTX 690 | 3072 | 915 | 2x 2048MB | 6008 | 256-bit | 300W |
| GeForce GTX 680 | 1536 | 1006 | 2048MB | 6008 | 256-bit | 195W |
| GeForce GTX 670 | 1344 | 915 | 2048MB | 6008 | 256-bit | 170W |
| GeForce GTX 660 Ti | 1344 | 915 | 2048MB | 6008 | 192-bit | 150W |
| GeForce GTX 660 | 960 | 980 | 2048MB | 6000 | 192-bit | 140W |
| GeForce GTX 650 | 384 | 1058 | 1024MB | 5000 | 128-bit | 64W |
| GeForce GT 640 | 384 | 900 | 2048MB | 5000 | 128-bit | 65W |
| GeForce GT 630 | 96 | 810 | 1024MB | 3200 | 128-bit | 65W |
| GeForce GT 620 | 96 | 700 | 1024MB | 1800 | 64-bit | 49W |
| GeForce GT 610 | 48 | 810 | 1024MB | 1800 | 64-bit | 29W |
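
For anyone wanting to turn the Mem MHz and Mem Bus columns above into a single number, peak memory bandwidth can be estimated as the effective data rate multiplied by the bus width in bytes. The sketch below assumes the Mem MHz column is the effective (data-rate) figure rather than the physical clock; the function name and the selection of cards are just for illustration.

```python
def memory_bandwidth_gbs(effective_mhz: int, bus_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Assumption: effective_mhz is the effective data rate (millions of
    transfers per second), and each transfer moves bus_bits / 8 bytes.
    """
    bytes_per_transfer = bus_bits / 8
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9


# (Mem MHz, Mem Bus width in bits), taken from the table in this review
cards = {
    "GeForce GTX 670": (6008, 256),
    "GeForce GTX 660 Ti": (6008, 192),
    "GeForce GTX 660": (6000, 192),
    "GeForce GTX 650": (5000, 128),
}

bandwidth = {name: memory_bandwidth_gbs(*spec) for name, spec in cards.items()}

for name, gbs in bandwidth.items():
    print(f"{name}: {gbs:.1f} GB/s")
```

By this estimate the GTX 660 and GTX 660 Ti land at essentially the same memory bandwidth, since both pair roughly 6GHz effective memory with a 192-bit bus, while the GTX 670's 256-bit bus gives it a third more.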

Alongside the GTX 660, NVIDIA has also launched the GTX 650, although availability at this point is nil. Despite being briefed on both of these cards at the same time, I haven’t found a single review online of the GTX 650, so it’s to be assumed that NVIDIA isn’t rushing that product out too fast, and if I had to guess, it’s a card that exists only to finish off the sequential numbering system. Laugh all you want, but imagine the table above without the GTX 650. That’d look rather odd, wouldn’t it?

That aside, for NVIDIA to call it the GTX 650 is a bit of an insult to the GTX name. In no possible way does this GPU deserve it – GTX has traditionally represented cards that could more than handle games being run with lots of detail and at current resolutions. “GTX” is certainly suitable for the 660, but how did NVIDIA deem the 650 worthy when it slots just barely in front of the $100 GT 640? Maybe next we’ll see Porsche release a four-cylinder 911 Turbo S.

Rant aside, the vendor to provide us with a GTX 660 sample is GIGABYTE. Unfortunately, it’s an “OC Version”, which means we are unable to deliver baseline GTX 660 results (I am not keen on forcing turbo adjustments). Making matters a bit worse, the OC isn’t that minor. Memory remains the same, but the core gets +73MHz tacked on. An OC like this is great for consumers, but tough on reviewers who’d like to compare GPUs fairly.
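
To put that overclock in perspective, here is a quick calculation using only the figures quoted in this review: the 980MHz reference core clock from the spec table and the +73MHz offset. The variable names are illustrative, and the result says nothing about how the card's boost behaves in practice.

```python
REFERENCE_CORE_MHZ = 980  # reference GTX 660 core clock, per the spec table
OC_OFFSET_MHZ = 73        # factory overclock quoted for this GIGABYTE card

oc_core_mhz = REFERENCE_CORE_MHZ + OC_OFFSET_MHZ
oc_percent = OC_OFFSET_MHZ / REFERENCE_CORE_MHZ

print(f"OC core clock: {oc_core_mhz}MHz")
print(f"Increase over reference: {oc_percent:.1%}")
```

That works out to a 1053MHz core clock, a bump of a little over 7% relative to the reference clock, which is worth keeping in mind when reading the results that follow.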

We don’t normally include photos of a product box in our reviews, but something about the one with this GTX 660 struck me. It’s super-clean, simple, and nice to look at. I’m a sucker for blue and black.

With its Windforce cooler, GIGABYTE aims to greatly reduce the GTX 660’s temperatures vs. a reference cooler. At the same time, thanks to the larger fans, many heatpipes and finned design, the card should run a lot quieter as well.

For the sake of time, I didn’t tear the card apart for photos. But you can see both sides of the card below to gain an understanding of how the cooler is constructed.

We’ve been pleased with the Windforce cards we’ve taken a look at in the past, and this model looks to carry on that legacy. And with that, it’s time to tackle our test system, and then move right on into our testing.
