How Does Dual GPU Rendering Scale With NVIDIA’s RTX 3080 & Your Old GPU?

Have you ever wondered about the performance gains you could see by combining dissimilar GPUs? With the RTX 3080 having launched, we’re taking a look at how our rendering workloads are improved when the card is combined with each of the last-gen GeForce RTX GPUs – as well as the older GTX 1080 Ti, for good measure.

The launch of NVIDIA’s first Ampere-based GeForces has been eventful, to say the least. The fact that we can’t even mention the new cards without hearing “Yeah, cool – if you could buy them” is rather telling about the current state of things. It’s unfortunate, too, because these new GPUs are crazy fast, for either gaming or creation.

We can’t look back at the previous Turing generation and call it “weak”, but when it comes to rendering, the gains NVIDIA has delivered with Ampere are seriously impressive. It might mean that the last-gen cards are not as impressive as they seemed to be a couple of months ago, but those who are able to score one of the new cards will be enjoying some satisfying performance.

But… there’s always room for “moar power”, right? With this launch, we noticed a higher-than-usual number of people questioning whether or not they should keep their old GPU around to tag-team with their new RTX 3080 (or 3090) for an added rendering boost. We’re going to help answer that with this article, where we combine the RTX 3080 with each last-gen GeForce RTX card, as well as the Pascal-based GTX 1080 Ti for good measure.

To nip a question in the bud, we do not have a second RTX 3080 to do proper dual-GPU testing with. In time, this could change, but we’re just working with what we have for now. We do, however, have some results from RTX 3090 + RTX 3080 as a close-enough gauge of what to expect.

If you want to learn more about the new GeForce series, you can check out our previous coverage for creators, gamers, or read up on the architecture.

| Techgage Workstation Test System | |
| --- | --- |
| Processor | Intel Core i9-10980XE (18-core; 3.0GHz) |
| Motherboard | ASUS ROG STRIX X299-E GAMING |
| Memory | G.SKILL FlareX (F4-3200C14-8GFX) 4x8GB; DDR4-3200 14-14-14 |
| Graphics | NVIDIA RTX 3090 (24GB, GeForce 456.38)<br>NVIDIA RTX 3080 (10GB, GeForce 456.38)<br>NVIDIA TITAN RTX (24GB, GeForce 456.38)<br>NVIDIA GeForce RTX 2080 Ti (11GB, GeForce 456.38)<br>NVIDIA GeForce RTX 2080 SUPER (8GB, GeForce 456.38)<br>NVIDIA GeForce RTX 2070 SUPER (8GB, GeForce 456.38)<br>NVIDIA GeForce RTX 2060 SUPER (8GB, GeForce 456.38)<br>NVIDIA GeForce RTX 2060 (6GB, GeForce 456.38)<br>NVIDIA GeForce GTX 1080 Ti (11GB, GeForce 456.38) |
| Audio | Onboard |
| Storage | Kingston KC1000 960GB M.2 SSD |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | Corsair Carbide 600C Inverted Full-Tower |
| Cooling | NZXT Kraken X62 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 19041.329 (2004) |
| All product links in this table are affiliated, and help support our work. | |

All of the tests seen below were included in our original ProViz looks at Ampere, but one is missing: Arnold. In testing, we couldn’t get Arnold (inside of Maya) to use more than one GPU, even though the renderer is supposed to support it. If we render with the default state, only the first GPU will be touched; if we manually select both GPUs, the render ends up failing.

We’re not sure if Arnold requires two identical GPUs in order to work, but we doubt it. If we get to the bottom of this issue, we’ll include Arnold the next time we do this kind of content – because we don’t expect this article to be our last.

To help clarify the charts below (because things get real complicated later on), the ‘Other GPU’ refers to the GPU the RTX 3080 is paired with in the dual configuration. Some charts will include the single GPU result as a baseline. It’s important to focus on the single RTX 3080 results when comparing the dual GPU solutions, as you’ll see that sometimes, most of the performance will come from the RTX 3080 by itself. In addition, an SLI bridge was not used (nor is it required for our purposes), and the GPU scaling is purely down to the software.

So with that, let’s get started with Octane:

OTOY OctaneRender 2020

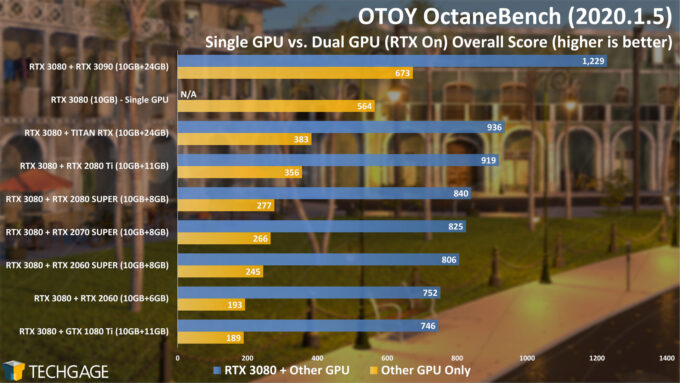

OctaneBench scales as well as we’d ever hope a benchmark would, to the point where it feels like a purely synthetic benchmark – but it does in fact represent real workloads (as we’ll see with our OctaneRender results below). With an RTX 3080 alone, the OctaneBench score is 564. Add a last-gen 2080 SUPER to the mix, and it rises 49% to 840.
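
For anyone who wants to sanity-check a percentage like that against their own scores, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function name `percent_gain` is made up for the example, and the two scores are the OctaneBench numbers quoted above.

```python
def percent_gain(single_score: float, combined_score: float) -> float:
    """Percentage increase of combined_score over single_score (higher is better)."""
    return (combined_score / single_score - 1.0) * 100.0


# OctaneBench scores quoted in the text above.
rtx_3080_alone = 564
rtx_3080_plus_2080_super = 840

gain = percent_gain(rtx_3080_alone, rtx_3080_plus_2080_super)
print(f"RTX 3080 + 2080 SUPER vs. RTX 3080 alone: +{gain:.0f}%")
```

Because OctaneBench reports a score rather than a render time, a bigger number is better, so the gain is simply the ratio of the two scores minus one.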

Perhaps an even more interesting comparison is with the RTX 3080 and GTX 1080 Ti. Despite that Pascal-based card getting up there in age now, combining it with the new RTX 3080 can increase rendering performance by about 32%. With two generations having come out since that card, it’s cool to see the GTX 1080 Ti adding so much here. But… there might be more to that than what immediately meets the eye.

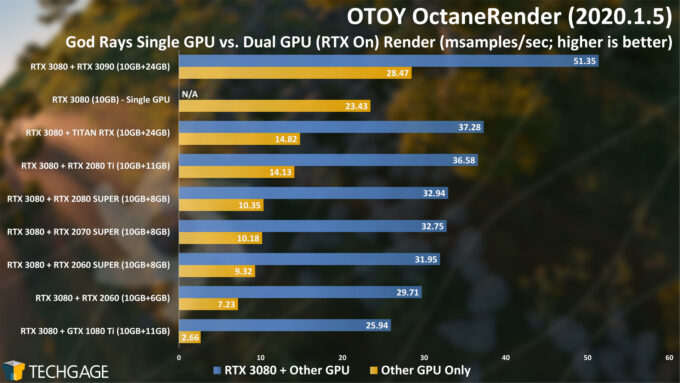

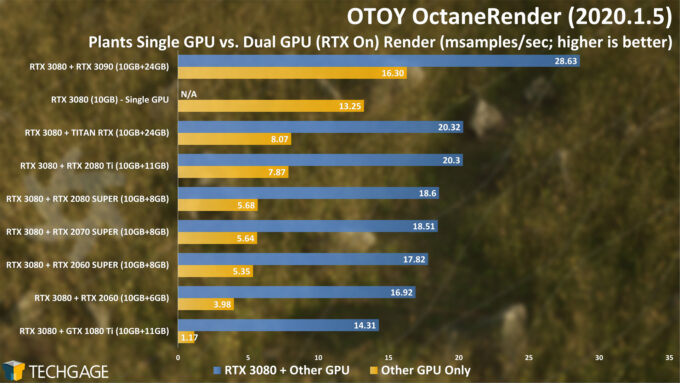

With our proper OctaneRender tests, we’re seeing slightly different gains around the collection of cards. Whereas 3080 + 2080S scored 49% higher in OctaneBench, both the God Rays and Plants projects in OctaneRender reflect closer to a 40% gain (which of course isn’t bad, just not as impressive).

On the GTX 1080 Ti front, the end results are far less impressive than they were in OctaneBench. Here, the combination of 3080 + 1080 Ti shows a 2% and 8% boost to rendering performance compared to the standalone RTX 3080. Note that if we were only testing without RTX acceleration, the gains for 1080 Ti would look more impressive, but when that acceleration is enabled, older hardware just can’t keep up.

RTX 2000-series offered a major boost to rendering performance itself, and again, RTX 3000 takes it to a new level, so it’s no surprise a two-gen-old card isn’t able to keep up as well. This does seem to prove that OctaneBench results can’t always be taken as gospel for dual-GPU mixed-generation configs, so it’s useful to have a second opinion.

Blender 2.90

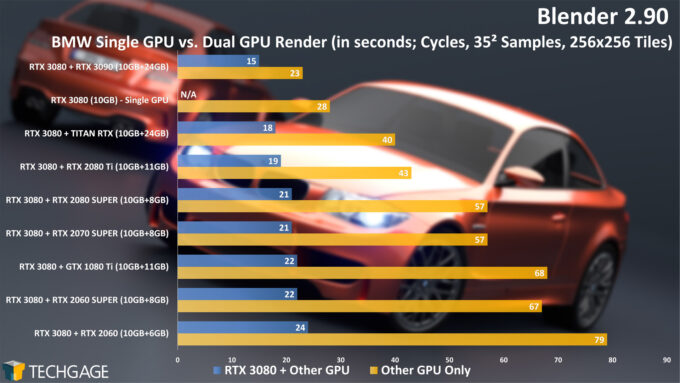

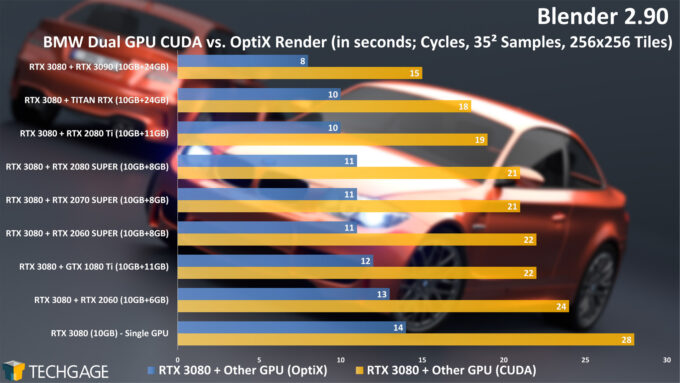

The BMW project isn’t the best showcase of Blender’s rendering capabilities, but it’s a classic test that every user of the ultra-popular open-source software seems to be familiar with. Here, the best-case scenario is that your last-gen card was a TITAN RTX, and in that case, the end render time will drop from 28 to 18 seconds. That’s a handsome gain, but we’re of course talking about last-gen’s top GPU.

If we again look at the RTX 2080 SUPER and RTX 3080 together, the render time will drop from 28 down to 21 seconds. If OptiX is enabled, the top-end last-gen 2080 Ti and TITAN RTX will hit the 10-second mark, whereas combining the RTX 3080 and 3090 will see sub-10-second renders, which is absolutely crazy. As a reminder, that configuration is only included because we didn’t have a second 3080 on-hand, but wanted to show a rough estimate of the overall improvements that can be seen when doubling up with Ampere.

OK, it’s time to take this performance testing to school:

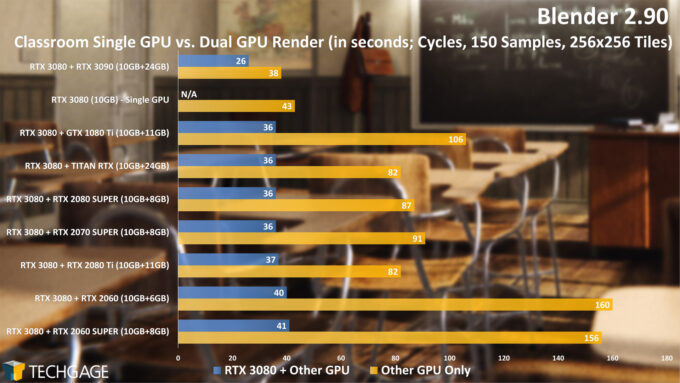

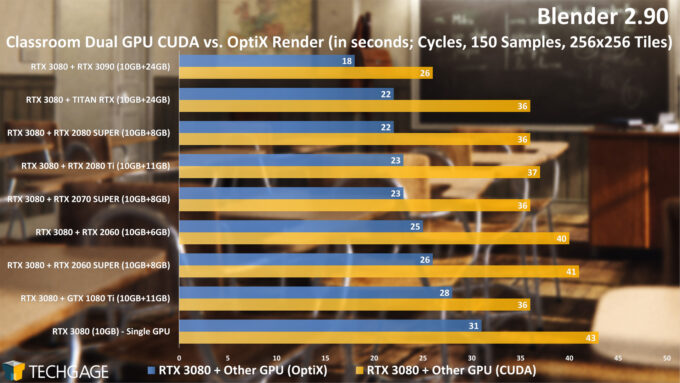

We’d expect the Classroom project to behave differently from the BMW one since it’s a lot more complex, but the scaling is still really interesting. With the older GTX 1080 Ti, the RTX 3080 tag-team can deliver top-rate combined performance, keeping up with the best of them – but the gains are not as impressive as they were with BMW, dropping only from 43 to 36 seconds.

The RTX 3080 + 3090 combination proves that two of these current-gen GPUs are going to offer the most impressive gains in performance. If OptiX is engaged, that 1080 Ti has less of an advantage, shaving just 3 seconds off of 31. Looking at the 2080S + 3080 match-up again, we see a drop from 31 to 22 seconds. That is definitely a lot more noticeable, and makes the extra power-draw more justifiable. However, overall we are seeing diminishing returns.
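
Since these are render times rather than scores, lower is better, and the drops are easier to compare when expressed as percentages. The snippet below is a minimal sketch of that conversion using the Classroom timings mentioned in the text; the helper name `time_reduction_pct` and the dictionary labels are our own, and the 28-second figure is simply 31 seconds minus the 3 seconds shaved off.

```python
def time_reduction_pct(before_s: float, after_s: float) -> float:
    """Percentage of render time removed when going from before_s to after_s."""
    return (before_s - after_s) / before_s * 100.0


# Classroom render times in seconds (single RTX 3080, then the dual-GPU pairing),
# as read from the paragraphs above.
classroom = {
    "CUDA: 3080 + 1080 Ti": (43, 36),
    "OptiX: 3080 + 1080 Ti": (31, 28),
    "OptiX: 3080 + 2080S": (31, 22),
}

for label, (before_s, after_s) in classroom.items():
    print(f"{label}: {time_reduction_pct(before_s, after_s):.1f}% less render time")
```

Seen this way, the 1080 Ti trims roughly a sixth off the CUDA render but less than a tenth off the OptiX one, while the 2080 SUPER removes close to 30% with OptiX.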

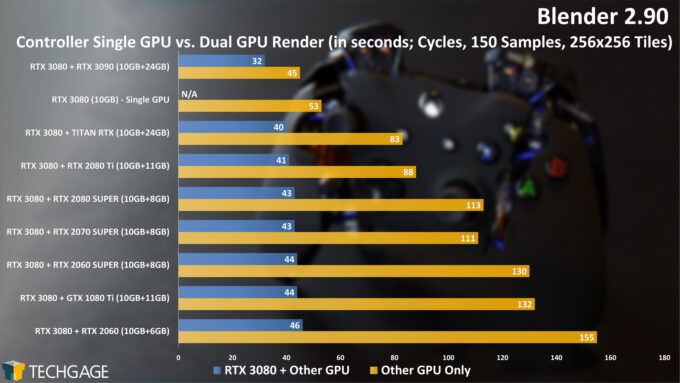

The Controller project utilizes some features that OptiX doesn’t yet support, so we can only do a CUDA render with it. As a third opinion, it shows gains from adding any second GPU to the mix, but without OptiX, we don’t really get the full picture of what’s possible. With CUDA only, the GTX 1080 Ti does manage to improve the rendering time quite well, but as the tight clustering of the results shows, the RTX 3080 is doing most of the heavy lifting in all of them, with the render time going from 53 seconds down to the 40-ish second range with every other GPU.

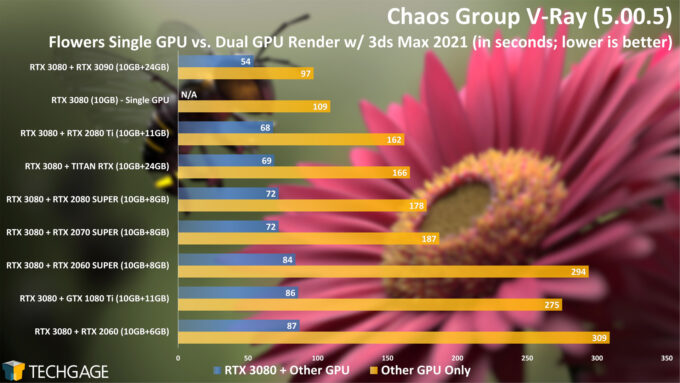

Chaos Group V-Ray 5

It looks like some sweet gains await those V-Ray users who plan to buy a new RTX 3080 and still make use of their last-gen card. By its lonesome, the RTX 3080 renders this project in 109 seconds, a time reduced to 87 seconds after a lowly RTX 2060 is added in. Combined with the last-gen 2080S, which also carried a $699 price tag, we see a drop from 109 to 72 seconds. For a single frame, that’s great; you can only imagine how much time that will end up saving over the long-run.
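
To put a rough number on that long-run saving, here is a small sketch that scales the per-frame times above to a whole animation. The 1,000-frame count is purely hypothetical, and the sketch assumes every frame takes as long as the benchmark frame, which real projects rarely do.

```python
def total_hours(seconds_per_frame: float, frames: int) -> float:
    """Total render time in hours, assuming every frame takes the same time."""
    return seconds_per_frame * frames / 3600.0


FRAMES = 1000  # hypothetical animation length, not a figure from our testing

# Per-frame V-Ray render times in seconds, as quoted above.
per_frame_s = {
    "RTX 3080": 109,
    "RTX 3080 + RTX 2060": 87,
    "RTX 3080 + RTX 2080S": 72,
}

baseline_h = total_hours(per_frame_s["RTX 3080"], FRAMES)
for config, seconds in per_frame_s.items():
    hours = total_hours(seconds, FRAMES)
    print(f"{config}: {hours:.1f} h total, {baseline_h - hours:.1f} h saved")
```

Under those assumptions, adding the 2080S would turn a roughly 30-hour job into a 20-hour one.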

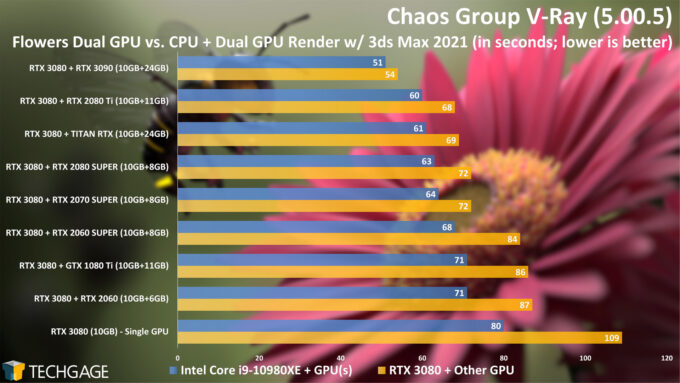

If you really want to punish your power outlet, you can also invite your CPU to the party:

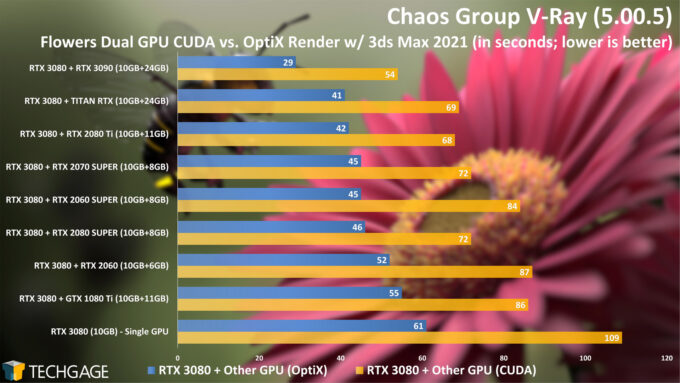

With these results, it’s hard to say if it’s even worth adding your CPU to the mix, because you must consider that we’re adding 18 cores to this process, and the gains are still kind of modest considering the amount of power draw it adds (we’ll talk more about that later). What will likely prove even more beneficial is simply enabling RTX acceleration:

Whereas the 3080 + 2080S + CPU combination posted a render time of 63 seconds, using OptiX on both of the GPUs instead of using the CPU will further drop the time to ~45 seconds. Interestingly, when OptiX is engaged, a whack of the combinations perform similarly, leading to the odd occasion where a slower combination will be a second faster or slower than a technically faster one, which is all part of natural variance in results.

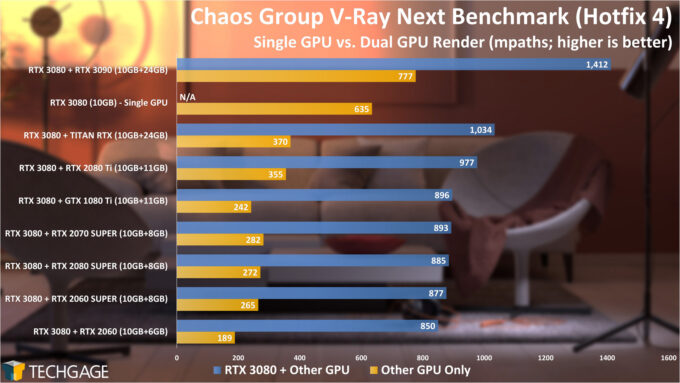

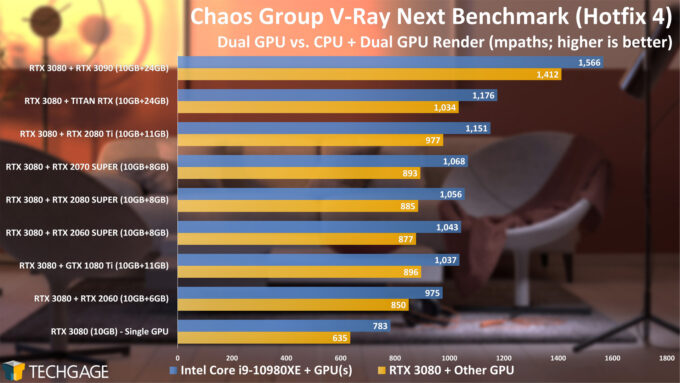

We can’t help but notice just how much of a lead is gained when using two Ampere cards together. While we’re using a 3090 as the second GPU here, the gains over a combination like 3080 + TITAN RTX are easy to see. While V-Ray’s standalone benchmark is based on the older 4.X variant, let’s use it as a second opinion:

This Ampere generation marks the first time we’ve been able to combine two GPUs together and breach the 1,000-point mark. That in itself is pretty impressive, but more than that is just the fact that everything scales so well together. This benchmark unfortunately lacks an OptiX implementation, but even without it, the generational gains with Ampere over Turing are really impressive to see.

Luxion KeyShot 9

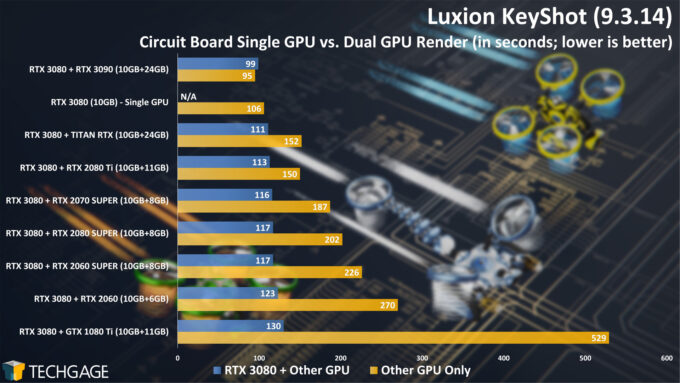

Aside from Arnold not utilizing our second GPU for some reason, KeyShot added the second anomaly to our testing. With the Circuit Board project, there wasn’t a single instance where using two GPUs proved to be quicker than sticking with a single RTX 3080. When combined with an RTX 3090, the render time somehow got even worse (albeit slightly).

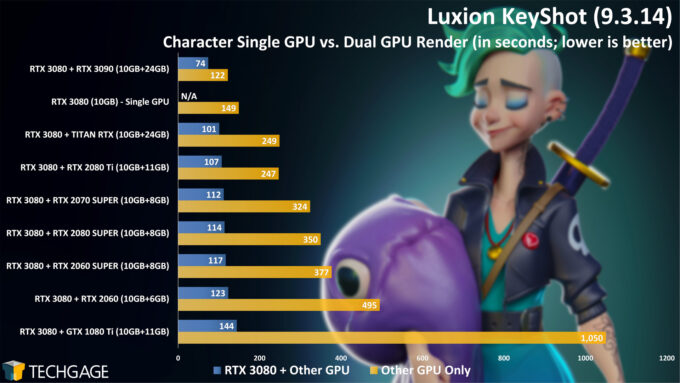

Luckily, things were far different for the Character project, with healthy gains in performance seen across most configurations. RTX 3080 + 2060S doesn’t exactly improve things much, but 3080 + 2080S can result in a render time drop from 149 to 114 seconds. This again shows that the RTX 3080 is doing most of the heavy lifting here when paired with last-gen GPUs, with only the 3090 cutting times in half compared to the single RTX 3080.

Unless we’re blind, KeyShot doesn’t offer a way to choose which GPU to use, which is unfortunate, and something we’d suspect will change in time. KeyShot fully supports running multiple clients at once (even with the same project) so that you can render one side with CPU, and the other with GPU. It’d be nice to expand that to allow multiple GPUs to be used for each open client.

We’re not sure when KeyShot 10 is going to drop, but we expect it to be sometime this fall, so we look forward to revisiting this kind of testing and seeing if anything has improved with overall scaling across multiple projects.

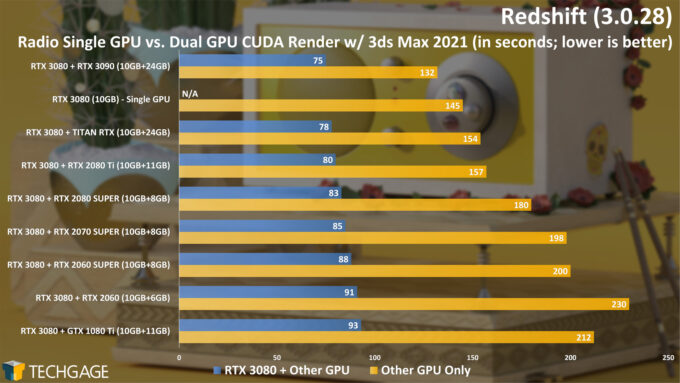

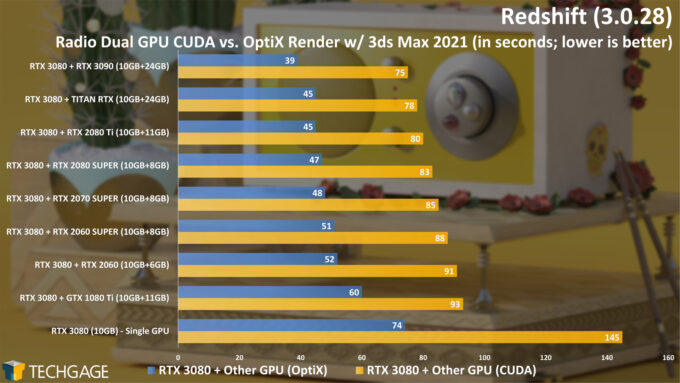

Maxon Redshift 3

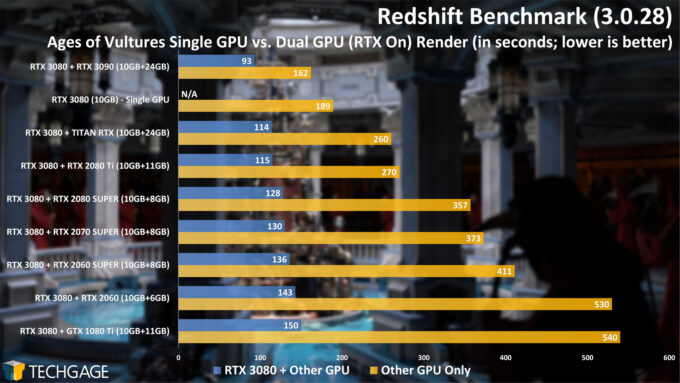

Using Redshift’s built-in benchmark, we’re seeing some excellent results out of, well… every single configuration in the list. Even the 1080 Ti + RTX 3080 drops the standalone RTX 3080 result from 189 to 150 seconds. If we go back to our classic RTX 3080 + 2080S match-up, we see a drop from 189 to 128 seconds. We might not see perfect scaling here, but it’s great scaling, nonetheless. It seems totally justified for anyone to keep their last-gen GPU to combine with their new RTX 3080 for Redshift.
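
One rough way to judge how much a second card is really contributing is to treat render speed as 1 / time and assume the two cards’ speeds simply add up. That is a simplification rather than how Redshift actually schedules work, and the helper name `second_gpu_share` is ours, but it gives a feel for the scaling:

```python
def second_gpu_share(single_s: float, dual_s: float) -> float:
    """Throughput the second GPU adds, as a fraction of the first GPU's own.

    Assumes render rate is 1 / time and that the two cards' rates simply add,
    so an identical second card with perfect scaling would return 1.0.
    """
    return single_s / dual_s - 1.0


RTX_3080_ALONE_S = 189  # Redshift benchmark time quoted above, in seconds

# Dual-GPU times quoted above, keyed by the card paired with the RTX 3080.
pairings = {"GTX 1080 Ti": 150, "RTX 2080 SUPER": 128}

for other_gpu, dual_s in pairings.items():
    share = second_gpu_share(RTX_3080_ALONE_S, dual_s)
    print(f"{other_gpu}: adds ~{share:.0%} of an RTX 3080's throughput")
```

By that yardstick, the GTX 1080 Ti behaves like about a quarter of an extra RTX 3080 in this benchmark, and the 2080 SUPER like a little under half of one.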

Now, let’s see how that compares to our real-world result:

With only CUDA being used, the GTX 1080 Ti gets to strut its strengths a bit better than in some of the other tests, and once again, we’re seeing each combination deliver impressive gains over using only a single RTX 3080. Let’s add some OptiX acceleration to the mix:

With the CUDA-only result, we see 75 seconds being the fastest result, made possible by combining an RTX 3080 with 3090. If we enable OptiX, that time drops to 39 seconds. It’s important to note the true differences between CUDA and OptiX here. With OptiX, the RTX 3080 by itself rendered this scene in 74 seconds, which beats out every single dual-GPU configuration in the CUDA chart (besting the RTX 3090 combination by one second).

OptiX definitely takes things to a new level here. If you were to move from a single GTX 1080 Ti to RTX 3080 + 1080 Ti with OptiX, you’d effectively drop your render time from 212 to 60 seconds. Note that the GTX 1080 Ti doesn’t have the ability to accelerate ray tracing in hardware since it lacks dedicated RT cores, so that device would fall back to CUDA, and let the RTX 3080 do most of the legwork.

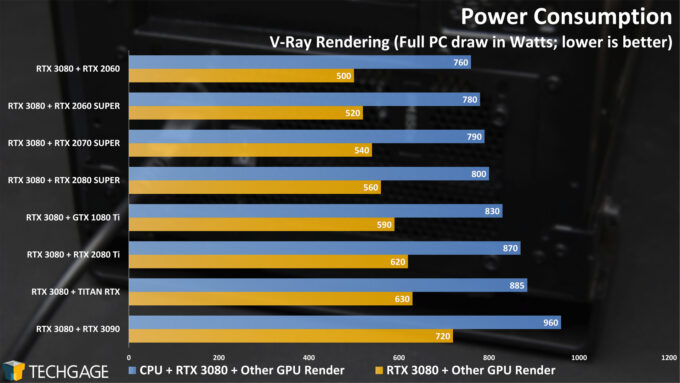

Power Consumption

We used Blender for our power tests in both our RTX 3080 and RTX 3090 launch reviews, but because V-Ray handles adding a CPU to the dual-GPU configuration a bit better, we’ve opted to use it instead. Note that we don’t necessarily recommend CPU+GPU+GPU over GPU+GPU w/OptiX, but the goal here was to find a worst-case scenario. OptiX will eventually better support CPU usage, so those results could become more relevant in time.

Nonetheless, it’s immediately obvious that if you pair up two GPUs in your rig, you are going to want a beefy power supply. When combining the RTX 3080 with RTX 2060, the peak wattage hovers around 500W, which is pretty modest, all things considered. At the top-end, an RTX 3080 combined with 3090 will see the rig peak at around 720W. We’d assume dual RTX 3080 would land around 700W, which means you’d want an 800W PSU as the bare minimum.

If we add a big CPU into the mix, the power draw can increase significantly, with our RTX 3080 + 2080S + CPU configuration peaking at 800W. That seems like a lot of power draw for a single workstation nowadays, but we’re talking about an obscene amount of performance on offer as a result.

You should always give yourself some breathing room when it comes to your power supply. If your configuration peaks at 700W, and your PSU is 800W, that doesn’t exactly give you a ton of wiggle room. If you add some SSDs and HDDs, you’ll inch closer to that 800W mark, and if you decide to add a CPU into the process, you may very well go over your limit.
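
As a rough illustration of that breathing room, the sketch below works out how much headroom a given PSU leaves over a measured peak, and what rating you would need to keep a chosen margin. The 20% margin is an arbitrary example value rather than a recommendation from our testing, and the function names are made up for the sketch; the peak figures are the system-level numbers quoted above.

```python
def psu_headroom_pct(psu_watts: float, peak_watts: float) -> float:
    """Share of the PSU's rated capacity left unused at peak draw, in percent."""
    return (psu_watts - peak_watts) / psu_watts * 100.0


def min_psu_watts(peak_watts: float, headroom_fraction: float = 0.2) -> float:
    """Smallest PSU rating that keeps headroom_fraction of capacity free at peak.

    The 0.2 default is an illustrative assumption, not a measured or official value.
    """
    return peak_watts / (1.0 - headroom_fraction)


# Peak system draw in watts, as quoted in this section.
peaks = {
    "RTX 3080 + RTX 2060": 500,
    "RTX 3080 + RTX 3090": 720,
    "RTX 3080 + RTX 2080S + CPU": 800,
}

print(f"800W PSU at a 700W peak: {psu_headroom_pct(800, 700):.1f}% headroom")
for config, peak in peaks.items():
    print(f"{config}: ~{min_psu_watts(peak):.0f}W PSU for 20% headroom")
```

Swap in your own peak wattage and preferred margin to see where your current PSU lands.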

Final Thoughts

After poring over all of that data, it’s clear that there are some sweet gains to be had if you do stick with your last-gen GPU to combine its forces with NVIDIA’s latest hotness. As with most things ProViz, though, some workloads are going to benefit from certain configurations more than others, so hopefully this breakdown of results will help you better figure out which route you should take.

In some cases, sticking with an older GPU might not seem as worth it if the render times are not improved too much, but the power draw is increased another couple of hundred watts. You will have to figure out whether you’re going with the right balance, or if you’d be better off selling off your old card to help fund the new one, simply because the gains are not too great. Or, if you have a really involved workflow that would benefit from dedicating the older GPU to a rendering task on a second screen, that’s also an option.

It really does seem, though, that most scenarios will favor having a second GPU. We encountered an oddity with Arnold not touching our second GPU, so we’d recommend holding off on eyeing any dual-GPU configuration with that solution until you know for certain it will scale for you. We’ve been in touch with Autodesk over this, so we hope to get to the bottom of our issue soon.

Naturally, you will want to make sure your dual-GPU configuration is going to be supported by your power supply. If you’re rocking a modest 600W PSU, the dual-GPU route might not be the best one to take until you swap it out for something beefier. While we hit 500W with RTX 2060 + RTX 3080, adding a CPU to that mix will easily peg the power near 600W. Add HDDs, SSDs, and other peripherals to the mix, and it’s easy to see 600W not being sufficient.

We should also note that while we’ve focused on rendering here, there will be other scenarios where multiple GPUs can be utilized just fine, especially if the workload has to do with compute. If your primary focus is video editing, you’re not likely to benefit much from a second GPU, unless the solution you’re using explicitly denotes support for it (and even then, it normally takes a special case to actually benefit from more than one GPU). As always, know your workload.

If you have any other questions, please comment below. In the meantime, we’re still wrapping up gaming testing with the RTX 3090, but decided to churn through this article first since too many games have been updated and need to be retested with our stack. With the RTX 3070 due in a few weeks, we’ll be preparing to deliver more ProViz content around that, as well. As always, stay tuned for more.
