
A Quick Look At The High Bandwidth Cache Controller On AMD’s Radeon RX Vega

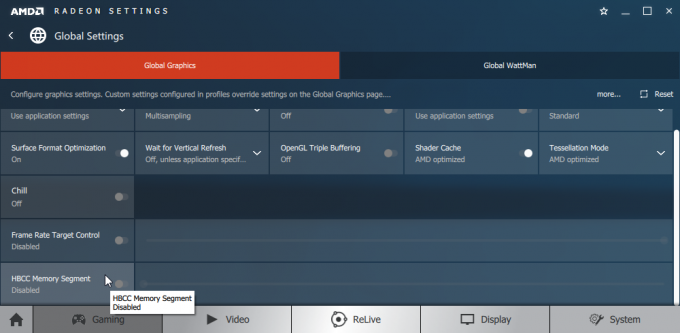

AMD’s Vega GPU architecture brings many notable features to the table, but the one that has won over Radeon chief Raja Koduri is HBCC, or “high-bandwidth cache controller”. In this article, we’re going to take a look at what HBCC is, why it offers no benefit right now, and what it could offer in the future.

After spending quite a number of hours testing AMD’s Radeon RX Vega in both gaming and compute workloads, I wondered if there was some other interesting angle from which to look at the card. Then I remembered a little feature called “HBCC”, a major addition to RX Vega that I had put on the back burner until this weekend.

At its Capsaicin & Cream event held alongside GDC in February, AMD made a big fuss about RX Vega’s HBCC (High-Bandwidth Cache Controller), promising huge benefits to gaming and professional performance in certain scenarios. At its RX Vega editor’s day a couple of weeks ago, Radeon chief Raja Koduri called it his favorite feature of the new architecture.

“If it’s so amazing, why is it disabled by default in the driver?”, I asked myself. AMD has made it clear that this is a forward-thinking technology, one that will enable grand environments in our future games due to a huge boost in data transfer efficiency. But… I remembered seeing some sort of gaming benchmark in the past, and lo and behold, it was at that aforementioned Capsaicin & Cream event.

During his presentation (available on YouTube), Raja touted potential gains of +50% to average framerates, and a staggering +100% to minimum framerates, in games. In the demo he gave, Deus Ex: Mankind Divided was run on a 4GB RX Vega, giving us a good example of how HBCC could help with big games on more modest hardware.

Of course, AMD doesn’t currently sell any 4GB RX Vega hardware, and I’m not sure when we’ll see any, considering that HBM2 is too expensive for a midrange card. Even so, I felt compelled to see if I could find a single worthwhile test result from using HBCC, so, despite knowing I’d probably be testing in vain, I decided to trek on.

But first, what is HBCC?

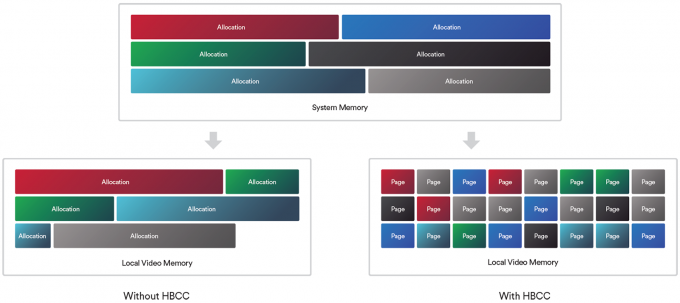

In a nutshell, HBCC is, as its “high-bandwidth cache controller” name gives away, a complement to the framebuffer (or “high-bandwidth cache”, as AMD now calls it) that treats the VRAM as a last-level cache, and a portion of system memory as VRAM. If the GPU requests a resource that isn’t currently in video memory, the memory pages holding that data are pulled into Vega’s HBC (framebuffer) for quicker access, while unused pages are flushed out.
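The fetch-on-miss, evict-when-full behavior described above resembles an ordinary page cache. Here’s a toy model of it, assuming least-recently-used (LRU) eviction; AMD hasn’t said which policy HBCC actually uses, so the `PageCache` class and its policy are hypothetical and purely illustrative. Each miss stands in for a page pulled across PCIe from system memory.

```python
from collections import OrderedDict


class PageCache:
    """Toy model of a GPU-local page cache backed by system memory.

    Assumption: LRU eviction. This is NOT AMD's documented HBCC policy.
    """

    def __init__(self, capacity_pages: int) -> None:
        self.capacity = capacity_pages
        self.resident = OrderedDict()  # page id -> None, oldest first ("in VRAM")
        self.hits = 0
        self.misses = 0  # each miss = one page fetched from system RAM over PCIe

    def access(self, page: int) -> bool:
        """Touch a page; return True on a hit, False if it had to be fetched."""
        if page in self.resident:
            self.resident.move_to_end(page)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(self.resident) >= self.capacity:
            self.resident.popitem(last=False)  # evict the least recently used page
        self.resident[page] = None
        return False


if __name__ == "__main__":
    cache = PageCache(capacity_pages=4)
    for p in [0, 1, 2, 3, 0, 1, 4, 0, 5]:
        cache.access(p)
    print(cache.hits, cache.misses, list(cache.resident))  # 3 6 [1, 4, 0, 5]
```

Note that page 2 is evicted before page 0, because page 0 was touched again more recently; that’s the “unused pages are flushed out” idea in miniature.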

In time, we could see application developers use this mechanism to maximize GPU efficiency in huge workloads. The memory pool that Radeon Software creates is called the “HBCC Memory Segment” (HMS), and on our 32GB test PC, the minimum value that could be selected was ~11GB (the maximum was ~24GB).

In the future, this technology could let us enjoy massive worlds without spending thousands of dollars on more video memory. That said, there are caveats with this kind of design, an obvious one being the added latency of the data fetch: if the GPU needs data that currently sits in system RAM, it has to travel across the PCIe bus before the GPU can use it.
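To put the PCIe caveat in rough numbers, here’s a back-of-the-envelope sketch comparing theoretical peak bandwidths. The figures are assumptions rather than measurements from this article: about 484 GB/s for RX Vega 64’s HBM2, and about 15.75 GB/s per direction for a PCIe 3.0 x16 link (8 GT/s per lane, 128b/130b encoding). Real transfers are slower, and latency matters as well as bandwidth, so treat these as best-case times. The 256MB payload is a hypothetical example.

```python
# Theoretical peak bandwidths in GB/s (1 GB = 1e9 bytes). Assumed figures; real links are slower.
HBM2_VEGA64_GBPS = 483.8                    # RX Vega 64 local HBM2
PCIE3_X16_GBPS = 8 * 16 * (128 / 130) / 8   # 8 GT/s x 16 lanes, 128b/130b encoding, bits -> bytes


def transfer_ms(size_mb: float, bandwidth_gbps: float) -> float:
    """Milliseconds to move size_mb megabytes (1 MB = 1e6 bytes) at bandwidth_gbps."""
    return size_mb * 1e6 / (bandwidth_gbps * 1e9) * 1e3


if __name__ == "__main__":
    size = 256  # hypothetical chunk of data the GPU suddenly needs
    print(f"From HBM2: {transfer_ms(size, HBM2_VEGA64_GBPS):.2f} ms")
    print(f"Over PCIe: {transfer_ms(size, PCIE3_X16_GBPS):.2f} ms")
    print(f"Frame budget at 60 FPS: {1000 / 60:.2f} ms")
```

Under these assumptions, pulling 256MB over PCIe takes about 16ms, nearly an entire 60 FPS frame, versus roughly half a millisecond from local HBM2. That gap is why how smartly HBCC decides which pages to keep resident matters so much.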

HBCC is designed to be intelligent in how it manages the data, although at this point, it’s not entirely clear how data is handled today, or how it will be handled in the future. AMD talked at length about game developers who were in love with the idea of HBCC, so it could be that those same developers will have their own ideas on how to maximize the returns of HBCC.

If it were possible to use a BIOS that disabled one of RX Vega’s two HBM2 stacks, this feature could probably be tested more meaningfully. Currently, only a memory-starved card is going to show a benefit from HBCC, and for most users, memory is not going to be the limitation.

| Resolution Comparisons | Pixels | % of 1080p | % of 4K |
|---|---|---|---|
| 1080p (1920×1080) | 2,073,600 | 100% | 25% |
| 1440p (2560×1440) | 3,686,400 | 177% | 44% |
| 4K (3840×2160) | 8,294,400 | 400% | 100% |
| 5K (5120×2880) | 14,745,600 | 711% | 177% |
| 8K (7680×4320) | 33,177,600 | 1600% | 400% |

Example: 1440p has 177% the number of pixels of 1080p.
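The pixel counts and percentages in the resolution table follow directly from each resolution’s width × height. This short sketch regenerates them; integer division truncates, which matches how the table rounds down (1440p is about 177.8% of 1080p, listed as 177%). The dictionary and function names are just illustrative.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "5K": (5120, 2880),
    "8K": (7680, 4320),
}


def pixel_count(name: str) -> int:
    """Total pixels for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def percent_of(name: str, base: str) -> int:
    """Pixels of `name` as a whole percentage of `base`, rounded down like the table."""
    return pixel_count(name) * 100 // pixel_count(base)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name:>5}  {pixel_count(name):>10,}  "
              f"{percent_of(name, '1080p'):>5}%  {percent_of(name, '4K'):>4}%")
```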

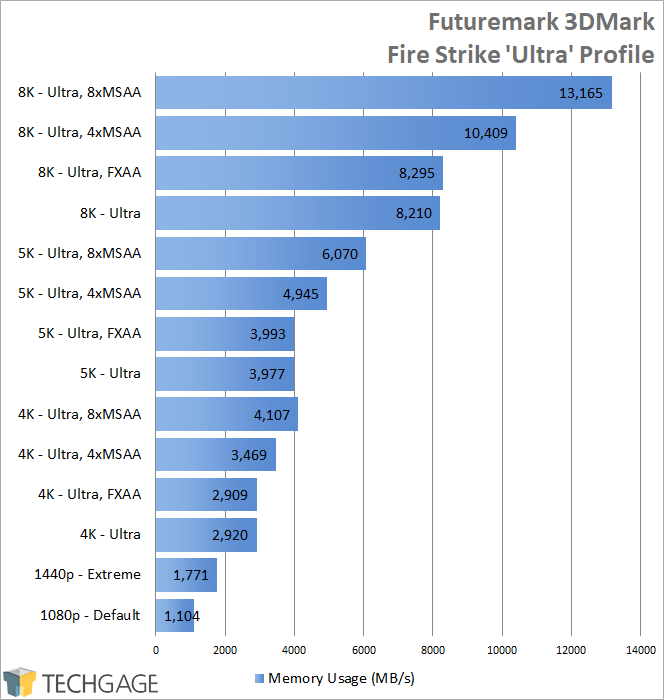

A couple of years ago, I used Futuremark’s 3DMark to test VRAM scaling at different resolutions. At that time, the test card was a 12GB Maxwell-based TITAN X, and only the absolute top-end configuration managed to max out its framebuffer. I suspected that with a framebuffer larger than 12GB, we’d see an even higher peak. Remembering that I had a 24GB Quadro P6000 on hand, I put it in the test PC, re-ran the most demanding tests to verify the old results, and found a new peak at the top end: 13.1GB of VRAM used during 8K 8xMSAA Fire Strike testing.

It should be said that this kind of testing is unrealistic. The fact that I had to run an already strenuous test at 8K resolution (four times the pixels of 4K), and pile on an absurd (in today’s age) anti-aliasing mode to breach the 12GB mark, really says something. This is just one test, though, and there are surely games coming within the next couple of years that will begin to make an 8GB framebuffer seem small. Watch Dogs 2 is a good current-gen example of a title known for using a lot of VRAM, although NVIDIA has said in the past that 5K resolution (77% more pixels than 4K) would be required to take advantage of the TITAN Xp’s 12GB framebuffer.

Crashing AMD’s HBCC Caching Party

I hope I’ve made my doubts about seeing any improvement from HBCC today clear. The RX Vega 64 GPU is neither starved for memory, nor can it somehow pull forward the datasets of the future. But, as a quick sanity check, I ran a number of timedemos three times over, and chose the highest reported values across the three runs:

| HBCC Testing @ 1440p | Minimum FPS | Average FPS |
|---|---|---|
| Deus Ex: Mankind Divided (Off) | 61 | 76 |
| Deus Ex: Mankind Divided (On) | 58 | 74 |
| Ghost Recon: Wildlands (Off) | 51 | 64 |
| Ghost Recon: Wildlands (On) | 53 | 65 |
| Metro Last Light Redux (Off) | 42 | 69 |
| Metro Last Light Redux (On) | 40 | 69 |
| Rise of the Tomb Raider (Off) | 60 | 70 |
| Rise of the Tomb Raider (On) | 60 | 69 |

| HBCC Testing @ 4K | Minimum FPS | Average FPS |
|---|---|---|
| Deus Ex: Mankind Divided (Off) | 29 | 37 |
| Deus Ex: Mankind Divided (On) | 31 | 37 |
| Ghost Recon: Wildlands (Off) | 28 | 37 |
| Ghost Recon: Wildlands (On) | 28 | 38 |
| Metro Last Light Redux (Off) | 20 | 31 |
| Metro Last Light Redux (On) | 21 | 32 |
| Rise of the Tomb Raider (Off) | 33 | 38 |
| Rise of the Tomb Raider (On) | 32 | 38 |
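To put the on/off differences in perspective, this sketch computes the percentage change for every row of the two HBCC tables. It’s plain arithmetic on the published numbers, not a statistical test: only the best of three runs was recorded, so run-to-run spread can’t be measured from this data. The 4K percentages look larger only because the baseline framerates are lower; in absolute terms, no result moves by more than 3 FPS.

```python
# Best-of-three results from the HBCC tables: (minimum FPS, average FPS).
RESULTS = {
    "1440p": {
        "Deus Ex: Mankind Divided": {"off": (61, 76), "on": (58, 74)},
        "Ghost Recon: Wildlands": {"off": (51, 64), "on": (53, 65)},
        "Metro Last Light Redux": {"off": (42, 69), "on": (40, 69)},
        "Rise of the Tomb Raider": {"off": (60, 70), "on": (60, 69)},
    },
    "4K": {
        "Deus Ex: Mankind Divided": {"off": (29, 37), "on": (31, 37)},
        "Ghost Recon: Wildlands": {"off": (28, 37), "on": (28, 38)},
        "Metro Last Light Redux": {"off": (20, 31), "on": (21, 32)},
        "Rise of the Tomb Raider": {"off": (33, 38), "on": (32, 38)},
    },
}


def pct_change(off: float, on: float) -> float:
    """Percentage change going from HBCC off to HBCC on."""
    return (on - off) / off * 100


def deltas():
    """Yield (resolution, game, min % change, avg % change) for every row."""
    for res, games in RESULTS.items():
        for game, runs in games.items():
            (off_min, off_avg), (on_min, on_avg) = runs["off"], runs["on"]
            yield res, game, pct_change(off_min, on_min), pct_change(off_avg, on_avg)


if __name__ == "__main__":
    for res, game, d_min, d_avg in deltas():
        print(f"{res:>5}  {game:<25} min {d_min:+5.1f}%  avg {d_avg:+5.1f}%")
```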

It’s clear that the differences seen in these benchmarks are down to normal run-to-run variance, rather than being a testament to how HBCC is used. There’s not a single noteworthy result above, but I didn’t want to give up. Using the same 3DMark Fire Strike test from the VRAM scaling tests, and knowing that its peak setting could exceed Vega 64’s 8GB framebuffer (its high-bandwidth cache), I got some simple benchmarking underway.

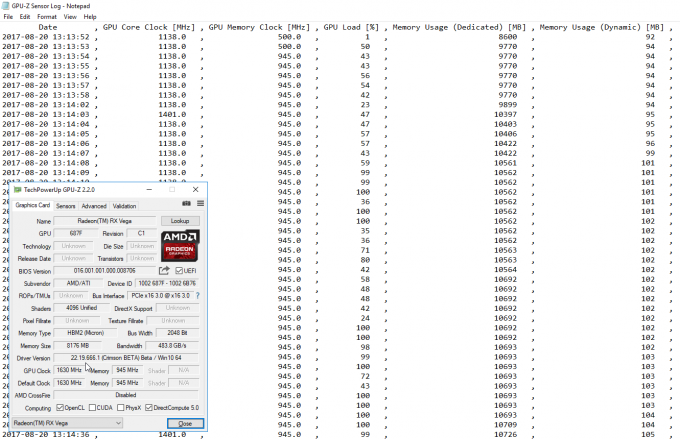

Before explaining the results, note that when you enable HBCC, Radeon Software lets you choose how much memory to allocate to the HBCC Memory Segment. On our 32GB test PC, the slider started at 11GB, so that’s the value used for testing.

| Futuremark 3DMark 8K Testing | HBCC Off | HBCC On |
|---|---|---|
| GPU Score (0xMSAA) | 1,403 | 1,419 |
| Graphics Test 1 (0xMSAA) | 7.88 FPS | 7.85 FPS |
| Graphics Test 2 (0xMSAA) | 4.98 FPS | 5.09 FPS |
| Peak VRAM (0xMSAA) | 8,287 MB | 8,118 MB |
| Peak VRAM (4xMSAA) | 8,117 MB | 10,773 MB |
| Peak DRAM (0xMSAA) | 3,309 MB | 3,534 MB |
| Peak DRAM (4xMSAA) | 9,689 MB | 6,602 MB |

This is a simple table, but there’s a lot of explaining to do. While I was able to provide benchmark results for 8K 0xMSAA testing, I couldn’t for 4xMSAA, because the results were so erratic (ranging from 0.20 to 5 FPS). With current games and benchmarks, pushing a lot of VRAM is genuinely difficult.

With or without HBCC, Vega 64 peaked at 8GB of VRAM used with anti-aliasing disabled. With HBCC enabled and 4xMSAA applied, we can see that HBCC does have to step in, with GPU-Z reporting close to 11GB of memory used (10,773 MB in the table above), even though the GPU has only 8GB of physical VRAM.

The story doesn’t end there, though. With HBCC on, system RAM usage rose by about 3GB going from 0xMSAA to 4xMSAA, which makes sense, since reported VRAM usage rose by a similar amount. What’s peculiar is the HBCC Off result at 4xMSAA: system memory usage nearly tripled, to 9,689 MB, even though VRAM usage stayed at about 8GB. Somehow, general system memory became flooded with extra data as a byproduct of the GPU being absolutely inundated with work.
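The arithmetic behind that comparison, taken straight from the 3DMark table: with HBCC on, moving from 0xMSAA to 4xMSAA adds roughly 2.7GB of VRAM and 3.1GB of DRAM, while with HBCC off, VRAM stays essentially flat and DRAM grows by about 6.4GB. The names below are just illustrative labels for the table’s rows.

```python
# Peak memory usage in MB from the 3DMark 8K table: (VRAM, system DRAM).
PEAKS = {
    ("off", "0xMSAA"): (8287, 3309),
    ("on", "0xMSAA"): (8118, 3534),
    ("off", "4xMSAA"): (8117, 9689),
    ("on", "4xMSAA"): (10773, 6602),
}


def msaa_growth(hbcc: str) -> tuple[int, int]:
    """Change in peak (VRAM, DRAM), in MB, when going from 0xMSAA to 4xMSAA."""
    vram_0x, dram_0x = PEAKS[(hbcc, "0xMSAA")]
    vram_4x, dram_4x = PEAKS[(hbcc, "4xMSAA")]
    return vram_4x - vram_0x, dram_4x - dram_0x


if __name__ == "__main__":
    for mode in ("off", "on"):
        d_vram, d_dram = msaa_growth(mode)
        print(f"HBCC {mode}: VRAM {d_vram:+,} MB, DRAM {d_dram:+,} MB")
    # HBCC off: VRAM -170 MB, DRAM +6,380 MB
    # HBCC on: VRAM +2,655 MB, DRAM +3,068 MB
```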

And that’s the kind of oddity you can expect with such specific, bleeding-edge and hardware-punishing testing. 3DMark didn’t always run reliably through these repeated benchmarks, which doesn’t really matter, as this particular use case doesn’t exist in real-world gaming.

Final Thoughts

It’s too bad that, at this point in time, the feature Raja Koduri himself calls his “favorite” in Vega can’t be exercised properly to generate real-world test results. As mentioned earlier, HBCC really is a forward-thinking technology, one that could prove very important down the road as game content becomes richer and professional workloads become even hungrier for memory.

Speaking of professional workloads, I hadn’t touched on them until this point, but they’re another potential beneficiary of HBCC. Ultimately, HBCC tries to push unused data to the background to keep your game or work running as smoothly as you need it to.

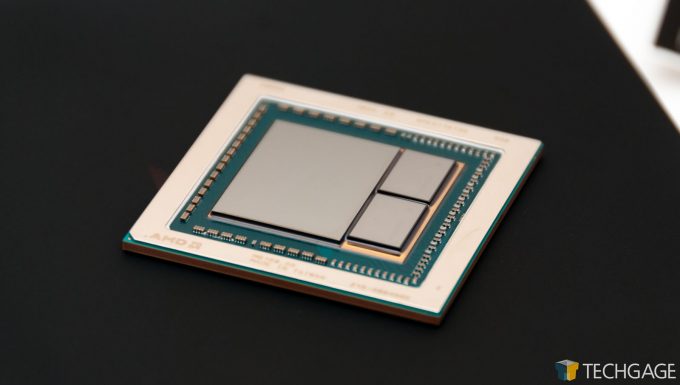

It is worth pointing out that HBCC is the building block for AMD’s Radeon Pro SSG, the “solid state graphics” card that was announced alongside the other Radeon Pro cards. The SSG has a 2TB SSD bolted onto a Vega-based Radeon Pro WX 9100, and uses HBCC to process huge datasets, such as when debayering 8K video. It’s this kind of workload that HBCC was likely built for, rather than gaming; the fact that the feature is enabled on RX Vega is more likely a side-effect of the shared architecture.

It’s going to take a while before we can really appreciate what HBCC can offer. We’re still exploring other tests and workloads, namely rendering and Premiere, to see if HBCC has something to offer the workstation market, at least on these gaming cards. RX Vega already has impressive compute performance, so perhaps there is more to eke out.

It’s worth reiterating that these are preliminary results, and HBCC may not even be fully functional at this time. It’s a feature for the long haul that won’t see major use for a while, so stay tuned as we revisit it at a later date.
