Exploring Performance With Autodesk’s Arnold Renderer GPU Beta

Rendering complex scenes has long been done using the CPU, but many modern renderers have either introduced GPU support, or been built entirely around it. In the case of Arnold, it’s been CPU-bound for most of its life, with the first GPU-supported version having hit the streets just a couple of weeks ago. Join us as we take an initial performance look at the renderer, in both single and multi-GPU configurations.

Until just a couple of weeks ago, Autodesk’s Arnold Renderer had been designed entirely around rendering to the CPU, and as we’ve seen in previous performance reports, it scales well across the stack. With the latest release of Arnold, though, support for the GPU has been introduced, allowing designers to (potentially) render faster using their highly parallel graphics device.

Before we move too far in, we want to note that our initial results weren’t entirely what we expected, so our findings should not be treated as gospel unless others run into the same anomalies. Despite how popular Arnold is, we could find little user feedback around the web to compare against, so if you’ve given Arnold GPU a go, we’d love to hear from you in the comments.

If you’d like to jump aboard the Arnold GPU train, we’d recommend reading through a couple of pages first: the release notes for the 3.2 release, and “Getting Started With Arnold GPU”. Here are some important things to bear in mind:

- Arnold GPU (currently) uses only Camera AA sampling.

- Best performance will be seen after using the ‘Pre-populate GPU Cache’ utility.

- NVIDIA GeForce/Quadro driver 419.67+ required.

- There is no AMD Radeon support with Arnold GPU.

- To “match noise” vs. CPU render, use (at least):

  - Camera (AA): 3~4

  - Max Camera (AA): 30~50

  - Adaptive Threshold: 0.015~0.02

- All textures need to fit into VRAM.

- There is no hybrid rendering (CPU+GPU) option.
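As a rough illustration of how the checklist above could be applied before kicking off a render, here is a minimal Python sketch. It does not touch Arnold's API at all: `GpuRenderSettings` and `preflight` are hypothetical names made up for this example, the texture sizes are values you would have to gather yourself, and treating the adaptive threshold as an in-range check is our own reading of the suggestion. It also ignores geometry and other memory use, so a pass here is no guarantee that a scene fits.

```python
from dataclasses import dataclass
from typing import List

# Suggested ranges from the list above for matching CPU noise levels.
SUGGESTED_CAMERA_AA = (3, 4)
SUGGESTED_MAX_CAMERA_AA = (30, 50)
SUGGESTED_ADAPTIVE_THRESHOLD = (0.015, 0.02)


@dataclass
class GpuRenderSettings:
    """Hypothetical container for the three values; not an Arnold type."""
    camera_aa: int
    max_camera_aa: int
    adaptive_threshold: float


def preflight(settings: GpuRenderSettings,
              texture_sizes_mb: List[float],
              vram_mb: float) -> List[str]:
    """Return human-readable warnings; an empty list means nothing was flagged."""
    warnings = []

    # "At least" is read here as: sample counts should not fall below the range.
    if settings.camera_aa < SUGGESTED_CAMERA_AA[0]:
        warnings.append("Camera (AA) is below the suggested 3~4")
    if settings.max_camera_aa < SUGGESTED_MAX_CAMERA_AA[0]:
        warnings.append("Max Camera (AA) is below the suggested 30~50")

    # Assumption: the threshold is simply checked against the suggested range.
    low, high = SUGGESTED_ADAPTIVE_THRESHOLD
    if not (low <= settings.adaptive_threshold <= high):
        warnings.append("Adaptive Threshold is outside the suggested 0.015~0.02")

    # All textures need to fit into VRAM (simplified: textures only).
    total_mb = sum(texture_sizes_mb)
    if total_mb > vram_mb:
        warnings.append(
            f"Textures need {total_mb:.0f} MB but the card has {vram_mb:.0f} MB"
        )
    return warnings


if __name__ == "__main__":
    settings = GpuRenderSettings(camera_aa=3, max_camera_aa=30, adaptive_threshold=0.015)
    # Hypothetical texture sizes checked against a 6GB card.
    for line in preflight(settings, [2048.0, 3072.0, 1536.0], 6 * 1024):
        print("WARNING:", line)
```

With the hypothetical values shown, only the texture check trips, since the three textures add up to more than the 6GB assumed for the card.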

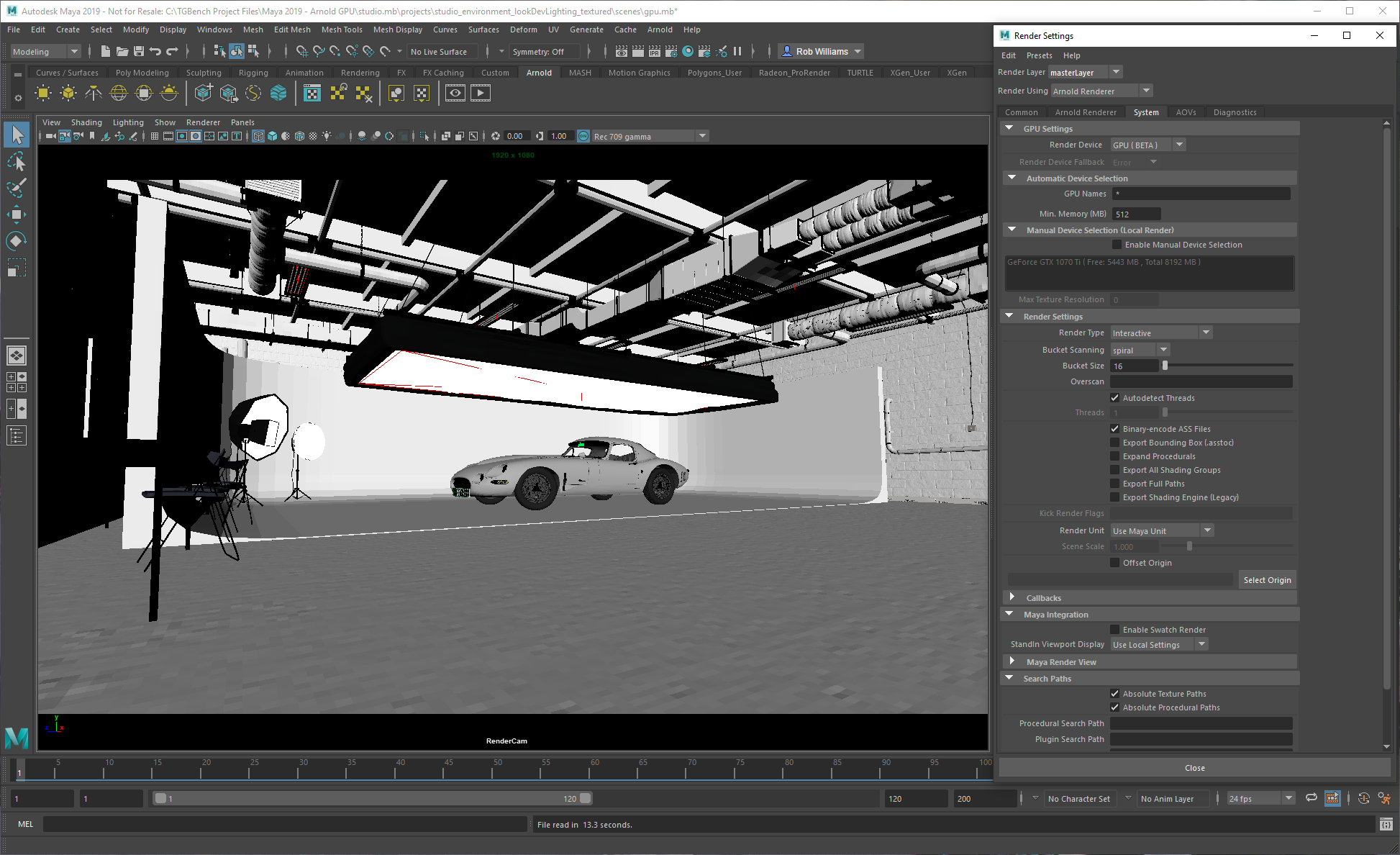

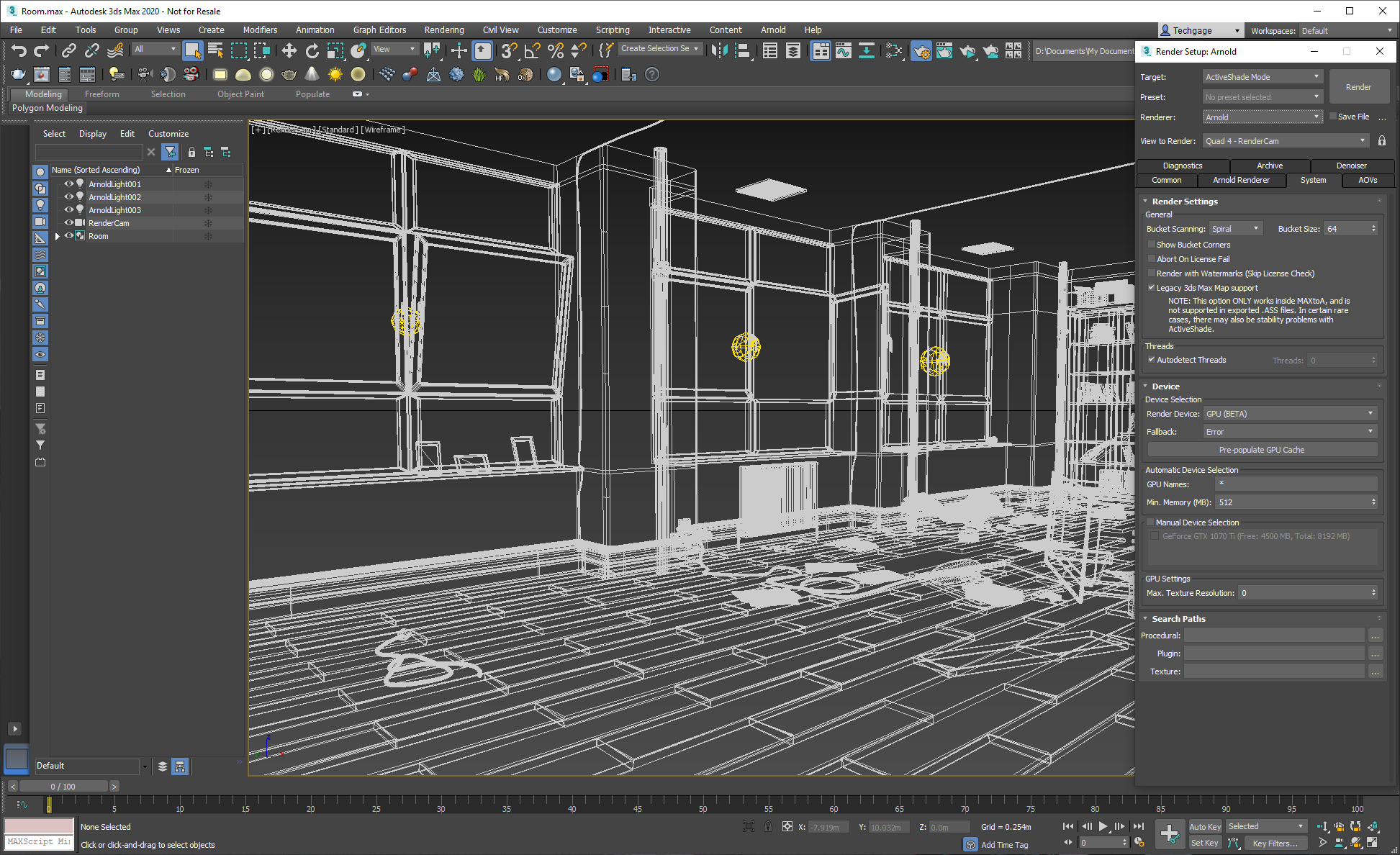

In some renderers, switching between the CPU and GPU brings a change to the UI, since the available options may differ between them. While some options are disabled with Arnold GPU for the time being, the overall UI thankfully remains the same (at least in Maya and 3ds Max).

The main thing to note is that when selecting the GPU as the rendering device, Arnold will disable the sliders for every sampling type aside from Camera (AA). That doesn’t mean that basic features like subsurface scattering are disabled; they just can’t be controlled through their own sliders. Autodesk has noted that more support will be released over time.

In the bullet list above, settings are suggested for obtaining similar noise results from the GPU as the CPU. We originally wanted to compare performance between CPU and GPU directly, but due to some key differences, such as certain sampling sliders being disabled, we couldn’t get what we felt to be accurate apples-to-apples results. Once the GPU renderer evolves, those comparisons might become easier. Our overall impressions so far definitely give the nod to GPUs.

We’ve found that it’s generally easy to get great results with the GPU renderer, although whether or not you want to give Arnold GPU a try today will depend on your willingness to beta test, and your hardware. Currently, Autodesk does not recommend the GPU renderer for production use, but since it comes bundled with the latest version of the CPU plugin, there’s no harm in switching over and running a test render to see how you fare.

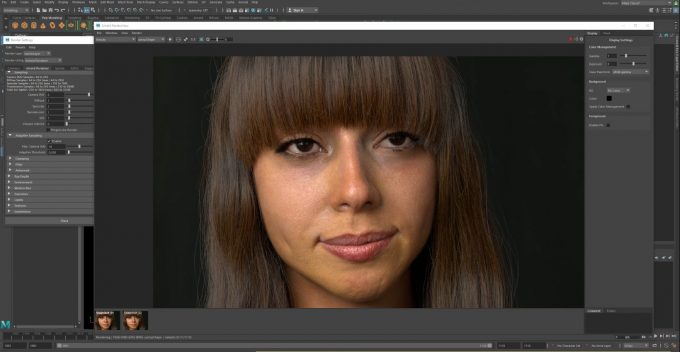

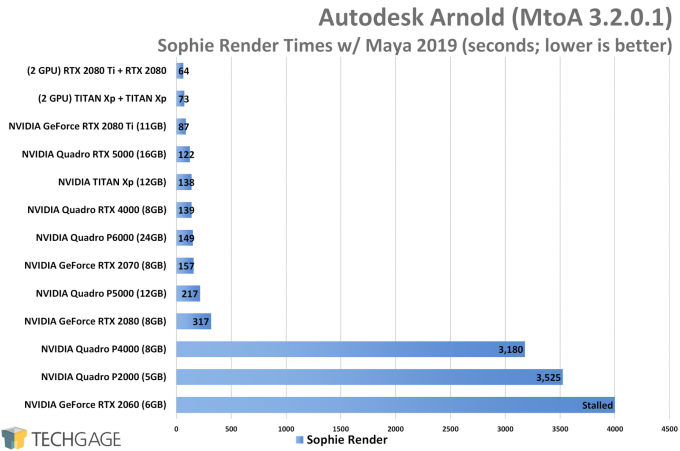

For all of our testing, we used two projects provided to us by Autodesk. One scene is simple, a girl named Sophie wielding a colorful umbrella, while the other is a studio shot of a Jaguar E-Type (or close). The car scene is complex for lighting, and will take a while on either the CPU or GPU to reach acceptable noise levels.

Performance seen from either CPU or GPU rendering with Arnold will vary depending on how fast a given processor is. If you have a weak graphics card paired with a strong processor, then it would make sense to focus on CPU rendering. Likewise, a smaller CPU will still allow a big GPU to do good work. At the moment, Arnold does not offer a heterogeneous rendering option, but it does allow you to render to multiple (compatible) GPUs.

Speaking of rendering, here are some full-sized CPU and GPU renders of the two tested scenes:

Please note that the level of noise seen in the images isn’t reflective of whether one device performed better than another, as we haven’t done proper comparisons there (it will make more sense to dig deeper further down the development line). In this case, our settings in the CPU save of the scene caused the render to take a lot longer than the GPU render, but the quality settings could likely be adjusted to make for a fairer comparison. For now, we just want to analyze the focus of this renderer: the GPU.

While there are some minor differences between the scenes, the fact that both the CPU and GPU renders look so strikingly similar is impressive. Consider that Arnold GPU has only just entered beta, and Autodesk promises to improve parity between both renderers as time goes on. We hope hybrid rendering will make an appearance at some point. We’d assume that it’s not included yet because Arnold GPU is built around CUDA, whereas Arnold CPU isn’t, but since CUDA is single-source, Autodesk could technically allow heterogeneous rendering through NVIDIA’s API with an update, matching V-Ray’s design. But, that’s all being said as a non-developer who has no insight into Arnold’s internal design.

Performance Testing

We’ve talked a lot so far without any mention of performance, so let’s get a move on. Below is a look at our workstation test PC, which includes all of the tested GPUs and their driver versions. Because Arnold GPU is based around CUDA, non-NVIDIA GPUs are locked out. Arnold’s previous owner, Solid Angle, showed off AMD GPU support at SIGGRAPH in 2014, so hopefully we’ll see Radeon support made possible again through OpenCL or Vulkan in the future.

| Techgage Workstation Test System | |
| --- | --- |
| Processor | Intel Core i9-9980XE (18-core; 3.0GHz) |
| Motherboard | ASUS ROG STRIX X299-E GAMING |
| Memory | G.SKILL Flare X (F4-3200C14-8GFX) 4x8GB; DDR4-3200 14-14-14 |
| Graphics | NVIDIA TITAN Xp (12GB; Creator Ready 419.67)<br>NVIDIA GeForce RTX 2080 Ti (11GB; Creator Ready 419.67)<br>NVIDIA GeForce RTX 2080 (8GB; Creator Ready 419.67)<br>NVIDIA GeForce RTX 2070 (8GB; Creator Ready 419.67)<br>NVIDIA GeForce RTX 2060 (6GB; Creator Ready 419.67)<br>NVIDIA Quadro RTX 5000 (16GB; Quadro 419.67)<br>NVIDIA Quadro RTX 4000 (8GB; Quadro 419.67)<br>NVIDIA Quadro P6000 (24GB; Quadro 419.67)<br>NVIDIA Quadro P5000 (16GB; Quadro 419.67)<br>NVIDIA Quadro P4000 (8GB; Quadro 419.67)<br>NVIDIA Quadro P2000 (5GB; Quadro 419.67) |
| Audio | Onboard |
| Storage | Kingston KC1000 960GB M.2 SSD |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | Corsair Carbide 600C Inverted Full-Tower |
| Cooling | NZXT Kraken X62 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 17763 (1809) |

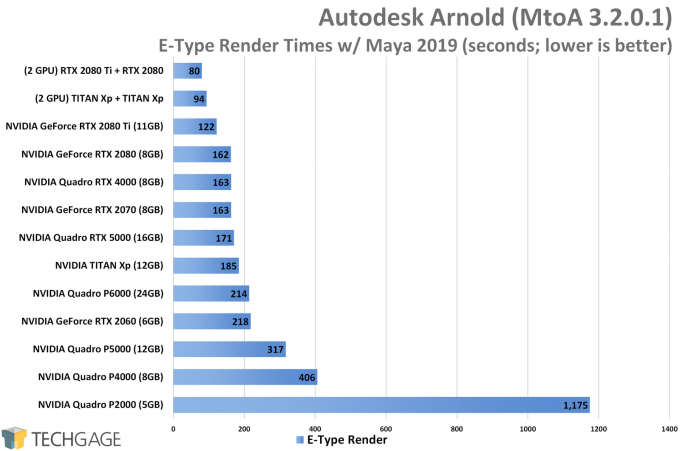

Let’s begin our quick performance look with the car photo shoot scene:

With these first results, we can see some good scaling from the bottom to top, with NVIDIA’s Turing-based graphics cards showing some serious strengths. Turing’s perks (possibly concurrent INT/FP) allow a mid-range card like the RTX 2060 to perform just as well as the last-gen Pascal-based Quadro P6000 (ignoring framebuffer limitations on the smaller card).

The performance boosts seen with this generation of NVIDIA cards ensure that the top-end models perform really well. The RTX 2080 Ti, in particular, exhibits extremely strong performance; you’d think there was supposed to be another GPU in between it and the RTX 2080.

For serious rendering work, a small GPU is going to hold you back. The P2000 performs poorly here, with the somewhat modest jump in hardware to the P4000 making a tremendous difference in overall render time. Even the gap between the P4000 and P5000 is nothing like what we see from the bottom two cards.

But this lineup is not without its oddities, the most notable being that the Quadro RTX 4000 performed better than the Quadro RTX 5000, despite there being quite a significant jump in computational performance between the two.

Those who are able to combine multiple GPUs of the same type will enjoy huge speed increases. Unfortunately, we found that you can’t mix-and-match current- and last-gen cards, as only the primary card will be used (if the render doesn’t kick back an error). We tested this with both the RTX 2080 Ti + Quadro P6000, and Quadro RTX 4000 + Quadro P4000. GPUs of the same architecture will mesh together well, with identically spec’d cards delivering 100% GPU usage on average, and dissimilar cards (e.g. RTX 2080 Ti + RTX 2080) delivering 97~99% GPU utilization on average.
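GPU utilization tells you the cards are busy, but not how much wall-clock time a second card actually saves. If you want to quantify that on your own hardware, a small Python sketch like the one below works; `multi_gpu_scaling` is a hypothetical helper name, and the timings in the usage example are invented placeholders, not results from our testing.

```python
from typing import Tuple


def multi_gpu_scaling(single_gpu_seconds: float,
                      multi_gpu_seconds: float,
                      gpu_count: int) -> Tuple[float, float]:
    """Return (speedup, efficiency) for a multi-GPU render.

    speedup    = single-GPU time / multi-GPU time
    efficiency = speedup / number of GPUs (1.0 means perfect scaling)
    """
    if single_gpu_seconds <= 0 or multi_gpu_seconds <= 0:
        raise ValueError("render times must be positive")
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")

    speedup = single_gpu_seconds / multi_gpu_seconds
    efficiency = speedup / gpu_count
    return speedup, efficiency


if __name__ == "__main__":
    # Placeholder timings for illustration only.
    speedup, efficiency = multi_gpu_scaling(600.0, 310.0, 2)
    print(f"{speedup:.2f}x speedup, {efficiency:.0%} scaling efficiency")
```

This assumes both runs use identical scene settings and the same card model, so the single-GPU time is a fair baseline.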

Looking at both rendered scenes, it should be easy to tell that the car is going to require more computation than Sophie, due to all the extra light sources, and shadows in the ceiling. However, the Sophie scene seems to hate certain specific GPUs. Right at the bottom of the stack, the RTX 2060 couldn’t render this scene in any reasonable time; had we let it run to completion, it might have taken a couple of days. And yes, the RTX 2070 really did outperform the RTX 2080, and yes… we reinstalled each GPU to retest, only to find the same results.

The bottom two Quadros had a seriously rough time with this scene, while everything else that could be classified as “high-end” survived the punishment just fine, ignoring the RTX 2080. At first, we thought the limited framebuffers on some cards could cause issues with this scene, defying what we see when looking at it, but the P4000 is no more limited in framebuffer than the RTX 2080, RTX 2070, and RTX 4000, yet those cards handled themselves just fine.
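The framebuffer comparison above can be checked directly against the test system table. The Python sketch below copies the VRAM sizes from that table into a dictionary and lists the cards that match a reference card; `cards_with_same_vram` is a hypothetical helper name used only for this illustration.

```python
from typing import Dict, List

# VRAM sizes in GB, copied from the test system table.
VRAM_GB: Dict[str, int] = {
    "TITAN Xp": 12,
    "GeForce RTX 2080 Ti": 11,
    "GeForce RTX 2080": 8,
    "GeForce RTX 2070": 8,
    "GeForce RTX 2060": 6,
    "Quadro RTX 5000": 16,
    "Quadro RTX 4000": 8,
    "Quadro P6000": 24,
    "Quadro P5000": 16,
    "Quadro P4000": 8,
    "Quadro P2000": 5,
}


def cards_with_same_vram(reference: str) -> List[str]:
    """List the other tested cards whose VRAM matches the reference card."""
    size = VRAM_GB[reference]
    return sorted(
        name for name, gb in VRAM_GB.items()
        if gb == size and name != reference
    )


if __name__ == "__main__":
    print(cards_with_same_vram("Quadro P4000"))
```

Three Turing cards share the P4000's 8GB, which is why framebuffer size alone doesn't explain the slow renders.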

Odd results aside, one thing is clear: NVIDIA’s Turing-based graphics cards provide a huge performance advantage over the last-gen Pascals. That’s something users planning to upgrade would hope to see, so it’s nice to see such a notable uptick.

Final (For Now) Thoughts

Our first impressions of Arnold GPU from a performance perspective are good, although based on some of our test results, there is clearly some work to be done to ensure a solid experience for everyone. We can’t begin to figure out why some render times didn’t scale as expected, but this isn’t the first time we’ve seen something like it. The RTX 5000 falling behind the RTX 4000 in the E-Type render scene reminds us of a last-gen oddity which saw the P5000 falling behind the P4000 in LuxMark.

Autodesk deserves a high-five for its multi-GPU implementation. For the first release of a new renderer, we didn’t really expect to see such solid scaling, but overall, the renderer handled our similarly matched configurations without a problem.

While the Arnold GPU feature set isn’t 1:1 with Arnold CPU, Autodesk has committed to bringing both renderers as close to parity as possible in the future. Ultimately, the company would love it if a render looked the same on both CPU and GPU, and it goes without saying that artists would appreciate that also. For us, being performance fiends, we’d love to see hybrid rendering support added in the future. As it stands now, both the CPU and GPU Arnold renderers perform well, so it’d be great to see the efforts of both processors combined for even faster overall performance.

More in time…
