Blender 2.83: Best CPUs & GPUs For Rendering & Viewport

To greet the launch of the Blender 2.83 release, we loaded up our test rigs to generate fresh performance numbers. For rendering, we’re going to pore over CPU, GPU, CPU and GPU, and NVIDIA’s OptiX. For good measure, we’ll also look at viewport frame rates, and the impact of tile sizes with Cycles GPU rendering.

Page 2 – Tile Size Considerations, Viewport Performance & OptiX Denoising

Throughout Cycles’ life, there have been general rules for choosing the right tile size for rendering, at least as a starting point. For CPU, 16×16 or 32×32 has been suggested, as it’s not going to choke the entire chip by doling out too much work at once. GPUs are more capable of large parallel projects, so 256×256 has been the go-to, although 512×512 and higher have been possible in recent years.

The tile size dictates how big of a batch of work a CPU or GPU will take on at once. In truth, the defaults that are set are almost assuredly going to be suitable, but if you’re desperate for more performance, it might be worth tweaking the size a bit and running your own quick tests. For a while now, this tweaking has been less important, something that our initial tests showed, as changing tile sizes often didn’t result in dramatic time differences between renders. However, there are a few occasions where it does, and it’s a trap we fell into in this round of testing.
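To put rough numbers on that batch size, here is a small sketch that counts how many tiles a single frame splits into at each size. The 1920×1080 frame is an assumption for illustration only, and `tile_count` is a hypothetical helper, not part of Blender.

```python
import math

def tile_count(width, height, tile):
    """Number of square tiles needed to cover a width x height frame."""
    # Partial tiles at the right and bottom edges still count as tiles.
    return math.ceil(width / tile) * math.ceil(height / tile)

# Assumed frame size, for illustration only.
WIDTH, HEIGHT = 1920, 1080

for tile in (8, 16, 32, 64, 128, 256, 512, 1024):
    print(f"{tile}x{tile}: {tile_count(WIDTH, HEIGHT, tile)} tiles")
```

At the assumed resolution, 8×8 works out to 32,400 tiles against just 40 at 256×256, which gives a feel for how much per-tile overhead could pile up at very small sizes.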

We’ve taken a look at tile size performance in the past, but made the mistake of testing only one vendor’s GPU, ignorant of the fact that behavior differs between them. So, for the performance below, we loaded up the Classroom scene and tested tile sizes from 8×8 to 1024×1024 on both the RTX 2060 SUPER and RX 5700 XT – two $400 GPUs.

| Tile Size | AMD RX 5700 XT | NVIDIA RTX 2060S |
| --- | --- | --- |
| 8×8 | 23m 10s | 5m 30s |
| 16×16 | 7m 00s | 4m 40s |
| 32×32 | 2m 47s | 4m 31s |
| 64×64 | 1m 44s | 4m 22s |
| 128×128 | 1m 36s | 4m 23s |
| 256×256 | 1m 35s | 4m 29s |
| 512×512 | 1m 36s | 4m 39s |
| 1024×1024 | 1m 36s | 5m 05s |

Note: Classroom project used.

There are just a handful of results here, but there’s still a lot to chomp on. On Radeon, it looks as though choosing too small a tile size will absolutely ruin your performance. But then, after a certain point, the performance doesn’t change much at all, at least up to 1024×1024. We also tested 1536×1536, and on both vendors, the performance got just a bit worse compared to 1024×1024.
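To make those gaps easier to compare, the sketch below converts the times from the table above into seconds and expresses each one relative to that GPU's best result. The helper names `to_seconds` and `slowdowns` are hypothetical, chosen only for this example.

```python
# Render times copied from the table above: tile size -> (AMD, NVIDIA).
RESULTS = {
    8: ("23m 10s", "5m 30s"),
    16: ("7m 00s", "4m 40s"),
    32: ("2m 47s", "4m 31s"),
    64: ("1m 44s", "4m 22s"),
    128: ("1m 36s", "4m 23s"),
    256: ("1m 35s", "4m 29s"),
    512: ("1m 36s", "4m 39s"),
    1024: ("1m 36s", "5m 05s"),
}

def to_seconds(text):
    """Convert a string like '23m 10s' into total seconds."""
    minutes, seconds = text.split()
    return int(minutes.rstrip("m")) * 60 + int(seconds.rstrip("s"))

def slowdowns(column):
    """Map tile size -> render time relative to that GPU's best time."""
    times = {tile: to_seconds(row[column]) for tile, row in RESULTS.items()}
    best = min(times.values())
    return {tile: t / best for tile, t in times.items()}

amd = slowdowns(0)
nvidia = slowdowns(1)
for tile in RESULTS:
    print(f"{tile:>4}x{tile:<4}  AMD {amd[tile]:5.2f}x  NVIDIA {nvidia[tile]:5.2f}x")
```

Viewed this way, the 8×8 Radeon run takes roughly 14.6 times as long as its best result, while the RTX 2060 SUPER never strays more than about 26% from its best time.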

Ultimately, 256×256 worked best for AMD with this particular project and on this particular GPU, which is great, since that’s what all of our GPU tests are locked in at. NVIDIA’s 2060 SUPER was a smidgen slower at 256×256 than at its optimal 64×64. A difference of a few seconds per frame can still matter a lot if we’re talking about animation, so again, you’ll want to do your own testing if you want to lock in the perfect tile size. While we stuck to multiples of eight in our testing here, you can choose any size you want. It does need to be stressed that different projects will behave differently with alternative tile sizes, and mixed render modes that use the CPU and GPU together will need a smaller tile size for the CPU to work efficiently.
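For anyone wanting to run that kind of test themselves, here is a rough sketch that builds one headless Blender command per tile size. It assumes a `blender` executable on the PATH, a placeholder `classroom.blend` path, and the `tile_x`/`tile_y` render settings exposed by the Python API in Blender 2.8x. The function name `tile_sweep_commands` is hypothetical, and this is not the harness behind the numbers above.

```python
import shlex

def tile_sweep_commands(blend_file, tile_sizes, frame=1, blender="blender"):
    """Build one headless Blender render command per tile size."""
    commands = []
    for size in tile_sizes:
        # Set the tile size from Python before the render is triggered.
        expr = (
            "import bpy; r = bpy.context.scene.render; "
            f"r.tile_x = {size}; r.tile_y = {size}"
        )
        commands.append([
            blender,
            "--background", blend_file,    # load the file without a UI
            "--python-expr", expr,         # evaluated before the render argument
            "--render-frame", str(frame),  # render a single frame
        ])
    return commands

# 'classroom.blend' is a placeholder path used only for this example.
for cmd in tile_sweep_commands("classroom.blend", [64, 128, 256]):
    print(shlex.join(cmd))
```

Each command could then be handed to `subprocess.run` and timed with `time.perf_counter`. The render device still comes from the scene and user preferences, which this sketch does not touch.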

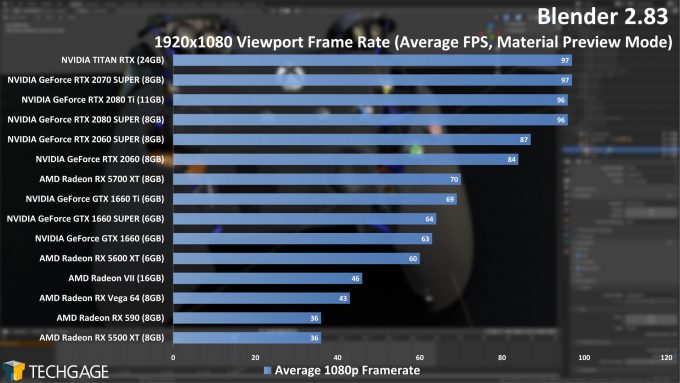

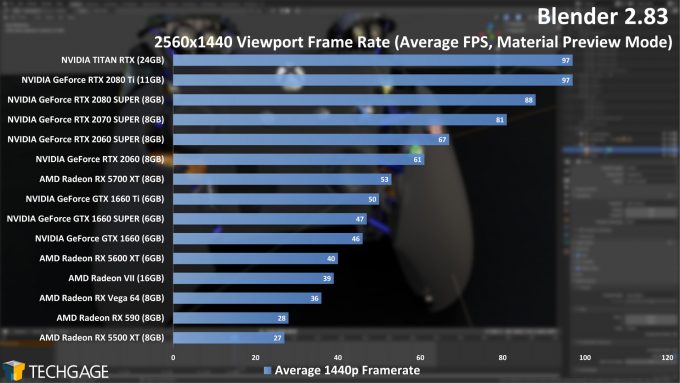

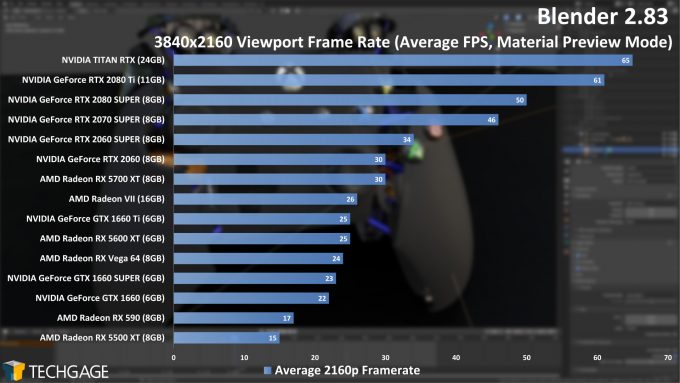

Viewport Performance

In our look at Blender 2.80 last summer, our viewport test involved a complex scene – almost too complex, as the results didn’t bode well for any GPU at 4K. This time around, we decided to swap out our old test for the new Controller scene, as it looks great, and won’t make low-end GPUs croak.

The results above reflect the Material Preview (LookDev) mode, which is somewhat of a stop-gap between a real render and one that’s “good enough”. You can’t realistically roam around a rendered scene, but once the shaders are compiled, you can easily do that with Material Preview – even during animation playback.

We’ve seen NVIDIA show off its strengths well with our viewport tests in the past, and even with this different project, nothing changes. What we do see, though, is that at 1080p, the top-end GPUs don’t treat it much differently than 1440p, although greater deltas will be seen as you move down the stack. At 4K, the top GPUs can hit 60 FPS, while the lowbie RX 5500 XT delivers 15 FPS.

OptiX Denoising & Wrapping Up

With that onslaught of performance data behind us, we hope you’re better informed about what hardware you should be going after. Like most ProViz work, how you use Blender can dictate which vendor’s CPU or GPU to go with. Some may prefer a beefy CPU and modest GPU due to external workloads demanding lots of CPU threads, while someone focusing on GPU rendering could go with a high-frequency CPU with a modest core count (e.g., eight cores).

Calling eight cores “modest” might seem a bit off, but AMD’s Ryzen series has made eight-core CPUs available to the masses for over three years, and if you’re any sort of heavy multi-tasker, those extra cores will make a difference. If you’re hoping to get important work done on your machine, you won’t want to slow down your general use.

Users can get away with lesser hardware as long as they know the limitations. Today, even a 6-core Ryzen 3600X (~$249 USD) and RTX 2060 SUPER (~$399 USD) would be a powerful combination for the price, but you also need to look to the future, and set expectations for how long it will take before that hardware will be in need of a replacement. Naturally, if you’re still unsure of what to do, feel free to leave a comment below.

On the CPU rendering side, core count matters more than anything, but core-for-core, AMD still manages to eke ahead of Intel in each of our match-ups (although we don’t have as many Intel CPUs as we do AMD). Notably, AMD’s 16-core 3950X (~$749 USD) manages to beat out Intel’s 18-core 10980XE (~$999 USD).

For GPUs, it’s impossible to ignore the strengths of NVIDIA’s GPUs across all tests. There are many scenarios where AMD is strong, but from an overall standpoint, NVIDIA delivers strong performance in every metric we test. That includes Cycles rendering with or without OptiX acceleration, Eevee still or animation rendering, and viewport frame rate.

Part of the reason for weaker AMD performance may be the lack of optimization for AMD’s architecture. Radeon ProRender is a good alternative to Cycles if you build your project around it, as simply converting your existing Cycles project to ProRender won’t always work. Even NVIDIA GPUs can use ProRender (and quite well, based on previous testing).

On the topic of OptiX, Blender 2.81 introduced us to rendering images through NVIDIA’s accelerated API, while 2.83 now adds AI denoising to the mix. This new feature exclusively affects the viewport, allowing RTX owners to use their GPU’s Tensor cores to speed up in-place renders. The first image in the slider above is of Blender’s Material Preview mode (introduced in 2.80). The second image is of a 30-second CUDA render, while the third is a 10-second render with AI denoising enabled.

The coolest thing about the OptiX denoising is just how fast it takes place. For a few seconds, you’ll see an image iterate a few samples, and then it reaches a point where it effectively pops to the end result. How accurate this type of viewport render will be depends on countless variables, and in some cases, using the look development mode might be suitable enough. As with all things AI, this feature’s effectiveness will improve over time.

As mentioned in the intro, the next major release for Blender will be 2.90, due in August. At this point, we’re not sure of everything it will introduce, or whether it will be worth retesting for, but we’ll start to eye things once that version gets closer. As always, if you have questions, comments, or suggestions, we welcome you to comment below.
