Blender 3.4 Performance Deep-dive: Cycles, Eevee & Viewport

Blender 3.4 was released just a few weeks ago, and as usual, we couldn’t wait long before jumping in and exploring its performance with current GPUs from AMD, Intel, and NVIDIA. With 22 graphics cards and six Cycles and Eevee projects in hand for rendering and viewport testing, let’s find out which GPUs rise above the rest.

Page 1 – Blender 3.4: Introduction; Cycles Performance

Get the latest GPU rendering benchmark results in our more up-to-date Blender 3.6 performance article.

We have a hard time not talking about Blender, so what better article to greet 2023 with than a deep-dive look at performance in the newest 3.4 version? As always, we’ll be taking a look at the latest version with our collection of GPUs across rendering tests for both Cycles and Eevee, as well as frame rates from inside the interactive viewport.

The release of Blender 3.4 ties into the release of a new Blender Studio open movie called Charge. These open movies exist not just to highlight what Blender is capable of, but to provide the actual project files so that users can dig in and better understand how everything comes together. If you haven’t seen Charge yet, definitely check it out:

We had hoped to include the Charge splash screen project in our benchmarking results here, but it currently crashes Blender when we try to render it on any of our configurations. We’ll revisit the project ahead of the 3.5 launch, as it’d be great to include for testing since it’s one of the best-looking projects we’ve seen.

For those who want a quick look at the key features added to Blender 3.4, check out the official video that explains it in five minutes. If you want to get into the nitty gritty, you should explore the full-blown release notes.

Despite this being a GPU-focused article, one of the notable features found in Blender 3.4 revolves around Intel’s path guiding library, which can dramatically improve sampling efficiency in CPU-bound rendering workloads. In effect, you will see a much more detailed result with the same sample level. We’ve yet to test Blender 3.4 for CPU rendering, but will dive in shortly for an upcoming review.

While Blender 3.3 introduced support for Intel’s Arc, the latest drivers are recommended for the best experience in both 3.3 and 3.4. With AMD HIP in Linux, an upgrade to ROCm 5.3 or newer is needed in order to render on Radeon GPUs. On Macs, Intel GPUs can now be used for rendering under macOS 13.

AMD, Intel & NVIDIA GPU Lineups, Our Test Methodologies

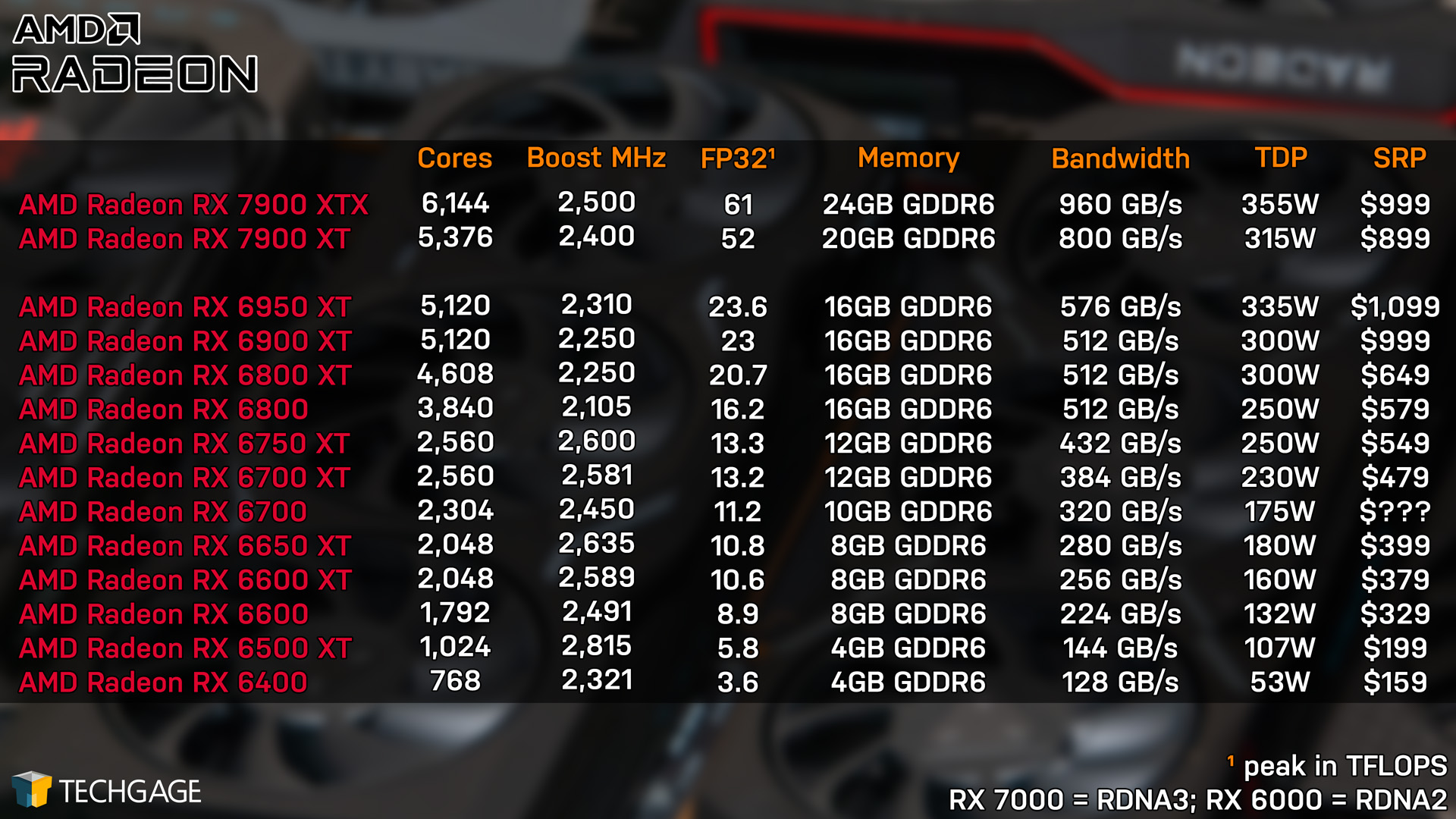

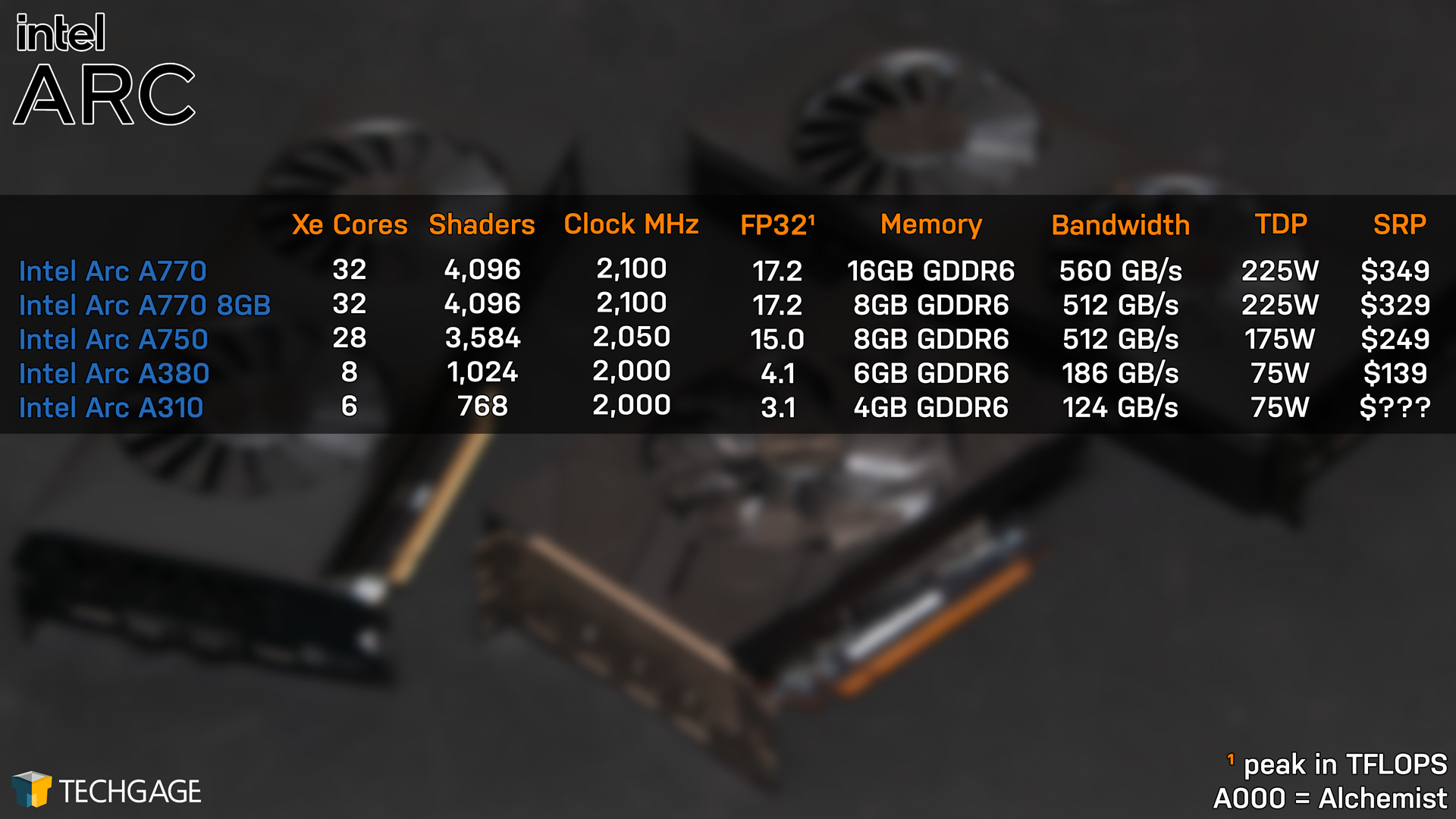

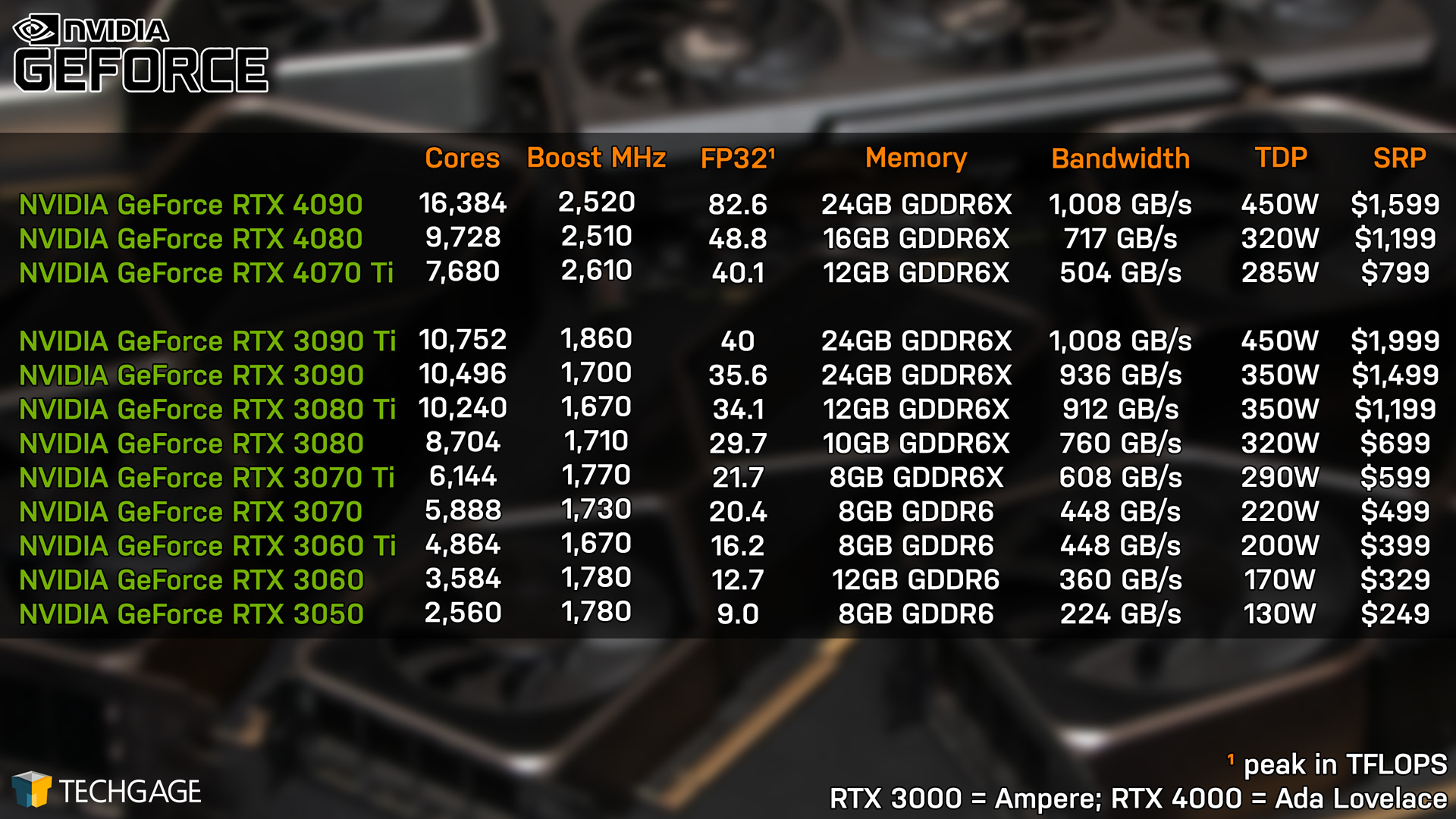

The tables below represent the current- and last-gen GPU lineups from all three graphics vendors. Since our Blender 3.3 deep-dive was posted, AMD has launched its newest Radeons, built on the RDNA3 architecture.

With GPU pricing being all over the place lately, it’s harder than ever to pin one model as being the “best” bang-for-the-buck. SRP-wise, the most alluring choice to us continues to be NVIDIA’s GeForce RTX 3070, although for more modern workloads, its 8GB frame buffer might feel limiting before long.

Currently, AMD’s and NVIDIA’s newest GPUs cater to those who are willing to spend $800 or more on their new graphics card, so if that’s too rich for your blood, the used market is going to be worth looking at. With these new GPU generations rolling out, last-gen cards are filling up various marketplaces, so if you keep your eyes peeled, you may be able to score a competent last-gen GPU model for an actually attractive price.

Nonetheless, performance is what this article is all about, so let’s move on to a quick look at our test PC and general testing guidelines, then get on with the show:

| Techgage Workstation Test System | |
| --- | --- |
| Processor | AMD Ryzen 9 5950X (16-core; 3.4GHz) |
| Motherboard | ASRock X570 TAICHI (EFI: P5.00 10/19/2022) |
| Memory | Corsair VENGEANCE (CMT64GX4M4Z3600C16) 16GB x2<br>Operates at DDR4-3600 16-18-18 (1.35V) |
| AMD Graphics | AMD Radeon RX 7900 XTX (24GB; Adrenalin 22.12.2)<br>AMD Radeon RX 7900 XT (20GB; Adrenalin 22.12.2)<br>AMD Radeon RX 6900 XT (16GB; Adrenalin 22.11.2)<br>AMD Radeon RX 6800 XT (16GB; Adrenalin 22.11.2)<br>AMD Radeon RX 6800 (16GB; Adrenalin 22.11.2)<br>AMD Radeon RX 6700 XT (12GB; Adrenalin 22.11.2)<br>AMD Radeon RX 6600 XT (8GB; Adrenalin 22.11.2)<br>AMD Radeon RX 6600 (8GB; Adrenalin 22.11.2)<br>AMD Radeon RX 6500 XT (4GB; Adrenalin 22.11.2) |
| Intel Graphics | Intel Arc A770 (16GB; Arc 31.0.101.3959)<br>Intel Arc A750 (8GB; Arc 31.0.101.3959)<br>Intel Arc A380 (6GB; Arc 31.0.101.3959) |
| NVIDIA Graphics | NVIDIA GeForce RTX 4090 (24GB; GeForce 527.56)<br>NVIDIA GeForce RTX 4080 (16GB; GeForce 527.56)<br>NVIDIA GeForce RTX 4070 Ti (12GB; GeForce 527.62)<br>NVIDIA GeForce RTX 3090 (24GB; GeForce 527.56)<br>NVIDIA GeForce RTX 3080 Ti (12GB; GeForce 527.56)<br>NVIDIA GeForce RTX 3080 (10GB; GeForce 527.56)<br>NVIDIA GeForce RTX 3070 Ti (8GB; GeForce 527.56)<br>NVIDIA GeForce RTX 3070 (8GB; GeForce 527.56)<br>NVIDIA GeForce RTX 3060 Ti (8GB; GeForce 527.56)<br>NVIDIA GeForce RTX 3060 (12GB; GeForce 527.56)<br>NVIDIA GeForce RTX 3050 (8GB; GeForce 527.56) |
| Audio | Onboard |
| Storage | AMD OS: Samsung 500GB Enterprise SSD (SATA)<br>Intel OS: Samsung 500GB Enterprise SSD (SATA)<br>NVIDIA OS: Samsung 500GB Enterprise SSD (SATA) |
| Power Supply | Corsair RM850X |
| Chassis | Fractal Design Define C Mid-Tower |
| Cooling | AMD Wraith Prism Air Cooler |
| Et cetera | Windows 11 Pro build 22621 (22H2)<br>AMD chipset driver 4.11.15.342 |
| All product links in our specs tables are affiliated, and help support our work. | |

All of the benchmarking conducted for this article was completed using an up-to-date Windows 11 (22H2) install, the latest AMD chipset driver, and the latest (as of the time of testing) graphics driver.

Here are some general guidelines we follow:

- Disruptive services are disabled, e.g. Search, Cortana, User Account Control, Defender, etc.

- Overlays and / or other extras are not installed with the graphics driver.

- Vsync is disabled at the driver level.

- OSes are never transplanted from one machine to another.

- We validate system configurations before kicking off any test run.

- Testing doesn’t begin until the PC is idle (keeps a steady minimum wattage).

- All tests are repeated until there is a high degree of confidence in the results.
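The last guideline doesn’t say how “a high degree of confidence” is judged, so the following is only one possible way to express such a rule, not a description of our actual procedure: keep re-running a test until the most recent timings agree within a tolerance. The function names, the three-run window, and the 2% tolerance are all assumptions made for illustration.

```python
import statistics
from typing import Callable, List


def is_stable(times: List[float], window: int = 3, tolerance: float = 0.02) -> bool:
    """Return True when the last `window` timings vary by less than `tolerance`.

    Variation is measured as (max - min) / mean of the recent runs.
    `window` and `tolerance` are illustrative defaults, not values
    taken from the article.
    """
    if len(times) < window:
        return False
    recent = times[-window:]
    mean = statistics.mean(recent)
    if mean <= 0:
        return False
    return (max(recent) - min(recent)) / mean < tolerance


def repeat_until_stable(run_once: Callable[[], float], max_runs: int = 10) -> List[float]:
    """Call `run_once` (returns seconds) until timings settle or `max_runs` is reached."""
    times: List[float] = []
    while len(times) < max_runs:
        times.append(run_once())
        if is_stable(times):
            break
    return times
```

A rule like this discards nothing; it simply keeps sampling until a slow first run (shader compilation, cold caches) no longer sits inside the comparison window.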

Note that all of the rendering projects tested for this article can be downloaded straight from Blender’s own website. Default values for each project have been left alone, so you can set your render device, hit F12, and compare your render time in a given project to ours.
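If you’d rather script the comparison than press F12 by hand, a rough sketch follows. It assumes a `blender` executable on your PATH and uses Blender’s background-render flags (`-b`, `-f`) along with the `--cycles-device` argument that Cycles reads after `--`; the helper names and the `.blend` path in the usage example are hypothetical. Wall-clock time measured this way includes Blender’s startup and scene loading, so it will read higher than the render time Blender itself reports.

```python
import subprocess
import time
from typing import List

# Device names as we understand them from Blender's command-line
# documentation for --cycles-device; treat the list as an assumption.
CYCLES_DEVICES = {"CPU", "CUDA", "OPTIX", "HIP", "ONEAPI", "METAL"}


def build_render_command(blend_file: str, device: str, frame: int = 1,
                         blender_exe: str = "blender") -> List[str]:
    """Build a background-render command for one frame on the given device."""
    device = device.upper()
    if device not in CYCLES_DEVICES:
        raise ValueError(f"unknown Cycles device: {device}")
    return [
        blender_exe,
        "-b", blend_file,   # run without the UI
        "-f", str(frame),   # render a single frame
        "--",               # arguments after this are passed through to add-ons
        "--cycles-device", device,
    ]


def time_render(command: List[str]) -> float:
    """Run the command and return elapsed wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(command, check=True)
    return time.perf_counter() - start
```

For example, `time_render(build_render_command("project.blend", "OPTIX"))` followed by the same call with `"CUDA"` gives two numbers to compare on an NVIDIA card, provided the project is already set to render with Cycles.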

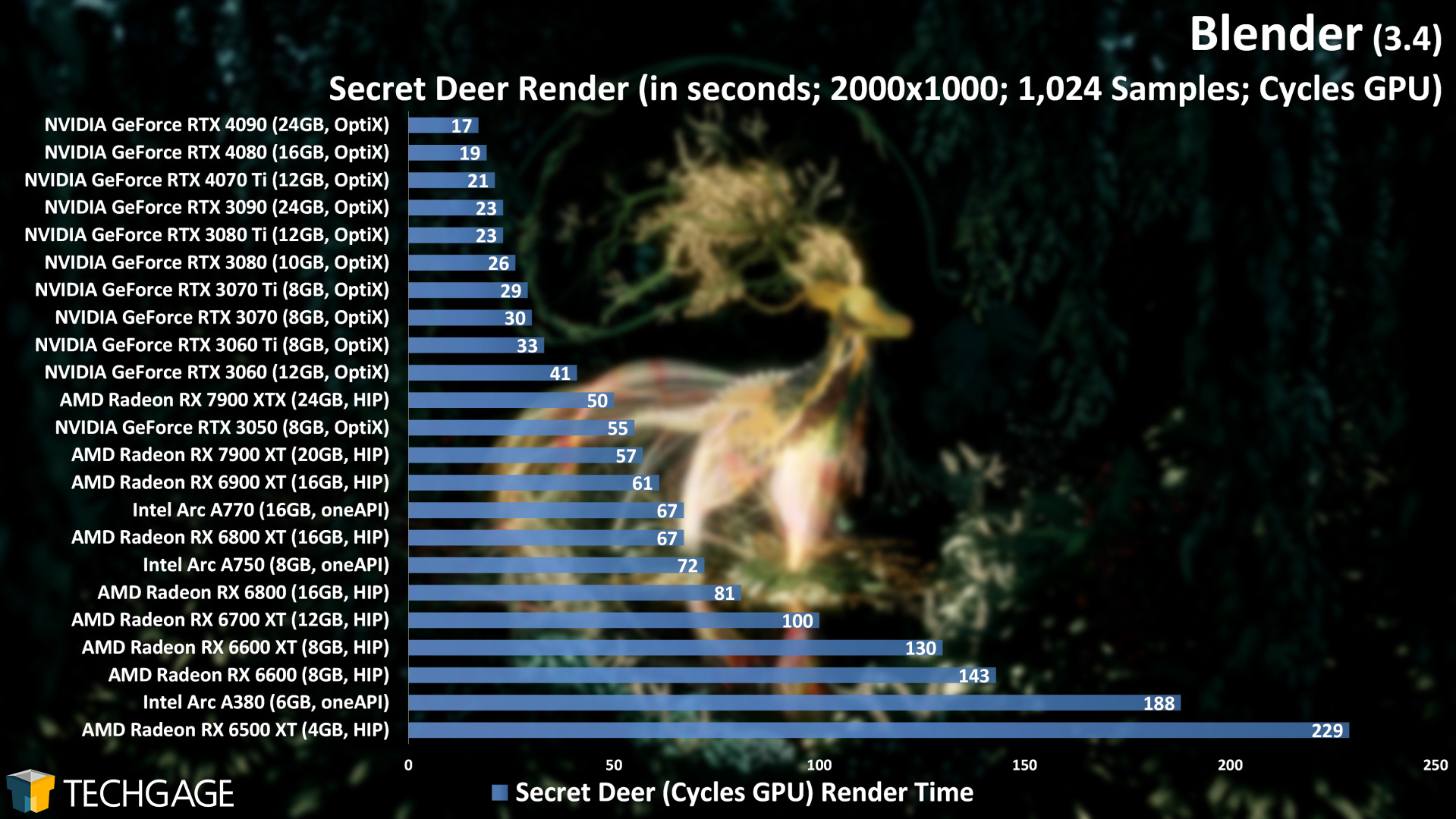

Cycles GPU: AMD HIP, Intel oneAPI & NVIDIA OptiX

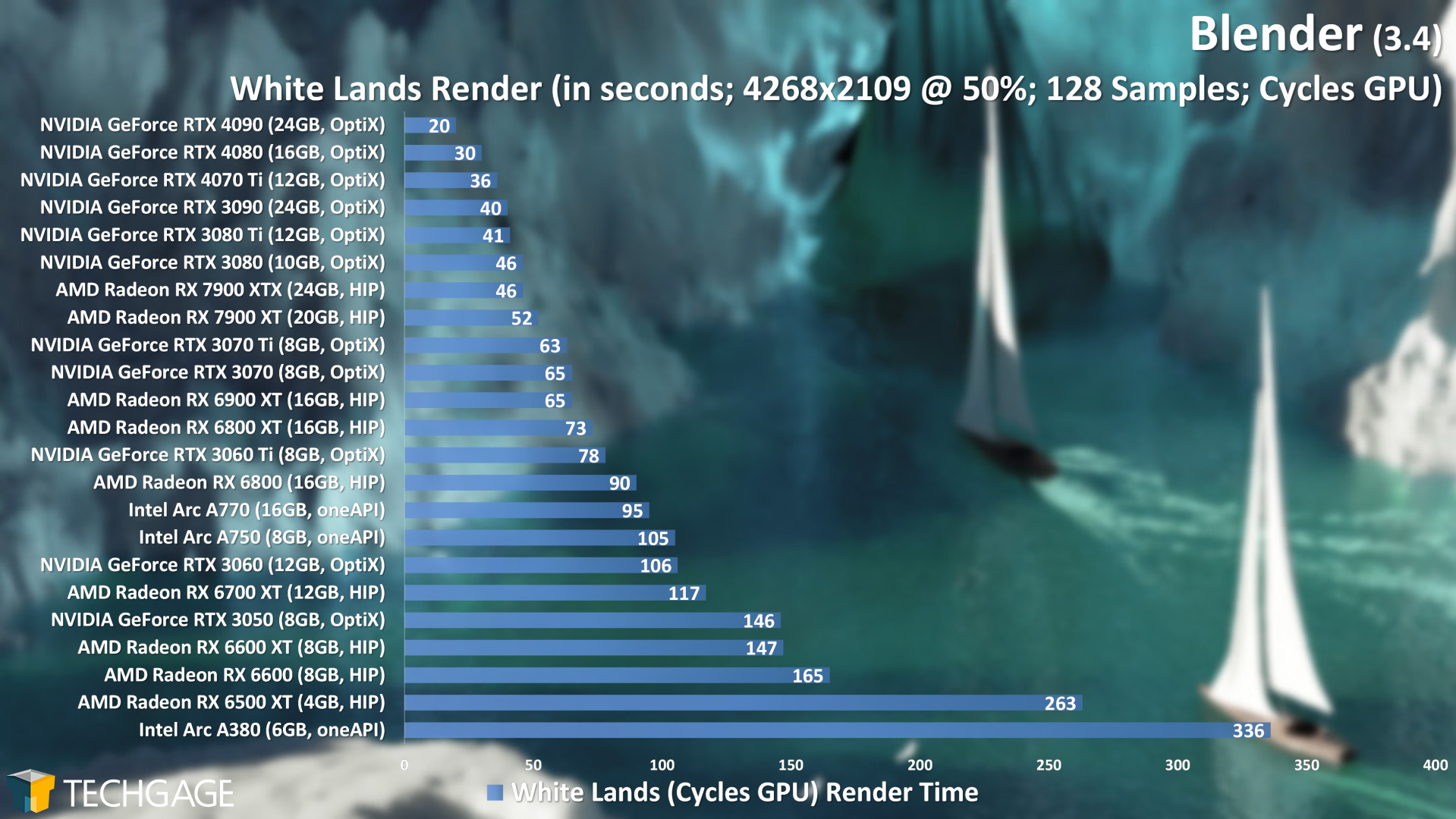

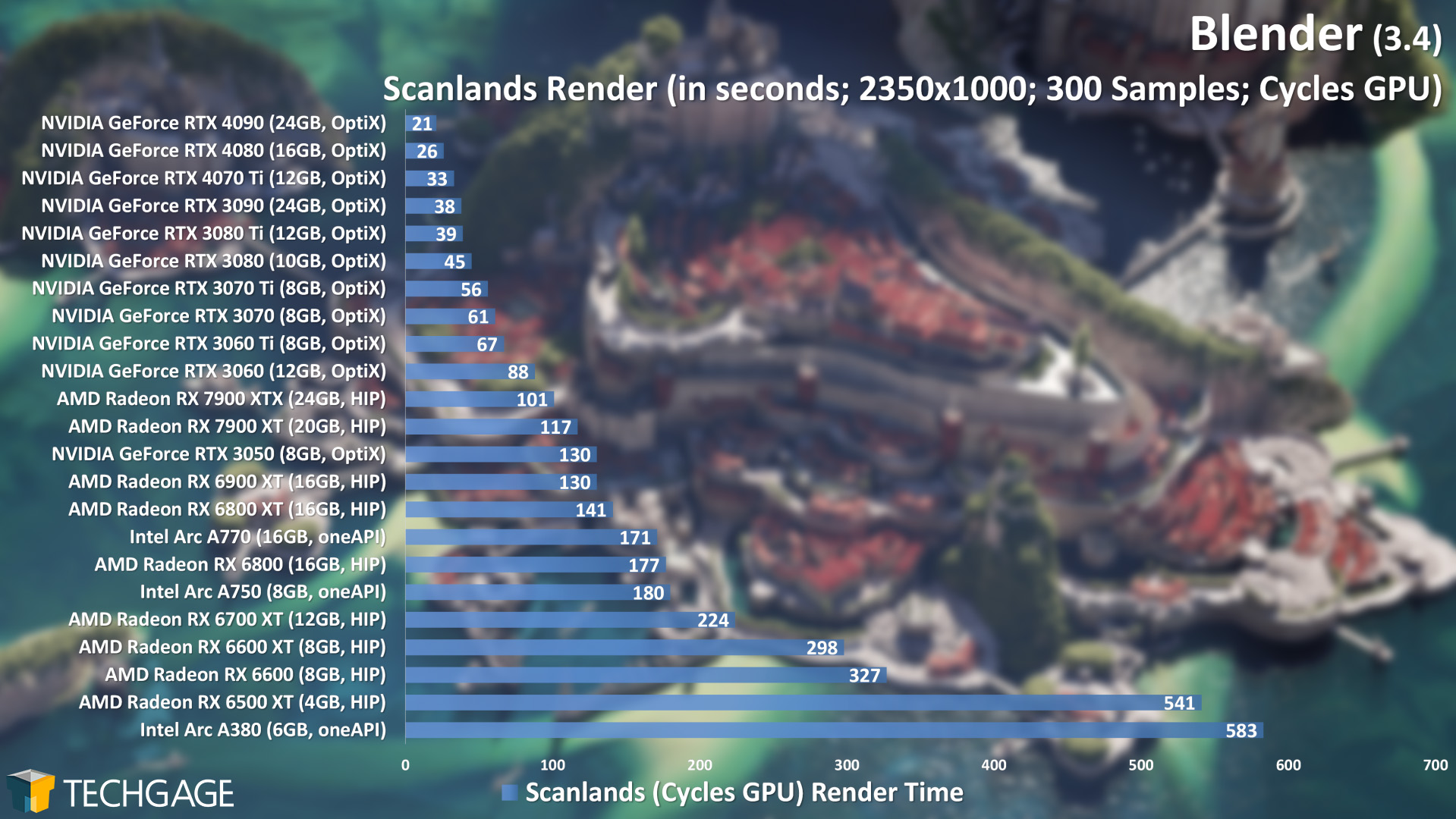

Comparing these results to those found in our Blender 3.3 performance deep-dive, we can see that little has changed. Some numbers did shift around – for the better – but not to a significant degree. The biggest difference is that these latest charts include AMD’s new RDNA3 GPUs. Both the RX 7900 XT and RX 7900 XTX struggle to keep up with even lower-end NVIDIA GPUs running the OptiX API, although both prove mighty in the White Lands project.

We’re beginning to sound like a broken record, but where Blender’s Cycles is concerned, NVIDIA can’t be beat. It really says something when AMD’s current-gen flagship struggles to overtake one of NVIDIA’s low-end options from the previous gen. OptiX is just too powerful for the competition to keep up with right now, which leads us to:

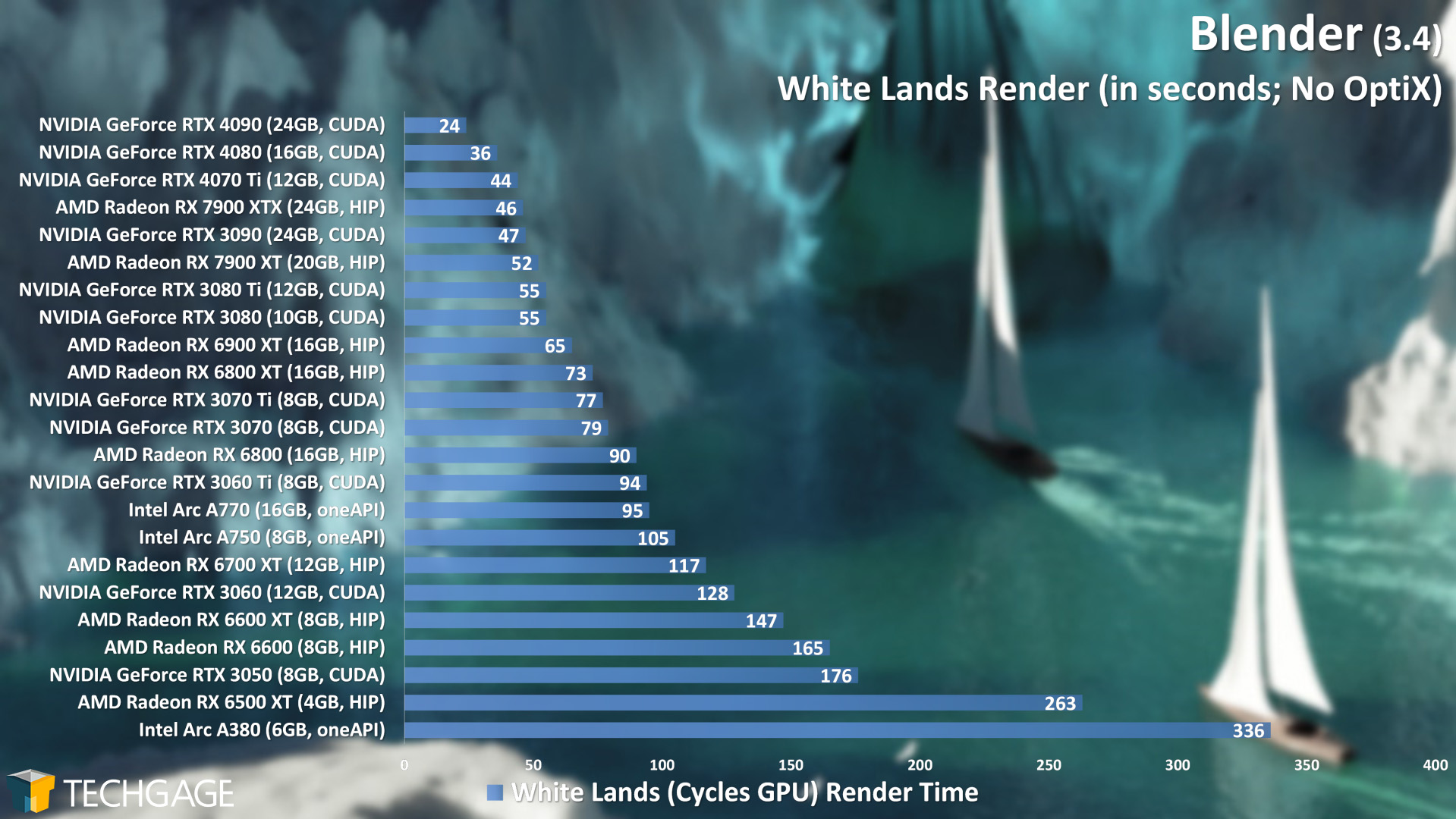

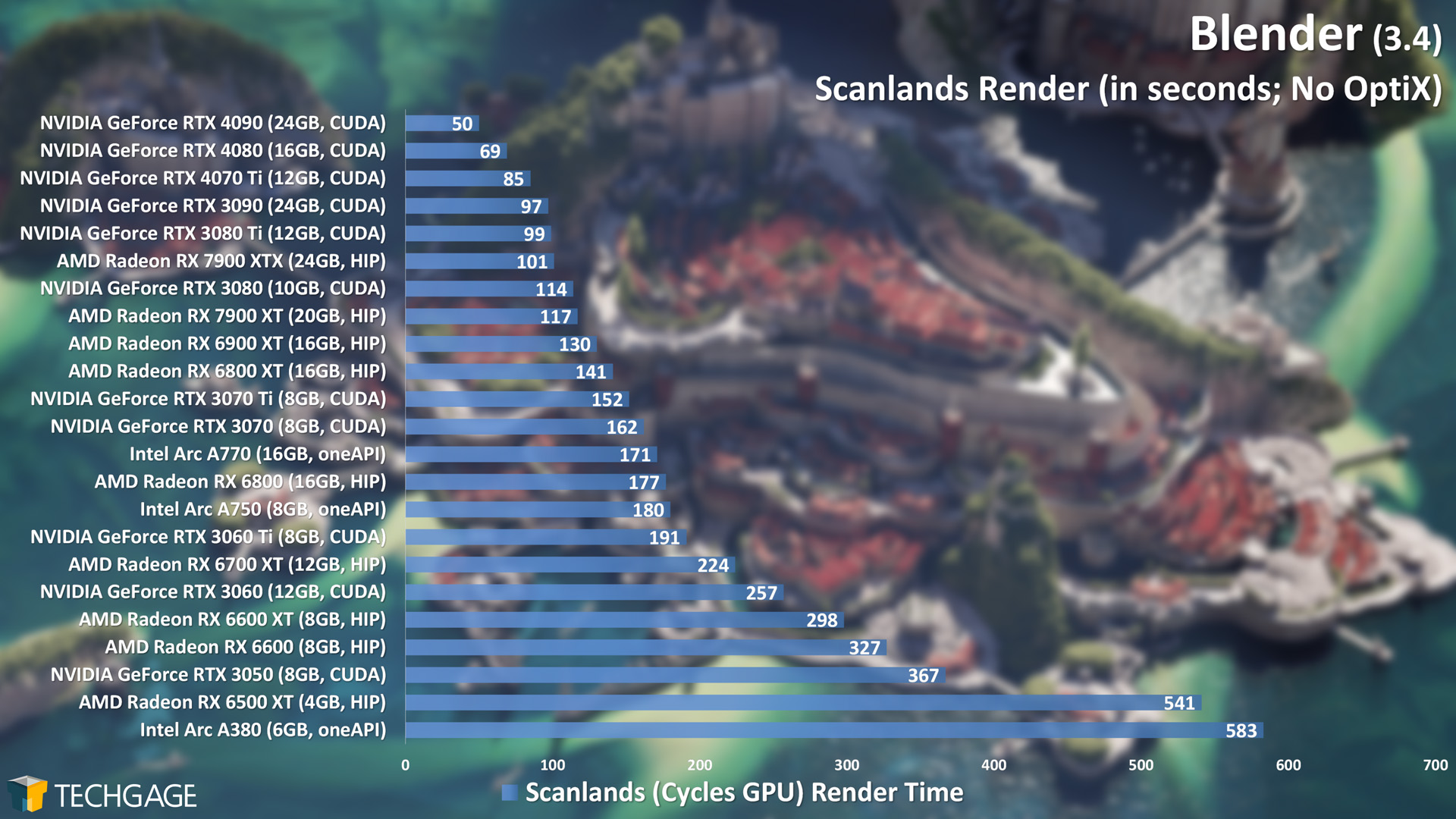

No OptiX: AMD HIP, Intel oneAPI & NVIDIA CUDA

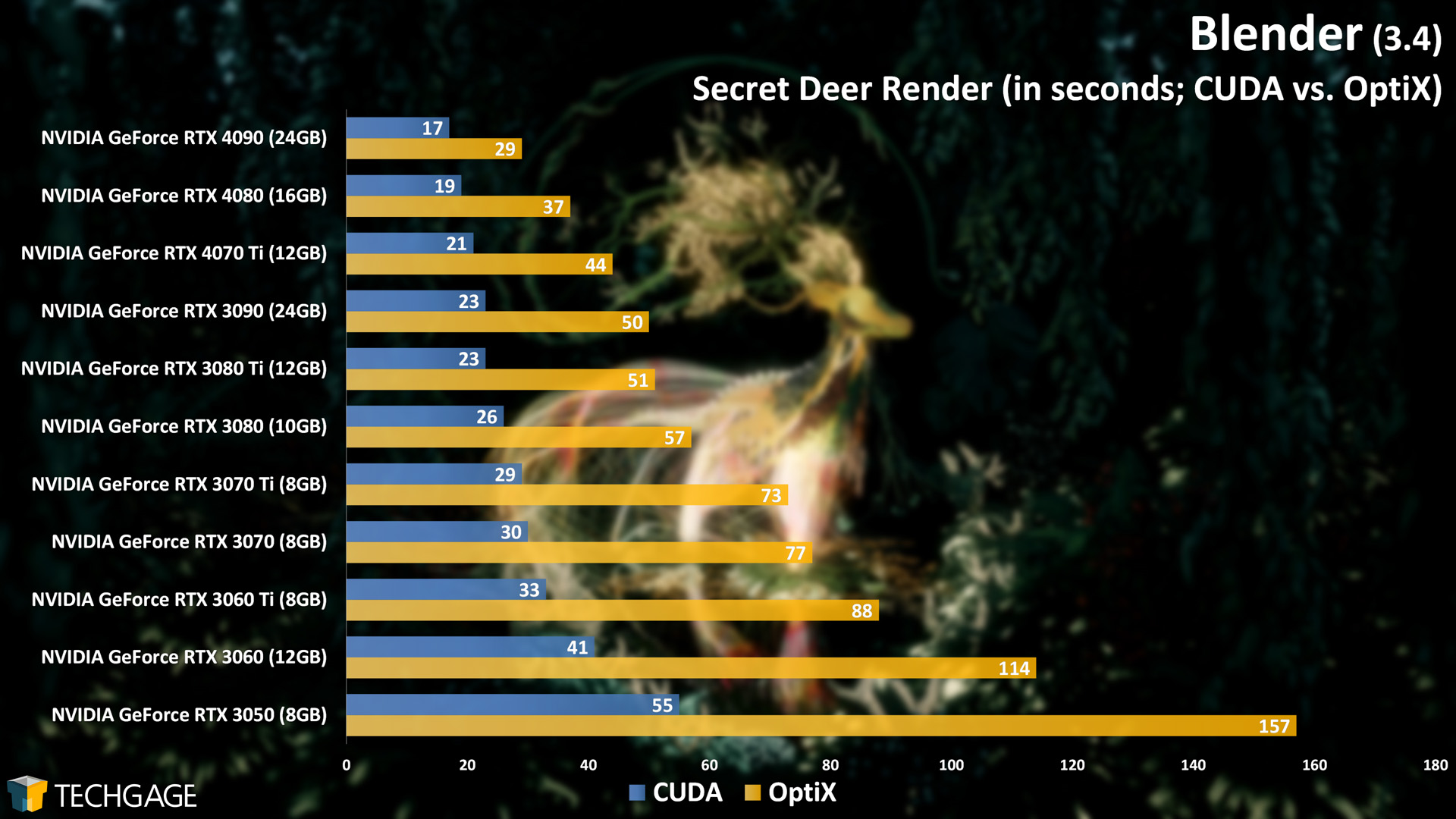

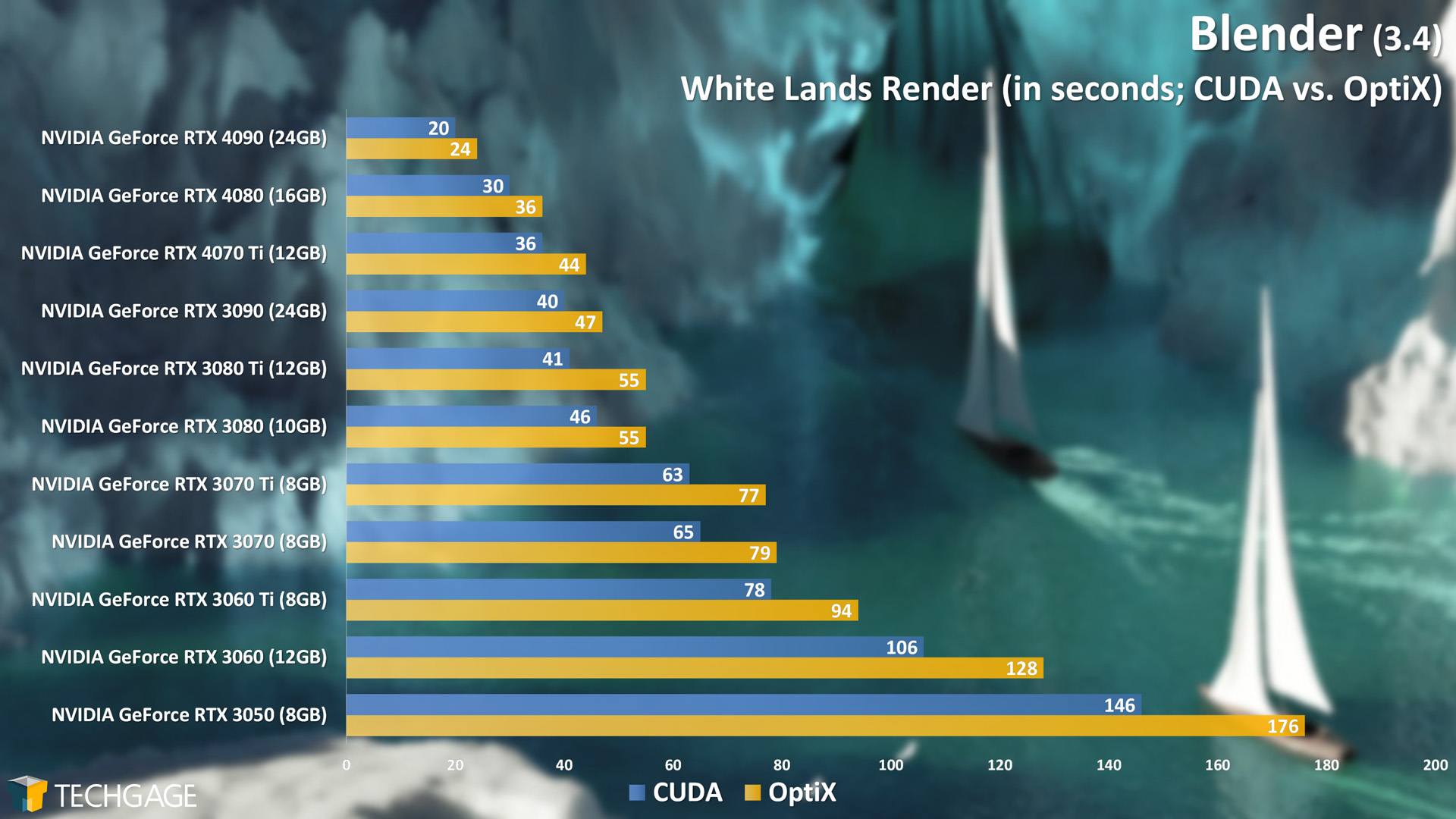

When setting up a render device in a modern version of Blender, you’ll spot both CUDA and OptiX options, either of which can be used with NVIDIA GPUs. The OptiX option first appeared in version 2.81, and in no time, it blew our socks off. Following its initial deployment, we regularly tested both CUDA and OptiX to show the huge performance differences between them.

About a year ago, we deemed that it was finally safe enough to consider OptiX in Blender a replacement for CUDA, so we dropped CUDA entirely, and simplified our testing by benchmarking the best API for each vendor. Because OptiX can tap into the dedicated ray tracing cores in RTX GPUs, and because the resulting image quality is the same, choosing OptiX is a no-brainer.

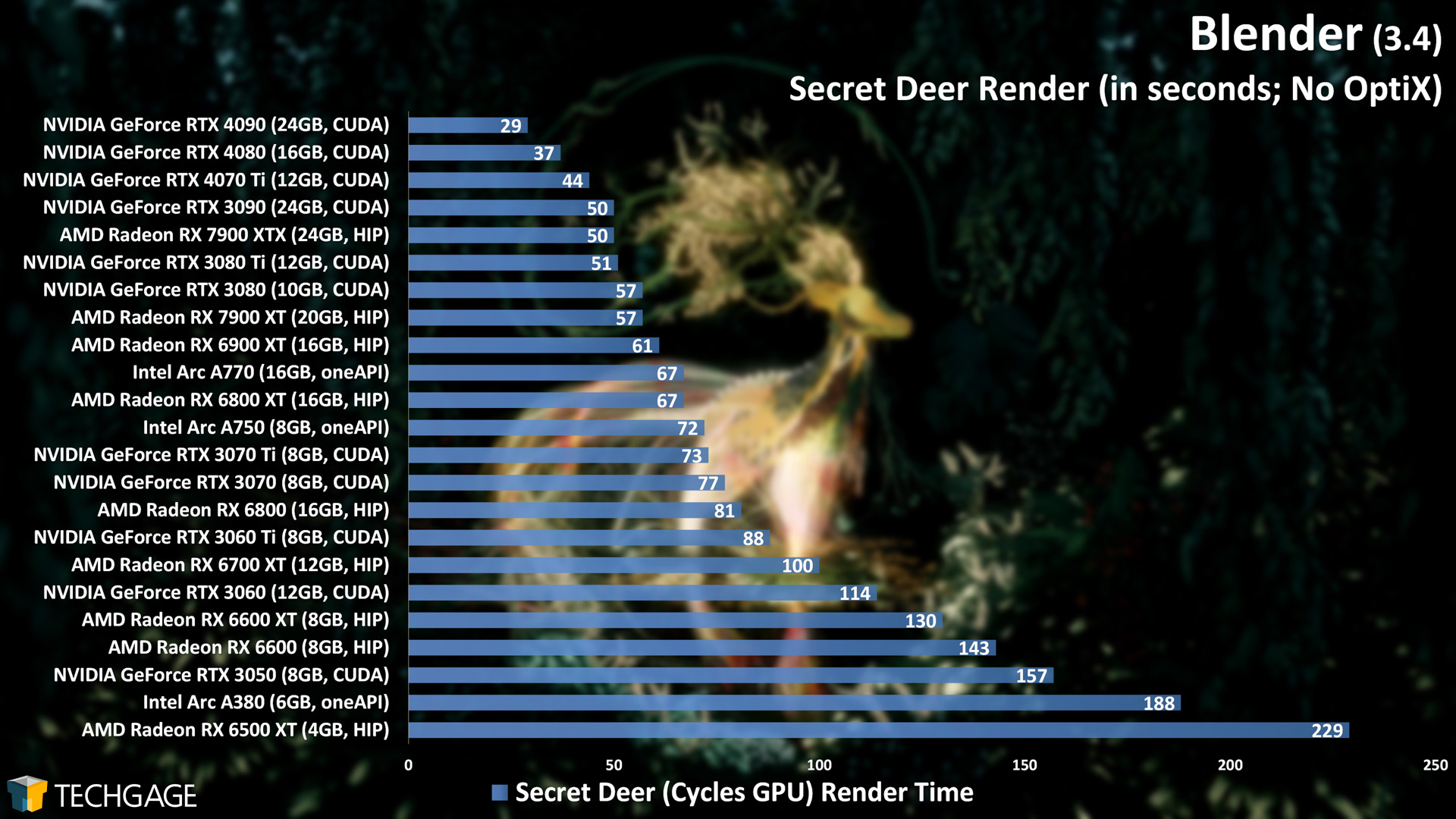

With the launch of Intel’s Arc GPUs a few months ago, we’ve heard from multiple readers that it might be a good time to compare all of the GPUs in an apples-to-apples way – that is, to opt for CUDA with NVIDIA GPUs, and stick to HIP for AMD, and oneAPI for Intel. That effectively means all GPU vendors are on a level playing field.

So, the next handful of graphs use the same projects as those above, but swap out NVIDIA’s OptiX for CUDA. We’re also including NVIDIA-only graphs that highlight the performance advantages OptiX enables across the board:

There’s a lot that can be gleaned from all of these results. In the Secret Deer project, for example, AMD’s top-end Radeon RX 7900 XTX sat behind the GeForce RTX 3060 when OptiX was involved, but with NVIDIA’s cards switched to CUDA, it climbs to sit beside NVIDIA’s last-gen top-end offerings. Those performance strengths for AMD continue through the other graphs.

Another thing that stands out is that even without OptiX, NVIDIA’s newest top-end Ada Lovelace GeForces can’t be touched. Both pull far enough ahead to sit in a performance class of their own. When we look at the OptiX vs. No OptiX graphs, we see plenty of examples where those RT cores can more than halve render times.

This gives us hope that when AMD and Intel gain their RT acceleration capabilities in Blender, we might see huge upticks in performance for them, as well. That said, we’re not going to count on anything until we see it, because as we’ve seen in the past, AMD’s Radeon ProRender offers RT acceleration for AMD’s GPUs, and NVIDIA still managed to win those battles, even without the ability to tap into OptiX.

We’re particularly keen on seeing how RT acceleration will help Intel. While Intel only caters to the lower-end part of the market right now, its Arc cards have proven to offer great rendering performance for their price-point. In our CUDA comparison, the Arc A770 places just behind the RTX 3070 in each project – the NVIDIA GPU we often call the best bang-for-the-buck. Meanwhile, AMD’s comparable last-gen card, the Radeon RX 6700 XT, falls behind both.

It’s expected that both AMD and Intel will have RT acceleration added to Blender by the 3.6 release at the latest, so if all goes well, we’ll get answers to all of our questions within the next six months.
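Whenever that support lands, the same before-and-after arithmetic behind the OptiX vs. CUDA comparison applies to any vendor: a render time that is “more than halved” corresponds to a speedup factor above 2×. The small helper below makes that explicit; the function names are our own, and the timings in the usage note are placeholders, not values read from our charts.

```python
def speedup(baseline_seconds: float, accelerated_seconds: float) -> float:
    """Return how many times faster the accelerated render is than the baseline."""
    if baseline_seconds <= 0 or accelerated_seconds <= 0:
        raise ValueError("render times must be positive")
    return baseline_seconds / accelerated_seconds


def time_saved_percent(baseline_seconds: float, accelerated_seconds: float) -> float:
    """Return the percentage of render time removed by acceleration."""
    if baseline_seconds <= 0 or accelerated_seconds <= 0:
        raise ValueError("render times must be positive")
    return (1.0 - accelerated_seconds / baseline_seconds) * 100.0


def more_than_halved(baseline_seconds: float, accelerated_seconds: float) -> bool:
    """True when the accelerated render takes less than half the baseline time."""
    return speedup(baseline_seconds, accelerated_seconds) > 2.0
```

With placeholder times of 60 seconds without RT acceleration and 25 seconds with it, `speedup(60, 25)` returns 2.4 and `more_than_halved(60, 25)` is `True`; a drop from 60 to 35 seconds would be a 1.71× speedup and would not qualify.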

The Cycles render engine is just one part of the Blender equation; on the next (and final) page, we’re going to explore performance when rendering with Eevee, and also working inside of the viewport.
