
NVIDIA GeForce RTX Performance In OctaneRender, Redshift & V-Ray

We don’t have Quadro RTX in the house for testing (yet), but we do have GeForce RTX, and in case you were wondering: they do in fact work just fine for workstation use. We’re pitting the GeForce RTX 2080 Ti, 2080, and 2070 (among eight others) against OctaneRender, Redshift, and V-Ray.

As covered a couple of times in the news section over the past week, we’ve been hard at work benchmarking way too many things at the same time, and thus we’re soon to have an explosion of performance content posted on the site (and to the YouTube channel).

Our workstation test PC has been in heavy use over the past week, benchmarking eight primary NVIDIA configurations, and then a few others on the side for good measure (which will be seen on this page). While the majority of the workstation applications we test with work for both AMD and NVIDIA, this article is dedicated to the three that are exclusive, or somewhat exclusive, to the green team.

Both OTOY’s OctaneRender and Redshift’s namesake renderer only support CUDA, so that rules out those sleek blue cards from AMD’s Radeon Pro camp. Chaos Group’s V-Ray is an exception, in that it now supports OpenCL on Radeon cards, but none of the projects we have access to render accurately on them. If I come across a project that renders correctly for the blue team, I’ll introduce Radeons into the testing.

At the moment, most of NVIDIA’s current-gen GeForces and Quadros are Turing-based, with the Volta-based GV100 proving to still be a good choice for those with specific deep-learning needs (and a lot of memory bandwidth). TITAN V could easily belong in the GeForce table, but given its focus, it’s worthier of being on the Quadro side. It’s also Volta-based, but has TITAN Xp-matching levels of VRAM, at 12GB.

NVIDIA’s Quadro Workstation GPU Lineup

| | Cores | Base MHz | Peak FP32 | Memory | Bandwidth | TDP | Price |
|---|---|---|---|---|---|---|---|
| GV100 | 5120 | 1200 | 14.9 TFLOPS | 32 GB HBM2 + ECC | 870 GB/s | 185W | $8,999 |
| RTX 8000 | 4608 | 1440 | 16.3 TFLOPS | 48 GB GDDR6 + ECC | 672 GB/s | TBA | $10,000 |
| RTX 6000 | 4608 | 1440 | 16.3 TFLOPS | 24 GB GDDR6 + ECC | 576 GB/s | 295W | $6,300 |
| RTX 5000 | 3072 | 1350 | 11.2 TFLOPS | 16 GB GDDR6 + ECC | 448 GB/s | 265W | $2,300 |
| TITAN V | 5120 | 1200 | 14.9 TFLOPS | 12 GB HBM2 | 653 GB/s | 250W | $2,999 |
| P6000 | 3840 | 1417 | 11.8 TFLOPS | 24 GB GDDR5X + ECC | 432 GB/s | 250W | $4,999 |
| P5000 | 2560 | 1607 | 8.9 TFLOPS | 16 GB GDDR5X + ECC | 288 GB/s | 180W | $1,999 |
| P4000 | 1792 | 1227 | 5.3 TFLOPS | 8 GB GDDR5 | 243 GB/s | 105W | $799 |
| P2000 | 1024 | 1370 | 3.0 TFLOPS | 5 GB GDDR5 | 140 GB/s | 75W | $399 |
| P1000 | 640 | 1354 | 1.9 TFLOPS | 4 GB GDDR5 | 80 GB/s | 47W | $299 |
| P620 | 512 | 1354 | 1.4 TFLOPS | 2 GB GDDR5 | 80 GB/s | 40W | $199 |
| P600 | 384 | 1354 | 1.2 TFLOPS | 2 GB GDDR5 | 64 GB/s | 40W | $179 |
| P400 | 256 | 1070 | 0.6 TFLOPS | 2 GB GDDR5 | 32 GB/s | 30W | $139 |

Architecture: P = Pascal; V = Volta (GV100, TITAN V); RTX = Turing.
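The Peak FP32 column follows the common convention of counting one fused multiply-add (two floating-point operations) per CUDA core per clock. As a minimal Python sketch, assuming that convention, working backwards from the table suggests the quoted TFLOPS figures correspond to clocks well above the listed base clocks, i.e. boost-clock numbers. The function names are illustrative only.

```python
# Assumption: peak FP32 counts one fused multiply-add (2 FLOPs)
# per CUDA core per clock cycle.
FLOPS_PER_CORE_PER_CLOCK = 2


def peak_fp32_tflops(cores: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 TFLOPS for a given core count and clock."""
    return cores * FLOPS_PER_CORE_PER_CLOCK * clock_mhz * 1e6 / 1e12


def implied_clock_mhz(cores: int, tflops: float) -> float:
    """Clock (MHz) a card would need to reach a quoted peak FP32 figure."""
    return tflops * 1e12 / (cores * FLOPS_PER_CORE_PER_CLOCK) / 1e6


# (name, cores, base MHz, quoted peak FP32 TFLOPS), taken from the table above
quadro = [
    ("RTX 6000", 4608, 1440, 16.3),
    ("RTX 5000", 3072, 1350, 11.2),
    ("P6000", 3840, 1417, 11.8),
    ("P4000", 1792, 1227, 5.3),
]

for name, cores, base_mhz, tflops in quadro:
    at_base = peak_fp32_tflops(cores, base_mhz)
    needed = implied_clock_mhz(cores, tflops)
    print(f"{name}: {at_base:.1f} TFLOPS at base clock; "
          f"quoted {tflops} TFLOPS implies ~{needed:.0f} MHz")
```

For the RTX 6000, for example, 4608 cores at the 1440MHz base clock work out to about 13.3 TFLOPS, so the quoted 16.3 TFLOPS implies a clock of roughly 1770MHz.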

Turing has so far powered three GPUs each for GeForce and Quadro, with the top dog being the Quadro RTX 8000, priced at $10,000. If 48GB is more VRAM than you need, or you want to save a few thousand dollars, the 24GB RTX 6000 is a worthy consideration. Even the RTX 5000 is attractive at its price point compared to the last-gen P5000, though that could be said about all of the RTX cards.
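One rough way to put the price-point comparison into numbers is dollars per peak FP32 TFLOPS. The sketch below is a hypothetical helper that uses only the table’s launch prices and TFLOPS figures; keep in mind it ignores VRAM capacity and ECC, which is exactly what the RTX 8000’s premium pays for.

```python
def dollars_per_tflops(price_usd: float, peak_fp32_tflops: float) -> float:
    """Launch price divided by theoretical peak FP32 throughput."""
    return price_usd / peak_fp32_tflops


# (Turing card, Pascal predecessor), each as (name, price in USD, peak FP32 TFLOPS),
# taken from the Quadro table above
comparisons = [
    (("RTX 5000", 2300, 11.2), ("P5000", 1999, 8.9)),
    (("RTX 6000", 6300, 16.3), ("P6000", 4999, 11.8)),
]

for new_card, old_card in comparisons:
    for name, price, tflops in (new_card, old_card):
        print(f"{name}: ${dollars_per_tflops(price, tflops):.0f} per peak FP32 TFLOPS")
```

By the table’s own numbers, the RTX 5000 works out to roughly $205 per TFLOPS versus roughly $225 for the P5000, and the RTX 6000 to roughly $387 versus roughly $424 for the P6000.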

Performance has seen a nice boost from Pascal to Turing as it is, but the prospect of software taking advantage of RTX features is what makes the new Quadros (and GeForces) exciting.

For those hoping to get great performance on the cheap, you’ll be happy to know that GeForces handle all three of the tasks on this page without issue. What I’m not entirely sure of at this point is whether RTX feature sets will differ between GeForce and Quadro in the future.

NVIDIA’s GeForce Gaming GPU Lineup

| | Cores | Base MHz | Peak FP32 | Memory | Bandwidth | TDP | Price |
|---|---|---|---|---|---|---|---|
| RTX 2080 Ti | 4352 | 1350 | 13.4 TFLOPS | 11GB GDDR6 | 616 GB/s | 250W | $999 |
| RTX 2080 | 2944 | 1515 | 10.0 TFLOPS | 8GB GDDR6 | 448 GB/s | 215W | $699 |
| RTX 2070 | 2304 | 1410 | 7.4 TFLOPS | 8GB GDDR6 | 448 GB/s | 175W | $499 |
| TITAN Xp | 3840 | 1480 | 12.1 TFLOPS | 12GB GDDR5X | 548 GB/s | 250W | $1,199 |
| GTX 1080 Ti | 3584 | 1480 | 11.3 TFLOPS | 11GB GDDR5X | 484 GB/s | 250W | $699 |
| GTX 1080 | 2560 | 1607 | 8.8 TFLOPS | 8GB GDDR5X | 320 GB/s | 180W | $499 |
| GTX 1070 Ti | 2432 | 1607 | 8.1 TFLOPS | 8GB GDDR5 | 256 GB/s | 180W | $449 |
| GTX 1070 | 1920 | 1506 | 6.4 TFLOPS | 8GB GDDR5 | 256 GB/s | 150W | $379 |
| GTX 1060 | 1280 | 1700 | 4.3 TFLOPS | 6GB GDDR5 | 192 GB/s | 120W | $299 |
| GTX 1050 Ti | 768 | 1392 | 2.1 TFLOPS | 4GB GDDR5 | 112 GB/s | 75W | $139 |
| GTX 1050 | 640 | 1455 | 1.8 TFLOPS | 2GB GDDR5 | 112 GB/s | 75W | $109 |

Architecture: GTX & TITAN = Pascal; RTX = Turing.

A Few Things To Bear In Mind

To nip a question in the bud: we do not have Quadro RTX cards in the lab. That could change in the future, but I’m at the mercy of what NVIDIA wants to send, and when it wants to send it. Fortunately, all three of the tests featured in this article perform the same on either GeForce or Quadro, which makes the GeForce RTX inclusion a good gauge of what can be expected from the equivalent workstation GPUs (with the Quadro RTX cards expected to perform slightly better thanks to having the full GPU unlocked).

On the topic of RTX, none of the tests included here can take advantage of the special features of Turing (right now); namely, the Tensor and RT cores. Chaos Group reported on its own benchmarking work this past week, at the same time noting that support for the RT core will come once NVIDIA’s OptiX engine itself supports it. I’d expect to see a few renderers take advantage of RTX features by the end of the year, but most of the love is going to come in 2019.

Across the ecosystem, Turing support is a little rough in parts right now, but that’s par for the course when a brand-new GPU architecture launches. In particular, the most up-to-date version of Blender will not render a scene with Turing, an issue known both to Blender and NVIDIA. Currently, the blobs needed to support Turing are being kept internal, and I have no timeline on when that will transition into public code. Whenever it happens, I’ll be throwing RTX at it.

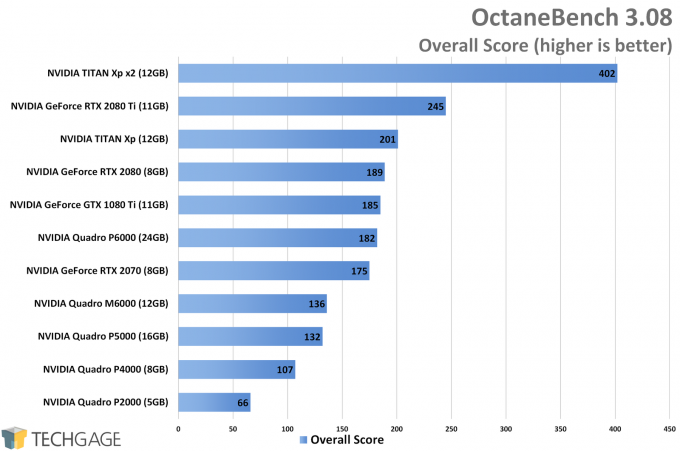

Even OctaneBench, included in this performance look, doesn’t support Turing. We got it working thanks to a tip from the fine folks at Puget Systems: simply download the 3.08 OctaneRender demo and copy its engine files into the OctaneRender folder. That’s really all it takes to add Turing support to OctaneBench, and as we’ll see, the results are impressive.
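For anyone who would rather script that file swap than drag files around by hand, here is a minimal Python sketch. It copies a caller-supplied list of files from the demo folder into the target folder and keeps a `.bak` copy of anything it overwrites so the change can be reverted. The function name, folder paths, and file names are all hypothetical placeholders; we don’t list which files make up the engine, so confirm that yourself before running anything like this.

```python
import shutil
from pathlib import Path


def copy_engine_files(demo_dir: Path, target_dir: Path, filenames: list[str]) -> list[Path]:
    """Copy the named files from demo_dir into target_dir.

    Hypothetical helper: the caller supplies the list of engine files,
    since this sketch does not know which files those are.
    """
    copied = []
    for name in filenames:
        src = demo_dir / name
        dst = target_dir / name
        if not src.is_file():
            raise FileNotFoundError(f"{src} not found in the demo install")
        if dst.exists():
            # Keep the original file so the swap can be undone later.
            shutil.copy2(dst, dst.with_name(dst.name + ".bak"))
        shutil.copy2(src, dst)
        copied.append(dst)
    return copied
```

Usage would look something like `copy_engine_files(Path(r"C:\path\to\demo"), Path(r"C:\path\to\target"), [...])`, with the real folders and engine file names filled in.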

Before finally jumping into testing, I have a few thoughts on V-Ray. While the renderer now offers OpenCL support for use with non-CUDA GPUs, AMD’s Radeon cards have yet to properly render a scene that renders properly on NVIDIA, at least among the public scenes I can find. I can only use what’s available to me, so your project may well render just fine. But the projects I do have render poorly enough that I’m forgoing Radeon inclusion for the time being.

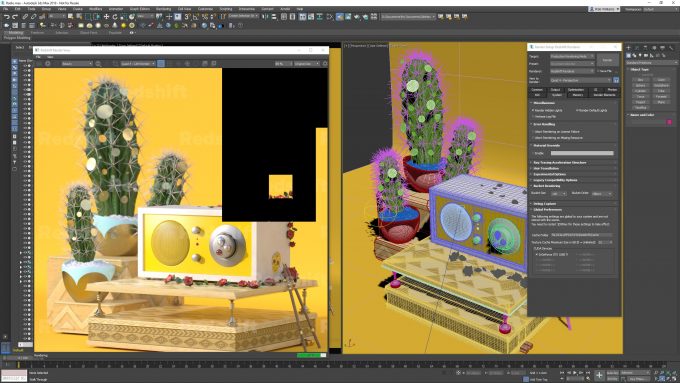

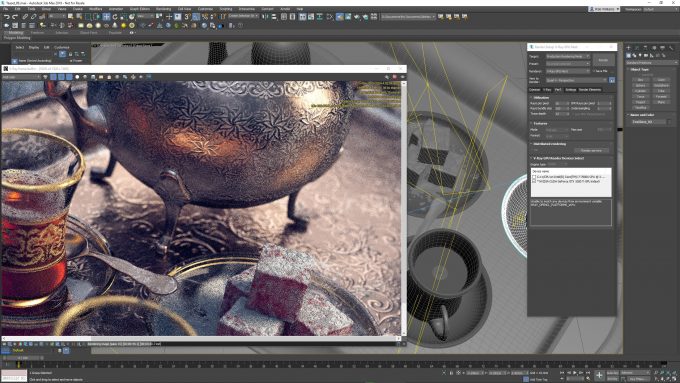

For an example of what I’m talking about, compare the Teaset render on both NVIDIA/CUDA, and then AMD/OpenCL (notice the color of the Turkish Delight):

I’m not entirely opposed to drinking tea that looks like it does on the AMD card, but when it’s supposed to look like the much lighter tea in the original shot, I think I’d pass.

I considered, for about half a second, including standalone V-Ray Benchmark results here as well, but the fact of the matter is that it doesn’t work great on AMD GPUs either. I’ve seen sporadic results from Radeon cards in that benchmark that I haven’t seen on GeForces and Quadros. On top of that, the latest version of the benchmark is based on the last-gen version of V-Ray (3.5), so it’s not an accurate gauge of current 4.0 performance (which you can read about here).

| Techgage Workstation Test System | |
|---|---|
| Processor | Intel Core i9-7980XE (18-core; 2.6GHz) |
| Motherboard | ASUS ROG STRIX X299-E GAMING |
| Memory | HyperX FURY (4x16GB; DDR4-2666 16-18-18) |
| Graphics | NVIDIA GeForce RTX 2080 Ti 11GB (NVIDIA FE; 416.34), NVIDIA GeForce RTX 2080 8GB (NVIDIA FE; 416.34), NVIDIA GeForce RTX 2070 8GB (ASUS STRIX; 416.34), NVIDIA TITAN Xp 12GB (416.34), NVIDIA GeForce GTX 1080 Ti 11GB (416.34), NVIDIA Quadro P6000 24GB (416.30), NVIDIA Quadro P5000 16GB (416.30), NVIDIA Quadro P4000 8GB (416.30), NVIDIA Quadro P2000 5GB (416.30), NVIDIA Quadro M6000 12GB (416.30) |
| Audio | Onboard |
| Storage | Kingston KC1000 960GB M.2 SSD |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | Corsair Carbide 600C Inverted Full-Tower |
| Cooling | NZXT Kraken X62 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 17763 (1809) |

For an in-depth pictorial look at this build, head here.

I won’t say much about our test rig, other than that it is in fact running the latest 1809 version of Windows, even though Microsoft pulled it not long after its release. I began this testing as soon as the October update was released, and thus had working media before it was pulled. While the build has known issues with file corruption, fresh installs have been fine (for me).

OK, let’s get on with the important bits:

OctaneRender

The most interesting cards of this bunch are the GeForce RTXs, and not just because I drew attention to them in the title. The GeForce variants of RTX might not be as ideal as Quadro in some situations, since Quadro-specific optimizations will improve things in some places, but for rendering performance (not viewport), GeForce will almost always perform just like Quadro.

The TITAN Xp might be last-gen at this point, but it’s still one hell of a GPU. Despite that, the new top-end GeForce RTX card manages to outperform it by about 22%. That’s not bad for a generational leap, but it also seems fair, since the 2080 Ti FE costs the same as the TITAN Xp did. The TITAN Xt, or whatever the top Turing card ends up being called, is sure to cost more than the Xp did.
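A relative gain like that ~22% needs different arithmetic depending on whether a result is a higher-is-better score (as OctaneBench reports) or a lower-is-better render time. The minimal Python sketch below, with hypothetical helper names, shows both, and also what the spec tables alone would predict: on paper, the 2080 Ti’s peak FP32 is only about 11% higher than the TITAN Xp’s, which suggests Turing is doing more work per TFLOPS in this renderer. The render times in the second example are made up for illustration and are not taken from our charts.

```python
def pct_gain_higher_is_better(new: float, old: float) -> float:
    """Percent gain for scores or throughput figures (e.g. a benchmark score, TFLOPS)."""
    return (new / old - 1.0) * 100.0


def pct_gain_lower_is_better(new_seconds: float, old_seconds: float) -> float:
    """Percent gain in throughput from render times: old time / new time."""
    return (old_seconds / new_seconds - 1.0) * 100.0


# What the spec tables alone would predict: RTX 2080 Ti vs TITAN Xp peak FP32.
on_paper = pct_gain_higher_is_better(13.4, 12.1)
print(f"Peak FP32 predicts ~{on_paper:.0f}% more throughput")

# Hypothetical render times (not from our charts): 100 s on the old card, 82 s on the new one.
faster = pct_gain_lower_is_better(82, 100)
naive = (100 - 82) / 100 * 100
print(f"100 s -> 82 s is ~{faster:.0f}% faster, not {naive:.0f}%")
```

The second example shows why render times shouldn’t be compared by simple subtraction: cutting a render from 100 seconds to 82 seconds is roughly a 22% throughput gain, not 18%.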

The nice thing about renderers is that the more GPU horsepower you have, the better the performance you’re going to get. As this chart proves, the performance can scale just as you’d expect. Add multiple GPUs, and your performance will really take off.

As the M6000 result proves, NVIDIA’s delivered some huge performance leaps from generation to generation, with the Pascal-based P6000 performing far better than that M6000, and the RTX 2080 Ti performing far better than that P6000. Since Quadro RTX 6000 has even more cores than the 2080 Ti, it’d pull even further ahead of the P6000.

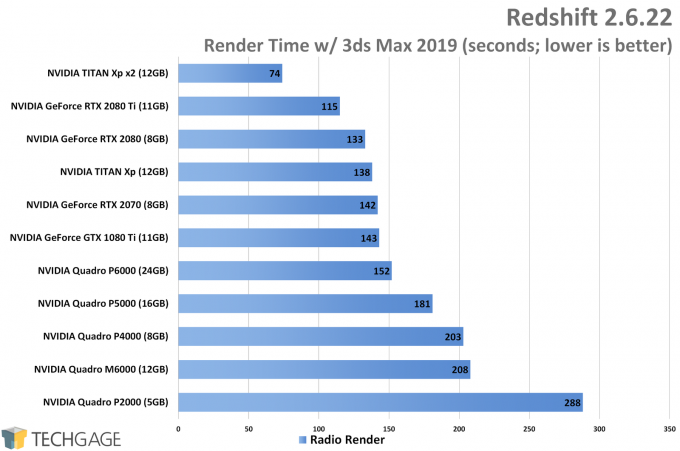

Redshift

The dual-GPU configuration wasn’t meant to be a focal point of this article, but Redshift helps prove once again that the more GPU horsepower there is on hand, the faster the render will be.
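To put a number on how well a renderer scales across cards, divide the single-GPU render time by the multi-GPU time to get the speedup, then divide by the number of GPUs to get scaling efficiency (1.0 means perfectly linear scaling). This is a minimal Python sketch assuming identical cards; the function name and the render times in the example are hypothetical, not results from our charts.

```python
def scaling_efficiency(single_gpu_seconds: float, multi_gpu_seconds: float,
                       gpu_count: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 = perfect).

    Assumes all GPUs are identical and both times are for the same scene.
    """
    if gpu_count < 1 or multi_gpu_seconds <= 0:
        raise ValueError("need at least one GPU and a positive render time")
    speedup = single_gpu_seconds / multi_gpu_seconds
    return speedup / gpu_count


# Hypothetical example: 300 s on one card, 160 s on two identical cards.
eff = scaling_efficiency(300, 160, 2)
print(f"speedup {300 / 160:.3f}x, efficiency {eff:.1%}")
```

In that made-up case, two cards deliver a 1.875x speedup, or about 94% of ideal scaling.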

In the OctaneRender results, last-gen’s TITAN Xp outperformed the current-gen RTX 2080, but that position has been reversed in Redshift. It’s probably safe to say that the RTX 2080 doesn’t match a TITAN Xp, but in many cases, it will come close. That’s of course ignoring RTX potential in the future, which could help a card like the 2080 far surpass the performance of last year’s top-end offering in certain areas.

The result that stood out to me most here was actually with the Quadro M6000, as it fell behind the less powerful P4000. The result was repeatable (have I ever mentioned how much I love reinstalling GPUs for sanity checks?!), so either the Pascal architecture brought a major performance boost to the renderer, or something else is holding Maxwell’s top-end Quadro back. It sure isn’t the VRAM.

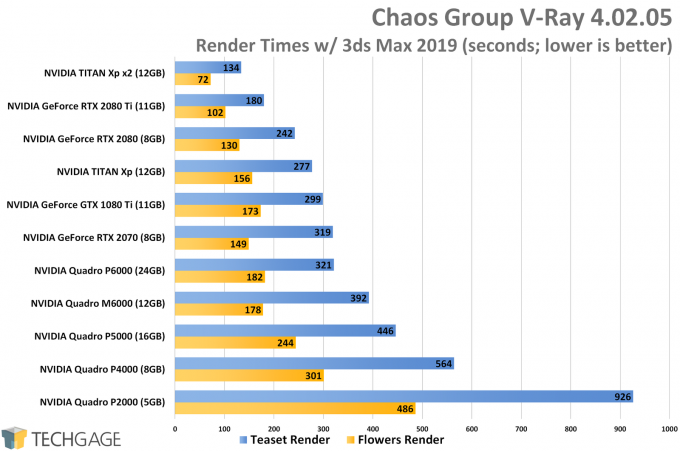

V-Ray GPU Next

The result out of the 2080 Ti in V-Ray is impressive. The gap between the 1080 Ti and the 2080 Ti is enormous, and it’s still enormous if we swap the 1080 Ti for a TITAN Xp. Even without the special RTX features being exercised, Turing exhibits some huge performance gains here. At the moment, the only cards that could displace the top single-GPU result are the Quadro RTX 6000 and 8000, and that’s ignoring the fact that they have a lot more VRAM to work with than their GeForce equivalents.

The RTX 2080 also shows its strengths quite well here, beating out last-gen’s top dog, the TITAN Xp. While RTX doesn’t currently accelerate every workload, it sure does make a difference in some of them, like V-Ray. Remember, this is without the Tensor and RT cores being used at all.

Again, the Quadro M6000 delivered some interesting performance: while it fell behind the P4000 in Redshift, it places ahead of the P5000 here, despite the P5000 being rated at over 8 TFLOPS and the M6000 at 6 TFLOPS. I have to chalk this up as another quirk; like the other anomaly, it’s repeatable.

Final Thoughts

As mentioned before, NVIDIA’s RTX technologies are not widely supported at the moment, hence the lack of testing on our part up to now. I have a suspicion V-Ray will be one of the first renderers out the door with official support for Turing RTX, and I can’t wait to dive in when it gets here. Redshift and Octane likewise have support en route.

I admit that it’s rather nice that GeForce RTX cards worked in all three renderers on this page without any issue, since we ran into a complete roadblock with Blender and its Cycles renderer. Across 16 tests, Blender proved to be the only outlier in our testing, so that bodes well for Turing overall. That Blender build can’t get here quick enough, though.

This article was designed to talk about nothing other than NVIDIA-specific tests, but there have been many other results benchmarked over the past week (and more will be generated over the next few days) that will grace the website in the very near future. Something something Radeon Pro WX 8200 something. Stay tuned.
