NVIDIA GTC 2016 Recap – Pascal GPUs, Iray VR, AI and Autonomous Racing

With the NVIDIA GTC event underway, a multitude of announcements have been made detailing upcoming projects and research initiatives. While the Pascal-powered Tesla P100 takes many of the headlines, there’s a lot more at play than just hardware. Have a read through the impact it will have on machine learning, Iray rendering, VR, and a new motorsport, Roborace.

Pascal Is Here: Tesla P100

NVIDIA’s annual GTC conference is usually abuzz with new consumer products, while also keeping businesses up to speed on what’s to come. This year, things were a little different in that there was very little in terms of consumer products (still no replacement for that GTX 980 Ti just yet). However, just because there haven’t been any consumer products doesn’t mean that what’s going on doesn’t affect consumers. In fact, what we are seeing now at GTC will trickle down into future generations of products, beyond the venerable GPU.

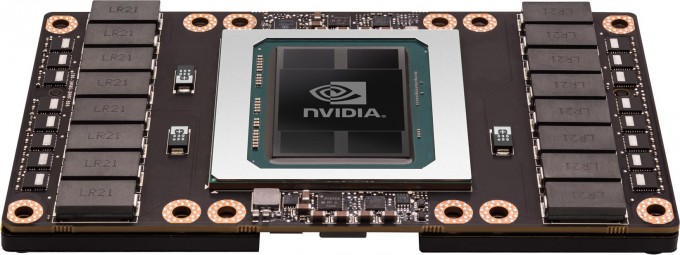

Yesterday was a storm of press announcements around what can only be called a beast of a compute card, the Tesla P100. In an odd turn of events, the very first Pascal architecture-based product to come from NVIDIA will not be for graphics, but for servers. Tesla cards are headless compute cards with zero graphics capabilities; instead they are built for massive parallel data crunching (which is effectively what graphics rendering is, but semantics).

The numbers are outrageous: 21 TFLOPS of FP16 compute, 16GB of HBM2 memory with 720GB/s of bandwidth, a 16nm FinFET production process packing 15 billion transistors into a 600mm² die, and NVLink for a 160GB/s interconnect between multiple GPUs in a system. NVIDIA puts the very first Pascal card at 12 times faster than the previous Maxwell-based compute cards, the M40 and M4. These cards aren’t ‘coming soon’ either; they are in production right now. NVIDIA will also be bundling 8 of these new Tesla cards in its own supercomputer modules called DGX-1, but more on that later.
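To put those headline figures in perspective, here is a small Python sketch that derives two ratios from them: average transistor density, and how many FP16 operations the card can issue per byte it reads from memory. The inputs are the rounded numbers quoted above; the function and constant names are our own, and the results are back-of-the-envelope estimates rather than official specifications.

```python
# Rounded figures quoted in this article for the Tesla P100.
FP16_TFLOPS = 21.0          # peak half-precision throughput
MEM_BANDWIDTH_GBS = 720.0   # HBM2 memory bandwidth
TRANSISTORS = 15e9          # transistor count
DIE_AREA_MM2 = 600.0        # die area in square millimetres


def transistor_density_per_mm2(transistors: float, area_mm2: float) -> float:
    """Average number of transistors per square millimetre."""
    return transistors / area_mm2


def flops_per_byte(tflops: float, bandwidth_gbs: float) -> float:
    """Peak FLOPs available for every byte read from memory."""
    return (tflops * 1e12) / (bandwidth_gbs * 1e9)


density = transistor_density_per_mm2(TRANSISTORS, DIE_AREA_MM2)
intensity = flops_per_byte(FP16_TFLOPS, MEM_BANDWIDTH_GBS)

print(f"{density / 1e6:.0f} million transistors per mm^2")   # 25 million
print(f"{intensity:.1f} FP16 FLOPs per byte of memory traffic")  # 29.2
```

Read loosely, the second number suggests a workload needs to perform roughly 29 FP16 operations per byte fetched before raw compute, rather than memory bandwidth, becomes the limit.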

FinFET Brings 16nm Manufacture Process to Pascal

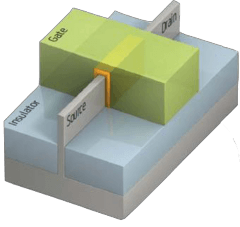

The performance leap is in no small part due to the manufacturing process. For the last few years, GPUs have effectively been stuck on the same 28nm production process, and this goes for both AMD and NVIDIA. While 24, 22 and even 20nm methods have been available, they don’t work very well for monolithic chips like GPUs due to transistor leakage. When TSMC started to experiment with FinFET-based transistors (rather than a flat transistor, the channel is a raised fin that the gate wraps around on three sides, creating a 3D-like transistor), we saw the first few revelations of 16nm and 14nm processes, capable of handling large chip designs.

You can read more about FinFET in a related AMD article on its Polaris architecture, since the benefits of the process are the same. Going from 28nm down to 16nm means there is up to a 60% reduction in power requirements (and thus, heat); couple this with smaller transistors, and it allows companies to pack in a lot more on the same wafer.

When it comes to compute though, hardware is only half the equation (realistically, it’s a lot less, but you get the idea); the software also needs to be able to handle all this raw electrical muscle. During the GTC keynote, a number of items were dedicated to new developer tools and APIs to help leverage the new advances in hardware, but also to bring about NVIDIA’s next major target market: machine learning.

NVIDIA Deep Learning AI Libraries

NVIDIA breaks down its software packages into seven key sections, with a good chunk dedicated to AI and neural networks. The deep learning package is a set of primitives for building neural networks, pattern recognition and learning algorithms. This leads into the next two major categories of self-driving cars (fairly obvious) and autonomous machines (robots, drones, industrial machines). These three software packages make use of cuDNN, NVIDIA’s CUDA library of deep neural network primitives. NVIDIA has a number of plans involving vehicles, which we’ll touch on later.
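For readers wondering what a neural network "primitive" looks like, the sketch below implements two of the usual suspects, a 2D convolution and a ReLU activation, in plain Python. This is not cuDNN's API and bears no resemblance to how a GPU library would implement them; the function names and the tiny example data are our own, chosen purely to show the kind of building block such libraries accelerate.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(matrix: Matrix) -> Matrix:
    """Clamp negative values to zero, leaving positive values untouched."""
    return [[max(0.0, value) for value in row] for row in matrix]


# Hypothetical 3x3 "image" and a 1x2 kernel that responds to left-to-right increases.
image = [
    [1.0, 2.0, 0.0],
    [0.0, 1.0, 3.0],
    [4.0, 0.0, 1.0],
]
kernel = [[-1.0, 1.0]]

features = relu(conv2d_valid(image, kernel))
print(features)  # [[1.0, 0.0], [1.0, 2.0], [0.0, 1.0]]
```

A real network chains thousands of these operations over far larger arrays, which is exactly the sort of massively parallel arithmetic a card like the Tesla P100 is built for.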

Accelerated computing is big business, as there is an ever greater demand for not just centralized supercomputers, but also distributed cloud-based processing. Finance, oil and gas all make use of heavy computation, not just for research but also for monitoring. Pascal’s updated NVLink interconnect in the Tesla P100 will help share resources across hardware quickly, and it also allows for unified memory (that 16GB of VRAM can be accessed by other GPUs in the system).
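As a rough illustration of what sharing memory across GPUs costs, this Python sketch compares how long it would take to sweep a card's full 16GB locally against pulling the same data over NVLink. It assumes the 720GB/s and 160GB/s figures quoted in this article are sustained rates, which real workloads will not reach, and the helper name is our own.

```python
VRAM_GB = 16.0       # memory per Tesla P100
LOCAL_GBS = 720.0    # HBM2 bandwidth on the card itself
NVLINK_GBS = 160.0   # quoted NVLink interconnect bandwidth


def transfer_seconds(size_gb: float, bandwidth_gbs: float) -> float:
    """Ideal time to move size_gb at a constant bandwidth_gbs."""
    if bandwidth_gbs <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gbs


local_time = transfer_seconds(VRAM_GB, LOCAL_GBS)
remote_time = transfer_seconds(VRAM_GB, NVLINK_GBS)
slowdown = remote_time / local_time

print(f"local sweep:  {local_time * 1000:.1f} ms")
print(f"over NVLink:  {remote_time * 1000:.1f} ms")
print(f"remote access is about {slowdown:.1f}x slower")
```

Under these idealized assumptions, another GPU's memory is reachable at around a fifth of local speed, which is why data placement still matters even when every card can see every other card's memory.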

This scalability is what brings in a new product range, which we covered briefly in a previous news post, and that’s the DGX-1 server. This is a 3U rack unit that packs up to 8x Tesla P100 compute cards and can serve up to 170 TFLOPS of FP16. Such systems are being rolled out for AI-assisted medical diagnosis, rapid AI training, and image recognition.
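The 170 TFLOPS figure lines up with the per-card number quoted earlier in this article, as this quick Python check shows. It assumes perfect scaling across all eight cards, which is a peak figure rather than something a real workload would sustain.

```python
CARDS_PER_DGX1 = 8
FP16_TFLOPS_PER_CARD = 21.0  # per-card figure quoted for the Tesla P100

# Peak aggregate throughput, assuming every card runs flat out.
aggregate_tflops = CARDS_PER_DGX1 * FP16_TFLOPS_PER_CARD

# Rounded to the nearest ten, as a marketing figure would be.
headline_tflops = round(aggregate_tflops, -1)

print(aggregate_tflops)  # 168.0
print(headline_tflops)   # 170.0
```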

While gaming was mentioned at GTC, it was more of a repetition on the roll-out of the GameWorks libraries for developers to implement advanced rendering techniques (such as VXAO, Hybrid Frustum Traced Shadows and additional AA methods).

Exploring Mars 2030

Exploring Mars in VR!

Posted by Techgage on Tuesday, 5 April 2016

Woz: “I feel dizzy, like I am going to fall out of my chair.” Jen-Hsun: “That was not a helpful comment.” I don’t think I’ve ever laughed so hard at a keynote before. -RobW


Of course, VR rendering can only realistically be performed on rasterized workloads, but we’re also at a stage where VR will soon start making its way into movies, and even live demonstrations of your next car purchase, thanks to high-tech car salesmen. The problem is that realtime ray-tracing is still a staggering workload, even today with some of the best hardware going, which is why new methods of rendering are required.

Iray VR – Realtime Photorealism

We’ve talked about Iray at Techgage a fair bit, as it’s NVIDIA’s multi-pass rendering system that builds up realism in an image, bit-by-bit (or pixel-by-pixel), complete with a live preview. Make a component change, see the effects of different materials thanks to the MDL libraries, and have it all appear and change in real time (see the video in the linked article).
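The "bit-by-bit" refinement described above can be illustrated with a generic progressive renderer: each pass produces a noisy estimate of every pixel, and a running average turns those passes into an ever cleaner preview. The Python sketch below is not Iray's algorithm; the scene values, noise model and function names are all hypothetical, and it only demonstrates the general idea of progressive accumulation.

```python
import random
from typing import List


def render_pass(true_image: List[float], noise: float, rng: random.Random) -> List[float]:
    """One noisy estimate of every pixel (a stand-in for a single sampling pass)."""
    return [value + rng.uniform(-noise, noise) for value in true_image]


def progressive_render(
    true_image: List[float], passes: int, noise: float = 0.5, seed: int = 0
) -> List[List[float]]:
    """Return the preview after each pass, built up as a running mean."""
    rng = random.Random(seed)
    accumulated = [0.0] * len(true_image)
    previews = []
    for n in range(1, passes + 1):
        sample = render_pass(true_image, noise, rng)
        # Running mean: each new pass nudges the preview toward the converged image.
        accumulated = [acc + (s - acc) / n for acc, s in zip(accumulated, sample)]
        previews.append(list(accumulated))
    return previews


def mean_abs_error(estimate: List[float], reference: List[float]) -> float:
    """Average per-pixel distance between a preview and the converged image."""
    return sum(abs(e - r) for e, r in zip(estimate, reference)) / len(reference)


scene = [0.1, 0.4, 0.8, 0.3]  # hypothetical converged pixel intensities
previews = progressive_render(scene, passes=256)

first_error = mean_abs_error(previews[0], scene)
final_error = mean_abs_error(previews[-1], scene)
print(f"error after 1 pass:     {first_error:.3f}")
print(f"error after 256 passes: {final_error:.3f}")
```

Because every intermediate preview is usable, the viewer gets an image immediately and watches the noise fade, which is what makes a live preview possible while the render is still converging.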

Iray VR is a natural extension to this rendering system that allows a user to not just see, but walk through an environment, or see their new car or home, before it’s even built. Throw enough rendering power at it (say with a few dozen DGX-1 systems), and we could very well see photorealism in realtime, in VR!

Autonomous Racing

To come full circle though, the last announcement will have a certain number of people excited, and that’s autonomous racing powered by NVIDIA’s DRIVE PX 2. As a sub-division of the Formula E races, Roborace is a 10-team challenge where identical cars are pitted against each other, for a battle of brains over brawn.

Each team will get two cars with a DRIVE PX 2 system installed, and it’s up to their software ingenuity to overcome their adversaries. Deep learning will be at the forefront of this adventure, making sense of all the sensor inputs, building a model of where the car is and its surroundings, as well as coming up with tactics to overtake the other teams. Research from this will eventually find its way into the inevitable self-driving cars of the future.

We still have the rest of the week to go, but NVIDIA sure dropped a few bombshells on us. For consumer products, there isn’t a whole lot going on, but what was announced will lead to new developments in the consumer space over the next couple of years. As the rest of the event unfolds, we’ll keep you apprised of anything spicy.
