NVIDIA’s Pascal Is Shipping: Company Announces Tesla P100 Compute GPU & DGX-1 Deep Learning Server

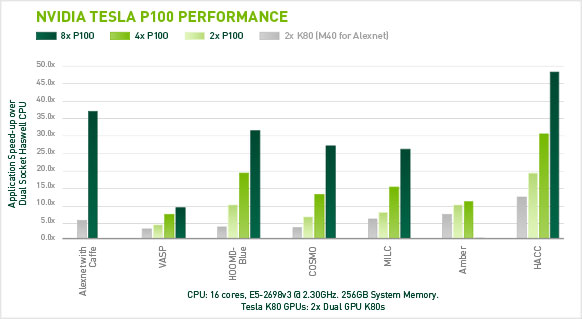

At GTC 2016, NVIDIA announced details of its first HPC-targeted compute GPU built on its upcoming Pascal architecture. The expected performance increases and power savings will allow companies to scale to petaflop levels of computation, using a fraction of the power and floor space that current systems need.

NVIDIA’s Tesla series GPUs are headless cards for server integration, and are used extensively in machine learning, complex math, and even self-driving cars. The Tesla P100 will be one of the first Pascal GPUs to hit the market, and it’ll bring with it some seriously impressive numbers.

Pascal, much like AMD’s Polaris, moves on from the long-serving 28nm manufacturing process to FinFET transistors, in Pascal’s case on TSMC’s 16nm node, offering a 60% drop in power and untold improvements in computation. It also marks NVIDIA’s first foray into HBM2 memory, a more advanced form of the on-package stacked memory used in AMD’s Fiji-based GPUs. Tesla P100s will come with 16GB of VRAM and a rather impressive 720GB/s of bandwidth, with ECC protection included for improved reliability.
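To put the memory figures in context, here is a minimal Python sketch that works out how long one full pass over the card's memory would take at the quoted peak bandwidth. It uses only the 16GB and 720GB/s figures above; the function name `full_sweep_ms` is ours, and real workloads will not sustain peak bandwidth, so treat the result as a best case.

```python
# Figures quoted in the article for the Tesla P100.
VRAM_GB = 16            # HBM2 capacity per card
BANDWIDTH_GB_S = 720    # peak memory bandwidth


def full_sweep_ms(capacity_gb: float, bandwidth_gb_s: float) -> float:
    """Best-case time to read every byte of memory once, in milliseconds."""
    return capacity_gb / bandwidth_gb_s * 1000.0


sweep = full_sweep_ms(VRAM_GB, BANDWIDTH_GB_S)
passes_per_second = 1000.0 / sweep

print(f"One full pass over {VRAM_GB}GB: {sweep:.1f} ms")
print(f"Full passes per second: {passes_per_second:.0f}")
```

In other words, at peak rate the card could in principle stream through its entire memory roughly 45 times a second.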

Computation is where things get interesting (well, more interesting); expect to see nearly double the performance of the previous-generation Tesla cards, the M40 and M4. NVIDIA currently lists three main floating-point figures for the Pascal-based Tesla P100:

- 5.3 TFLOPS double-precision (FP64)

- 10.6 TFLOPS single-precision (FP32)

- 21.2 TFLOPS half-precision (FP16)

So, some perspective. The current top-line consumer GPU from NVIDIA is the TITAN X, and while no slouch in and of itself, it can ‘only’ push 7 TFLOPS FP32. Each P100 packs a massive number of transistors, too: 15 billion on a 600mm² chip. Almost everything about this card is huge. With all of the new tech thrown in together, CEO Jen-Hsun Huang said we should expect to see a 12x increase in performance over Maxwell.
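For a rough sense of how these numbers relate, the sketch below runs the arithmetic in Python. It uses only figures quoted in this article (the three P100 throughput numbers, the TITAN X's 7 TFLOPS, and the approximate transistor count and die size); the variable names are ours, and all values are theoretical peaks rather than measured results.

```python
# Peak throughput figures quoted for the Tesla P100, in TFLOPS.
P100_TFLOPS = {"FP64": 5.3, "FP32": 10.6, "FP16": 21.2}
TITAN_X_FP32_TFLOPS = 7.0   # consumer TITAN X, as quoted above

# Approximate chip figures quoted above.
TRANSISTORS = 15e9
DIE_AREA_MM2 = 600

# Each halving of precision doubles the quoted peak rate.
fp16_over_fp32 = P100_TFLOPS["FP16"] / P100_TFLOPS["FP32"]
fp32_over_fp64 = P100_TFLOPS["FP32"] / P100_TFLOPS["FP64"]

# Like-for-like FP32 comparison with the TITAN X.
speedup_vs_titan_x = P100_TFLOPS["FP32"] / TITAN_X_FP32_TFLOPS

# Transistor density in millions per square millimetre.
density_m_per_mm2 = TRANSISTORS / DIE_AREA_MM2 / 1e6

print(f"FP16 vs FP32: {fp16_over_fp32:.1f}x")
print(f"FP32 vs FP64: {fp32_over_fp64:.1f}x")
print(f"P100 vs TITAN X (FP32): {speedup_vs_titan_x:.2f}x")
print(f"Density: {density_m_per_mm2:.0f} million transistors per mm²")
```

On paper, then, the P100 is about 1.5x a TITAN X at single precision; the bigger gains come from the half-precision and double-precision rates.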

Single-card performance isn’t the only thing that needs to be tackled; scalability matters most when it comes to datacenters and supercomputers, which is where NVIDIA’s next announcements come in: NVLink and the DGX-1 deep learning supercomputer. The Tesla P100s will feature NVLink, the long-awaited bidirectional interconnect capable of 160GB/s, allowing for high-speed communication between cards. Put eight Tesla P100s in a system, and you have a DGX-1 supercomputer:

- Up to 170 teraflops of half-precision (FP16) peak performance

- Eight Tesla P100 GPU accelerators, 16GB memory per GPU

- NVLink Hybrid Cube Mesh

- 7TB SSD DL Cache

- Dual 10GbE, Quad InfiniBand 100Gb networking

- 3U – 3200W
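The headline figures in that list follow directly from the per-card numbers. As a quick check, this Python sketch multiplies them out; the constants come from this article, the names are ours, and the per-watt figure divides peak FP16 throughput by the whole system's 3200W rating, so it is only a rough, best-case indicator.

```python
GPUS_PER_SYSTEM = 8
P100_FP16_TFLOPS = 21.2   # per-card peak, half precision
P100_VRAM_GB = 16         # per-card memory
SYSTEM_POWER_W = 3200     # whole-system power rating

# Aggregate peak throughput and memory across all eight cards.
total_fp16_tflops = GPUS_PER_SYSTEM * P100_FP16_TFLOPS
total_vram_gb = GPUS_PER_SYSTEM * P100_VRAM_GB

# Peak GFLOPS per watt at half precision (1 TFLOPS = 1000 GFLOPS).
gflops_per_watt = total_fp16_tflops * 1000 / SYSTEM_POWER_W

print(f"Aggregate FP16: {total_fp16_tflops:.1f} TFLOPS")
print(f"Aggregate GPU memory: {total_vram_gb}GB")
print(f"Peak FP16 efficiency: {gflops_per_watt:.0f} GFLOPS/W")
```

The 169.6 TFLOPS result is where the "up to 170 teraflops" figure comes from.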

By our calculations, just over 300 DGX-1 systems would be able to out-process the current number-one supercomputer in the world, while using only a fraction of the power (not to mention real estate and ancillary cooling). These mini supercomputers will be at the forefront of NVIDIA’s deep learning efforts.
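The article doesn't show its working, so here is one way the count could be reproduced in Python. The target of 54,900 TFLOPS (about 54.9 petaflops) is an assumption on our part, picked because it yields a count in the stated range; `systems_needed` is a hypothetical helper, not anything from NVIDIA. Note that the DGX-1's 170 TFLOPS is a half-precision figure, while supercomputer rankings are normally quoted at double precision, so the sketch also shows the count using the FP64 rate of eight P100s.

```python
import math


def systems_needed(target_tflops: float, per_system_tflops: float) -> int:
    """Smallest whole number of systems whose combined peak meets the target."""
    return math.ceil(target_tflops / per_system_tflops)


# Assumption: the target is not stated in the article; ~54.9 petaflops is our guess.
ASSUMED_TARGET_TFLOPS = 54_900

DGX1_FP16_TFLOPS = 170        # quoted peak, half precision
DGX1_FP64_TFLOPS = 8 * 5.3    # eight P100s at the quoted FP64 rate
DGX1_POWER_W = 3200

count_fp16 = systems_needed(ASSUMED_TARGET_TFLOPS, DGX1_FP16_TFLOPS)
count_fp64 = systems_needed(ASSUMED_TARGET_TFLOPS, DGX1_FP64_TFLOPS)
power_fp16_mw = count_fp16 * DGX1_POWER_W / 1e6

print(f"Systems needed at FP16 peak: {count_fp16}")
print(f"Systems needed at FP64 peak: {count_fp64}")
print(f"Power for the FP16 case: {power_fp16_mw:.2f} MW")
```

Under that assumed target, the FP16 figure gives 323 systems drawing about a megawatt, while a like-for-like double-precision comparison would need roughly four times as many.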

Deep learning is a branch of artificial intelligence built on neural networks. The DGX-1’s main purpose is to both simplify and standardize how scientists build and train those networks, without having to waste time on large and complex custom-built systems. According to NVIDIA, a single DGX-1 box has the equivalent throughput of 250 x86 servers.

The Tesla P100 is already in production, but is limited by current HBM2 supply. Systems are already being built around the new Pascal GPU, though we don’t expect to see full server solutions rolled out until early 2017. While this wasn’t the consumer release people wanted, it is a good indication of what to expect from more mainstream products. Keep your eyes peeled for more info.