NVIDIA Accelerates Hyperscale Machine Learning With New Tesla M4 & M40 GPUs

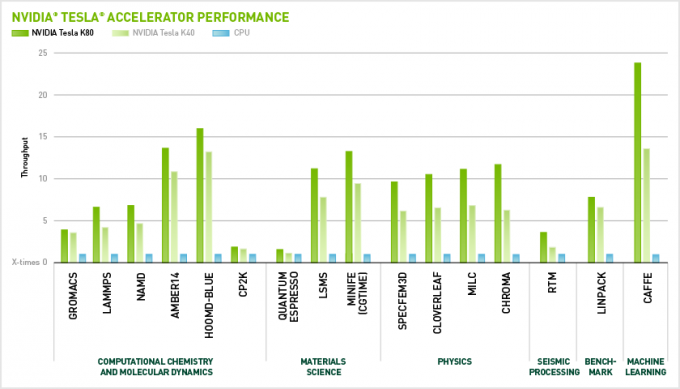

Over the span of just a few years, machine- and deep-learning have gone from mere murmurs to major focal points at some of the world’s biggest companies. Among those focusing hard on the software side are Amazon, Google, and Microsoft, while on the hardware side, NVIDIA has been instrumental in designing hardware that can dramatically accelerate the processing of important data.

At the past couple of GPU Technology Conferences, NVIDIA CEO Jen-Hsun Huang’s opening keynotes have included revelations about machine-learning. At last spring’s event, he invited Google’s Jeff Dean and Baidu’s Andrew Ng onto the stage to explain how they use GPUs to speed up their work. During the keynote, we didn’t just see something expected, like a search engine that gets smarter; we also saw examples of computers teaching themselves to play (and get better at) games, one being Breakout. There should be no doubt at this point that machine-learning is going to be a major part of our future, even if that’s not obvious to the casual observer.

Machine-learning workloads aren’t restricted to particular hardware, but some hardware is far better suited to them than the rest. At that aforementioned GTC, Jen-Hsun explained just how capable the desktop-targeted GeForce GTX TITAN X is at deep-learning, churning through an AlexNet image-recognition project much faster than a CPU alone. Alternatively, researchers could have used Quadro workstation or Tesla compute cards to get the job done.

Well, now there’s a new option. Or two, actually: Tesla M40, and Tesla M4.

The big gun, Tesla M40, shares its core specs with the GeForce GTX TITAN X and Quadro M6000: 12GB of GDDR5 memory, 3,072 CUDA cores, and a 250W TDP. With GPU Boost, NVIDIA says the M40 reaches a peak single-precision performance of 7 TFLOPS.
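
Peak single-precision figures like this are conventionally computed as CUDA cores × 2 FLOPs per clock (one fused multiply-add) × clock speed. NVIDIA doesn’t quote the M40’s boost clock here, so the minimal Python sketch below works backward from the stated 7 TFLOPS to the clock it implies. The two-FLOPs-per-core-per-cycle factor and the helper name `implied_clock_mhz` are our own assumptions for illustration, not figures or tools from NVIDIA.

```python
# Peak FP32 throughput is conventionally cores * 2 FLOPs/cycle (one FMA) * clock.
# Assumption: FLOPS_PER_CORE_PER_CYCLE = 2; NVIDIA does not state it in the announcement.
FLOPS_PER_CORE_PER_CYCLE = 2


def implied_clock_mhz(peak_tflops: float, cuda_cores: int) -> float:
    """Hypothetical helper: clock (MHz) needed to hit peak_tflops with cuda_cores."""
    flops = peak_tflops * 1e12
    hz = flops / (cuda_cores * FLOPS_PER_CORE_PER_CYCLE)
    return hz / 1e6


if __name__ == "__main__":
    # Tesla M40: 3,072 CUDA cores, 7 TFLOPS peak with GPU Boost (per NVIDIA).
    print(f"Tesla M40 implied boost clock: {implied_clock_mhz(7.0, 3072):.0f} MHz")
```

The result, roughly 1.14GHz, is consistent with 7 TFLOPS being a boost-clock peak, as NVIDIA’s mention of GPU Boost implies, rather than a sustained rate.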

While both new Tesla accelerators suit similar purposes, NVIDIA targets the M4 at a handful of specific workloads: video transcoding, video processing, image processing, and machine-learning inference. The M4 uses a low-profile form factor, so it can fit into tight enclosures. It includes 1,024 CUDA cores and 4GB of GDDR5, and peaks at 2.2 TFLOPS. Interestingly, its TDP is variable depending on the chosen profile, ranging from 50W to 75W.
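
Because the M4’s TDP depends on the chosen profile, one rough way to compare the two cards is peak single-precision throughput per watt. The Python sketch below simply divides NVIDIA’s quoted peaks by the quoted TDPs. It assumes the M4 can reach its 2.2 TFLOPS peak at either TDP profile, which is optimistic (a lower power profile would likely limit clocks), so the 50W row should be read as an upper bound. The `Card` type and `gflops_per_watt` helper are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    peak_tflops: float  # NVIDIA's quoted peak FP32 throughput
    tdp_watts: float    # board power for the chosen profile


def gflops_per_watt(card: Card) -> float:
    """Peak FP32 GFLOPS divided by TDP; ignores sustained real-world clocks."""
    return card.peak_tflops * 1000 / card.tdp_watts


cards = [
    Card("Tesla M40", 7.0, 250),
    # Assumption: the 2.2 TFLOPS peak is treated as reachable at both TDP
    # profiles, so the 50W row is an optimistic upper bound.
    Card("Tesla M4 (75W profile)", 2.2, 75),
    Card("Tesla M4 (50W profile)", 2.2, 50),
]

for card in cards:
    print(f"{card.name}: {gflops_per_watt(card):.1f} GFLOPS/W")
```

On paper, under that assumption, the smaller card lands roughly level with the M40 at 75W (about 29 vs. 28 GFLOPS/W) and ahead of it at 50W.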

Along with these cards, NVIDIA is also shipping what it calls the NVIDIA Hyperscale Suite. The suite includes cuDNN, for developing deep-learning algorithms; GPU-accelerated FFmpeg, for faster video processing; GPU REST Engine, for deploying high-throughput, low-latency web services; and Image Compute Engine, which works with the REST Engine to resize images up to 5x faster than a traditional CPU.

Pricing for the two new Tesla accelerators has not been announced, but the M40 and the Hyperscale Suite will become available “later this year”, while the M4 will follow in Q1 2016.