NVIDIA GTC 2019: RTX Servers, Omniverse Collaboration, CUDA-X AI, And More

We’re here at NVIDIA’s GPU Technology Conference 2019, and there is a tonne to talk about: RTX Servers and Workstations, Omniverse collaboration software to unite 3D film studios, CUDA-X AI libraries, a huge focus on data science, a $99 Jetson Nano dev kit, and more.

NVIDIA likes to do long keynote announcements, and this year was no different. In a three-hour presentation, we were serenaded with technology after technology, on just about everything that NVIDIA has worked on over the last year, and there’s a lot to cover.

Not everything you see and hear at GTC will have a direct impact on upcoming products, but it gives an overall strategy as to where NVIDIA is heading, how it plans to get there, as well as who it’s partnering with.

Graphics is a big part of NVIDIA, but not the only part, something that has become more apparent as the years have gone by.

NVIDIA is more a parallel processing company than a GPU vendor. It builds increasingly powerful server farms and workstations, and it works on new processing technologies, artificial intelligence driven by deep learning, autonomous vehicles, robotics, and datacenter analysis. The latest buzzword this year is Data Science, an extension of Computer Science.

Turing and RTX

RTX, unsurprisingly, took a fair chunk of the time at this year’s GTC. It’s part of NVIDIA’s latest architecture and there’s a lot of development going into it behind the scenes. It’s not just about games, but also about content creators: the 3D designers, animators, film studios, and the like. RTX is as much an ecosystem as a product, as there are many elements involved, not just some extra silicon taking up real estate on the chips.

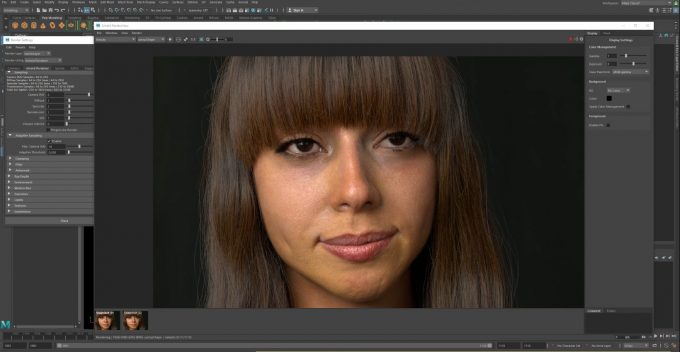

Earlier today we saw the introduction of GPU rendering with Arnold, one of the premier rendering engines used by film studios, and it’s one of many suites to not just enable GPU rendering, but RTX capabilities as well.

The list of supported software is growing very rapidly, and if you keep up with our workstation content, you’ll see quite a few familiar names, with the likes of Adobe Dimension and Substance, Chaos Group’s V-Ray, Dassault’s CATIA and SolidWorks, Daz 3D, KeyShot, OTOY Octane, Pixar Renderman, Redshift, Siemens NX, and Unity.

GPU ray traced rendering has been a pipe dream for a very long time, and even though it’s still not quite there for extremely complex scenes, it offers a huge performance advantage on the more common workloads. While there were fears of poor quality in the early days, the software has now reached the same quality as CPU rendering, as Arnold’s involvement attests.

The final render is only a part of the process; the design and scene construction still take up a large amount of time, which is where the RTX option comes in. While you won’t see RTX being used in a final render, it will be used extensively to let artists see a high quality approximation of the final render in a fraction of the time. Materials and lighting can be set and tested with many different effects in only a couple of minutes, instead of over an hour for a single test frame.

Omniverse

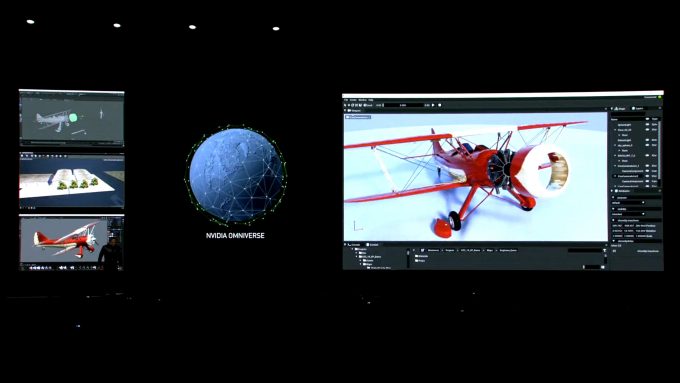

One of the tricky aspects of 3D design is collaboration, something that various film studios will be all too familiar with. 3D is not just a single element; it’s the combination of dozens of different pieces of software used across different teams to build a project. Quite often, those different software suites are not directly compatible, nor can you easily pull everything together to get the right look and feel of an object in a scene.

We’ve seen some form of collaboration between different software suites over the years, such as the now-common live editing of textures in Photoshop, which updates the materials used on a model in Maya or Dimension.

NVIDIA is introducing a new collaboration suite called Omniverse, which pulls together all the different aspects of a project for live editing and previewing. The demonstration on stage showed a scene being built, a 3D model being manipulated and edited, and the model being painted and textured, all independently of one another, and then combined into a single live preview in Omniverse Viewer.

Omniverse supports Pixar’s Universal Scene Description tech for metadata, and includes NVIDIA’s own Material Definition Language for surface details. The scene can be rendered in real time using NVIDIA’s RTX features, including the use of both CUDA and Tensor Cores.

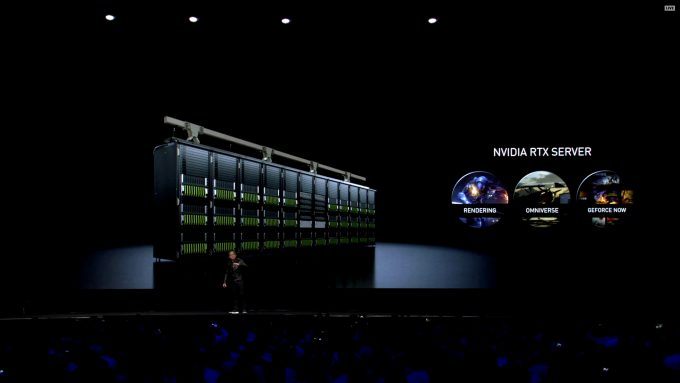

RTX Servers and Quadro vDWS

In classic NVIDIA style of ‘the more you buy, the more you save’, a new server configuration will be made available that builds on the capabilities of the DGX 1 and 2 4U rack units, and the DGX Station. The RTX Server is a beast of a machine that comprises 1280 GPUs on 32 8U blade servers. Each blade holds 40 GPUs, which can be configured for a multi-user environment using GRID, and these blades are linked together with technology from Mellanox, NVIDIA’s latest acquisition and the creator of InfiniBand networking.
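The headline GPU count follows directly from the configuration quoted above (32 blades of 40 GPUs each). As a quick sanity check, here is a minimal Python sketch of that arithmetic. The function name and the `users_per_gpu` parameter are illustrative assumptions; no sharing ratio was given in the keynote coverage.

```python
# Pod configuration as quoted in the paragraph above.
BLADES_PER_POD = 32
GPUS_PER_BLADE = 40


def pod_capacity(blades: int, gpus_per_blade: int, users_per_gpu: int = 1) -> dict:
    """Return total GPUs and concurrent users for a pod of identical blades.

    users_per_gpu is a hypothetical sharing ratio for a GRID-style
    multi-user setup; the article does not state an actual figure.
    """
    total_gpus = blades * gpus_per_blade
    return {"gpus": total_gpus, "users": total_gpus * users_per_gpu}


if __name__ == "__main__":
    print(pod_capacity(BLADES_PER_POD, GPUS_PER_BLADE))
```

With the quoted figures, the `gpus` entry comes out at 1280, matching the total given for the full RTX Server configuration.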

What are such machines even used for, you may ask? Well, for one thing, GeForce NOW, NVIDIA’s game streaming platform that works as the backbone for many game streaming services. These are rent-a-PC gaming systems fitted with GPUs capable of playing the latest games from pretty much any vendor, with the session streamed to any device with an internet connection, be it a phone, Mac, or low-end PC.

These large networked gaming servers have had backing from SoftBank and LG Uplus, which are deploying such gaming experiences over 5G networks. 5G gets special mention here because of its focus not just on pure bandwidth, but on latency too. Latency was a big part of the overall theme with these RTX servers, as data scaling is becoming less bound by bandwidth bottlenecks.

The other use for these huge servers is as render farms, or for more general purpose compute, something that Amazon Web Services will be putting to work in its own datacenters. For video production, these servers support Quadro Virtual Data Center Workstation Software (vDWS), which can scale processing power based on each worker’s use, allocating more GPUs for more demanding applications, or pooling them together for Omniverse collaborations.

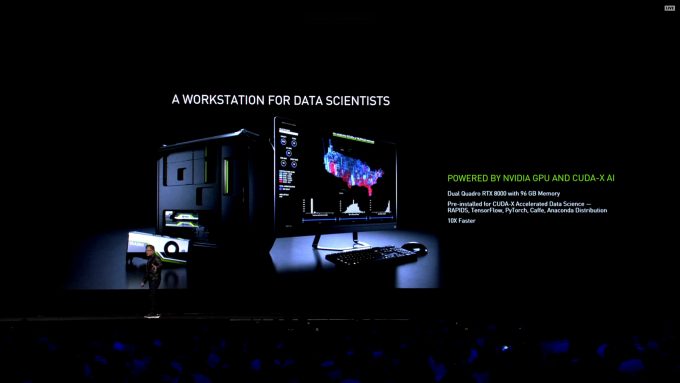

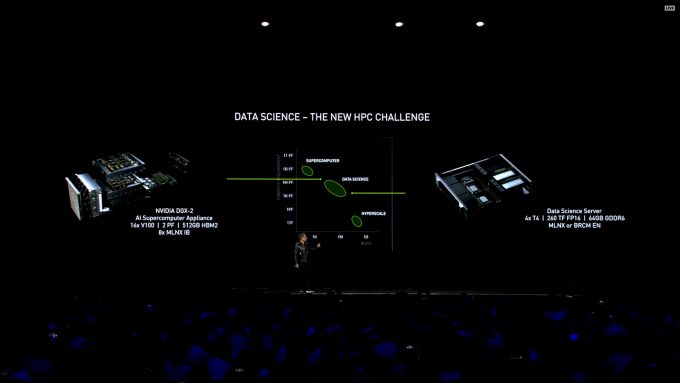

Data Science Workstations and CUDA-X AI

At this year’s GTC, the one section that took up the majority of the time would have to be NVIDIA’s focus on enterprise level processing and data science. With deep learning, neural networks, machine learning, and artificial intelligence all being buzzwords over the last few years, it’s no surprise there is a greater focus on them from companies like NVIDIA, which create the hardware used by developers to build these AI-powered services.

CUDA-X AI is not, in and of itself, a new API or SDK, but a collection of libraries that allow for acceleration of compute workloads on NVIDIA hardware. These libraries include cuDNN, cuML, and TensorRT, all built around accelerating deep learning algorithms, and they can be integrated into any of the common frameworks, such as TensorFlow, PyTorch, and MXNet.

The more heavy-duty, enterprise-grade data analytics is where a lot of attention was placed, something that NVIDIA has worked on for several years now. The focus at this point has been on data science, an offshoot of computer science, which focuses on extremely large databases, in excess of 1TB in size.

During the presentation, NVIDIA showed an example with Charter, and the huge datasets it has to deal with. Using RAPIDS, an RTX Server, and some clever analytics software, Charter could integrate hundreds of datasets from multiple sources, in different database formats, process them in real time, and generate usage predictions for internet services across the country. This can be fed into management software that can allocate resources as and when they’re needed, as well as help with the placement of new internet access points.

Jetson Nano

The last part of the GTC 2019 keynote was the robotics and automotive section. Things are still pretty much the same on the self-driving vehicle front, with the recent addition of Toyota to the rather large crowd of companies that make use of NVIDIA’s DRIVE Constellation and Xavier packages of hardware and software.

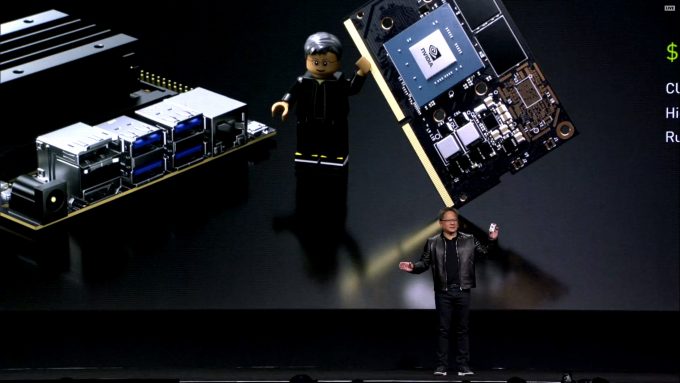

Also on show was a rather delightful miniature computer, the $99 devkit called the Jetson Nano. It’s a tiny but very powerful kit that delivers 472 GFLOPS of compute on a near credit-card sized frame, and sips just 5 watts of power.
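To put those two numbers in perspective, the quoted compute and power figures can be turned into a rough efficiency figure. The sketch below is only that: it assumes the 472 GFLOPS and 5 watt values are peak figures taken at face value, and the function name is purely illustrative.

```python
# Figures quoted above for the Jetson Nano dev kit.
NANO_GFLOPS = 472.0
NANO_WATTS = 5.0


def gflops_per_watt(gflops: float, watts: float) -> float:
    """Compute throughput per watt; rejects a non-positive power draw."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return gflops / watts


if __name__ == "__main__":
    # 472 / 5 works out to roughly 94.4 GFLOPS per watt.
    print(f"{gflops_per_watt(NANO_GFLOPS, NANO_WATTS):.1f} GFLOPS per watt")
```

Real workloads rarely hit peak throughput, so treat the result as an upper bound rather than a benchmark.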

These small devkits provide students and developers quick and easy access to some of the AI technologies that NVIDIA has been working on, from CUDA-X AI and ISAAC robotics simulation to full-blown autonomous vehicles. The small and lightweight PCB makes it perfect for drones too, as multiple sensors can be integrated into it, such as cameras and Lidar.

The small cost of entry is also a gateway to the much larger and more capable Jetson modules, like the AGX Xavier and TX2, as they’re all built around the same framework and are interoperable. JetPack SDK is built on CUDA-X AI and the accompanying software stacks that come with it.

RTX On

There’s a lot more covered during the livestream, which you can check out yourself if you wish, including a demonstration of Quake II with RTX enhancements enabled, such as global illumination and reflected surfaces. While there were no major announcements about an upcoming GPU or new info on the next-gen architecture, this year’s GTC did cover a lot of different industry sectors and technologies.

AI will remain a huge growth industry for NVIDIA for a long time to come, something that’s a little more permanent than the Blockchain bubble it gambled on last year. The autonomous vehicle research it’s doing is still slow going, but many more industry partners are getting involved, and the availability of a cheap devkit will help students get to grips with one of the building blocks much more easily.

For gamers, we’re still in a very early and experimental phase of RTX, and that’s not something that will change for at least the next year. On the professional creators’ side, there is huge adoption of RTX in rendering and 3D design, as seen in the large list of software partners earlier in this article.
